[bug] "no pod found with selector" error when building a Docker image in a workflow

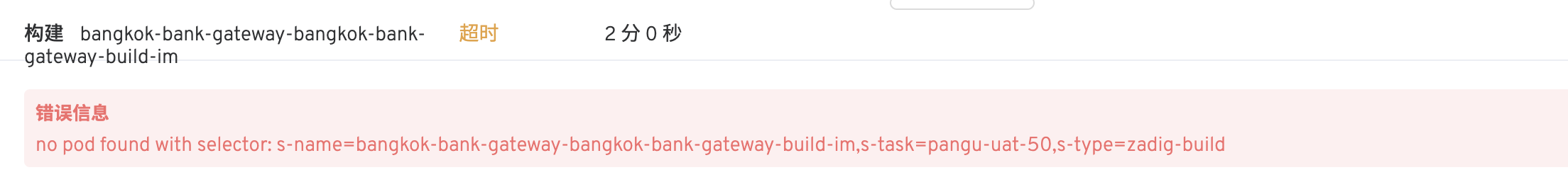

What happened? I hit an error while building a Docker image in a workflow, and it caused the job to time out. The error has not occurred in any other build job, only in this one.

The error message is as follows:

I then noticed that the `s-name` value appeared to be truncated, so I counted the characters in "bangkok-bank-gateway-bangkok-bank-gateway-build-im" with `echo -n bangkok-bank-gateway-bangkok-bank-gateway-build-im | wc -c`, which reported 50 characters.
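A minimal shell sketch of the suspected mechanism, assuming the `s-name` value is simply cut to its first 50 characters (the limit is inferred from the count above, not confirmed in Zadig's code). One plausible reading of the error is that a selector built from the shortened value no longer equals the full name, so a lookup comparing the two finds nothing. The helper `truncate_name` and the example name are hypothetical.

```shell
#!/bin/sh
# Assumed limit, inferred from the 50-character truncated s-name in this report.
MAX_LEN=50

# truncate_name NAME: print at most the first MAX_LEN characters of NAME.
# For plain ASCII names like these, the printf precision is a character count.
truncate_name() {
  printf "%.${MAX_LEN}s" "$1"
}

# Hypothetical 61-character name, longer than the assumed limit.
full="my-project-with-a-long-name-my-service-with-a-long-name-build"
short=$(truncate_name "$full")

echo "full:  ${#full} characters"
echo "short: ${#short} characters ($short)"

# A value built from the short form no longer equals the full name,
# so an exact-match comparison between the two fails.
if [ "$short" != "$full" ]; then
  echo "truncated value differs from the full name"
fi
```

A name of 50 characters or fewer passes through unchanged, which is consistent with the other build jobs not being affected.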

What did you expect to happen? I suspect the service name is limited to 50 characters. It would be better to enforce this length limit on service names in the frontend UI.
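The requested frontend restriction could be as simple as a length check before a service is saved. A minimal sketch, assuming names of up to 50 characters are safe (matching the observed truncation length); `check_service_name` is a hypothetical helper, not part of Zadig. Note that the truncated value above contains `bangkok-bank-gateway` twice followed by what looks like a job suffix, so if the limit actually applies to a combined name rather than to the service name alone, the same check would have to run on that combined string instead.

```shell
#!/bin/sh
# Assumed limit, inferred from the 50-character truncated s-name in this report.
MAX_LEN=50

# check_service_name NAME: hypothetical pre-save validation.
# Returns 0 if NAME is non-empty and at most MAX_LEN characters, 1 otherwise.
check_service_name() {
  name=$1
  if [ -z "$name" ]; then
    echo "service name must not be empty" >&2
    return 1
  fi
  if [ "${#name}" -gt "$MAX_LEN" ]; then
    echo "service name is ${#name} characters; the limit is $MAX_LEN" >&2
    return 1
  fi
  return 0
}

# Example: the 20-character name prefix seen in this report passes the check.
if check_service_name "bangkok-bank-gateway"; then
  echo "bangkok-bank-gateway: ok"
fi
```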

How To Reproduce It (as minimally and precisely as possible)

Steps to reproduce the behavior:

- Create a service whose name is longer than 50 characters

- Build this service at workflow

- See errors

Install Methods

- [ ] Helm

- [x] Script based on K8s

- [ ] All in One

- [ ] Offline

Versions Used

zadig: 1.13.0

kubernetes: v1.21.14

Environment

Cloud Provider: Tencent Cloud

Resources: 8 CPU cores, 16 GB memory

OS: CentOS Linux release 7.6.1810 (Core)

Services Status

`kubectl version`

`kubectl get po -n <zadig-installed-namespace>`


`[root@k8smaster ~]# kubectl get po -n zadig`

| NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|
| aslan-64c4fcd54-s7dt5 | 2/2 | Running | 0 | 2d2h |
| config-6b4dbf487c-qt6sl | 1/1 | Running | 0 | 2d2h |
| cron-5c97d77868-6xn9l | 2/2 | Running | 0 | 4d19h |
| dind-0 | 1/1 | Running | 0 | 4d18h |
| discovery-b95879566-c59w7 | 1/1 | Running | 0 | 4d19h |
| gateway-54f8d8778-gsx4k | 1/1 | Running | 0 | 4d19h |
| gateway-proxy-55d95f655d-dm7g5 | 1/1 | Running | 0 | 4d19h |
| gloo-76f75f9778-n9qgl | 1/1 | Running | 0 | 4d19h |
| hub-server-78cbdcb844-8v95h | 1/1 | Running | 0 | 4d19h |
| nsqlookup-0 | 1/1 | Running | 0 | 4d18h |
| opa-67897f7df8-vvdgq | 1/1 | Running | 0 | 4d19h |
| picket-557474d684-2mrkw | 1/1 | Running | 0 | 4d19h |
| podexec-694bbd94f7-sswjn | 1/1 | Running | 0 | 4d19h |
| policy-7c5bfdd8bc-k8wzf | 1/1 | Running | 0 | 4d19h |
| report-report-build-zadig-build-5-z6ttf | 0/1 | ImagePullBackOff | 0 | 5d23h |
| resource-server-76844579d8-mhcpw | 1/1 | Running | 0 | 4d19h |
| unionpay-workflow-dev-119-buildv2-b9vgt-gq8m4 | 0/1 | Completed | 0 | 45d |
| unionpay-workflow-dev-84-buildv2-9r59c-77827 | 0/1 | Error | 0 | 46d |
| unionpay-workflow-dev-84-buildv2-bjl69-6mtbh | 0/1 | Completed | 0 | 46d |
| unionpay-workflow-dev-84-buildv2-css4q-5t9v7 | 0/1 | Error | 0 | 46d |
| unionpay-workflow-dev-84-buildv2-dq7jj-hmcz2 | 0/1 | Error | 0 | 46d |
| unionpay-workflow-dev-84-buildv2-fm85j-nkjc2 | 0/1 | ContainerCreating | 0 | 46d |
| unionpay-workflow-dev-84-buildv2-jf2lh-gw7j6 | 0/1 | Completed | 0 | 46d |
| unionpay-workflow-dev-84-buildv2-rggbv-ldcvz | 0/1 | Completed | 0 | 46d |
| unionpay-workflow-dev-84-buildv2-th5xn-jnr2s | 0/1 | Completed | 0 | 46d |
| user-77b59fc7c6-ddwg2 | 1/1 | Running | 0 | 4d19h |
| warpdrive-665fc68cf-hnr8x | 2/2 | Running | 0 | 4d19h |
| warpdrive-665fc68cf-jvtlk | 2/2 | Running | 0 | 4d19h |
| zadig-ingress-nginx-controller-87cdd67d6-87zqd | 1/1 | Running | 0 | 4d18h |
| zadig-minio-567dd7c6d-rdh8m | 1/1 | Running | 0 | 4d19h |
| zadig-mongodb-7bb95f69c9-k9fn9 | 1/1 | Running | 6 | 30d |
| zadig-mysql-0 | 1/1 | Running | 0 | 7d16h |
| zadig-portal-659bc8d567-xxwxg | 1/1 | Running | 0 | 4d19h |
| zadig-zadig-dex-58dc4c8b58-cgg65 | 1/1 | Running | 0 | 4d19h |

`[root@k8smaster ~]# kubectl version`

`Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.14", GitCommit:"0f77da5bd4809927e15d1658fb4aa8f13ad890a5", GitTreeState:"clean", BuildDate:"2022-06-15T14:17:29Z", GoVersion:"go1.16.15", Compiler:"gc", Platform:"linux/amd64"}`

`Server Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.14", GitCommit:"0f77da5bd4809927e15d1658fb4aa8f13ad890a5", GitTreeState:"clean", BuildDate:"2022-06-15T14:11:36Z", GoVersion:"go1.16.15", Compiler:"gc", Platform:"linux/amd64"}`
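To decide which pods the `kubectl describe pods` and `kubectl logs` commands below should target, the pod listing can be filtered on its STATUS column. A minimal sketch, assuming the default `kubectl get po` column layout (NAME READY STATUS RESTARTS AGE) arrives on stdin; treating only `Running` and `Completed` as healthy is a simplification for this report, and `abnormal_pods` is a hypothetical helper.

```shell
#!/bin/sh
# abnormal_pods: read `kubectl get po` output on stdin and print the NAME of
# every pod whose STATUS (3rd column) is neither Running nor Completed.
# The header row and blank lines are skipped.
abnormal_pods() {
  awk '$1 != "NAME" && NF >= 5 && $3 != "Running" && $3 != "Completed" { print $1 }'
}

# Usage (requires access to the cluster):
#   kubectl get po -n zadig | abnormal_pods
```

Applied to the listing above, this selects five pods: one in `ImagePullBackOff`, three in `Error`, and one stuck in `ContainerCreating`.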

`kubectl describe pods <abnormal-pod>`

`kubectl logs --tail=500 <abnormal-pod>`


It seems that way. I can confirm this is a bug and it will be fixed in the next version.