Configuration and model installer for new model layout

Restore invokeai-configure and invokeai-model-install

This PR updates invokeai-configure and invokeai-model-install to work with the new model manager file layout.

It is marked as "draft" at the moment because it hasn't undergone testing of all the edge cases, but it seems functional. I'll take it out of draft as soon as I've tortured it a bit.

@ebr I have addressed the following issues:

- "tile" controlnet not loading (fixed load)

- "sd-model-finetuned-lora-t4" not loading (removed from list of starter LoRAs; torch.load() crashing on this file for some reason)

- ".safetensors" files appearing in model listing rather than just the base name. This also fixes duplicated entries appearing.

- Yes, HuggingFace pipeline models now appear in both the models directory and the main HuggingFace cache, consuming double the disk space. I'm simply downloading the models to the local directory, and HuggingFace is doing its caching thing in the background. I could remove the cached copy, but I don't know what other programs on the user's computer might be using it.

- I'm also seeing the warning about implicitly cleaning up the temporary directory, but I'm not sure where it's coming from. Will investigate.

- I have adjusted the TUI size requirements. Could you try again on your system?
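For the `.safetensors` listing fix, here is a minimal sketch of one way to derive listing names. It assumes the names come from on-disk paths. `MODEL_SUFFIXES`, `display_name` and `model_display_names` are hypothetical names, not the actual model manager code. The sketch strips only known weight-file extensions, because `Path.stem` on its own would truncate diffusers folder names that contain dots, such as `Counterfeit-V2.5_fp16_diffusers`.

```python
from pathlib import Path
from typing import Iterable, List

# Hypothetical set of single-file weight extensions to hide in listings.
MODEL_SUFFIXES = {".safetensors", ".ckpt", ".pt", ".bin"}


def display_name(path: Path) -> str:
    """Return the listing name for a model file or diffusers folder."""
    # Only strip recognised weight extensions; a folder such as
    # "Counterfeit-V2.5_fp16_diffusers" keeps its full name.
    return path.stem if path.suffix in MODEL_SUFFIXES else path.name


def model_display_names(paths: Iterable[Path]) -> List[str]:
    """Return unique, sorted names so 'foo.safetensors' and 'foo' appear once."""
    return sorted({display_name(Path(p)) for p in paths})
```

Collecting names into a set is what collapses a checkpoint and a same-named folder into one entry.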

Thank you!!

- Confirmed fixed :heavy_check_mark:

- Confirmed workaround :heavy_check_mark:

- Confirmed fixed :heavy_check_mark:

- Sounds good now that I know to expect it, and actually this may be a very good thing to avoid re-downloads when users reinstall or install in another location - HF will just use its cache. The downside is that users who install on a non-system drive may unexpectedly run out of disk space. But I agree that deleting anything in the users' cache directory would be arguably even worse. Not sure how to solve this right now aside from documenting it, but also maybe this isn't worth holding up 3.0. :white_check_mark:

- I think it may be just telling us to use a context manager, but I'm not sure if these warnings are coming from our code or HuggingFace. :grey_question:

- TUI was still line-wrapping for me, but then I tested in several emulators and realized it's not universally happening. It's fine in `gnome-terminal` (no line wrapping; line overflow is hidden until resize), but both `terminator` and `alacritty` are having the issue. Considering the multitude of system configurations and terminal emulators out there, this is probably an area we will never be able to get perfectly right, and we can say this is "good enough". :heavy_check_mark:
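On the implicit-cleanup warning: Python's `tempfile.TemporaryDirectory` emits a `ResourceWarning` that reads "Implicitly cleaning up ..." when the object is garbage-collected without an explicit `cleanup()`. That fits the context-manager guess above. This sketch assumes the warning comes from such an object, although it could just as well originate inside HuggingFace code. `write_scratch_file` is a hypothetical stand-in.

```python
import tempfile
from pathlib import Path


def write_scratch_file(contents: str) -> str:
    """Hypothetical helper that needs a scratch directory briefly."""
    # The context manager deletes the directory deterministically on exit,
    # so the finalizer never has to warn about implicit cleanup.
    with tempfile.TemporaryDirectory() as tmpdir:
        scratch = Path(tmpdir) / "scratch.txt"
        scratch.write_text(contents)
        return scratch.read_text()
```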

One new issue that just came up:

- `FileNotFoundError: [Errno 2] No such file or directory: '/home/clipclop/invokeai/configs/models.yaml'` unless `INVOKEAI_ROOT` is set to the location of an existing runtime directory. To be clear, my `.venv` is in the repo root, and my runtime dir is on another drive. So this is certainly an edge case, but it will present itself for developers with a similar setup.


I'm gnashing my teeth! There's code in there that is supposed to detect the rows and columns of the current terminal emulator and either (1) resize the window to the proper dimensions if it is too small and the emulator supports it, or (2) tell the user to maximize the window if resizing fails. Are you seeing neither of these behaviors with terminator and alacritty? I will try installing these on my Linux system and see what can be done.
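For reference, here is a minimal sketch of the detect-then-resize flow described above. It assumes the request sent is the xterm window-manipulation sequence `CSI 8 ; rows ; cols t`. The function names and the short settle delay are hypothetical and do not come from the actual InvokeAI TUI code.

```python
import os
import shutil
import sys
import time


def is_large_enough(size: os.terminal_size, min_rows: int, min_cols: int) -> bool:
    return size.lines >= min_rows and size.columns >= min_cols


def xterm_resize_sequence(rows: int, cols: int) -> str:
    # XTerm window manipulation: ESC [ 8 ; <rows> ; <cols> t resizes the
    # text area in characters. Emulators are free to ignore it.
    return f"\x1b[8;{rows};{cols}t"


def ensure_terminal_size(min_rows: int, min_cols: int) -> bool:
    # Note: shutil.get_terminal_size() prefers the COLUMNS/LINES env vars
    # when they are set, which would mask a successful resize.
    if is_large_enough(shutil.get_terminal_size(), min_rows, min_cols):
        return True
    # (1) Ask the emulator to resize, then give it a moment to apply.
    sys.stdout.write(xterm_resize_sequence(min_rows, min_cols))
    sys.stdout.flush()
    time.sleep(0.5)  # hypothetical settle delay
    if is_large_enough(shutil.get_terminal_size(), min_rows, min_cols):
        return True
    # (2) Fall back to asking the user.
    print(f"Please maximize this window (need at least {min_cols}x{min_rows}).")
    return False
```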


That's disturbing. The same root-finding code should be used for the web client and you should see the same error there (unless it's bypassing the code in some way). The logic is in invokeai.app.services.config and it goes like this:

- If `--root` is passed on the command line, use that.
- If `INVOKEAI_ROOT` is set, use that.
- Look at the parent of `.venv`, and if there is an `invokeai.yaml` configuration file there, use that directory.
- Otherwise use `~/invokeai`.
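Here is the lookup order above as a standalone function. It assumes the caller supplies the parsed `--root` value, the environment, and the `.venv` path (for example from `VIRTUAL_ENV`, which the standard `activate` scripts set). `resolve_root` is hypothetical; the real logic lives in `invokeai.app.services.config`. When `.venv` sits in the repo root with no `invokeai.yaml` beside it, the function falls through to `~/invokeai`, which is consistent with the `FileNotFoundError` reported above.

```python
from pathlib import Path
from typing import Mapping, Optional


def resolve_root(
    cli_root: Optional[str],
    env: Mapping[str, str],
    venv_dir: Optional[Path],
) -> Path:
    # 1. An explicit --root on the command line wins.
    if cli_root:
        return Path(cli_root).expanduser().resolve()
    # 2. Then the INVOKEAI_ROOT environment variable.
    if env.get("INVOKEAI_ROOT"):
        return Path(env["INVOKEAI_ROOT"]).expanduser().resolve()
    # 3. Then the parent of .venv, but only if it holds an invokeai.yaml.
    if venv_dir is not None:
        candidate = Path(venv_dir).resolve().parent
        if (candidate / "invokeai.yaml").exists():
            return candidate
    # 4. Otherwise fall back to ~/invokeai.
    return Path("~/invokeai").expanduser()
```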

Is invokeai-web finding the correct models.yaml file? If so, I wonder how it is doing it.

I tried to test a migration from the prenodes tag using `invokeai-configure --root="/home/invokeuser/userfiles/" --yes` and got this error:

[2023-06-21 13:44:39,495]::[InvokeAI]::INFO --> ** Migrating invokeai.init to invokeai.yaml

╭─────────────────────────────── Traceback (most recent call last) ────────────────────────────────╮

│ /home/invokeuser/venv/bin/invokeai-configure:8 in <module> │

│ │

│ 5 from invokeai.frontend.install import invokeai_configure │

│ 6 if __name__ == '__main__': │

│ 7 │ sys.argv[0] = re.sub(r'(-script\.pyw|\.exe)?$', '', sys.argv[0]) │

│ ❱ 8 │ sys.exit(invokeai_configure()) │

│ 9 │

│ │

│ /home/invokeuser/InvokeAI/invokeai/backend/install/invokeai_configure.py:844 in main │

│ │

│ 841 │ │ if not config.model_conf_path.exists(): │

│ 842 │ │ │ initialize_rootdir(config.root_path, opt.yes_to_all) │

│ 843 │ │ │

│ ❱ 844 │ │ models_to_download = default_user_selections(opt) │

│ 845 │ │ if opt.yes_to_all: │

│ 846 │ │ │ write_default_options(opt, new_init_file) │

│ 847 │ │ │ init_options = Namespace( │

│ │

│ /home/invokeuser/InvokeAI/invokeai/backend/install/invokeai_configure.py:631 in │

│ default_user_selections │

│ │

│ 628 │ return opts │

│ 629 │

│ 630 def default_user_selections(program_opts: Namespace) -> InstallSelections: │

│ ❱ 631 │ installer = ModelInstall(config) │

│ 632 │ models = installer.all_models() │

│ 633 │ return InstallSelections( │

│ 634 │ │ install_models=[models[installer.default_model()].path or models[installer.defau │

│ │

│ /home/invokeuser/InvokeAI/invokeai/backend/install/model_install_backend.py:98 in __init__ │

│ │

│ 95 │ │ │ │ prediction_type_helper: Callable[[Path],SchedulerPredictionType]=None, │

│ 96 │ │ │ │ access_token:str = None): │

│ 97 │ │ self.config = config │

│ ❱ 98 │ │ self.mgr = ModelManager(config.model_conf_path) │

│ 99 │ │ self.datasets = OmegaConf.load(Dataset_path) │

│ 100 │ │ self.prediction_helper = prediction_type_helper │

│ 101 │ │ self.access_token = access_token or HfFolder.get_token() │

│ │

│ /home/invokeuser/InvokeAI/invokeai/backend/model_management/model_manager.py:261 in __init__ │

│ │

│ 258 │ │ elif not isinstance(config, DictConfig): │

│ 259 │ │ │ raise ValueError('config argument must be an OmegaConf object, a Path or a s │

│ 260 │ │ │

│ ❱ 261 │ │ self.config_meta = ConfigMeta(**config.pop("__metadata__")) │

│ 262 │ │ # TODO: metadata not found │

│ 263 │ │ # TODO: version check │

│ 264 │

│ │

│ /home/invokeuser/venv/lib/python3.10/site-packages/omegaconf/dictconfig.py:517 in pop │

│ │

│ 514 │ │ │ │ │ else: │

│ 515 │ │ │ │ │ │ raise ConfigKeyError(f"Key not found: '{key!s}'") │

│ 516 │ │ except Exception as e: │

│ ❱ 517 │ │ │ self._format_and_raise(key=key, value=None, cause=e) │

│ 518 │ │

│ 519 │ def keys(self) -> KeysView[DictKeyType]: │

│ 520 │ │ if self._is_missing() or self._is_interpolation() or self._is_none(): │

│ │

│ /home/invokeuser/venv/lib/python3.10/site-packages/omegaconf/base.py:231 in _format_and_raise │

│ │

│ 228 │ │ msg: Optional[str] = None, │

│ 229 │ │ type_override: Any = None, │

│ 230 │ ) -> None: │

│ ❱ 231 │ │ format_and_raise( │

│ 232 │ │ │ node=self, │

│ 233 │ │ │ key=key, │

│ 234 │ │ │ value=value, │

│ │

│ /home/invokeuser/venv/lib/python3.10/site-packages/omegaconf/_utils.py:899 in format_and_raise │

│ │

│ 896 │ │ ex.ref_type = ref_type │

│ 897 │ │ ex.ref_type_str = ref_type_str │

│ 898 │ │

│ ❱ 899 │ _raise(ex, cause) │

│ 900 │

│ 901 │

│ 902 def type_str(t: Any, include_module_name: bool = False) -> str: │

│ │

│ /home/invokeuser/venv/lib/python3.10/site-packages/omegaconf/_utils.py:797 in _raise │

│ │

│ 794 │ │ ex.__cause__ = cause │

│ 795 │ else: │

│ 796 │ │ ex.__cause__ = None │

│ ❱ 797 │ raise ex.with_traceback(sys.exc_info()[2]) # set env var OC_CAUSE=1 for full trace │

│ 798 │

│ 799 │

│ 800 def format_and_raise( │

│ │

│ /home/invokeuser/venv/lib/python3.10/site-packages/omegaconf/dictconfig.py:515 in pop │

│ │

│ 512 │ │ │ │ │ │ │ f"Key not found: '{key!s}' (path: '{full}')" │

│ 513 │ │ │ │ │ │ ) │

│ 514 │ │ │ │ │ else: │

│ ❱ 515 │ │ │ │ │ │ raise ConfigKeyError(f"Key not found: '{key!s}'") │

│ 516 │ │ except Exception as e: │

│ 517 │ │ │ self._format_and_raise(key=key, value=None, cause=e) │

│ 518 │

╰──────────────────────────────────────────────────────────────────────────────────────────────────╯

ConfigKeyError: Key not found: '__metadata__'

full_key: __metadata__

object_type=dict

@ebr It turns out that both terminator and alacritty accept the xterm window-resizing command and report the new size of the window, but don't actually change the window size. So I put in a check for these emulators and fall back to asking the user to resize the window manually. I also did some more work on the forms to reduce their vertical height requirements.

If this works for you, please re-review and remove the requested changes.
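The fallback can be sketched as a simple check that skips the escape sequence for emulators known to acknowledge it but then ignore it. The detection markers are assumptions: terminator is assumed to export `TERMINATOR_UUID` into its shells, and alacritty is assumed to run with `TERM=alacritty` or to set `ALACRITTY_*` variables. Alacritty may also present as `xterm-256color`. The actual check in this PR may differ.

```python
from typing import Mapping

# Emulators reported in this thread to accept the xterm resize request and
# report the new size without actually resizing.
_IGNORES_RESIZE_TERM_PREFIXES = ("alacritty",)


def resize_request_is_trustworthy(env: Mapping[str, str]) -> bool:
    # Assumed marker: terminator exports TERMINATOR_UUID to child shells.
    if "TERMINATOR_UUID" in env:
        return False
    # Assumed markers: TERM=alacritty, or any ALACRITTY_* variable.
    if env.get("TERM", "").startswith(_IGNORES_RESIZE_TERM_PREFIXES):
        return False
    if any(key.startswith("ALACRITTY_") for key in env):
        return False
    return True


def resize_strategy(env: Mapping[str, str]) -> str:
    """Return 'escape-sequence' or 'ask-user' (hypothetical labels)."""
    return "escape-sequence" if resize_request_is_trustworthy(env) else "ask-user"
```

In practice this would be called as `resize_strategy(os.environ)`, since `os.environ` is a mapping.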


Thank you. The ability to migrate from earlier versions is not yet wrapped into the configure script. I'll add it today.
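The traceback above shows `ModelManager.__init__` calling `config.pop("__metadata__")` unconditionally. As a result, a 2.3-era `models.yaml` that lacks that key surfaces as a bare `ConfigKeyError`. Below is a minimal sketch of a friendlier guard. To stay self-contained, it uses a plain `dict` in place of the OmegaConf `DictConfig`. `split_metadata` and `LegacyModelsConfigError` are hypothetical names.

```python
from typing import Any, Dict, Tuple

METADATA_KEY = "__metadata__"


class LegacyModelsConfigError(Exception):
    """Raised when models.yaml predates the 3.0 layout (hypothetical)."""


def split_metadata(config: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return (metadata, model_entries) without mutating the caller's dict."""
    if METADATA_KEY not in config:
        # Fail with an actionable message instead of a raw key error.
        raise LegacyModelsConfigError(
            "models.yaml has no __metadata__ section; it looks like a 2.3 "
            "config. Run invokeai-configure --root <dir> to migrate it."
        )
    entries = dict(config)
    metadata = entries.pop(METADATA_KEY)
    return metadata, entries
```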


Sorry, I didn't realise it hadn't been added yet. FYI, in case it helps: a fresh install with `--yes` works as it should for me, but `--default_only` asks for user input (not sure which arguments are meant to be automated).


I'm getting the `ConfigKeyError: Key not found: '__metadata__'` error as well.

I tried running the `migrate_models_to_3.0.py` script, but it didn't help. Trying a fresh install (venv and all) now, but on a slow connection...


Try a fresh pull and then run invokeai-configure --root xxx on an existing 2.3 root directory. It should non-destructively upgrade the folder, and there shouldn't be a ConfigKeyError.
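Below is a minimal sketch of the kind of pre-flight check and backup that keeps such an upgrade non-destructive. It assumes a 2.3 root can be recognised by an `invokeai.init` file with no `invokeai.yaml` yet, which the log line "Migrating invokeai.init to invokeai.yaml" earlier in the thread suggests. `looks_like_legacy_root` and `backup_before_migrating` are hypothetical helpers, not the configure script's code.

```python
import shutil
from pathlib import Path


def looks_like_legacy_root(root: Path) -> bool:
    # Assumption: a 2.3 root has invokeai.init but no invokeai.yaml yet.
    return (root / "invokeai.init").exists() and not (root / "invokeai.yaml").exists()


def backup_before_migrating(path: Path) -> Path:
    """Copy e.g. models.yaml to models.yaml.orig once, before rewriting it."""
    backup = path.with_name(path.name + ".orig")
    # Never overwrite an earlier backup, so reruns stay non-destructive.
    if not backup.exists():
        shutil.copy2(path, backup)
    return backup
```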

@lstein I tested migration from 2.3 and prenodes, but it seems to miss any model that doesn't have a full path in `models.yaml`.

For example, it missed this model (and several others):

path: models/converted_ckpts/Counterfeit-V2.5_fp16_diffusers

but this one migrated:

path: /home/invokeuser/userfiles/models/converted_ckpts/disneyPixarCartoon_v10_diffusers


Thanks for testing. Looks like we're nearly there.


I found the problem (stupid change on my part earlier today). Relative path models should be migrated correctly now.
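Judging by the two example paths above, a relative `path` in a 2.3 `models.yaml` is relative to the runtime root. An unanchored `Path(...).exists()` check, however, resolves it against the current working directory, which is one plausible way such entries get dropped. Below is a minimal sketch of normalising paths before migration. `absolute_model_path` is hypothetical and not necessarily how the fix was done.

```python
from pathlib import Path


def absolute_model_path(root: Path, recorded: str) -> Path:
    """Anchor a models.yaml path at the runtime root if it is relative."""
    path = Path(recorded).expanduser()
    # Absolute entries are kept as-is; relative ones are joined to the root
    # rather than being interpreted relative to the current directory.
    return path if path.is_absolute() else root / path
```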


I can confirm it works as it should now and picks up all the models.


Fantastic.


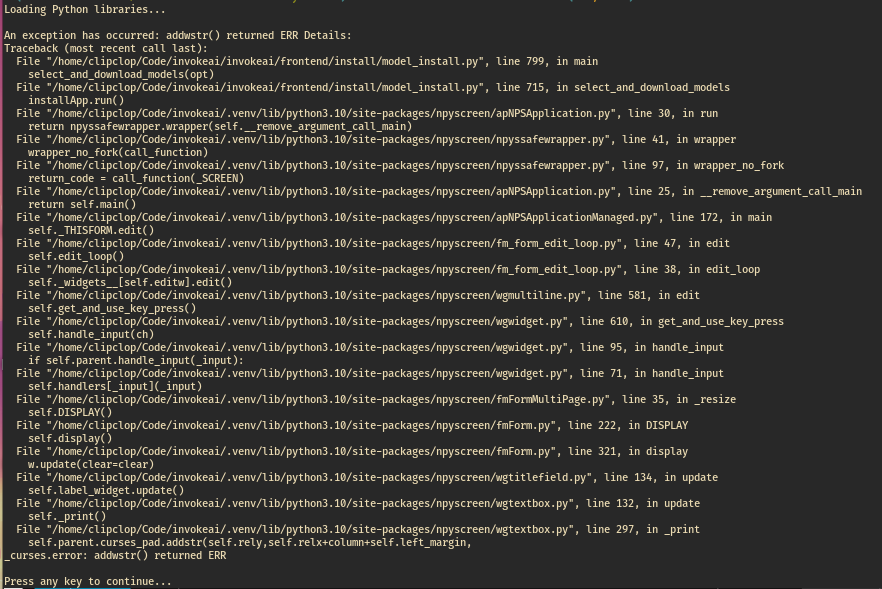

@lstein, unfortunately now whenever I unmaximize the window (but not in any other cases, like resizing by dragging), I get a gnarly traceback:

It was not crashing like this last time I tested; I was using the maximize/unmaximize trick as a quick way to un-wrap the lines.

The TUI looks and works perfectly when the terminal window is of a reasonable size or supports auto-resize, which I think will be the case for most users. I'm happy to live with my edge cases as long as the majority of users have no issues with this UI. I'll remove the requested changes, but maybe you want to consider reverting b727442 just to avoid the crash.


I can't reproduce the unmaximizing crash on alacritty or terminator and so can't confirm that the indicated commit is contributing to the problem. I will try on a Windows system.

@lstein I re-tested this and all works. I also pushed a commit fixing UI model selection, which broke due to the change in naming from `pipeline` to `main`.