Investigate and, if possible, implement caching of Fabric Docker images for integration tests

The Fabric integration tests download the Fabric Docker images every time an integration test runs. This is time-consuming, and the image versions do not change on a regular basis.

We should investigate whether external Docker images can be cached in a way that applies here. This should also be useful if we change how we set up a Fabric network for testing (e.g. test-network or minifabric).
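Any such cache needs a key that stays the same while the image versions are unchanged, so that a cached archive is reused until the versions are bumped. The sketch below is a hypothetical Python helper (`image_cache_key` is not part of this project) that hashes a sorted, de-duplicated list of image references; the resulting string could, for example, be used as the `key` input of the `actions/cache` action. The image names and tags in the usage section are illustrative assumptions.

```python
import hashlib


def image_cache_key(images: list[str], prefix: str = "fabric-images") -> str:
    """Build a deterministic cache key from a list of image references.

    Hypothetical helper: the key only changes when an image name or tag
    changes, so a cached archive can be reused across runs until the
    Fabric versions are bumped.
    """
    # Sort and de-duplicate so the key does not depend on list order.
    canonical = "\n".join(sorted(set(images)))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]
    return f"{prefix}-{digest}"


if __name__ == "__main__":
    # Illustrative image references; the tags are assumptions.
    images = [
        "hyperledger/fabric-peer:2.2",
        "hyperledger/fabric-orderer:2.2",
        "hyperledger/fabric-ca:1.4",
    ]
    print(image_cache_key(images))
```

Hashing the image list rather than, say, the date means a version bump automatically produces a new key, while unchanged versions keep hitting the same cache entry.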

If caching Docker images is not possible, an alternative would be to cache the downloaded Fabric binaries and build a local image from them, which may be quicker. Bear in mind that the binaries are compiled for Ubuntu rather than Alpine, so the local image would need an Ubuntu base (I believe Ubuntu provides a minimal base image that would suffice).

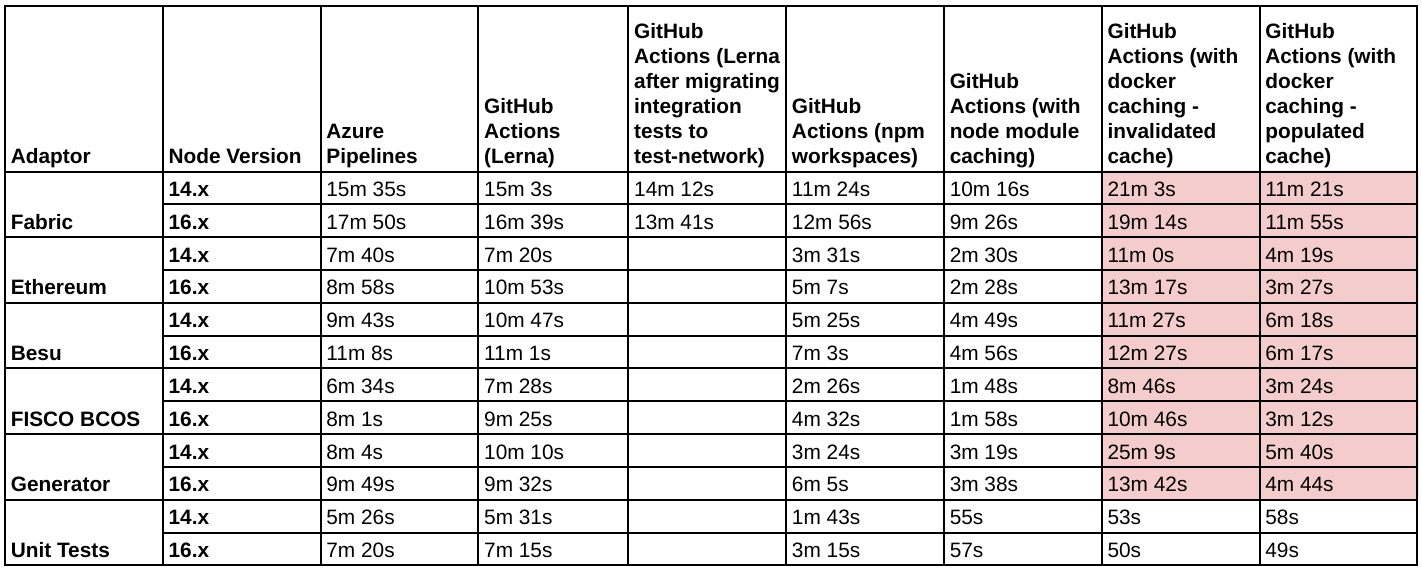

After the draft implementation in #1419, it seems unlikely that we could get any improvement in the performance of the pipelines by caching the docker images. The workflow execution times for the various scenarios look like this as of now:

There are two major issues with caching the Docker images in GitHub Actions workflows:

- Saving and restoring the Docker images to and from compressed archives is slow. In the draft PR, the save step took between 6m 27s and 20m 11s and the restore step between 2m and 4m, which makes the overall workflow execution times significantly worse.

- The image cache for each workflow is 1.5GB to 1.9GB for each of the 5 adaptors we have integration tests for, i.e. roughly 7.5GB to 9.5GB in total. That is already close to the 10GB cache size limit for public repositories in GitHub, so we could easily hit it when multiple PRs have their own unique caches at the same time.
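The cache-size concern comes down to simple arithmetic. The following is a minimal sketch using the figures from this issue (1.5GB to 1.9GB per adaptor, 5 adaptors, a 10GB limit); the function names are hypothetical, and the model assumes that every concurrent PR creates its own complete, separately keyed set of adaptor caches.

```python
# Figures taken from this issue; the model itself is a simplification.
CACHE_LIMIT_GB = 10.0  # GitHub cache size limit quoted above
ADAPTORS = 5           # adaptors with integration tests


def total_cache_gb(per_adaptor_gb: float, cache_sets: int = 1,
                   adaptors: int = ADAPTORS) -> float:
    """Total cache usage when `cache_sets` distinct keys (e.g. one per PR) coexist."""
    return per_adaptor_gb * adaptors * cache_sets


def max_cache_sets(per_adaptor_gb: float, adaptors: int = ADAPTORS,
                   limit_gb: float = CACHE_LIMIT_GB) -> int:
    """Number of complete sets of adaptor caches that fit under the limit."""
    return int(limit_gb // (per_adaptor_gb * adaptors))


if __name__ == "__main__":
    for size in (1.5, 1.9):
        print(f"{size}GB per adaptor: one set = {total_cache_gb(size):.1f}GB, "
              f"sets that fit = {max_cache_sets(size)}")
```

Under these assumptions only one complete set of caches fits in either case (7.5GB or 9.5GB), so a second PR with its own cache key would already push the total past 10GB.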

Closing, as the investigation has shown that there is little value in doing this.