Added multitoken training for textual inversion. Issue 369

I represent a concept with multiple tokens by adding num_vec_per_token tokens to the tokenizer, each of which can be initialized from the initial_token. The tokens are labeled placeholder_token_i, where i is the index of the token. This does make it more verbose, but it accomplishes the goal. An alternative is to do this in an inherited tokenizer class.

I also added multiple ways to initialize the tokens. For example, if initialize_rest_random is set to true and you have an initial token of "white dog", it'll just set all the tokens before it to random noise. But the default is for the initial tokens to be assigned in a cyclic way. For example, if num_vec_per_token is 4 and initial_token is "white dog", the weights would initially be "white dog white dog".
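As a rough illustration of the two initialization modes described above, here is a minimal pure-Python sketch. The helper names (placeholder_names, init_embeddings), the underscore in the generated token names, and the use of plain lists instead of embedding tensors are assumptions made for the example, not the PR's actual code.

```python
import random


def placeholder_names(placeholder_token, num_vec_per_token):
    """Names of the extra tokens: <placeholder>_0, <placeholder>_1, ..."""
    return [f"{placeholder_token}_{i}" for i in range(num_vec_per_token)]


def init_embeddings(init_vecs, num_vec_per_token, initialize_rest_random=False, seed=0):
    """Build the starting vectors for the new tokens (hypothetical helper).

    Default: repeat the initializer vectors cyclically.
    initialize_rest_random=True: random noise first, initializer vectors last,
    following the description in the comment above.
    """
    if not initialize_rest_random:
        return [list(init_vecs[i % len(init_vecs)]) for i in range(num_vec_per_token)]
    rng = random.Random(seed)
    dim = len(init_vecs[0])
    n_random = max(num_vec_per_token - len(init_vecs), 0)
    noise = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_random)]
    kept = [list(v) for v in init_vecs[: num_vec_per_token - n_random]]
    return noise + kept


# Toy 2-dimensional stand-ins for the embeddings of "white" and "dog".
white, dog = [1.0, 0.0], [0.0, 1.0]
names = placeholder_names("frida", 4)
cyclic = init_embeddings([white, dog], 4)
mixed = init_embeddings([white, dog], 4, initialize_rest_random=True)
```

Here cyclic holds the vectors for "white dog white dog", while mixed holds two noise vectors followed by "white dog".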

@patil-suraj Sounds good! I'll also fix the merge conflict now. And let me know if you want me to work on the tokenizer approach like the original implementation. I have a guess on how to do it!

@patil-suraj could you take a look here? :-)

cc @patil-suraj here - let me know if you won't have time for this at the moment. I think this is still important though no?

And then how do we use multitoken embeddings during inference? Do we have to explicitly prompt with "a holiday card with {frida0} {frida1} {frida2}", or is there a way to just prompt "with {frida}" and the tokenizer unpacks that to its three tokens?

@keturn Thanks for the q! For now, I'm doing the "a holiday card with {frida0} {frida1} {frida2}" approach, but one thing I found in the original implementation is that you can concatenate the embeddings of the tokens into a single vector. So, for example, it can be used like that with automatic1111's API.

But to do this, I think the tokenizer for the text encoder in the transformers library might need some changes, or we might need to make a new tokenizer for that. Will try it out!

So, for example,

learned_embeds = accelerator.unwrap_model(text_encoder).get_input_embeddings().weight[placeholder_token_ids]

learned_embeds_dict = {}

learned_embeds_dict[args.placeholder_token] = learned_embeds.detach().cpu()

torch.save(learned_embeds_dict, os.path.join(args.output_dir, f"learned_embeds_{global_step}.bin"))

can be a file you can use for the latent-diffusion/automatic1111 repo.
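On the prompt side, the unpacking asked about earlier (writing "{frida}" once and having it expand to its numbered tokens) could be sketched as a small preprocessing step before tokenization. This is only an illustration: expand_placeholders, the token_counts mapping, and the frida_0 style naming are assumptions, not an API of transformers or diffusers.

```python
import re


def expand_placeholders(prompt, token_counts):
    """Replace {name} with "name_0 name_1 ..." for every known placeholder.

    token_counts maps a placeholder name to its number of vectors
    (hypothetical structure). Unknown {names} are left untouched.
    """
    def repl(match):
        name = match.group(1)
        n = token_counts.get(name)
        if n is None:
            return match.group(0)
        return " ".join(f"{name}_{i}" for i in range(n))

    return re.sub(r"\{(\w+)\}", repl, prompt)


expanded = expand_placeholders("a holiday card with {frida}", {"frida": 3})
untouched = expand_placeholders("a photo of {cat}", {"frida": 3})
```

The expanded string can then be passed to the regular tokenizer, which already knows the numbered tokens.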

Hello, while working on a personal project I think I now have an idea of how to do the tokenizer by inheriting, so I'll try pushing that today or tomorrow.

Hi. I added a new multitokenCLIPTokenizer which was inspired by @keturn 's suggestion of switching between

ok. Currently a bit buggy but fixing.

Ok! Fixed the bug. I'll post some results here once I'm done training some examples.

Hi, could this be used to load automatic1111 embeddings to diffusers sd model? I was trying to achieve this with an extra tokenizer class similar to multitokenCLIPTokenizer and adding the loaded embeddings to the text_encoder. However i get black images on stable-diffusion-2-1 and images on stable-diffusion-2-1-base are nothing like the previews from the textual inversion embedding i loaded. Am i missing something?

@pkurz3nd interesting. Can you show some examples? I heard one issue is that in automatic1111 you might use a different vae like anything-v3-vae. I'll try adding a load script today if you want to test it out!

Hi, thanks for the quick reply. here is my notebook. https://colab.research.google.com/drive/1faGxmnfs3kARqa7GTmGiWKsqd-cPAE1v?usp=sharing

this is the embedding i was testing with: https://huggingface.co/spaablauw/FloralMarble

when i load it in torch, it says: 'sd_checkpoint': '4bdfc29c', 'sd_checkpoint_name': 'SD2.1-768'

it would be awesome if you could check out the script, i'll also keep trying.

sounds good lemme check

@pkurz3nd Ok so checked your code. This might not be the sole issue but I don't think you assign the loaded embedding matrix to the tokenizer embeddings. So basically the tokenizer would prob just have random embeddings. Will make a load method and test it out on my end!

sry you are right, i somehow stopped writing on the very last line haha

this is the updated notebook https://colab.research.google.com/drive/1-U0IdnYDGI_MJV6uC89VVhtTN51F0S_L?usp=sharing

works like a charm now with the stabilityai/stable-diffusion-2-1-base version

with the stabilityai/stable-diffusion-2-1 version i still get black images but thats a different problem

thanks for looking into it

No worries! Looks awesome btw. For a blank image, it might be either a safety checker problem which u can disable by setting safety_checker=None or it might be an fp16 problem but I don't think you are using fp16.

I think I recreated what you did in this colab notebook tho I'm not able to get as good results as you

Found a bit of a bug for training. Fixed and retraining now. I'll change this pr to WIP for a bit while I confirm there aren't any more bugs

i was running your notebook, i got an error that text in tokenizer.encode(...) is None, and i think there is a bug in the very last line of the load_multitoken_tokenizer function

for i, placeholder_token_id in enumerate(placeholder_token_ids):

token_embeds[placeholder_token_id] = token_embeds[i]
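One reading of the quoted line is that it copies rows of the embedding table onto itself (token_embeds[i]) instead of copying the loaded embeddings into the placeholder rows. The sketch below shows that intended assignment under this assumption; assign_learned_embeddings and learned_embeds are hypothetical names, and plain lists stand in for tensors. It is a guess at the intent, not the fix that was actually pushed.

```python
def assign_learned_embeddings(token_embeds, placeholder_token_ids, learned_embeds):
    """Copy each loaded vector into the row of its placeholder token."""
    if len(placeholder_token_ids) != len(learned_embeds):
        raise ValueError("need exactly one learned vector per placeholder token")
    for i, placeholder_token_id in enumerate(placeholder_token_ids):
        # Source is the loaded embedding i, not row i of the table itself.
        token_embeds[placeholder_token_id] = list(learned_embeds[i])
    return token_embeds


# Toy table with 6 rows; the last two rows belong to the placeholder tokens.
table = [[0.0, 0.0] for _ in range(6)]
loaded = [[1.0, 2.0], [3.0, 4.0]]
assign_learned_embeddings(table, [4, 5], loaded)
```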

thanks for the bug check! will fix in a bit

@pkurz3nd should be fixed!

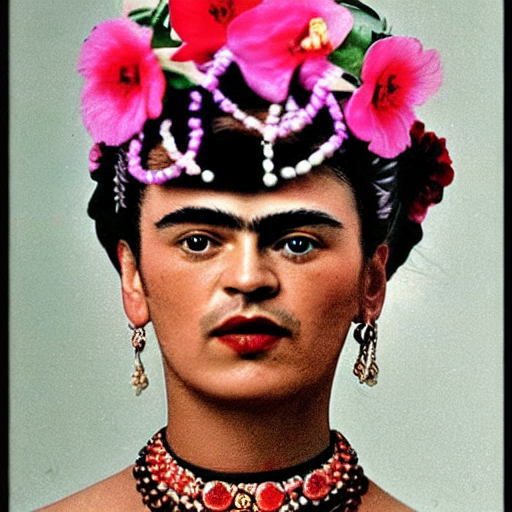

Currently working on a weird bug where regardless of what initializer token I set for training, I get various variations of this lady.

this has never happened before but I thought it would be pretty interesting so I put it here.

That's Frida Kahlo, which makes some sense if you're trying to train the name Frida.

@keturn Ohhhh so the tokenizer isn't replacing the texts properly. Thanks a bunch

The issue turned out to be that I was overloading the encode method but not the call method. So the models were learning but logging the wrong image since in the pipeline the call method is used. Will fix it in a bit!
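The failure mode described above can be reproduced with toy classes: when a subclass overrides encode but not __call__, any caller that goes through __call__ silently skips the placeholder expansion. The classes below are made-up stand-ins written for this illustration, not the transformers tokenizer or the PR's multitokenCLIPTokenizer.

```python
class BaseTokenizer:
    """Toy tokenizer with two independent entry points."""

    def encode(self, text):
        return text.split()

    def __call__(self, text):
        return text.split()


class EncodeOnlyTokenizer(BaseTokenizer):
    """Expands placeholders in encode() only, which is the bug."""

    def __init__(self, token_counts):
        self.token_counts = token_counts

    def _expand(self, text):
        words = []
        for word in text.split():
            n = self.token_counts.get(word, 0)
            words.extend(f"{word}_{i}" for i in range(n)) if n else words.append(word)
        return " ".join(words)

    def encode(self, text):
        return super().encode(self._expand(text))


class FixedTokenizer(EncodeOnlyTokenizer):
    """Also routes __call__ through the expansion."""

    def __call__(self, text):
        return super().__call__(self._expand(text))


buggy = EncodeOnlyTokenizer({"frida": 2})
fixed = FixedTokenizer({"frida": 2})
via_encode = buggy.encode("a photo of frida")
via_call_buggy = buggy("a photo of frida")
via_call_fixed = fixed("a photo of frida")
```

With the buggy class, via_encode contains frida_0 and frida_1 while via_call_buggy still contains the plain word frida, which matches a model that trains on the new tokens while the pipeline samples from the original "Frida" concept.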

Ok! Should be fixed. I'll start writing some tests on the hugging face test for cleaner code.

While I'm doing this pr, I think I'll add progressive tokens from latent-diffusion. The basic idea is, if I understand correctly, you first train on one token then you add another and another, etc. I am curious how it'll do compared to training from scratch on say 10 tokens.
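To make the progressive idea concrete, here is a minimal sketch of a schedule that starts with one trainable token and unlocks another every fixed number of steps. The linear schedule, the function name active_token_count, and the steps_per_token parameter are assumptions for illustration; the schedule used in latent-diffusion may differ.

```python
def active_token_count(step, max_tokens, steps_per_token):
    """Number of placeholder tokens in use at a given training step.

    Starts at 1 and adds one token every steps_per_token steps,
    capped at max_tokens (hypothetical linear schedule).
    """
    if steps_per_token <= 0:
        raise ValueError("steps_per_token must be positive")
    return min(1 + step // steps_per_token, max_tokens)


# With 10 tokens and 500 steps per token, all tokens are active from step 4500.
schedule = [active_token_count(s, 10, 500) for s in (0, 499, 500, 4500, 10000)]
```

During training, only the first active_token_count(step, ...) placeholder tokens would be inserted into the prompt, so comparing against training all 10 tokens from scratch only requires fixing the count at max_tokens.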