[BUG]: Out of memory when training stable diffusion on RTX2070

🐛 Describe the bug

Training Stable Diffusion crashes with the error: CUDA out of memory.

Is it possible to run on RTX2070?

Thanks!

Environment

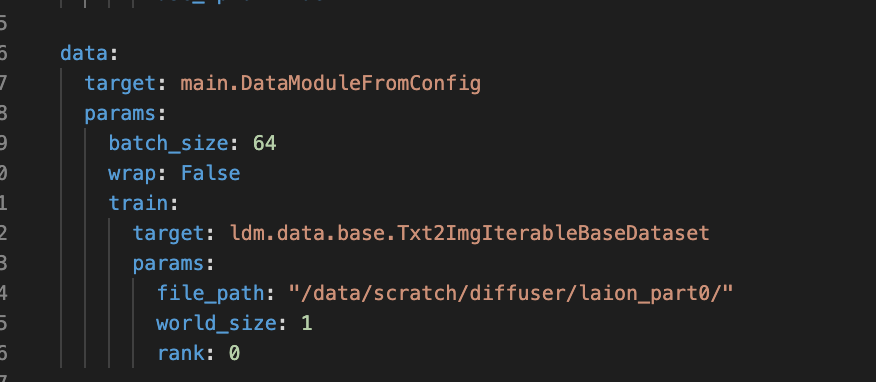

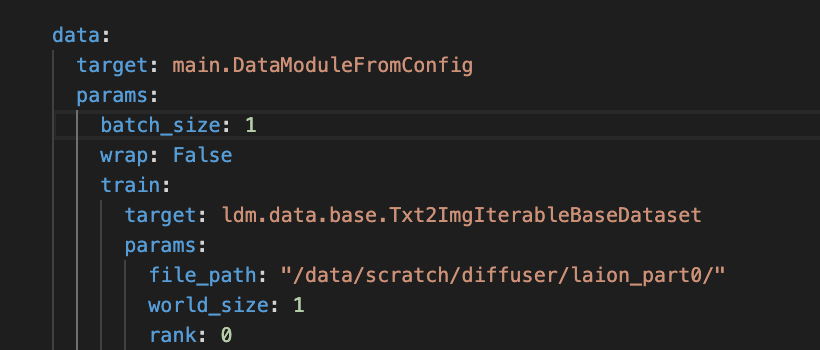

- OS: Ubuntu 20.04
- GPU: RTX2070 + 8GB RAM
- Python: 3.8
- pytorch: 1.11
- cuda: 11.3
- Colossalai: 0.1.10
- Stable Diffusion pre-trained models: downloaded from https://huggingface.co/CompVis/stable-diffusion-v1-4/tree/main
- train_colossalai.yaml: only modified "data"->"file_path"; there are 4 jpg files of size 256*256 for testing

I don't think whoever wrote that article claiming that 8GB GPUs could easily be used for training has answered any questions about it yet.

That includes the Reddit account they used to spam-market this release: absolutely zero engagement with the community.

What does your train.yaml look like? If you want to train on an RTX2070, you must decrease your batch size from 64 to 4.
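
If it is unclear how small the batch size has to be on a given card, one option is to probe for it by halving on every out-of-memory failure. The following is only a minimal sketch, not part of the ColossalAI example: `find_largest_batch_size` and `fake_step` are hypothetical names, and `fake_step` merely simulates a training step that runs out of memory above batch size 4. It relies on the fact that PyTorch reports a CUDA OOM as a `RuntimeError` whose message contains "out of memory".

```python
def find_largest_batch_size(try_step, start=64, minimum=1):
    """Halve the batch size until try_step(batch_size) stops raising OOM.

    try_step is a caller-supplied function (hypothetical here) that runs
    one forward/backward pass at the given batch size.
    """
    batch_size = start
    while batch_size >= minimum:
        try:
            try_step(batch_size)
            return batch_size
        except RuntimeError as err:
            # Only swallow out-of-memory errors; re-raise anything else.
            if "out of memory" not in str(err):
                raise
            batch_size //= 2
    raise RuntimeError("out of memory at every batch size tried")


def fake_step(batch_size):
    # Simulated training step (assumption): pretend the GPU only fits
    # batches of 4 samples or fewer.
    if batch_size > 4:
        raise RuntimeError("CUDA out of memory (simulated)")


print(find_largest_batch_size(fake_step))  # prints 4
```

In a real run, `try_step` would wrap one actual training step, and it would likely help to call `torch.cuda.empty_cache()` between attempts so memory from the failed attempt is released before retrying. The value found this way can then be written into the batch size setting of the training YAML.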