GPU memory used for stable diffusion

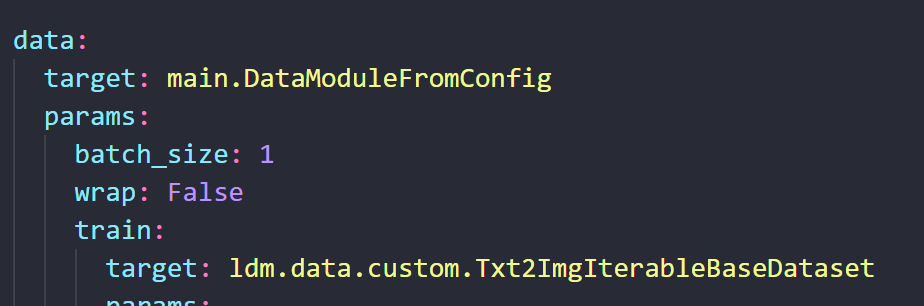

I am fine-tuning Stable Diffusion on a single RTX 3090 with the following command:

```
python main.py --logdir /tmp -t --postfix test -b configs/train_colossalai.yaml
```

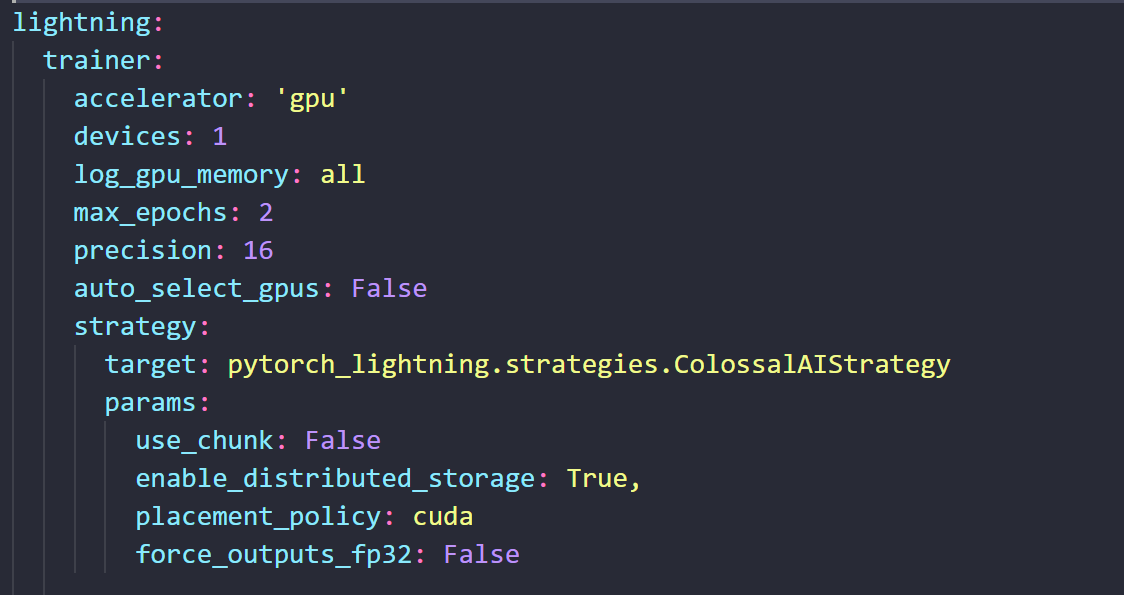

The config for Lightning is as follows:

I set the batch size to 1, but it still runs out of memory.

Is this normal?

Thank you!
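
A rough estimate may help explain why batch size 1 can still run out of memory. With mixed-precision Adam training, each trainable parameter typically carries about 16 bytes of model state (fp16 weights and gradients, fp32 master weights, and two Adam moments), and that cost does not shrink with the batch size; only the activations do. The sketch assumes roughly 860M trainable UNet parameters (the approximate size of the Stable Diffusion v1 UNet) and ignores activations, the frozen VAE and text encoder, and CUDA overhead, so it is a lower bound rather than a measurement.

```python
# Rough, batch-size-independent "model state" estimate for mixed-precision Adam.
# Assumptions: every UNet parameter is trainable; activations, the frozen
# VAE/text encoder and framework overhead are NOT included.

GIB = 2**30

BYTES_PER_PARAM = {
    "fp16_weights": 2,
    "fp16_grads": 2,
    "fp32_master_weights": 4,
    "adam_exp_avg": 4,      # first moment (m)
    "adam_exp_avg_sq": 4,   # second moment (v)
}


def model_state_gib(num_params: int) -> float:
    """Return the model-state footprint in GiB for the given parameter count."""
    total_bytes = num_params * sum(BYTES_PER_PARAM.values())
    return total_bytes / GIB


if __name__ == "__main__":
    unet_params = 860_000_000  # assumed parameter count, not measured
    print(f"{model_state_gib(unet_params):.1f} GiB")
```

Under these assumptions it prints about 12.8 GiB, more than half of a 24 GB card before any activations are stored, which is consistent with (though not proof of) an out-of-memory error at batch size 1.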

I can get it to start training on my RTX 2070 SUPER by setting `placement_policy` to `cpu` or `auto`, but it crashes before training finishes.

@DonStroganotti Setting `placement_policy` to `cpu` works for me too, but like you, it crashes after 5 epochs.

Besides GPU memory, I'm curious how much system RAM is needed for training.

My device has 32GB of RAM and an RTX 3090 (24GB), but it runs out of memory after a few steps.
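
To reason about the host-RAM question, here is a simplified and hypothetical model of CPU offloading. It assumes that with `placement_policy` set to `cpu`, the fp32 master weights and both Adam moments live in host RAM while the fp16 weights and gradients are counted on the GPU; ColossalAI's actual placement may differ, since chunks can move between devices during a step. The 860M parameter count is again an assumption, and 32 GB is treated as 32 GiB for simplicity.

```python
# Hypothetical split of model states between GPU and host RAM under CPU offloading.
# Assumption: fp32 master weights + Adam m + Adam v stay on the CPU,
# fp16 weights + fp16 grads are counted on the GPU.

GIB = 2**30


def split_model_states(num_params: int, offload_to_cpu: bool) -> dict:
    """Return model-state memory (GiB) per device for mixed-precision Adam."""
    fp16_bytes = num_params * (2 + 2)      # fp16 weights + fp16 grads
    fp32_bytes = num_params * (4 + 4 + 4)  # fp32 master + Adam m + Adam v
    if offload_to_cpu:
        return {"gpu_gib": fp16_bytes / GIB, "cpu_gib": fp32_bytes / GIB}
    return {"gpu_gib": (fp16_bytes + fp32_bytes) / GIB, "cpu_gib": 0.0}


def host_ram_headroom_gib(total_ram_gib: float, num_params: int) -> float:
    """RAM left for data loading, checkpoint loading and the OS after offloading."""
    offloaded = split_model_states(num_params, offload_to_cpu=True)["cpu_gib"]
    return total_ram_gib - offloaded


if __name__ == "__main__":
    params = 860_000_000  # assumed UNet parameter count
    print(split_model_states(params, offload_to_cpu=True))
    print(f"headroom on a 32 GiB machine: {host_ram_headroom_gib(32, params):.1f} GiB")
```

Under these assumptions the offloaded states take roughly 9.6 GiB of host RAM, leaving about 22 GiB. If RAM still runs out after a few steps, the steady-state optimizer states alone are unlikely to be the whole story, so transient copies (for example during checkpoint loading) or data-loader workers may be worth checking; that is a guess, not a diagnosis.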

I have four RTX 3090s; I'll try it next week.
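
Whether more GPUs reduce per-GPU memory depends on whether model states are replicated or sharded. Under plain data parallelism every GPU holds a full copy; under ZeRO-stage-3-style sharding (the idea ColossalAI's Gemini applies in chunk-based form) they are split roughly evenly across GPUs. This sketch assumes the same 16 bytes per parameter and 860M parameters as above, and ignores activations, which are per GPU in both cases.

```python
# Per-GPU model-state memory: replicated (plain data parallel) vs. evenly sharded.
GIB = 2**30
# Assumed bytes per trainable parameter for mixed-precision Adam:
# fp16 weights (2) + fp16 grads (2) + fp32 master (4) + Adam m (4) + Adam v (4)
BYTES_PER_PARAM = 16


def per_gpu_model_state_gib(num_params: int, world_size: int, sharded: bool) -> float:
    """Model-state memory per GPU in GiB; activations are not included."""
    if world_size < 1:
        raise ValueError("world_size must be >= 1")
    total_gib = num_params * BYTES_PER_PARAM / GIB
    # Sharding splits the states evenly; plain data parallelism replicates them.
    return total_gib / world_size if sharded else total_gib


if __name__ == "__main__":
    params = 860_000_000  # assumed UNet parameter count
    for sharded in (False, True):
        print(f"sharded={sharded}: {per_gpu_model_state_gib(params, 4, sharded):.1f} GiB per GPU")
```

With four GPUs this gives about 12.8 GiB per GPU when replicated and about 3.2 GiB when sharded, so extra GPUs mainly help if the strategy actually shards model states.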

We have made many updates since then. This issue was closed due to inactivity. Thanks.