DataLoss Error with Tensorflow

Have you checked the FAQ? https://github.com/google/deepvariant/blob/r1.4/docs/FAQ.md: Yes

Describe the issue: At the call_variants.py step, the run fails with the error tensorflow.python.framework.errors_impl.DataLossError: truncated record at 19179998357' failed with EOF reached

Setup

- Operating system: CentOS7

- DeepVariant version: 1.4.0

- Installation method (Docker, built from source, etc.): singularity run with SIF image pulled from docker://google/deepvariant:"${BIN_VERSION}"

- Type of data: sequencing instrument: BGI, reference genome: hg19

Steps to reproduce:

- Command:

    singularity run \
      -B "/paedyl01/disk1/yangyxt,/usr/lib/locale:/usr/lib/locale,/tmp:/paedyl01/disk1/yangyxt/test_tmp" \
      --workdir /paedyl01/disk1/yangyxt \
      ${image} \
      /opt/deepvariant/bin/run_deepvariant \
      --model_type=${model_type} \
      --ref="${ref_genome}" \
      --reads="${bam_file}" \
      ${region_arg} \
      --output_vcf="${output_vcf}" \
      --output_gvcf="${output_gvcf}" \
      --intermediate_results_dir "/paedyl01/disk1/yangyxt/test_tmp" \
      --num_shards=${threads} && \
    ls -lh ${output_vcf} && \
    ls -lh ${output_gvcf}

- Error trace:

***** Running the command:*****

time /opt/deepvariant/bin/call_variants --outfile "/paedyl01/disk1/yangyxt/test_tmp/call_variants_output.tfrecord.gz" --examples "/paedyl01/disk1/yangyxt/test_tmp/make_examples.tfrecord@14.gz" --checkpoint "/opt/models/wgs/model.ckpt" --openvino_model_dir "/paedyl01/disk1/yangyxt/test_tmp"

I0826 20:44:28.894064 47737984214848 call_variants.py:317] From /paedyl01/disk1/yangyxt/test_tmp/make_examples.tfrecord-00000-of-00014.gz.example_info.json: Shape of input examples: [100, 221, 7], Channels of input examples: [1, 2, 3, 4, 5, 6, 19].

I0826 20:44:28.898550 47737984214848 call_variants.py:317] From /opt/models/wgs/model.ckpt.example_info.json: Shape of input examples: [100, 221, 7], Channels of input examples: [1, 2, 3, 4, 5, 6, 19].

2022-08-26 20:44:28.903729: I tensorflow/core/platform/cpu_feature_guard.cc:151] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2022-08-26 20:44:28.905866: I tensorflow/core/common_runtime/process_util.cc:146] Creating new thread pool with default inter op setting: 3. Tune using inter_op_parallelism_threads for best performance.

WARNING:tensorflow:Using temporary folder as model directory: /tmp/pbs.1173981.omics/tmpag6nq5vt

W0826 20:44:28.952679 47737984214848 estimator.py:1864] Using temporary folder as model directory: /tmp/pbs.1173981.omics/tmpag6nq5vt

INFO:tensorflow:Using config: {'_model_dir': '/tmp/pbs.1173981.omics/tmpag6nq5vt', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': , '_keep_checkpoint_max': 100000, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_experimental_max_worker_delay_secs': None, '_session_creation_timeout_secs': 7200, '_checkpoint_save_graph_def': True, '_service': None, '_cluster_spec': ClusterSpec({}), '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

I0826 20:44:28.953302 47737984214848 estimator.py:202] Using config: {'_model_dir': '/tmp/pbs.1173981.omics/tmpag6nq5vt', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': , '_keep_checkpoint_max': 100000, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_experimental_max_worker_delay_secs': None, '_session_creation_timeout_secs': 7200, '_checkpoint_save_graph_def': True, '_service': None, '_cluster_spec': ClusterSpec({}), '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

I0826 20:44:28.953605 47737984214848 call_variants.py:446] Writing calls to /paedyl01/disk1/yangyxt/test_tmp/call_variants_output.tfrecord.gz

INFO:tensorflow:Calling model_fn.

I0826 20:44:29.309295 47737984214848 estimator.py:1173] Calling model_fn.

/usr/local/lib/python3.8/dist-packages/tf_slim/layers/layers.py:1083: UserWarning: layer.apply is deprecated and will be removed in a future version. Please use layer.__call__ method instead.

outputs = layer.apply(inputs)

/usr/local/lib/python3.8/dist-packages/tf_slim/layers/layers.py:678: UserWarning: layer.apply is deprecated and will be removed in a future version. Please use layer.__call__ method instead.

outputs = layer.apply(inputs, training=is_training)

/usr/local/lib/python3.8/dist-packages/tf_slim/layers/layers.py:2441: UserWarning: layer.apply is deprecated and will be removed in a future version. Please use layer.__call__ method instead.

outputs = layer.apply(inputs)

/usr/local/lib/python3.8/dist-packages/tf_slim/layers/layers.py:118: UserWarning: layer.apply is deprecated and will be removed in a future version. Please use layer.__call__ method instead.

outputs = layer.apply(inputs)

/usr/local/lib/python3.8/dist-packages/tf_slim/layers/layers.py:1638: UserWarning: layer.apply is deprecated and will be removed in a future version. Please use layer.__call__ method instead.

outputs = layer.apply(inputs, training=is_training)

INFO:tensorflow:Done calling model_fn.

I0826 20:44:33.173107 47737984214848 estimator.py:1175] Done calling model_fn.

INFO:tensorflow:Graph was finalized.

I0826 20:44:34.048544 47737984214848 monitored_session.py:247] Graph was finalized.

INFO:tensorflow:Restoring parameters from /opt/models/wgs/model.ckpt

I0826 20:44:34.048974 47737984214848 saver.py:1399] Restoring parameters from /opt/models/wgs/model.ckpt

INFO:tensorflow:Running local_init_op.

I0826 20:44:34.790676 47737984214848 session_manager.py:531] Running local_init_op.

INFO:tensorflow:Done running local_init_op.

I0826 20:44:34.816158 47737984214848 session_manager.py:534] Done running local_init_op.

INFO:tensorflow:Reloading EMA...

I0826 20:44:35.138201 47737984214848 modeling.py:418] Reloading EMA...

INFO:tensorflow:Restoring parameters from /opt/models/wgs/model.ckpt

I0826 20:44:35.138464 47737984214848 saver.py:1399] Restoring parameters from /opt/models/wgs/model.ckpt

I0826 20:44:37.459590 47737984214848 call_variants.py:462] Processed 1 examples in 1 batches [849.953 sec per 100]

I0826 20:46:44.255733 47737984214848 call_variants.py:462] Processed 50001 examples in 98 batches [0.271 sec per 100]

I0826 20:48:48.666643 47737984214848 call_variants.py:462] Processed 100001 examples in 196 batches [0.260 sec per 100]

I0826 20:50:53.094218 47737984214848 call_variants.py:462] Processed 150001 examples in 293 batches [0.256 sec per 100]

I0826 20:52:58.984037 47737984214848 call_variants.py:462] Processed 200001 examples in 391 batches [0.255 sec per 100]

I0826 20:55:03.618282 47737984214848 call_variants.py:462] Processed 250001 examples in 489 batches [0.254 sec per 100]

I0826 20:57:06.583475 47737984214848 call_variants.py:462] Processed 300001 examples in 586 batches [0.253 sec per 100]

I0826 20:59:10.820679 47737984214848 call_variants.py:462] Processed 350001 examples in 684 batches [0.252 sec per 100]

I0826 21:01:15.474886 47737984214848 call_variants.py:462] Processed 400001 examples in 782 batches [0.252 sec per 100]

I0826 21:03:18.836436 47737984214848 call_variants.py:462] Processed 450001 examples in 879 batches [0.251 sec per 100]

I0826 21:05:24.652524 47737984214848 call_variants.py:462] Processed 500001 examples in 977 batches [0.251 sec per 100]

I0826 21:07:30.681700 47737984214848 call_variants.py:462] Processed 550001 examples in 1075 batches [0.251 sec per 100]

I0826 21:09:35.367410 47737984214848 call_variants.py:462] Processed 600001 examples in 1172 batches [0.251 sec per 100]

I0826 21:11:41.218489 47737984214848 call_variants.py:462] Processed 650001 examples in 1270 batches [0.251 sec per 100]

I0826 21:13:47.358545 47737984214848 call_variants.py:462] Processed 700001 examples in 1368 batches [0.251 sec per 100]

I0826 21:15:52.436908 47737984214848 call_variants.py:462] Processed 750001 examples in 1465 batches [0.251 sec per 100]

I0826 21:17:58.339728 47737984214848 call_variants.py:462] Processed 800001 examples in 1563 batches [0.251 sec per 100]

I0826 21:20:07.519950 47737984214848 call_variants.py:462] Processed 850001 examples in 1661 batches [0.252 sec per 100]

I0826 21:22:14.806241 47737984214848 call_variants.py:462] Processed 900001 examples in 1758 batches [0.252 sec per 100]

I0826 21:24:23.524628 47737984214848 call_variants.py:462] Processed 950001 examples in 1856 batches [0.252 sec per 100]

Traceback (most recent call last):

File "/usr/local/lib/python3.8/dist-packages/tensorflow/python/client/session.py", line 1380, in _do_call

return fn(*args)

File "/usr/local/lib/python3.8/dist-packages/tensorflow/python/client/session.py", line 1363, in _run_fn

return self._call_tf_sessionrun(options, feed_dict, fetch_list,

File "/usr/local/lib/python3.8/dist-packages/tensorflow/python/client/session.py", line 1456, in _call_tf_sessionrun

return tf_session.TF_SessionRun_wrapper(self._session, options, feed_dict,

tensorflow.python.framework.errors_impl.DataLossError: truncated record at 19179998357' failed with EOF reached

[[{{node IteratorGetNext}}]]

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/tmp/pbs.1173981.omics/Bazel.runfiles_pfgek2w5/runfiles/com_google_deepvariant/deepvariant/call_variants.py", line 513, in <module>

Errors may have originated from an input operation. Input Source operations connected to node IteratorGetNext: In[0] IteratorV2 (defined at /usr/local/lib/python3.8/dist-packages/tensorflow_estimator/python/estimator/util.py:58)

Operation defined at: (most recent call last)

File "/tmp/pbs.1173981.omics/Bazel.runfiles_pfgek2w5/runfiles/com_google_deepvariant/deepvariant/call_variants.py", line 513, in <module>

tf.compat.v1.app.run()

File "/tmp/pbs.1173981.omics/Bazel.runfiles_pfgek2w5/runfiles/absl_py/absl/app.py", line 300, in run

_run_main(main, args)

File "/tmp/pbs.1173981.omics/Bazel.runfiles_pfgek2w5/runfiles/absl_py/absl/app.py", line 251, in _run_main

sys.exit(main(argv))

File "/tmp/pbs.1173981.omics/Bazel.runfiles_pfgek2w5/runfiles/com_google_deepvariant/deepvariant/call_variants.py", line 494, in main

call_variants(

File "/tmp/pbs.1173981.omics/Bazel.runfiles_pfgek2w5/runfiles/com_google_deepvariant/deepvariant/call_variants.py", line 453, in call_variants

prediction = next(predictions)

File "/usr/local/lib/python3.8/dist-packages/tensorflow_estimator/python/estimator/estimator.py", line 621, in predict

features, input_hooks = self._get_features_from_input_fn(

File "/usr/local/lib/python3.8/dist-packages/tensorflow_estimator/python/estimator/estimator.py", line 1019, in _get_features_from_input_fn

result, _, hooks = estimator_util.parse_input_fn_result(result)

File "/usr/local/lib/python3.8/dist-packages/tensorflow_estimator/python/estimator/util.py", line 60, in parse_input_fn_result

result = iterator.get_next()

Original stack trace for 'IteratorGetNext':

File "/tmp/pbs.1173981.omics/Bazel.runfiles_pfgek2w5/runfiles/com_google_deepvariant/deepvariant/call_variants.py", line 513, in <module>

real 41m45.880s

user 1063m44.358s

sys 25m21.900s

INFO: Cleaning up image...

ERROR: failed to delete container image tempDir /tmp/pbs.1173981.omics/rootfs-2853380811: unlinkat /tmp/pbs.1173981.omics/rootfs-2853380811/tmp-rootfs-1307439201/opt/traps/lib/libmodule64.so: permission denied

singularity/3.10.0 is unloaded

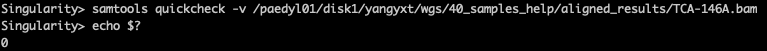

Does the quick start test work on your system? Please test with https://github.com/google/deepvariant/blob/r0.10/docs/deepvariant-quick-start.md. Is there any way to reproduce the issue by using the quick start? No

Any additional context: The input BAM file seems valid. I checked it with the samtools quickcheck -v command.

@yangyxt how large is the bam file you are working with? Is it possible to connect to the singularity image while it is running and run samtools quickcheck -v?

Thank you for the response, and sorry for the late reply.

I tried it, and samtools quickcheck returned success.

I found a thread on the TensorFlow GitHub page that started in 2017 and ran until 2021. I haven't used TensorFlow, so I can't follow the thread. For your information only: https://github.com/tensorflow/tensorflow/issues/13463

@yangyxt was this resolved? From the original error message, it seems to me that the input to call_variants was truncated, which means that your make_examples run might not have completed successfully. Another possible cause: if you have multiple make_examples runs writing to and overwriting the same files, the make_examples output might be corrupted, which would cause the call_variants step to fail.
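One way to test the truncation hypothesis is to check the compression layer of each make_examples shard. The following is a minimal sketch, assuming the shards are gzip files named make_examples.tfrecord-*.gz in the intermediate results directory, as the log above suggests. The name check_shards is a hypothetical helper, and gzip -t only validates the gzip stream, not the TFRecord records inside it.

```shell
# Hypothetical helper: count make_examples shards whose gzip stream is damaged.
# Assumption: shards are named make_examples.tfrecord-*.gz inside the given directory.
check_shards() {
  dir="$1"
  bad=0
  for f in "$dir"/make_examples.tfrecord-*.gz; do
    # Skip the unexpanded pattern when no shard matches.
    [ -e "$f" ] || continue
    # gzip -t reads the whole stream and fails on a truncated or corrupt file.
    if ! gzip -t "$f" 2>/dev/null; then
      echo "corrupt or truncated: $f"
      bad=$((bad + 1))
    fi
  done
  echo "bad shards: $bad"
  # Return success only when every shard passed.
  [ "$bad" -eq 0 ]
}
```

For the run above this could be called as check_shards /paedyl01/disk1/yangyxt/test_tmp. A non-zero exit status would suggest that at least one shard was cut short or overwritten.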

@pichuan Thanks for the response. I haven't resolved this. I do use GNU parallel to run 3 deep-variant docker images in parallel. But the input/output files for each process are different from each other. The only common directory that has parallel writing operations under it is the $TMPDIR or $SINGULARITY_CACHEDIR. Should I make the TMPDIR/SINGULARITY_CACHEDIR unique for each singularity run?

Here is the original singularity command:

    export SINGULARITY_CACHEDIR="/paedyl01/disk1/yangyxt/test_tmp"
    singularity run \
      -B "/paedyl01/disk1/yangyxt,/usr/lib/locale" \
      --env LANG="en_US.UTF-8" \
      --env LC_ALL="C" \
      --env LANGUAGE="en_US.UTF-8" \
      --env LC_CTYPE="UTF-8" \
      --env TMPDIR="/paedyl01/disk1/yangyxt/test_tmp" \
      --env SINGULARITY_CACHEDIR="/paedyl01/disk1/yangyxt/test_tmp" \
      --home "/paedyl01/disk1/yangyxt/home:/home" \
      --workdir /paedyl01/disk1/yangyxt \
      --contain \
      ${container} \
      /opt/deepvariant/bin/run_deepvariant \
      --model_type=${model_type} \
      --ref="${ref_genome}" \
      --reads="${bam_file}" \
      ${region_arg} \
      --output_vcf="${output_vcf}" \
      --output_gvcf="${output_gvcf}" \
      --intermediate_results_dir "/paedyl01/disk1/yangyxt/test_tmp" \
      --num_shards=${threads}

I'm not very familiar with SINGULARITY_CACHEDIR. But, in your command, if you're running it 3 times, you should use a different --intermediate_results_dir. Output of make_examples will be written to that directory. So, if you use the same intermediate_results_dir, that might explain why your data is corrupted.

Thank you. I will try it and report back.

I set up a temporary folder with a random ID in its name for each run, and the issue no longer occurs. Thank you!
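For anyone hitting the same error, a per-run directory can be created roughly as follows. This is only a sketch of the idea described above, not the exact script used in this thread: make_run_dir and the dv_run prefix are hypothetical names, and the base path is the one from the commands earlier in the thread.

```shell
# Hypothetical helper: create a fresh, uniquely named directory under a base path.
make_run_dir() {
  base="$1"
  mkdir -p "$base"
  # mktemp -d replaces the trailing X characters with a random suffix
  # and prints the path of the directory it created.
  mktemp -d "$base/dv_run.XXXXXXXX"
}

# Usage sketch (base path taken from the commands above):
#   run_dir=$(make_run_dir /paedyl01/disk1/yangyxt/test_tmp)
#   ... run_deepvariant ... --intermediate_results_dir "${run_dir}" ...
```

Each parallel job then writes its make_examples shards into its own directory, so concurrent runs cannot overwrite each other's files.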