Eval bug: does llama.cpp support Intel AMX instruction? how to enable it

Name and Version

llama-cli

Operating systems

Linux

GGML backends

AMX

Hardware

XEON 8452Y + NV A40

Models

No response

Problem description & steps to reproduce

as title

First Bad Commit

No response

Relevant log output

as title

Please enable the GGML_AVX512* and GGML_AMX_* build options:

Enable the AVX512 and AMX instruction sets:

cmake llama.cpp -B llama.cpp/build_amx \
  -DGGML_NATIVE=OFF \
  -DGGML_AVX512=ON \
  -DGGML_AVX512_BF16=ON \
  -DGGML_AVX512_VBMI=ON \
  -DGGML_AVX512_VNNI=ON \
  -DGGML_AMX_TILE=ON \
  -DGGML_AMX_INT8=ON \
  -DGGML_AMX_BF16=ON

Build the executables:

cmake --build llama.cpp/build_amx --config Release -j --clean-first \
  --target llama-cli llama-bench llama-server

@montagetao

Thanks for the example command. Did you find that it improved performance of llama.cpp on your newer Intel Xeon with AMX extensions? I'm benchmarking some newer Intel Xeon processors and not seeing the performance I would expect. I will make some notes and open a ticket here tomorrow most likely and keep you posted. There are a few other people I've seen around here and also like you over at ktransformers.

I'm experiencing the same issue. After enabling the AMX and AVX512 instruction sets during the build, the performance of llama-cli hasn't shown significant improvement. My CPU is a Xeon 8452Y. I also tried enabling AMX in ktransformers but haven't succeeded yet.

Thanks for confirming. Okay, time to make some notes and open a ticket about expected performance on newer Intel Xeon that support AMX extensions.

@montagetao ---> https://github.com/ggml-org/llama.cpp/discussions/12088

I just profiled llama.cpp running DeepSeek R1 with Q4_K_M and found that it only uses AVX VNNI, not the AMX instructions.

Got it. So building llama.cpp with AMX is a fake command?

@oldmikeyang @montagetao

I believe at least some AMX extensions do work, but only for certain tensor quantizations (int8 quants exist, but not sure how to run on CPU: https://huggingface.co/meituan/DeepSeek-R1-Block-INT8). Look in llama.cpp/ggml/src/ggml-cpu/amx/ for specific code:

- amx_int8 - yes this seems to work
- amx_tile - yes there is at least code
- amx_bf16 - maybe not (as maybe it uses avx512_bf16 instead?)
- amx_fp8 - not out until next generation Intel Xeon Diamond Rapids (https://www.phoronix.com/news/Intel-AMX-FP8-In-LLVM)

"If the tensor OP type is GGML_OP_MUL_MAT, it will be invoked on Intel AMX supported platform." -aubreyli (https://github.com/ggml-org/llama.cpp/discussions/12088#discussioncomment-12469251)

llama.cpp will tell you how big the AMX buffer is. I just don't know exactly how to quantize the model to maximize AMX usage:

# Q4_K_M

load_tensors: tensor 'token_embd.weight' (q4_K) (and 850 others) cannot be used with preferred buffer type AMX, using CPU instead

load_tensors: AMX model buffer size = 9264.66 MiB

load_tensors: CPU_Mapped model buffer size = 45358.97 MiB

load_tensors: CPU_Mapped model buffer size = 47139.54 MiB

.

.

.

# Q8_0

load_tensors: tensor 'token_embd.weight' (q8_0) (and 54 others) cannot be used with preferred buffer type AMX, using CPU instead

load_tensors: AMX model buffer size = 18214.39 MiB

load_tensors: CPU_Mapped model buffer size = 45565.90 MiB

load_tensors: CPU_Mapped model buffer size = 46661.11 MiB

.

.

.
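To compare how much of a model lands in the AMX buffer versus plain CPU buffers, the load_tensors lines can be summed per buffer type. This is only a rough sketch: it assumes the log format shown above, and LOG is a hypothetical file holding the complete startup output (the excerpts above are truncated, so their totals would be partial).

```shell
# Sum "model buffer size" per buffer type from llama.cpp startup output on stdin.
# Assumes lines of the form: load_tensors: <TYPE> model buffer size = <N> MiB
sum_buffers() {
  awk '/load_tensors:.*model buffer size/ {
         type = $2            # e.g. AMX or CPU_Mapped
         size = $(NF - 1)     # the number just before "MiB"
         total[type] += size
       }
       END { for (t in total) printf "%s %.2f MiB\n", t, total[t] }' | sort
}

# LOG is a hypothetical path to a saved log; nothing runs if it is unset.
LOG=${LOG:-}
if [ -n "$LOG" ] && [ -r "$LOG" ]; then
  sum_buffers < "$LOG"
fi
```

Running the same model at different quantizations and comparing the AMX totals is one way to see which quant types end up in the AMX buffer.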

You can confirm what successfully compiled into the binary as it shows you at the beginning of startup:

$ lscpu | grep 'Model name'

Model name: Intel(R) Xeon(R) 6980P

$ git rev-parse --short HEAD

484a8ab5

build: 4903 (484a8ab5) with cc (Ubuntu 13.3.0-6ubuntu2~24.04) 13.3.0 for x86_64-linux-gnu

system info: n_threads = 24, n_threads_batch = 24, total_threads = 512

system_info: n_threads = 24 (n_threads_batch = 24) / 512 | CPU : SSE3 = 1 | SSSE3 = 1 | AVX = 1 | AVX_VNNI = 1 | AVX2 = 1 | F16C = 1 | FMA = 1 | BMI2 = 1 | AVX512 = 1 | AVX512_VBMI = 1 | AVX512_VNNI = 1 | AVX512_BF16 = 1 | AMX_INT8 = 1 | LLAMAFILE = 1 | OPENMP = 1 | AARCH64_REPACK = 1 |
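If you want to check that startup line from a script rather than by eye, something like the following might work. It is a sketch that assumes the "NAME = 1" layout of the system_info line above; has_feature and LOG are names made up for this example.

```shell
# has_feature NAME: succeeds if the text on stdin reports "NAME = 1" as a whole
# entry, so that SSE3 does not accidentally match inside SSSE3.
has_feature() {
  grep -Eq "(^|[^A-Za-z0-9_])$1 = 1([^0-9]|\$)"
}

# LOG is a hypothetical file with captured llama-cli startup output.
LOG=${LOG:-}
if [ -n "$LOG" ] && [ -r "$LOG" ]; then
  for f in AVX512_VNNI AVX512_BF16 AMX_INT8; do
    if has_feature "$f" < "$LOG"; then
      echo "$f: reported"
    else
      echo "$f: not reported"
    fi
  done
fi
```

Note that in the output above only AMX_INT8 appears among the AMX entries, so that is the one worth checking for.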

Thank you, you are right. I recorded a run of llama.cpp with 'perf' and found that the AMX instructions are executed.
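A related but weaker check is to count AMX mnemonics in a disassembly. This is a sketch, not the method used above: it only shows that AMX code is present in the binary, not that it runs. The library path is an assumption about where the build puts the ggml CPU backend, and objdump needs to be recent enough to decode AMX.

```shell
# Count lines on stdin that contain an AMX tile instruction mnemonic.
count_amx_insns() {
  grep -E -c -w '(ldtilecfg|tilerelease|tilezero|tileloadd|tileloaddt1|tilestored|tdpbssd|tdpbsud|tdpbusd|tdpbuud|tdpbf16ps)' || true
}

# LIB is a hypothetical path; adjust it to wherever your build placed the library.
LIB=${LIB:-llama.cpp/build_amx/bin/libggml-cpu.so}
if [ -r "$LIB" ] && command -v objdump >/dev/null 2>&1; then
  objdump -d --no-show-raw-insn "$LIB" | count_amx_insns
fi
```

The same filter can be fed from `perf annotate --stdio` after a `perf record` run, which gets closer to showing which of those instructions sit in hot code.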

- To enable the AMX build you need GCC 11 or above, and the AMX optimizations are compiled in if the machine has amx-int8 support.

- For decoding scenarios (when M=1 in the GEMM), in my previous patch to enable AMX I use avx512-vnni instead of amx-int8 INTENTIONALLY; I actually wrote both amx-int8 and avx512-vnni implementations for each of the quant formats. The reason is that VNNI performs better in that scenario, while AMX works better for prefill (when M is large).
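Both build prerequisites from the first point can be checked quickly on Linux. The sketch below assumes the usual /proc/cpuinfo flag names (amx_tile, amx_int8, amx_bf16); amx_flags is a helper name made up for this example.

```shell
# Print the distinct amx_* CPU flags found in /proc/cpuinfo-style text on stdin.
amx_flags() {
  { grep -o -w -E 'amx_[a-z0-9]+' || true; } | sort -u
}

# On a machine with AMX this should list amx_int8 among others; no output means no AMX.
if [ -r /proc/cpuinfo ]; then
  amx_flags < /proc/cpuinfo
fi

# The compiler should report version 11 or above.
if command -v gcc >/dev/null 2>&1; then
  gcc -dumpversion
fi
```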

I noticed that some people have tried to use llama.cpp for DeepSeek R1; at the moment, low performance is expected. The MoE modules in llama.cpp need different optimizations (e.g. group GEMM); it is not a matter of using AMX or not. Intel Xeon will also require a special improvement to all-reduce in order to use all the NUMA nodes effectively.

Right now I am actively optimizing DeepSeek V3/R1 on IPEX and also SGLang, and that job is about to be finished (this is our company-level target, while llama.cpp is more of a leisure-time project for me). Once this job is done, I will come back to optimize llama.cpp to improve DeepSeek performance.

@mingfeima

Thank you for the additional context and taking your time to explain. I understand now and that all makes sense.

The moe modules in llama.cpp needs different optimizations (e.g. group gemm), it is not a matter of using amx or not. And also intel Xeon will require a special improvement for all-reduce to use all the numa nodes effectively.

Yes, this lines up with my testing on a dual socket Intel Xeon 6980P for CPU only inference of DeepSeek-R1 671B. I can't use ktransformer's USE_NUMA=1 compile option for data parallel (load entire model into RAM twice) because there is no GPU.

The best speed benchmark I have recorded so far is with FlashMLA-2 and other optimizations implemented on the ik_llama.cpp fork.

I wonder if some kind of asynchronous ØMQ tensor parallel NUMA aware all-reduce implementation would work. Or does it take special Intel Xeon UPI stuff to get low latency and high throughput. It seems challenging to use simple shared memory without CUDA P2P, NVLINK, or RDMA networking.

I see your github issue on the SGLang project for DeepSeek R1 CPU inference. I will keep an eye on that and hope to try on the dual socket Intel Xeon 6980P if it supports CPU only backend.

Thanks for all your efforts and enjoy your day!

@ubergarm I happen to know the people who are doing the ktransformer project. Its idea of utilizing Xeon (large memory) to host MoE and GPU for other layers is fascinating. We plan to borrow the idea for SGLang, but that will be the next step.

Right now, we are focused on getting the best out of a CPU-only platform. Our measured TPOT for 1k input and 1k output on a single 6980 is roughly 70 ms (14 tokens per second) with int8 w8a16; hopefully we can eventually reach 20 tokens per second.
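For reference, TPOT (time per output token) and tokens per second are reciprocals of each other, so the figures above convert as follows. The helper names are made up for this example.

```shell
# Convert TPOT in milliseconds to tokens per second, and back.
tpot_to_tps() { awk -v ms="$1" 'BEGIN { printf "%.1f\n", 1000 / ms }'; }
tps_to_tpot() { awk -v tps="$1" 'BEGIN { printf "%.1f\n", 1000 / tps }'; }

tpot_to_tps 70   # measured TPOT of about 70 ms: prints 14.3
tps_to_tpot 20   # TPOT needed for the 20 tokens/s goal: prints 50.0
```

So the 20 tokens per second target corresponds to bringing TPOT down from roughly 70 ms to 50 ms.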

Apart from the AMX instructions, the profile also shows that a lot of CPU time is spent waiting in the OpenMP GOMP_barrier, so the CPU cores are not fully used. I tried changing the number of CPU cores from 32 to 42, and there appears to be no performance improvement from adding more cores.

Here is the profile on 1 CPU core.

@oldmikeyang

Yes, performance is not limited by whether AMX is used. The issue is optimizing the matrix calculations so that the optimal number of threads per CPU chiplet per NUMA node stays active, works out of L2 cache as much as possible, and only accesses NUMA-local RAM.

The best discussion on it is here with @zts9989, where they show that using fewer threads, distributed appropriately, improves performance. More threads improperly allocated just sit around waiting. You have to run llama-bench -t 16,32,64,... to find the best number of CPU cores for both prompt processing and token generation for your exact configuration.
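A thread sweep along those lines could be scripted roughly like this. It is a sketch: thread_sweep is a helper made up for this example, MODEL is a hypothetical path to your GGUF file, and the prompt and generation lengths (-p 512 -n 128) are arbitrary choices rather than recommendations.

```shell
# Build a comma-separated list of thread counts, doubling from START up to MAX.
thread_sweep() {
  sweep_t=$1
  sweep_max=$2
  sweep_out=""
  while [ "$sweep_t" -le "$sweep_max" ]; do
    sweep_out="${sweep_out:+$sweep_out,}$sweep_t"
    sweep_t=$((sweep_t * 2))
  done
  printf '%s\n' "$sweep_out"
}

# Only runs when MODEL is set and llama-bench is on PATH.
MODEL=${MODEL:-}
if [ -n "$MODEL" ] && command -v llama-bench >/dev/null 2>&1; then
  # -p measures prompt processing, -n measures token generation
  llama-bench -m "$MODEL" -t "$(thread_sweep 16 128)" -p 512 -n 128
fi
```

Since prompt processing and token generation may peak at different thread counts, it is worth reading the two result columns separately.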

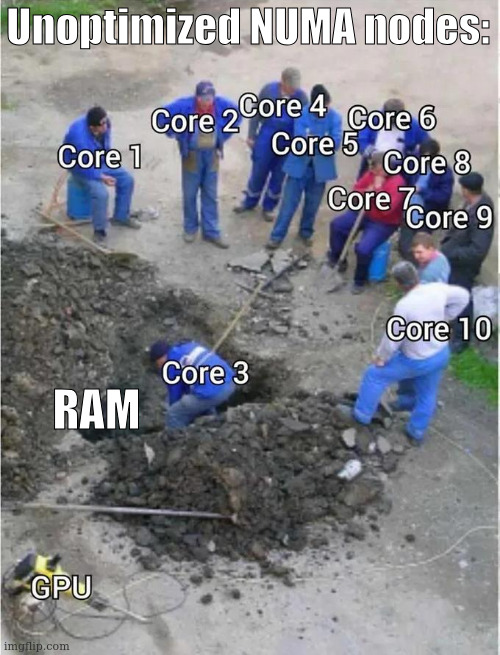

I created a technical diagram to help show the underlying issue:

I have been testing a new fork called ik_llama.cpp, which has some optimizations and already seems to perform faster, even without additional NUMA optimizations.

Good luck!

This issue was closed because it has been inactive for 14 days since being marked as stale.

Hi @mingfeima, could you share the memory bandwidth for this testing? 6400 MT/s * 12 or 8800 MT/s * 12? By the way, was this performance measured on SGLang?

@blossomin the whole work is upstreamed to sglang now, you can follow: https://lmsys.org/blog/2025-07-14-intel-xeon-optimization/.