Downloading a folder with recursive=True raises FileNotFoundError, but the download still works

What happened:

I was debugging something over at adlfs (https://github.com/dask/adlfs/issues/120) and wanted to compare the behavior of s3fs.

What you expected to happen:

The folder is downloaded to the local filesystem without an error.

Minimal Complete Verifiable Example:

This downloads the remote folder '00' to a local folder '00':

```python
import s3fs

fs = s3fs.S3FileSystem(anon=True)
fs.get("noaa-goes16/ABI-L2-MCMIPC/2020/001/00", "00", recursive=True)
```

This raises:

```
>>> fs.get("noaa-goes16/ABI-L2-MCMIPC/2020/001/00", "00", recursive=True)
Traceback (most recent call last):
  File "/Users/Ray/miniconda3/envs/main/lib/python3.8/site-packages/s3fs/core.py", line 207, in _call_s3
    return await method(**additional_kwargs)
  File "/Users/Ray/miniconda3/envs/main/lib/python3.8/site-packages/aiobotocore/client.py", line 151, in _make_api_call
    raise error_class(parsed_response, operation_name)
botocore.errorfactory.NoSuchKey: An error occurred (NoSuchKey) when calling the GetObject operation: The specified key does not exist.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/Users/Ray/miniconda3/envs/main/lib/python3.8/site-packages/fsspec/asyn.py", line 278, in get
    return sync(self.loop, self._get, rpaths, lpaths)
  File "/Users/Ray/miniconda3/envs/main/lib/python3.8/site-packages/fsspec/asyn.py", line 68, in sync
    raise exc.with_traceback(tb)
  File "/Users/Ray/miniconda3/envs/main/lib/python3.8/site-packages/fsspec/asyn.py", line 52, in f
    result[0] = await future
  File "/Users/Ray/miniconda3/envs/main/lib/python3.8/site-packages/fsspec/asyn.py", line 263, in _get
    return await asyncio.gather(
  File "/Users/Ray/miniconda3/envs/main/lib/python3.8/site-packages/s3fs/core.py", line 694, in _get_file
    resp = await self._call_s3(
  File "/Users/Ray/miniconda3/envs/main/lib/python3.8/site-packages/s3fs/core.py", line 225, in _call_s3
    raise translate_boto_error(err) from err
FileNotFoundError: The specified key does not exist.
```

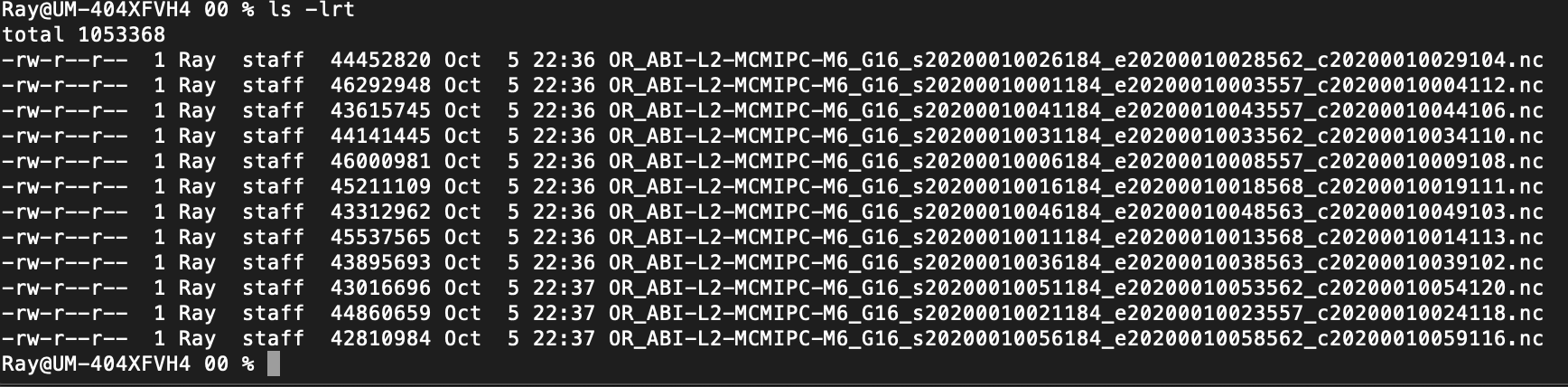

However, the download keeps running in the background and still produces the expected local files.

Anything else we need to know?:

Environment:

- Dask version: 2.29.0
- Python version: 3.8.5
- Operating System: Mac
- Install method (conda, pip, source): Conda

Would you mind formulating this as a test in a PR, so I can more easily make a fix? I imagine it is trying to get the "root" of the set of directories which, because this is S3, is not really a file or a directory on the remote.

ref: https://github.com/intake/filesystem_spec/issues/378

I ran into this problem recently; my analysis is below. I have the following bucket structure:

s3://test-bucket-s3fs/test_folder/Sample10k.csv

The objective is to download test_folder recursively using the code below:

```python
from s3fs import S3FileSystem

S3FileSystem().download(
    rpath='s3://test-bucket-s3fs/test_folder/',
    lpath='/tmp/test_folder',
    recursive=True,
)
```

When the path is expanded, rpaths becomes

['test-bucket-s3fs/test_folder', 'test-bucket-s3fs/test_folder/', 'test-bucket-s3fs/test_folder/Sample10k.csv']

and _get_file is applied to each of these paths. test-bucket-s3fs/test_folder is not a valid key in AWS, hence the error.
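To make the analysis concrete, here is a minimal pure-Python model (not s3fs code) of the bucket structure above. It treats the bucket as a set of object keys and keeps only the expanded rpaths that name a real file object. Two assumptions: `test_folder/` exists as a zero-byte folder marker (the way the S3 console creates folders), and `downloadable_paths` is a hypothetical helper name used only for illustration.

```python
# Hypothetical model of the bucket: the set of object keys that actually exist.
# Assumption: "test_folder/" is a zero-byte folder marker object.
OBJECT_KEYS = {
    "test-bucket-s3fs/test_folder/",
    "test-bucket-s3fs/test_folder/Sample10k.csv",
}


def downloadable_paths(rpaths, object_keys):
    """Keep only rpaths that name a real, non-marker object.

    A bare prefix such as "test-bucket-s3fs/test_folder" has no object behind it,
    so a GetObject call on it fails with NoSuchKey. A trailing-slash marker is a
    directory placeholder, not a file to write locally.
    """
    return sorted(p for p in rpaths if p in object_keys and not p.endswith("/"))


rpaths = [
    "test-bucket-s3fs/test_folder",
    "test-bucket-s3fs/test_folder/",
    "test-bucket-s3fs/test_folder/Sample10k.csv",
]
missing = [p for p in rpaths if p not in OBJECT_KEYS]
print(missing)  # ['test-bucket-s3fs/test_folder']
print(downloadable_paths(rpaths, OBJECT_KEYS))  # ['test-bucket-s3fs/test_folder/Sample10k.csv']
```

Filtering the expanded paths this way is one possible approach; whether the fix belongs in `expand_path`, `find`, or `get` is the open question in the rest of this thread.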

This can be validated by downloading that bare prefix on its own:

```python
from s3fs import S3FileSystem

S3FileSystem().download(
    rpath='s3://test-bucket-s3fs/test_folder',
    lpath='/tmp/test_folder',
)
```

PS. Because _get_file runs asynchronously, we may get the impression that the download has completed, but that depends on the order in which the file paths are processed, and that order is not guaranteed.
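The "still runs in the background" effect can be reproduced with plain asyncio. The first traceback in this thread shows fsspec's `_get` awaiting `asyncio.gather(...)`; by default, `gather` re-raises the first exception immediately but does not cancel the other awaitables. The sketch below assumes a hypothetical `fake_get_file` coroutine in place of s3fs's `_get_file`; it is an illustration of the asyncio semantics, not fsspec's actual code.

```python
import asyncio


async def fake_get_file(rpath, done):
    """Stand-in for an async per-file download (hypothetical, not s3fs's _get_file)."""
    await asyncio.sleep(0.01)
    if not rpath.endswith(".csv"):
        # Mimic GetObject on a bare prefix: there is no object behind it.
        raise FileNotFoundError(rpath)
    await asyncio.sleep(0.05)  # the real files take longer to "download"
    done.append(rpath)


async def main(rpaths):
    done = []
    tasks = [asyncio.create_task(fake_get_file(p, done)) for p in rpaths]
    error = None
    try:
        await asyncio.gather(*tasks)
    except FileNotFoundError as exc:
        error = exc  # gather() surfaces the first failure right away...
    finished_at_error = list(done)
    # ...but the sibling tasks were not cancelled and keep running to completion.
    await asyncio.gather(*tasks, return_exceptions=True)
    return error, finished_at_error, sorted(done)


error, finished_at_error, done = asyncio.run(main([
    "bucket/test_folder",
    "bucket/test_folder/a.csv",
    "bucket/test_folder/b.csv",
]))
print(repr(error))        # FileNotFoundError('bucket/test_folder')
print(finished_at_error)  # []
print(done)               # ['bucket/test_folder/a.csv', 'bucket/test_folder/b.csv']
```

In fsspec the event loop runs in a separate thread, so the remaining downloads can keep going after the caller has already received the exception, which matches what was reported at the top of this issue.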

I have encountered this problem with recursive too. My environment: Python 3.6.9, s3fs 0.5.0, fsspec 0.8.0.

Case 1:

```python
import s3fs

fs = s3fs.S3FileSystem()
fs.get("s3://bucket/dir/", "./local/", recursive=True)
```

Here, s3://bucket/dir/ contains a nested directory, and ./local has already been created.

It fails with:

```
  File "s3test.py", line 20, in <module>
    fs.get("s3://bucket/dir/", "./local/", recursive=True)
  File "/usr/local/lib/python3.6/dist-packages/fsspec/asyn.py", line 244, in get
    return sync(self.loop, self._get, rpaths, lpaths)
  File "/usr/local/lib/python3.6/dist-packages/fsspec/asyn.py", line 51, in sync
    raise exc.with_traceback(tb)
  File "/usr/local/lib/python3.6/dist-packages/fsspec/asyn.py", line 35, in f
    result[0] = await future
  File "/usr/local/lib/python3.6/dist-packages/fsspec/asyn.py", line 233, in _get
    for lpath, rpath in zip(lpaths, rpaths)
  File "/usr/local/lib/python3.6/dist-packages/s3fs/core.py", line 649, in _get_file
    with open(lpath, "wb") as f0:
FileNotFoundError: [Errno 2] No such file or directory: '/root/local/variables/variables.index'
```

I tried the same case with s3fs==0.4.2 and the problem is gone :D
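Unlike the NoSuchKey error above, the Case 1 traceback fails locally, at `open(lpath, "wb")`, for a nested path. One plausible reading is that the local parent directory (`variables/`) had not been created before the file was opened. A minimal stdlib sketch of that failure and the usual remedy; `write_local` is a hypothetical helper, not an s3fs function.

```python
import os
import tempfile


def write_local(lpath, data):
    """Write downloaded bytes to lpath, creating missing parent directories first.

    open(lpath, "wb") on its own raises FileNotFoundError when a nested directory
    such as ./local/variables/ does not exist yet.
    """
    os.makedirs(os.path.dirname(lpath) or ".", exist_ok=True)
    with open(lpath, "wb") as f:
        f.write(data)


with tempfile.TemporaryDirectory() as local:
    lpath = os.path.join(local, "variables", "variables.index")
    try:
        open(lpath, "wb").close()  # plain open(): the parent directory is missing
        plain_open_failed = False
    except FileNotFoundError:
        plain_open_failed = True
    write_local(lpath, b"example bytes")
    with open(lpath, "rb") as f:
        written = f.read()

print(plain_open_failed, written)  # True b'example bytes'
```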

Case 2:

```python
import s3fs

fs = s3fs.S3FileSystem()
fs.get("s3://bucket/dir/*", "./local/", recursive=True)
```

This variant uses a glob pattern ("magic" in fsspec terms). It fails with:

```
  File "s3test.py", line 20, in <module>
    fs.get("s3://bucket/dir/*", "./local/", recursive=True)
  File "/usr/local/lib/python3.6/dist-packages/fsspec/asyn.py", line 242, in get
    rpaths = self.expand_path(rpath, recursive=recursive)
  File "/usr/local/lib/python3.6/dist-packages/fsspec/spec.py", line 724, in expand_path
    out = self.expand_path([path], recursive, maxdepth)
  File "/usr/local/lib/python3.6/dist-packages/fsspec/spec.py", line 732, in expand_path
    out += self.expand_path(p)
TypeError: unsupported operand type(s) for +=: 'set' and 'list'
```

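I looked at the code in fsspec, and I believe this is a compatibility problem with that fsspec version: expand_path builds out as a set and then tries to extend it in place with a list.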
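The Case 2 `TypeError` comes from plain Python semantics: `set` supports `|=` with another set, but not `+=` with a list. A minimal reproduction, with both working alternatives (the patched `expand_path` later in this thread uses the `|= set(...)` form):

```python
# Reproduce the Case 2 TypeError: a set cannot be extended in place with a list.
out = {"bucket/dir/a"}
try:
    out += ["bucket/dir/b"]
except TypeError as exc:
    message = str(exc)
print(message)  # unsupported operand type(s) for +=: 'set' and 'list'

# Either convert to a set and use |=, or call set.update(), which accepts any iterable.
out |= set(["bucket/dir/b"])
out.update(["bucket/dir/c"])
print(sorted(out))  # ['bucket/dir/a', 'bucket/dir/b', 'bucket/dir/c']
```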

@kuroiiwa your last issue is filed here: https://github.com/dask/s3fs/issues/253

Our FileNotFoundError goes away when expand_path does not add the search path itself (the root directory) to its output:

```python
def expand_path(self, path, recursive=False, maxdepth=None):
    if isinstance(path, str):
        out = self.expand_path([path], recursive, maxdepth)
    else:
        out = set()
        path = [self._strip_protocol(p) for p in path]
        for p in path:
            if has_magic(p):
                bit = set(self.glob(p))
                out |= bit
                if recursive:
                    out |= set(self.expand_path(p))
                continue
            elif recursive:
                # fix a: the withdirs arg is ignored in S3FileSystem, yet dirs should be
                # left out, since they raise errors during .get()
                rec = (
                    f
                    for f, detail in self.find(
                        p, withdirs=None, maxdepth=maxdepth, detail=True
                    ).items()
                    if detail["type"] != "directory"
                )
                out |= set(rec)
            # fix b: again, remove dir
            if p not in out and (recursive is False or self.info(p)["type"] != "directory"):
                # should only check once, for the root
                out.add(p)
    if not out:
        raise FileNotFoundError(path)
    return list(sorted(out))
```

Note that this is a preliminary fix. Fix a) actually wasn't necessary in our integration tests. Still, I find it odd that get() adds directories to rpaths at all.

The most useful way to proceed would be to write up the cases above into test functions to be added into s3fs's test suite in a new PR - and then we can get onto fixing them. Obviously I'd rather not touch expand_path if possible, but we shall see.
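The test functions requested above might take roughly the following shape. This is a stdlib-only sketch: `toy_get_recursive` is a hypothetical stand-in that encodes the behavior this thread expects from `fs.get(rpath, lpath, recursive=True)` (skip pseudo-directories and folder markers, create nested local directories, accept the prefix with or without a trailing slash). A real s3fs test would call the filesystem itself against a test bucket rather than this toy.

```python
import os
import tempfile
import unittest


def toy_get_recursive(objects, rpath, lpath):
    """Hypothetical stand-in for fs.get(rpath, lpath, recursive=True).

    objects maps object key -> bytes. Only real, non-marker keys under rpath are
    downloaded, and local parent directories are created as needed.
    """
    prefix = rpath.rstrip("/") + "/"
    for key, data in objects.items():
        if not key.startswith(prefix) or key.endswith("/"):
            continue  # outside the prefix, or a folder marker
        target = os.path.join(lpath, *key[len(prefix):].split("/"))
        os.makedirs(os.path.dirname(target), exist_ok=True)
        with open(target, "wb") as f:
            f.write(data)


class TestRecursiveGet(unittest.TestCase):
    # Hypothetical bucket contents, including a nested directory and a folder marker.
    OBJECTS = {
        "bucket/dir/": b"",
        "bucket/dir/a.csv": b"a",
        "bucket/dir/nested/b.csv": b"b",
    }

    def _check(self, rpath):
        with tempfile.TemporaryDirectory() as local:
            toy_get_recursive(self.OBJECTS, rpath, local)  # must not raise
            with open(os.path.join(local, "a.csv"), "rb") as f:
                self.assertEqual(f.read(), b"a")
            with open(os.path.join(local, "nested", "b.csv"), "rb") as f:
                self.assertEqual(f.read(), b"b")

    def test_with_trailing_slash(self):
        self._check("bucket/dir/")

    def test_without_trailing_slash(self):
        self._check("bucket/dir")
```

Saved as a module, this runs with `python -m unittest`.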

Note that in s3fs, we override find() to use a one-shot call to list files for downloading a directory or evaluating globs, and here we create a set of pseudo-folders to return, inferred from the file names. Also, there is a TODO in that method, to fill in the directory listings cache, so that any other call would know that a given path is a directory without having to call S3 again.

^ I should point out that the root path being listed in find is special: if find returns anything, the root definitely exists, but the root itself is not normally returned.
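A toy model of the listing behavior described in the two comments above: one flat list of object keys, pseudo-folders inferred from the key names, and the root path never included in the result. The `find` function and the example keys below are hypothetical illustrations, not s3fs's implementation or its real `find()` signature.

```python
def find(keys, root, withdirs=False):
    """Toy find() over a flat list of object keys (hypothetical, not s3fs's code).

    Pseudo-folders are inferred from the key names. The root itself is never
    returned; a non-empty result only proves that it exists.
    """
    prefix = root.rstrip("/") + "/"
    # Real files under the root; trailing-slash keys are folder markers.
    files = {k for k in keys if k.startswith(prefix) and not k.endswith("/")}
    dirs = set()
    if withdirs:
        for f in files:
            parts = f[len(prefix):].split("/")[:-1]  # folder components below root
            for i in range(1, len(parts) + 1):
                dirs.add(prefix + "/".join(parts[:i]))
    return sorted(files | dirs)


keys = [
    "bucket/dir/",
    "bucket/dir/variables/variables.index",
    "bucket/dir/saved_model.pb",
]
print(find(keys, "bucket/dir"))
# ['bucket/dir/saved_model.pb', 'bucket/dir/variables/variables.index']
print(find(keys, "bucket/dir", withdirs=True))
# ['bucket/dir/saved_model.pb', 'bucket/dir/variables', 'bucket/dir/variables/variables.index']
```

Because the root is never part of the output, a caller such as `expand_path` has to decide separately whether to add it, which is exactly where the bare `bucket/dir` key enters rpaths in the reports above.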

Any updates on this, @martindurant? I am experiencing the same behavior: when I call get() with the recursive flag to copy an S3 directory to a local directory, I get:

s3fs version: 2022.3.0

```
  File "/home/devrimcavusoglu/anaconda3/envs/prjct/lib/python3.9/site-packages/s3fs/core.py", line 282, in _call_s3
    out = await method(**additional_kwargs)
  File "/home/devrimcavusoglu/anaconda3/envs/prjct/lib/python3.9/site-packages/aiobotocore/client.py", line 228, in _make_api_call
    raise error_class(parsed_response, operation_name)
botocore.errorfactory.NoSuchKey: An error occurred (NoSuchKey) when calling the GetObject operation: The specified key does not exist.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "/home/devrimcavusoglu/project/aws/s3.py", line 86, in <module>
    download(src, dst)
  File "/home/devrimcavusoglu/project/aws/s3.py", line 75, in download
    s3.get(source.as_posix(), destination.as_posix(), recursive=True)
  File "/home/devrimcavusoglu/anaconda3/envs/project/lib/python3.9/site-packages/fsspec/asyn.py", line 85, in wrapper
    return sync(self.loop, func, *args, **kwargs)
  File "/home/devrimcavusoglu/anaconda3/envs/project/lib/python3.9/site-packages/fsspec/asyn.py", line 65, in sync
    raise return_result
  File "/home/devrimcavusoglu/anaconda3/envs/project/lib/python3.9/site-packages/fsspec/asyn.py", line 25, in _runner
    result[0] = await coro
  File "/home/devrimcavusoglu/anaconda3/envs/project/lib/python3.9/site-packages/fsspec/asyn.py", line 520, in _get
    return await _run_coros_in_chunks(
  File "/home/devrimcavusoglu/anaconda3/envs/project/lib/python3.9/site-packages/fsspec/asyn.py", line 241, in _run_coros_in_chunks
    await asyncio.gather(*chunk, return_exceptions=return_exceptions),
  File "/home/devrimcavusoglu/anaconda3/envs/project/lib/python3.9/asyncio/tasks.py", line 442, in wait_for
    return await fut
  File "/home/devrimcavusoglu/anaconda3/envs/project/lib/python3.9/site-packages/s3fs/core.py", line 1025, in _get_file
    resp = await self._call_s3(
  File "/home/devrimcavusoglu/anaconda3/envs/project/lib/python3.9/site-packages/s3fs/core.py", line 302, in _call_s3
    raise err
FileNotFoundError: The specified key does not exist.
```