Training disrupted after setting shuffle=True because env.reset() detects missing vehicles

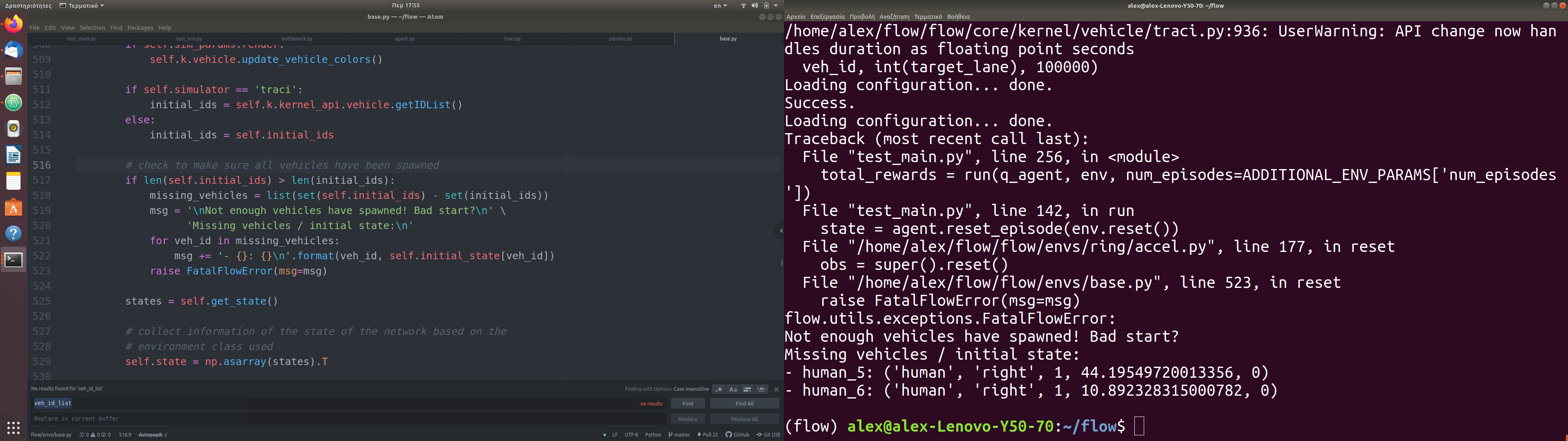

I'm training an RL agent with a Q-learning algorithm, and env.reset() is called every time an episode completes. If both the `shuffle` and `restart_instance` toggles are false, this can only happen at the very start of the program, not during training. If either of them is true, however, then after a random number of episodes a vehicle may fail to be re-introduced into the network. The error below catches this and training is terminated. After I commented out that part of the code, I noticed that the vehicles that did not appear at the start eventually do appear in the simulation, somewhat delayed. If the missing vehicle is the RL agent, though, the simulation terminates and my training progress is lost. I have also encountered a similar error when, for example, I run one of the examples (sugiyama) with more than the default 1 episode.

Sorry to hear this! Could you post the full error log?

That would help us diagnose the issue.

The error is produced by /envs/base.py, which is unchanged from the source and doesn't use logging.error(). The error message comes from the FatalFlowError shown in the screenshot. Also, the reset() function in the same file (from source) contains plenty of FIXME notes that would need addressing before I could resolve the issue.
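For context, the failing check appears to compare the vehicles that were supposed to be placed on reset with the ones actually present, then raise with a list of the missing ones. The sketch below imitates that behaviour in plain Python so it runs without SUMO. `MissingVehiclesError`, `check_spawned`, and the `(type, edge, lane, pos, speed)` tuple layout are assumptions inferred from the `- human_7: (...)` lines in the logs in this thread; this is not Flow's actual code.

```python
class MissingVehiclesError(RuntimeError):
    """Hypothetical stand-in for Flow's FatalFlowError."""


def check_spawned(initial_state, present_ids):
    """Raise if any vehicle in initial_state is absent from present_ids.

    initial_state maps veh_id -> (type_id, edge, lane, pos, speed); this
    layout is assumed from the log lines, not taken from Flow's source.
    """
    missing = sorted(set(initial_state) - set(present_ids))
    if missing:
        lines = ["Some vehicles were not (re)inserted after reset:"]
        for veh_id in missing:
            lines.append("- {}: {}".format(veh_id, initial_state[veh_id]))
        raise MissingVehiclesError("\n".join(lines))


# Usage: two of the three expected vehicles are absent after "reset".
initial_state = {
    "human_7": ("human", "left", 1, 74.69848318325853, 0),
    "human_9": ("human", "left", 2, 32.081819692289855, 0),
    "rl_0": ("rl", "left", 0, 43.82147741497402, 0),
}
try:
    check_spawned(initial_state, present_ids=["rl_0"])
except MissingVehiclesError as err:
    print(err)
```

Printing one line per missing vehicle, with its intended placement, is what makes the logs in this thread useful for spotting patterns in which vehicles fail to spawn.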

Also, as I mentioned above, running an experiment from any of the existing source examples with more than one run results in termination. Does that not happen for you? Could it be a faulty installation?
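One way to check the multiple-runs case independently of any training code is to reset the environment repeatedly and record the first reset that fails. This is a hypothetical diagnostic sketch: `first_failing_reset` assumes only a Gym-style `reset()` method, and `ToyEnv` is a stand-in for a Flow environment so the snippet runs without SUMO.

```python
import random


def first_failing_reset(env, n_resets):
    """Reset env up to n_resets times.

    Returns (index, error) for the first failing reset, or None if all succeed.
    """
    for i in range(n_resets):
        try:
            env.reset()
        except Exception as err:  # broad on purpose: we only want to locate the failure
            return i, err
    return None


class ToyEnv:
    """Stand-in env whose reset fails with probability p (seeded for repeatability)."""

    def __init__(self, p, seed=0):
        self.p = p
        self.rng = random.Random(seed)

    def reset(self):
        if self.rng.random() < self.p:
            raise RuntimeError("vehicle missing after reset")
        return []


print(first_failing_reset(ToyEnv(p=0.1), 100))
```

With a real environment you would pass the env instance instead of `ToyEnv`; if resets alone never fail, the problem is more likely tied to the state left behind by stepping through an episode.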

Sorry, but when it errors, could you post the entire error output? Your screenshot is hard for me to read.

```
(flow) alex@alex-Lenovo-Y50-70:~/flow$ python test_main.py
Loading configuration... done.
Success.
Loading configuration... done.
/home/alex/flow/flow/core/kernel/vehicle/traci.py:936: UserWarning: API change now handles duration as floating point seconds
  veh_id, int(target_lane), 100000)
Traceback (most recent call last):
  File "test_main.py", line 334, in
- human_7: ('human', 'left', 1, 74.69848318325853, 0)
- human_9: ('human', 'left', 2, 32.081819692289855, 0)
```

```
(flow) alex@alex-Lenovo-Y50-70:~/flow/examples/sumo$ python sugiyama.py
Loading configuration... done.
Success.
Loading configuration... done.
Round 0, return: 380.50609821938536
Traceback (most recent call last):
  File "/home/alex/flow/flow/envs/base.py", line 487, in reset
    speed=speed)
  File "/home/alex/flow/flow/core/kernel/vehicle/traci.py", line 1025, in add
    departSpeed=str(speed))
  File "/home/alex/anaconda3/envs/flow/lib/python3.6/site-packages/traci/_vehicle.py", line 1427, in add
    self._connection._sendExact()
  File "/home/alex/anaconda3/envs/flow/lib/python3.6/site-packages/traci/connection.py", line 106, in _sendExact
    raise TraCIException(err, prefix[1], _RESULTS[prefix[2]])
traci.exceptions.TraCIException: Invalid departLane definition for vehicle 'idm_0'; must be one of ("random", "free", "allowed", "best", "first", or an int>=0)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "sugiyama.py", line 68, in
```
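The `TraCIException` in the sugiyama log lists the departLane values SUMO accepts. A small pre-check like the hypothetical `validate_depart_lane` below, applied to the lane value before it is handed to TraCI, would at least show which value is bad (for example, a negative index). It only encodes the rule quoted in the error message and is not part of Flow or TraCI.

```python
# Keywords accepted for departLane, as quoted in the TraCIException above.
ALLOWED_LANE_KEYWORDS = {"random", "free", "allowed", "best", "first"}


def validate_depart_lane(veh_id, depart_lane):
    """Return depart_lane as a string TraCI should accept, or raise ValueError.

    Accepts one of ALLOWED_LANE_KEYWORDS or an integer >= 0.
    """
    value = str(depart_lane)
    if value in ALLOWED_LANE_KEYWORDS:
        return value
    try:
        index = int(value)
    except ValueError:
        raise ValueError(
            "Invalid departLane {!r} for vehicle {!r}".format(depart_lane, veh_id)
        ) from None
    if index < 0:
        raise ValueError(
            "Negative departLane {} for vehicle {!r}".format(index, veh_id)
        )
    return str(index)


print(validate_depart_lane("idm_0", 0))       # prints 0
print(validate_depart_lane("idm_0", "free"))  # prints free
```

Logging the offending value at this point would show whether the lane index computed during reset is out of range or of the wrong type.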

I'm also running into this bug with the RLlib tutorial (tutorial 3). It's not very noticeable, since RLlib catches the error and tries again afterwards, but if you run it without RLlib, the error appears the second time you reset the environment.
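Outside RLlib, the catch-and-retry behaviour described here can be approximated with a small wrapper. This is a hedged workaround sketch, not a fix: it hides the underlying insertion failure and may change the starting configuration between attempts. `reset_with_retry`, `max_attempts`, and the default of retrying on any `Exception` are assumptions; with Flow you would probably pass its `FatalFlowError` class as `retry_on`.

```python
def reset_with_retry(env, max_attempts=3, retry_on=(Exception,)):
    """Call env.reset(), retrying up to max_attempts times on the given errors."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return env.reset()
        except retry_on as err:  # e.g. Flow's FatalFlowError (assumption)
            last_error = err
            print("reset attempt {} failed: {}".format(attempt, err))
    raise last_error


class FlakyEnv:
    """Toy env whose first reset fails, mimicking the intermittent error."""

    def __init__(self):
        self.calls = 0

    def reset(self):
        self.calls += 1
        if self.calls == 1:
            raise RuntimeError("missing vehicle rl_0")
        return [0.0, 0.0]


obs = reset_with_retry(FlakyEnv())
```

Keeping the retry count small and logging each failure makes it easier to notice if the workaround starts masking a systematic problem.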

The issue seems more likely when the ring is randomly initialised so small that there is very little space between the cars. Increasing the ring length seems to reduce the chance of hitting it.
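A rough way to see why shorter rings make this worse is to compute the average slack per vehicle: `ring_length / n_vehicles - vehicle_length - min_gap`. The sketch assumes 22 vehicles of 5 m each, which are illustrative numbers rather than values taken from the tutorial, and SUMO's default `minGap` of 2.5 m; substitute the values from your own vehicle and network params. The function name is hypothetical.

```python
def average_free_gap(ring_length, n_vehicles, vehicle_length=5.0, min_gap=2.5):
    """Average slack per vehicle on a ring after vehicle length and minGap.

    Values near or below zero suggest that not all vehicles can be inserted.
    """
    if n_vehicles <= 0:
        raise ValueError("n_vehicles must be positive")
    return ring_length / n_vehicles - vehicle_length - min_gap


for length in (200, 230, 1500):
    print(length, round(average_free_gap(length, 22), 2))
```

Under these assumptions a 230 m ring leaves roughly 3 m of slack per vehicle. This is only an average: with randomised (shuffled) positions, individual gaps can be much smaller.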

Any updates on this?

When I ran tutorial 3, I hit the same error. The error log is as follows:

```
Failure # 1 (occurred at 2024-08-14_15-30-20)
Traceback (most recent call last):
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/tune/trial_runner.py", line 426, in _process_trial
    result = self.trial_executor.fetch_result(trial)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/tune/ray_trial_executor.py", line 378, in fetch_result
    result = ray.get(trial_future[0], DEFAULT_GET_TIMEOUT)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/worker.py", line 1457, in get
    raise value.as_instanceof_cause()
ray.exceptions.RayTaskError(FatalFlowError): ray::PPO.train() (pid=144533, ip=10.41.2.44)
  File "python/ray/_raylet.pyx", line 636, in ray._raylet.execute_task
  File "python/ray/_raylet.pyx", line 619, in ray._raylet.execute_task.function_executor
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/agents/trainer.py", line 444, in train
    raise e
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/agents/trainer.py", line 433, in train
    result = Trainable.train(self)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/tune/trainable.py", line 176, in train
    result = self._train()
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/agents/trainer_template.py", line 129, in _train
    fetches = self.optimizer.step()
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/optimizers/multi_gpu_optimizer.py", line 140, in step
    self.num_envs_per_worker, self.train_batch_size)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/optimizers/rollout.py", line 29, in collect_samples
    next_sample = ray_get_and_free(fut_sample)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/utils/memory.py", line 33, in ray_get_and_free
    result = ray.get(object_ids)
ray.exceptions.RayTaskError(FatalFlowError): ray::RolloutWorker.sample() (pid=144532, ip=10.41.2.44)
  File "python/ray/_raylet.pyx", line 636, in ray._raylet.execute_task
  File "python/ray/_raylet.pyx", line 619, in ray._raylet.execute_task.function_executor
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/evaluation/rollout_worker.py", line 471, in sample
    batches = [self.input_reader.next()]
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/evaluation/sampler.py", line 56, in next
    batches = [self.get_data()]
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/evaluation/sampler.py", line 99, in get_data
    item = next(self.rollout_provider)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/evaluation/sampler.py", line 305, in _env_runner
    base_env.poll()
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/env/base_env.py", line 312, in poll
    self.new_obs = self.vector_env.vector_reset()
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/env/vector_env.py", line 100, in vector_reset
    return [e.reset() for e in self.envs]
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/env/vector_env.py", line 100, in
- human_11: ('human', 'right', 0, 50.17162630275448, 0)
- human_16: ('human', 'top', 0, 46.13150154781563, 0)
- human_10: ('human', 'right', 0, 40.17456773662231, 0)
```

```
Failure # 2 (occurred at 2024-08-14_15-30-28)
Traceback (most recent call last):
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/tune/trial_runner.py", line 426, in _process_trial
    result = self.trial_executor.fetch_result(trial)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/tune/ray_trial_executor.py", line 378, in fetch_result
    result = ray.get(trial_future[0], DEFAULT_GET_TIMEOUT)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/worker.py", line 1457, in get
    raise value.as_instanceof_cause()
ray.exceptions.RayTaskError(FatalFlowError): ray::PPO.train() (pid=144743, ip=10.41.2.44)
  File "python/ray/_raylet.pyx", line 636, in ray._raylet.execute_task
  File "python/ray/_raylet.pyx", line 619, in ray._raylet.execute_task.function_executor
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/agents/trainer.py", line 444, in train
    raise e
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/agents/trainer.py", line 433, in train
    result = Trainable.train(self)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/tune/trainable.py", line 176, in train
    result = self._train()
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/agents/trainer_template.py", line 129, in _train
    fetches = self.optimizer.step()
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/optimizers/multi_gpu_optimizer.py", line 140, in step
    self.num_envs_per_worker, self.train_batch_size)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/optimizers/rollout.py", line 29, in collect_samples
    next_sample = ray_get_and_free(fut_sample)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/utils/memory.py", line 33, in ray_get_and_free
    result = ray.get(object_ids)
ray.exceptions.RayTaskError(FatalFlowError): ray::RolloutWorker.sample() (pid=144821, ip=10.41.2.44)
  File "python/ray/_raylet.pyx", line 636, in ray._raylet.execute_task
  File "python/ray/_raylet.pyx", line 619, in ray._raylet.execute_task.function_executor
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/evaluation/rollout_worker.py", line 471, in sample
    batches = [self.input_reader.next()]
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/evaluation/sampler.py", line 56, in next
    batches = [self.get_data()]
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/evaluation/sampler.py", line 99, in get_data
    item = next(self.rollout_provider)
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/evaluation/sampler.py", line 305, in _env_runner
    base_env.poll()
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/env/base_env.py", line 312, in poll
    self.new_obs = self.vector_env.vector_reset()
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/env/vector_env.py", line 100, in vector_reset
    return [e.reset() for e in self.envs]
  File "/home/aileen/anaconda3/envs/flow/lib/python3.7/site-packages/ray/rllib/env/vector_env.py", line 100, in
- rl_0: ('rl', 'left', 0, 43.82147741497402, 0)
- human_16: ('human', 'top', 0, 45.39006808431694, 0)
- human_20: ('human', 'left', 0, 30.194363233721305, 0)
```

When I changed the ring length in net_params from the default value of 230 to 1500, it still reported errors like these.
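Since the missing-vehicle lines in these logs share one format, they can be extracted programmatically to compare failures across runs and ring lengths, for example to see whether the missing vehicles cluster on one edge or near one position. A minimal sketch, assuming each line looks like `- veh_id: (type, edge, lane, pos, speed)`; the field names are inferred from the logged values rather than from Flow's documentation, and `parse_missing` is a hypothetical helper.

```python
import ast
import re

# Matches lines like "- rl_0: ('rl', 'left', 0, 43.82, 0)".
LINE_RE = re.compile(r"^\s*-\s+(\w+):\s+(\(.*\))\s*$")


def parse_missing(log_text):
    """Return one dict per '- veh_id: (...)' line found in log_text."""
    rows = []
    for line in log_text.splitlines():
        match = LINE_RE.match(line)
        if not match:
            continue  # skip traceback and other non-vehicle lines
        veh_type, edge, lane, pos, speed = ast.literal_eval(match.group(2))
        rows.append({"id": match.group(1), "type": veh_type, "edge": edge,
                     "lane": lane, "pos": pos, "speed": speed})
    return rows


log = """
- rl_0: ('rl', 'left', 0, 43.82147741497402, 0)
- human_16: ('human', 'top', 0, 45.39006808431694, 0)
- human_20: ('human', 'left', 0, 30.194363233721305, 0)
"""
for row in parse_missing(log):
    print(row["id"], row["edge"], row["lane"], round(row["pos"], 1))
```

`ast.literal_eval` is used instead of `eval` so that only literal tuples are parsed from the log text.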