fairseq

Use foreach to reduce the number of kernels

Before submitting

- [ ] Was this discussed/approved via a GitHub issue? (no need for typos, doc improvements) No

- [ ] Did you read the contributor guideline? Yes

- [ ] Did you make sure to update the docs? Yes

- [ ] Did you write any new necessary tests? No

What does this PR do?

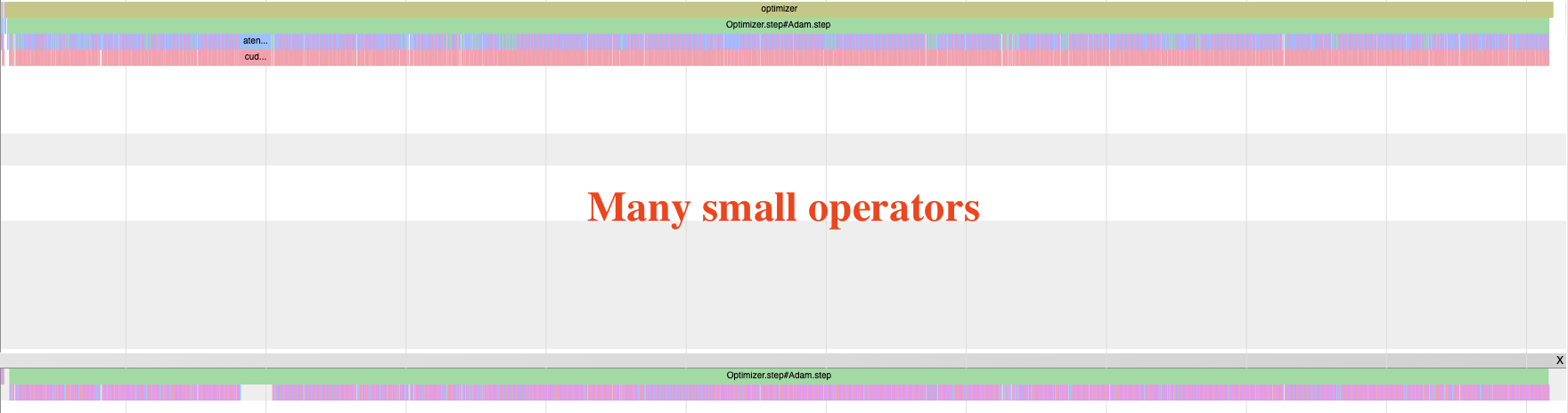

The existing Adam optimizer used by speech models is not efficient as it has many small operators (as shown in the below picture). As the speech team does not use latest PyTorch version Adam optimizer with the multi_tensor (foreach fusion) support, this diff is to support multi_tensor Adam implementation (by fusing many smaller operators into fewer number of operators via PyTorch foreach APIs) for the customized adam_sam optimizer used by the speech team.
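To illustrate the kind of fusion described above, here is a minimal sketch of one plain Adam step written two ways: a per-tensor loop and a foreach version. It is not the adam_sam code from this diff. The function names, hyperparameter defaults, and the absence of weight decay and any SAM-specific logic are assumptions made for illustration. The `torch._foreach_*` functions are underscore-prefixed PyTorch APIs, so their availability may vary by PyTorch version.

```python
import torch


def adam_step_per_tensor(params, grads, exp_avgs, exp_avg_sqs, step,
                         lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Hypothetical baseline: one group of small operators per parameter."""
    bias1 = 1 - beta1 ** step
    bias2 = 1 - beta2 ** step
    for p, g, m, v in zip(params, grads, exp_avgs, exp_avg_sqs):
        m.mul_(beta1).add_(g, alpha=1 - beta1)          # first moment
        v.mul_(beta2).addcmul_(g, g, value=1 - beta2)   # second moment
        denom = (v.sqrt() / bias2 ** 0.5).add_(eps)
        p.addcdiv_(m, denom, value=-lr / bias1)         # parameter update


def adam_step_foreach(params, grads, exp_avgs, exp_avg_sqs, step,
                      lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Hypothetical fused variant: each call operates on the whole tensor list."""
    bias1 = 1 - beta1 ** step
    bias2 = 1 - beta2 ** step
    # First moment: m = beta1 * m + (1 - beta1) * g
    torch._foreach_mul_(exp_avgs, beta1)
    torch._foreach_add_(exp_avgs, grads, alpha=1 - beta1)
    # Second moment: v = beta2 * v + (1 - beta2) * g * g
    torch._foreach_mul_(exp_avg_sqs, beta2)
    torch._foreach_addcmul_(exp_avg_sqs, grads, grads, value=1 - beta2)
    # denom = sqrt(v) / sqrt(bias2) + eps
    denoms = torch._foreach_sqrt(exp_avg_sqs)
    torch._foreach_div_(denoms, bias2 ** 0.5)
    torch._foreach_add_(denoms, eps)
    # p = p - (lr / bias1) * m / denom
    torch._foreach_addcdiv_(params, exp_avgs, denoms, value=-lr / bias1)
```

Both functions update `params`, `exp_avgs`, and `exp_avg_sqs` in place and should produce the same values up to floating-point tolerance. The difference is that the foreach version issues a fixed number of list-level calls per step instead of a number that grows with the parameter count. The tensors passed in are assumed not to require grad (or the call is assumed to be wrapped in `torch.no_grad()`).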

PR review

Anyone in the community is free to review the PR once the tests have passed. If we didn't discuss your PR in GitHub issues, there's a high chance it will not be merged.

Did you have fun?

Make sure you had fun coding 🙃