Goerli initial sync: db error, streaming issue, silent crashes

System information

Erigon version: latest 2022.07.02

Expected behaviour

clean sync

Actual behaviour

Erigon seems to silently crash during sync. Erigon is managed by runit which should explain the restarts.

[INFO] [07-10|01:26:53.647] [4/16 Bodies] Wrote block bodies block_num=7037637 delivery/sec=35.2MB wasted/sec=19.5MB alloc=3.6GB sys=6.9GB

[INFO] [07-10|01:27:13.664] [4/16 Bodies] Wrote block bodies block_num=7037685 delivery/sec=102.1MB wasted/sec=27.9MB alloc=7.5GB sys=7.9GB

[WARN] [07-10|01:27:15.208] Handling incoming message stream=RecvMessage err="decode BlockBodiesPacket66: rlp: input list has too many elements for eth.BlockRawBodiesPacket66"

[WARN] [07-10|01:27:15.364] Handling incoming message stream=RecvMessage err="decode BlockBodiesPacket66: rlp: input list has too many elements for eth.BlockRawBodiesPacket66"

[INFO] [07-10|01:27:33.348] [p2p] GoodPeers eth66=3

[INFO] [07-10|01:27:33.645] [4/16 Bodies] Wrote block bodies block_num=7037745 delivery/sec=117.2MB wasted/sec=0B alloc=10.3GB sys=11.8GB

[INFO] [07-10|01:27:49.867] [txpool] stat block=7199665 pending=0 baseFee=0 queued=0 alloc=11.3GB sys=11.8GB

>>>> Starting Erigon goerli public chain <<<< : /usr/local/bin/erigon : data directory : /home/erigon/erigon-goerli-data

[INFO] [07-10|01:27:51.107] Build info git_branch=HEAD git_tag=v2022.07.02-dirty git_commit=c7a94eeea05d7c0d569c811399642a7d108d8c82

[INFO] [07-10|01:27:51.107] Starting Erigon on Görli testnet...

[INFO] [07-10|01:27:51.107] Maximum peer count ETH=100 total=100

[INFO] [07-10|01:27:51.107] torrent verbosity level=2

[INFO] [07-10|01:27:53.210] Set global gas cap cap=50000000

[INFO] [07-10|01:27:53.211] Opening Database label=chaindata path=/home/erigon/erigon-goerli-data/chaindata

mdbx_setup_dxb:15446 filesize mismatch (expect 2147483648b/524288p, have 10737418240b/2621440p)

[INFO] [07-10|01:27:53.233] Initialised chain configuration config="{ChainID: 5, Homestead: 0, DAO: <nil>, DAO Support: true, Tangerine Whistle: 0, Spurious Dragon: 0, Byzantium: 0, Constantinople: 0, Petersburg: 0, Istanbul: 1561651, Muir Glacier: <nil>, Berlin: 4460644, London: 5062605, Arrow Glacier: <nil>, Gray Glacier: <nil>, Terminal Total Difficulty: <nil>, Engine: clique}" genesis=0xbf7e331f7f7c1dd2e05159666b3bf8bc7a8a3a9eb1d518969eab529dd9b88c1a

[INFO] [07-10|01:27:53.233] Initialising Ethereum protocol network=5

[INFO] [07-10|01:27:53.233] Using snapshots on=true

[INFO] [07-10|01:27:53.234] Effective prune_flags= snapshot_flags="--snapshots=true"

[INFO] [07-10|01:28:09.536] Starting private RPC server on=localhost:9090

[INFO] [07-10|01:28:09.536] new subscription to logs established

[INFO] [07-10|01:28:09.547] [txpool] Started

[INFO] [07-10|01:28:09.547] [Snapshots] Fetching torrent files metadata

[INFO] [07-10|01:28:09.592] Started P2P networking version=66 self=enode://29cd13bbed59ee3a9bc264c7277c0b8a376482b943d938ef02e01a787f7b90d4b385ca57be6929e6a4c844665cf4ca2b767849c7c5415e59b41651c384184759@127.0.0.1:30303 name=erigon/v2022.07.2-alpha-c7a94eee/linux-amd64/go1.18.1

[INFO] [07-10|01:28:10.295] [Snapshots] Stat blocks=6999999 segments=6999999 indices=6999999 alloc=656.9MB sys=876.1MB

[INFO] [07-10|01:28:10.296] [1/16 Headers] Waiting for headers... from=7199700

[INFO] [07-10|01:28:30.296] [1/16 Headers] Wrote block headers number=7199700 blk/second=0.000 alloc=670.8MB sys=876.1MB

[INFO] [07-10|01:28:50.297] [1/16 Headers] Wrote block headers number=7199700 blk/second=0.000 alloc=683.9MB sys=876.3MB

[INFO] [07-10|01:28:53.409] [1/16 Headers] Processed highest inserted=7199714 age=0

[INFO] [07-10|01:28:53.441] [4/16 Bodies] Processing bodies... from=7000036 to=7199714

I can't tell if it's related to

Handling incoming message stream=RecvMessage err="decode BlockBodiesPacket66: rlp: input list has too many elements for eth.BlockRawBodiesPacket66"

or

[INFO] [07-10|01:27:53.211] Opening Database label=chaindata path=/home/erigon/erigon-goerli-data/chaindata

mdbx_setup_dxb:15446 filesize mismatch (expect 2147483648b/524288p, have 10737418240b/2621440p)

I have to observe further, it seems it's still making sync progress.

Steps to reproduce the behaviour

Noteable erigon args:

--chain goerli \

--private.api.addr "localhost:9090" \

--http=false \

--snapshots=true \

--torrent.download.rate 64mb \

OOM killer?

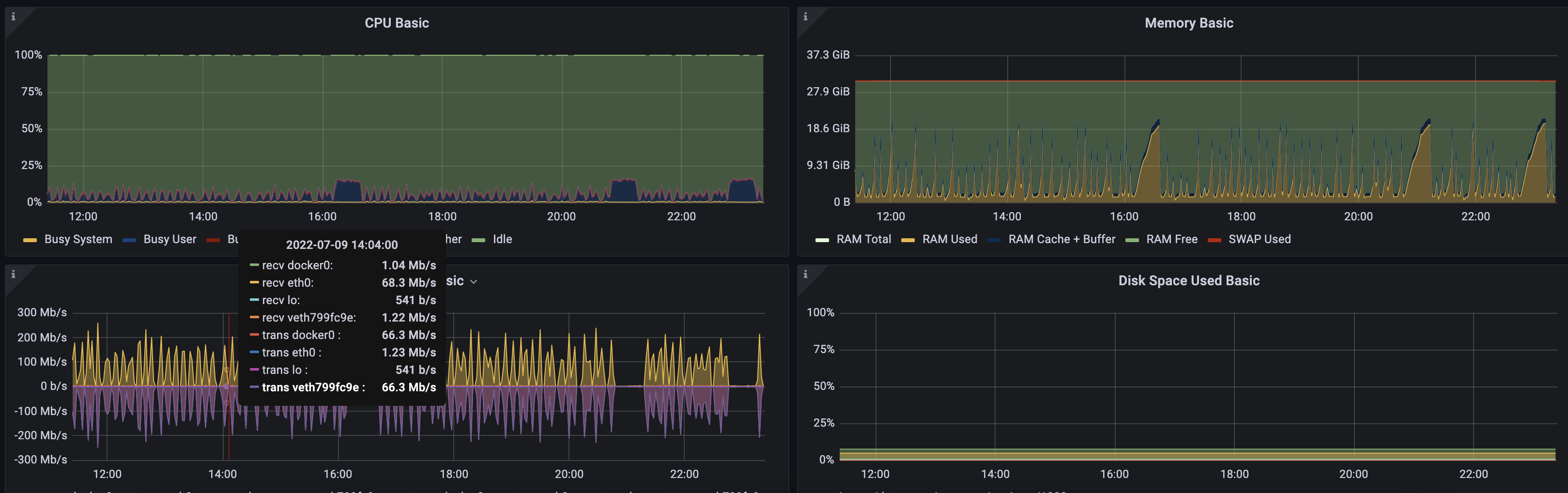

@AskAlexSharov Digged out some graphs, there are definitely spikes but it's not capping out

Regarding "it's still making progress": Looks stuck, it's jumping around in block numbers and on each restart, the db issue shows up:

INFO] [07-10|04:25:26.844] [4/16 Bodies] Wrote block bodies block_num=7037922 delivery/sec=70.6MB wasted/sec=3.3MB alloc=9.4GB sys=12.8GB

[INFO] [07-10|04:25:31.219] [txpool] stat block=7200375 pending=0 baseFee=0 queued=0 alloc=10.4GB sys=12.8GB

>>>> Starting Erigon goerli public chain <<<< : /usr/local/bin/erigon : data directory : /home/erigon/erigon-goerli-data

[INFO] [07-10|04:25:39.383] Build info git_branch=HEAD git_tag=v2022.07.02-dirty git_commit=c7a94eeea05d7c0d569c811399642a7d108d8c82

[INFO] [07-10|04:25:39.383] Starting Erigon on Görli testnet...

[INFO] [07-10|04:25:39.384] Maximum peer count ETH=100 total=100

[INFO] [07-10|04:25:39.384] torrent verbosity level=2

[INFO] [07-10|04:25:41.486] Set global gas cap cap=50000000

[INFO] [07-10|04:25:41.486] Opening Database label=chaindata path=/home/erigon/erigon-goerli-data/chaindata

mdbx_setup_dxb:15446 filesize mismatch (expect 2147483648b/524288p, have 8589934592b/2097152p)

[INFO] [07-10|04:25:41.509] Initialised chain configuration config="{ChainID: 5, Homestead: 0, DAO: <nil>, DAO Support: true, Tangerine Whistle: 0, Spurious Dragon: 0, Byzantium: 0, Constantinople: 0, Petersburg: 0, Istanbul: 1561651, Muir Glacier: <nil>, Berlin: 4460644, London: 5062605, Arrow Glacier: <nil>, Gray Glacier: <nil>, Terminal Total Difficulty: <nil>, Engine: clique}" genesis=0xbf7e331f7f7c1dd2e05159666b3bf8bc7a8a3a9eb1d518969eab529dd9b88c1a

[INFO] [07-10|04:25:41.509] Initialising Ethereum protocol network=5

[INFO] [07-10|04:25:41.509] Using snapshots on=true

[INFO] [07-10|04:25:41.510] Effective prune_flags= snapshot_flags="--snapshots=true"

[INFO] [07-10|04:25:57.851] Starting private RPC server on=localhost:9090

[INFO] [07-10|04:25:57.851] new subscription to logs established

[INFO] [07-10|04:25:57.861] [txpool] Started

[INFO] [07-10|04:25:57.861] [Snapshots] Fetching torrent files metadata

[INFO] [07-10|04:25:57.903] Started P2P networking version=66 self=enode://29cd13bbed59ee3a9bc264c7277c0b8a376482b943d938ef02e01a787f7b90d4b385ca57be6929e6a4c844665cf4ca2b767849c7c5415e59b41651c384184759@127.0.0.1:30303 name=erigon/v2022.07.2-alpha-c7a94eee/linux-amd64/go1.18.1

[INFO] [07-10|04:25:58.676] [Snapshots] Stat blocks=6999999 segments=6999999 indices=6999999 alloc=586.3MB sys=1.2GB

[INFO] [07-10|04:25:58.677] [1/16 Headers] Waiting for headers... from=7200401

[INFO] [07-10|04:26:18.677] [1/16 Headers] Wrote block headers number=7200401 blk/second=0.000 alloc=601.6MB sys=1.2GB

It’s OOM killer. Maybe we have mem leak.

We had the same issue when syncing Goerli specifically, after 2-4 OOMs it went fine. I even shared the trace in Discord. Let me try to find it.

When syncing Goerli, it took about 26 GB of RAM to get through it successfully.

That is at stage 4/16 — block bodies. BitTorrent sync is on.

Now seeing after restart:

[INFO] [07-11|17:20:22.571] Opening Database label=chaindata path=/home/erigon/erigon-goerli-data/chaindata

mdbx_ipclock_failed:30764 wlock owner died, recovering

Any ideas to repair this? Otherwise I purge and start from the beginning

Check that your processes have different pid: https://github.com/ledgerwatch/erigon/blob/b161c27ac318e5689656929ee032aea5b1c0350d/docker-compose.yml#L19

@AskAlexSharov Your example points at a multi-container setup but I have Erigon and RPC daemon running as separate processes in the same container. Does the PID tweak still apply?

@mohoff you processes have different PID you are good

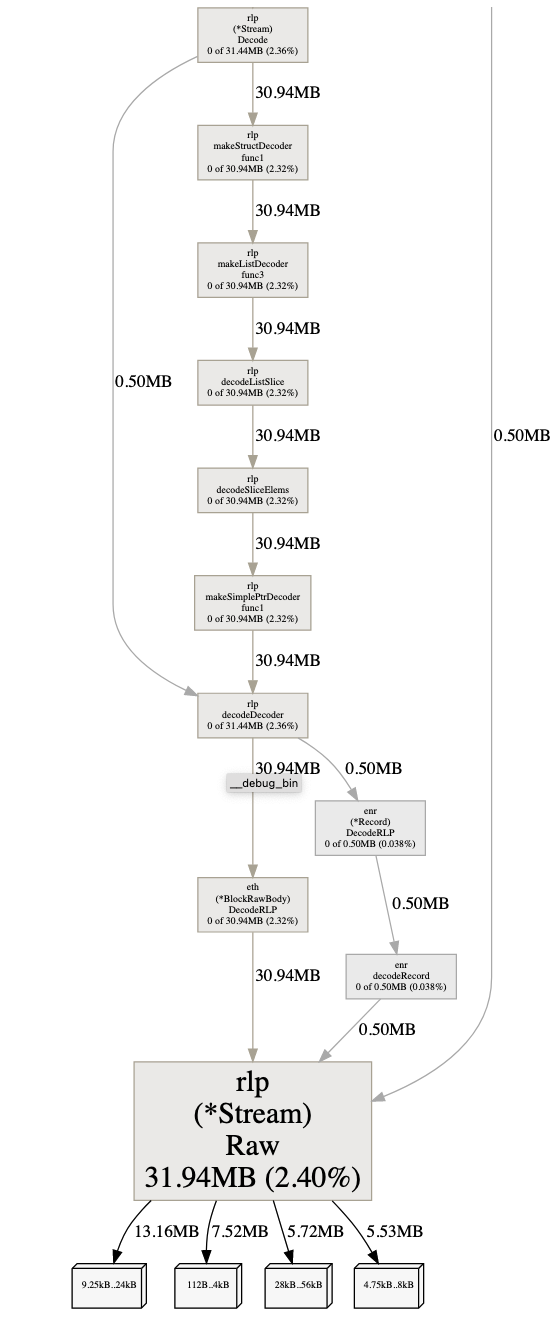

Well, Raw allocates buffers for encoded transactions, which escaped then through Delivery channel where those buffers are used, which causes Raw to not release allocated buffers.

I changed Delivery.tx type from [][][]byte to *[][][]byte which helps GC to reduce memory usage but could potentially lead to NPE on processing tx after read from that channel.

Currently double checking before push.