ComfyUI

RuntimeError when using ControlNet

When using the example image and workflow from comfyUI/examples, I get the following error:

File "/home/lupos/Documents/AIStuff/ComfyUI/execution.py", line 174, in execute

executed += recursive_execute(self.server, prompt, self.outputs, x, extra_data)

File "/home/lupos/Documents/AIStuff/ComfyUI/execution.py", line 54, in recursive_execute

executed += recursive_execute(server, prompt, outputs, input_unique_id, extra_data)

File "/home/lupos/Documents/AIStuff/ComfyUI/execution.py", line 54, in recursive_execute

executed += recursive_execute(server, prompt, outputs, input_unique_id, extra_data)

File "/home/lupos/Documents/AIStuff/ComfyUI/execution.py", line 63, in recursive_execute

outputs[unique_id] = getattr(obj, obj.FUNCTION)(**input_data_all)

File "/home/lupos/Documents/AIStuff/ComfyUI/nodes.py", line 683, in sample

return common_ksampler(model, seed, steps, cfg, sampler_name, scheduler, positive, negative, latent_image, denoise=denoise)

File "/home/lupos/Documents/AIStuff/ComfyUI/nodes.py", line 652, in common_ksampler

samples = sampler.sample(noise, positive_copy, negative_copy, cfg=cfg, latent_image=latent_image, start_step=start_step, last_step=last_step, force_full_denoise=force_full_denoise, denoise_mask=noise_mask)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/samplers.py", line 486, in sample

samples = getattr(k_diffusion_sampling, "sample_{}".format(self.sampler))(self.model_k, noise, sigmas, extra_args=extra_args)

File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/k_diffusion/sampling.py", line 553, in sample_dpmpp_sde

denoised = model(x, sigmas[i] * s_in, **extra_args)

File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/samplers.py", line 225, in forward

out = self.inner_model(x, sigma, cond=cond, uncond=uncond, cond_scale=cond_scale, cond_concat=cond_concat)

File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/k_diffusion/external.py", line 114, in forward

eps = self.get_eps(input * c_in, self.sigma_to_t(sigma), **kwargs)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/k_diffusion/external.py", line 140, in get_eps

return self.inner_model.apply_model(*args, **kwargs)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/samplers.py", line 213, in apply_model

out = sampling_function(self.inner_model.apply_model, x, timestep, uncond, cond, cond_scale, cond_concat)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/samplers.py", line 195, in sampling_function

cond, uncond = calc_cond_uncond_batch(model_function, cond, uncond, x, timestep, max_total_area, cond_concat)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/samplers.py", line 170, in calc_cond_uncond_batch

c['control'] = control.get_control(input_x, timestep_, c['c_crossattn'], len(cond_or_uncond))

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/sd.py", line 463, in get_control

control = self.control_model(x=x_noisy, hint=self.cond_hint, timesteps=t, context=cond_txt)

File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/cldm/cldm.py", line 263, in forward

emb = self.time_embed(t_emb)

File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/nn/modules/container.py", line 204, in forward

input = module(input)

File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/nn/modules/linear.py", line 114, in forward

return F.linear(input, self.weight, self.bias)

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu! (when checking argument for argument mat1 in method wrapper_addmm)
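With a traceback this long, it can help to locate the innermost frame that belongs to the project rather than to an installed library. The following is only a sketch using the standard library: the helper name `innermost_project_frame` and the `skip_marker` parameter are made up for illustration and are not part of ComfyUI or PyTorch. It assumes that library code can be recognized by `site-packages` in the file path.

```python
import re

# Matches the 'File "...", line N, in func' lines of a Python traceback.
FRAME_RE = re.compile(r'File "(?P<path>[^"]+)", line (?P<line>\d+), in (?P<func>\S+)')


def innermost_project_frame(traceback_text, skip_marker="site-packages"):
    """Return (path, line, func) of the last frame whose path lacks skip_marker.

    Hypothetical helper: returns None if no such frame is found.
    """
    frames = [
        (m.group("path"), int(m.group("line")), m.group("func"))
        for m in FRAME_RE.finditer(traceback_text)
    ]
    project_frames = [f for f in frames if skip_marker not in f[0]]
    return project_frames[-1] if project_frames else None


# A few of the final frames copied from the traceback above.
excerpt = '''
File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/sd.py", line 463, in get_control
File "/home/lupos/Documents/AIStuff/ComfyUI/comfy/cldm/cldm.py", line 263, in forward
File "/home/lupos/Documents/AIStuff/stable-diffusion-webui/venv/lib/python3.9/site-packages/torch/nn/modules/linear.py", line 114, in forward
'''

print(innermost_project_frame(excerpt))
```

Run on the frames above, this points at `comfy/cldm/cldm.py`, line 263, which is the `emb = self.time_embed(t_emb)` call in the ControlNet model's `forward`. That call is where the device mismatch between `cuda:0` and `cpu` surfaces; the sketch does not say which tensor is on the wrong device.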

Can you tell me exactly which example you tried, how you ran ComfyUI, and what your GPU is?

@comfyanonymous Sure, sorry for the late answer:

OS: Pop!_OS 22.04 LTS x86_64

Kernel: 6.2.6-76060206-generic

GPU: NVIDIA GeForce GTX 1050 Ti

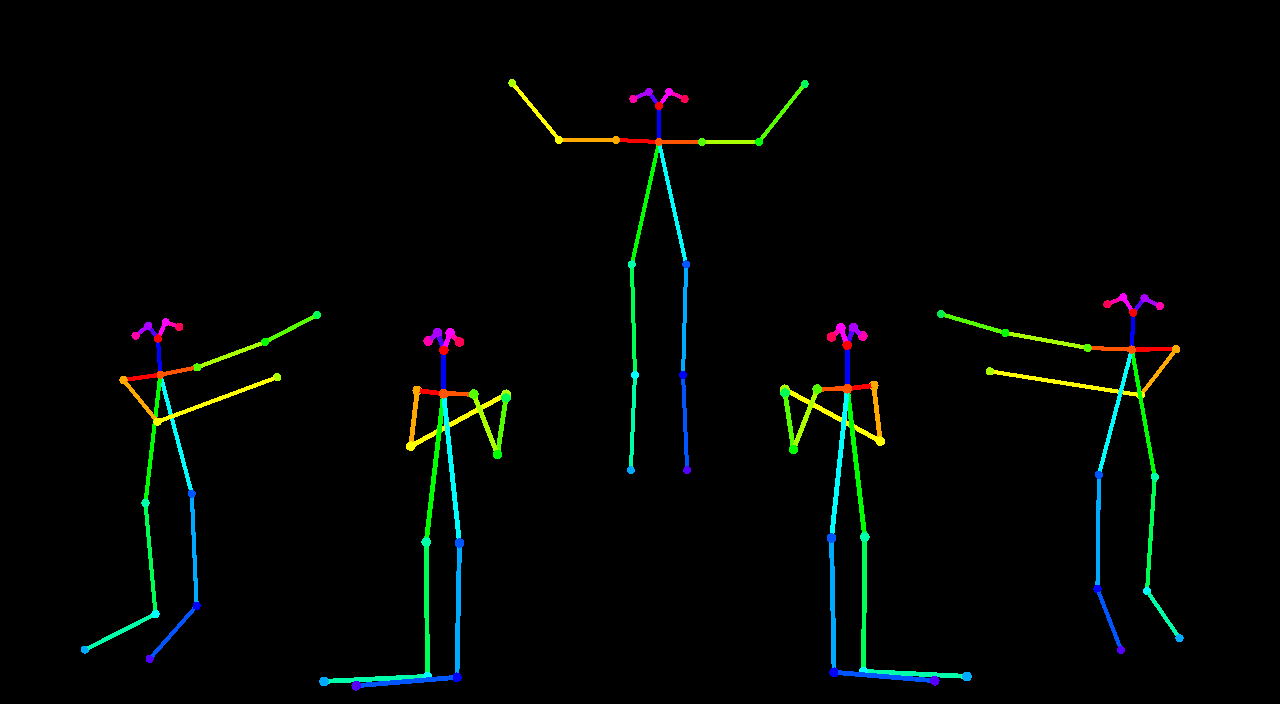

It's been a while, so I don't know exactly which image it was, but it was one of the following.

It was a while back, so I don't know whether I checked my VRAM usage after the crash. But if running out of VRAM was the reason for the crash, there should be a better error message.