TC fails to start

1. Bug Description

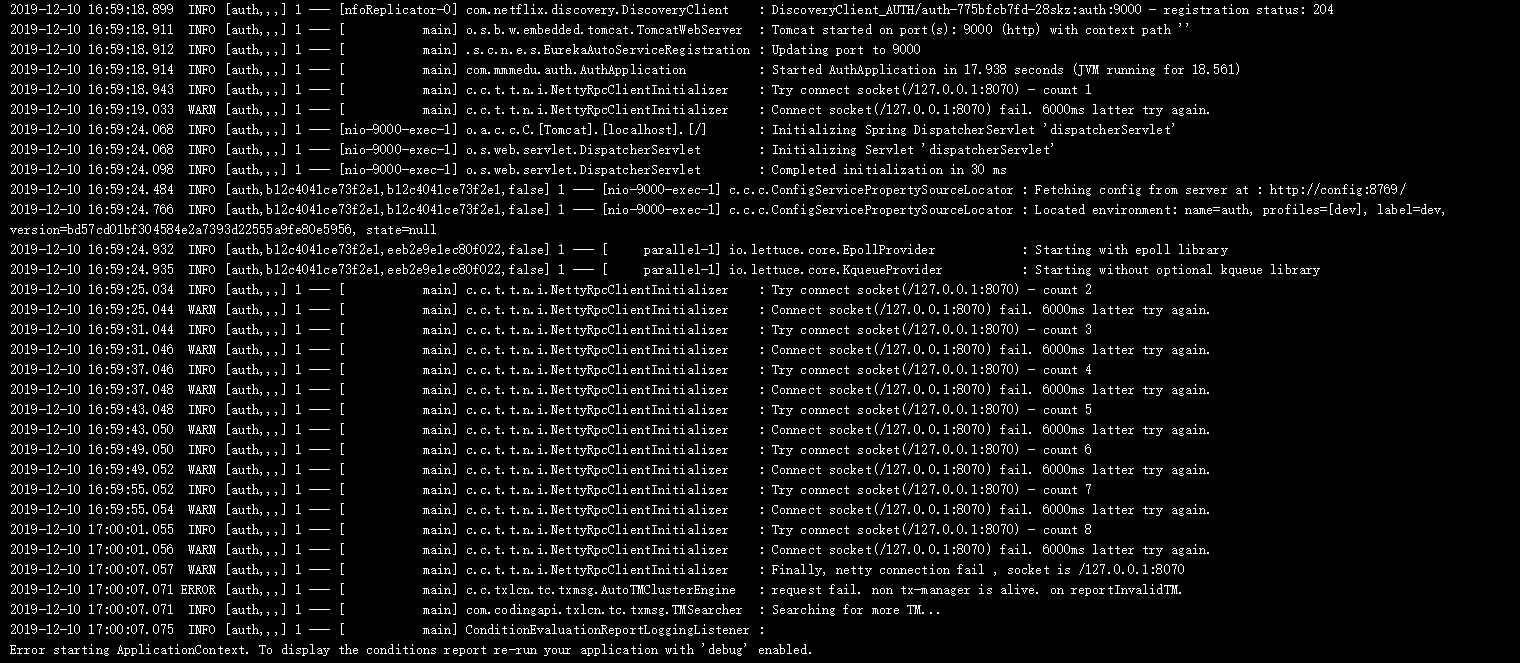

在使用k8s作为集群的时候,创建了eureka,config,tm,tc的时候,tm可以启动,tc在启动的时候日志输出一直显示

,链接不上tm,达到八次就失败了,

,链接不上tm,达到八次就失败了,

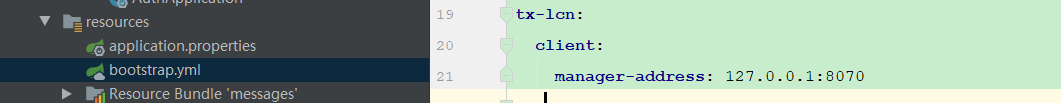

,这是我tc模块的配置,

,这是我tc模块的配置,

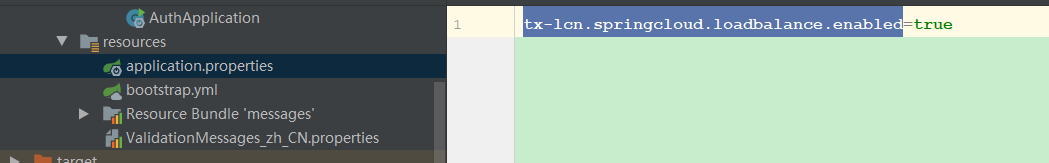

按照文档配置了“tx-lcn.springcloud.loadbalance.enabled”这个集群配置了,但是idea显示这个配置其实是找不到的,仍然失败,希望能帮忙解决一下,急!

按照文档配置了“tx-lcn.springcloud.loadbalance.enabled”这个集群配置了,但是idea显示这个配置其实是找不到的,仍然失败,希望能帮忙解决一下,急!

2. Environment:

- JDK version:1.8

- OS:k8s

- TX-LCN version:5.0.2.RELEASE

I can't see the images.

Do you have any contact info? Email works too. I need help.

Can your client register with eureka-server?

Yes, it can, and everything works in local testing, but in the cluster TC fails at startup. Here is the startup log:

2019-12-10 18:09:10.475 INFO [auth,,,] 1 --- [ main] c.codingapi.txlcn.tc.corelog.H2DbHelper : log hikariDataSource close.
2019-12-10 18:09:10.480 INFO [auth,,,] 1 --- [ main] j.LocalContainerEntityManagerFactoryBean : Closing JPA EntityManagerFactory for persistence unit 'default'
2019-12-10 18:09:10.736 INFO [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : Shutting down DiscoveryClient ...
2019-12-10 18:09:13.738 INFO [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : Unregistering ...
2019-12-10 18:09:13.743 INFO [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : DiscoveryClient_AUTH/auth-775bfcb7fd-28skz:auth:9000 - deregister status: 200
2019-12-10 18:09:13.751 INFO [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : Completed shut down of DiscoveryClient
2019-12-10 18:09:13.751 INFO [auth,,,] 1 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Shutdown initiated...
2019-12-10 18:09:13.759 INFO [auth,,,] 1 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Shutdown completed.
2019-12-10 18:09:13.767 INFO [auth,,,] 1 --- [ main] c.mmmedu.commons.filter.SignAuthFilter : SignAuthFilter destroy
2019-12-10 18:09:13.781 ERROR [auth,,,] 1 --- [ main] o.s.boot.SpringApplication : Application run failed
java.lang.IllegalStateException: Failed to execute ApplicationRunner
    at org.springframework.boot.SpringApplication.callRunner(SpringApplication.java:775) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at org.springframework.boot.SpringApplication.callRunners(SpringApplication.java:762) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:319) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:1215) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:1204) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at com.mmmedu.auth.AuthApplication.main(AuthApplication.java:39) [classes!/:0.0.1-SNAPSHOT]
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[na:1.8.0_201]
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[na:1.8.0_201]
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_201]
    at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_201]
    at org.springframework.boot.loader.MainMethodRunner.run(MainMethodRunner.java:48) [app.jar:0.0.1-SNAPSHOT]
    at org.springframework.boot.loader.Launcher.launch(Launcher.java:87) [app.jar:0.0.1-SNAPSHOT]
    at org.springframework.boot.loader.Launcher.launch(Launcher.java:51) [app.jar:0.0.1-SNAPSHOT]
    at org.springframework.boot.loader.JarLauncher.main(JarLauncher.java:52) [app.jar:0.0.1-SNAPSHOT]
Caused by: java.lang.IllegalStateException: There is no normal TM.
    at com.codingapi.txlcn.tc.txmsg.TMSearcher.search(TMSearcher.java:86) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.tc.txmsg.AutoTMClusterEngine.prepareToResearchTMCluster(AutoTMClusterEngine.java:83) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.tc.txmsg.AutoTMClusterEngine.onConnectFail(AutoTMClusterEngine.java:69) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.tc.txmsg.TCSideRpcInitCallBack.lambda$connectFail$3(TCSideRpcInitCallBack.java:107) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at java.util.ArrayList.forEach(ArrayList.java:1257) ~[na:1.8.0_201]
    at com.codingapi.txlcn.tc.txmsg.TCSideRpcInitCallBack.connectFail(TCSideRpcInitCallBack.java:107) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.txmsg.netty.impl.NettyRpcClientInitializer.connect(NettyRpcClientInitializer.java:125) ~[txlcn-txmsg-netty-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.txmsg.netty.impl.NettyRpcClientInitializer.init(NettyRpcClientInitializer.java:85) ~[txlcn-txmsg-netty-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.tc.txmsg.TCRpcServer.init(TCRpcServer.java:58) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.common.runner.TxLcnApplicationRunner.run(TxLcnApplicationRunner.java:54) ~[txlcn-common-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at org.springframework.boot.SpringApplication.callRunner(SpringApplication.java:772) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    ... 13 common frames omitted

It still shows that it cannot register with TM.

This is probably related to your k8s network environment. I ran into the same symptom before. TC and TM talk to each other directly via ip+port, so a network problem inside the k8s environment can leave the IPs unable to reach each other. Check whether the IPs and related settings are correct. Also check whether some other thread is blocking.

2019-12-10 18:13:26.771 INFO [auth,,,] 1 --- [ main] .s.c.n.e.s.EurekaAutoServiceRegistration : Updating port to 9000
2019-12-10 18:13:26.773 INFO [auth,,,] 1 --- [ main] com.mmmedu.auth.AuthApplication : Started AuthApplication in 18.702 seconds (JVM running for 19.371)
2019-12-10 18:13:26.803 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Try connect socket(/127.0.0.1:8070) - count 1
2019-12-10 18:13:26.893 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Connect socket(/127.0.0.1:8070) fail. 6000ms latter try again.
2019-12-10 18:13:29.933 INFO [auth,,,] 1 --- [nio-9000-exec-1] o.a.c.c.C.[Tomcat].[localhost].[/] : Initializing Spring DispatcherServlet 'dispatcherServlet'
2019-12-10 18:13:29.933 INFO [auth,,,] 1 --- [nio-9000-exec-1] o.s.web.servlet.DispatcherServlet : Initializing Servlet 'dispatcherServlet'
2019-12-10 18:13:29.961 INFO [auth,,,] 1 --- [nio-9000-exec-1] o.s.web.servlet.DispatcherServlet : Completed initialization in 28 ms
2019-12-10 18:13:30.385 INFO [auth,0cdf04aa6ebb55d7,0cdf04aa6ebb55d7,false] 1 --- [nio-9000-exec-1] c.c.c.ConfigServicePropertySourceLocator : Fetching config from server at : http://config:8769/
2019-12-10 18:13:30.855 INFO [auth,0cdf04aa6ebb55d7,0cdf04aa6ebb55d7,false] 1 --- [nio-9000-exec-1] c.c.c.ConfigServicePropertySourceLocator : Located environment: name=auth, profiles=[dev], label=dev, version=bd57cd01bf304584e2a7393d22555a9fe80e5956, state=null
2019-12-10 18:13:31.019 INFO [auth,0cdf04aa6ebb55d7,317ea9b787cd628d,false] 1 --- [ parallel-1] io.lettuce.core.EpollProvider : Starting with epoll library
2019-12-10 18:13:31.031 INFO [auth,0cdf04aa6ebb55d7,317ea9b787cd628d,false] 1 --- [ parallel-1] io.lettuce.core.KqueueProvider : Starting without optional kqueue library
2019-12-10 18:13:32.894 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Try connect socket(/127.0.0.1:8070) - count 2
2019-12-10 18:13:32.897 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Connect socket(/127.0.0.1:8070) fail. 6000ms latter try again.
2019-12-10 18:13:38.897 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Try connect socket(/127.0.0.1:8070) - count 3
2019-12-10 18:13:38.899 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Connect socket(/127.0.0.1:8070) fail. 6000ms latter try again.
2019-12-10 18:13:44.899 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Try connect socket(/127.0.0.1:8070) - count 4
2019-12-10 18:13:44.901 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Connect socket(/127.0.0.1:8070) fail. 6000ms latter try again.
2019-12-10 18:13:50.901 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Try connect socket(/127.0.0.1:8070) - count 5
2019-12-10 18:13:50.903 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Connect socket(/127.0.0.1:8070) fail. 6000ms latter try again.
2019-12-10 18:13:56.904 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Try connect socket(/127.0.0.1:8070) - count 6
2019-12-10 18:13:56.905 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Connect socket(/127.0.0.1:8070) fail. 6000ms latter try again.
2019-12-10 18:14:02.906 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Try connect socket(/127.0.0.1:8070) - count 7
2019-12-10 18:14:02.908 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Connect socket(/127.0.0.1:8070) fail. 6000ms latter try again.
2019-12-10 18:14:08.908 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Try connect socket(/127.0.0.1:8070) - count 8
2019-12-10 18:14:08.910 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Connect socket(/127.0.0.1:8070) fail. 6000ms latter try again.
2019-12-10 18:14:14.910 WARN [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : Finally, netty connection fail , socket is /127.0.0.1:8070
2019-12-10 18:14:14.925 ERROR [auth,,,] 1 --- [ main] c.c.txlcn.tc.txmsg.AutoTMClusterEngine : request fail. non tx-manager is alive. on reportInvalidTM.
2019-12-10 18:14:14.926 INFO [auth,,,] 1 --- [ main] com.codingapi.txlcn.tc.txmsg.TMSearcher : Searching for more TM...
2019-12-10 18:14:14.930 INFO [auth,,,] 1 --- [ main] ConditionEvaluationReportLoggingListener :

Error starting ApplicationContext. To display the conditions report re-run your application with 'debug' enabled.
2019-12-10 18:14:14.931 INFO [auth,,,] 1 --- [ main] o.s.c.n.e.s.EurekaServiceRegistry : Unregistering application AUTH with eureka with status DOWN
2019-12-10 18:14:14.931 WARN [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : Saw local status change event StatusChangeEvent [timestamp=1575972854931, current=DOWN, previous=UP]
2019-12-10 18:14:14.931 INFO [auth,,,] 1 --- [nfoReplicator-0] com.netflix.discovery.DiscoveryClient : DiscoveryClient_AUTH/auth-7bfff685d9-69vdg:auth:9000: registering service...
2019-12-10 18:14:14.937 INFO [auth,,,] 1 --- [nfoReplicator-0] com.netflix.discovery.DiscoveryClient : DiscoveryClient_AUTH/auth-7bfff685d9-69vdg:auth:9000 - registration status: 204
2019-12-10 18:14:14.939 INFO [auth,,,] 1 --- [ main] o.s.s.concurrent.ThreadPoolTaskExecutor : Shutting down ExecutorService 'applicationTaskExecutor'
2019-12-10 18:14:14.942 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcServerInitializer : server was down.
2019-12-10 18:14:14.942 INFO [auth,,,] 1 --- [ main] c.c.t.t.n.i.NettyRpcClientInitializer : RPC client was down.
2019-12-10 18:14:14.949 INFO [auth,,,] 1 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-2 - Shutdown initiated...
2019-12-10 18:14:15.032 INFO [auth,,,] 1 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-2 - Shutdown completed.
2019-12-10 18:14:15.033 INFO [auth,,,] 1 --- [ main] c.codingapi.txlcn.tc.corelog.H2DbHelper : log hikariDataSource close.
2019-12-10 18:14:15.038 INFO [auth,,,] 1 --- [ main] j.LocalContainerEntityManagerFactoryBean : Closing JPA EntityManagerFactory for persistence unit 'default'
2019-12-10 18:14:15.491 INFO [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : Shutting down DiscoveryClient ...
2019-12-10 18:14:18.491 INFO [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : Unregistering ...
2019-12-10 18:14:18.497 INFO [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : DiscoveryClient_AUTH/auth-7bfff685d9-69vdg:auth:9000 - deregister status: 200
2019-12-10 18:14:18.506 INFO [auth,,,] 1 --- [ main] com.netflix.discovery.DiscoveryClient : Completed shut down of DiscoveryClient
2019-12-10 18:14:18.507 INFO [auth,,,] 1 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Shutdown initiated...
2019-12-10 18:14:18.515 INFO [auth,,,] 1 --- [ main] com.zaxxer.hikari.HikariDataSource : HikariPool-1 - Shutdown completed.
2019-12-10 18:14:18.523 INFO [auth,,,] 1 --- [ main] c.mmmedu.commons.filter.SignAuthFilter : SignAuthFilter destroy
2019-12-10 18:14:18.537 ERROR [auth,,,] 1 --- [ main] o.s.boot.SpringApplication : Application run failed

java.lang.IllegalStateException: Failed to execute ApplicationRunner
    at org.springframework.boot.SpringApplication.callRunner(SpringApplication.java:775) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at org.springframework.boot.SpringApplication.callRunners(SpringApplication.java:762) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:319) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:1215) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at org.springframework.boot.SpringApplication.run(SpringApplication.java:1204) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    at com.mmmedu.auth.AuthApplication.main(AuthApplication.java:39) [classes!/:0.0.1-SNAPSHOT]
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[na:1.8.0_201]
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[na:1.8.0_201]
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_201]
    at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_201]
    at org.springframework.boot.loader.MainMethodRunner.run(MainMethodRunner.java:48) [app.jar:0.0.1-SNAPSHOT]
    at org.springframework.boot.loader.Launcher.launch(Launcher.java:87) [app.jar:0.0.1-SNAPSHOT]
    at org.springframework.boot.loader.Launcher.launch(Launcher.java:51) [app.jar:0.0.1-SNAPSHOT]
    at org.springframework.boot.loader.JarLauncher.main(JarLauncher.java:52) [app.jar:0.0.1-SNAPSHOT]
Caused by: java.lang.IllegalStateException: There is no normal TM.
    at com.codingapi.txlcn.tc.txmsg.TMSearcher.search(TMSearcher.java:86) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.tc.txmsg.AutoTMClusterEngine.prepareToResearchTMCluster(AutoTMClusterEngine.java:83) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.tc.txmsg.AutoTMClusterEngine.onConnectFail(AutoTMClusterEngine.java:69) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.tc.txmsg.TCSideRpcInitCallBack.lambda$connectFail$3(TCSideRpcInitCallBack.java:107) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at java.util.ArrayList.forEach(ArrayList.java:1257) ~[na:1.8.0_201]
    at com.codingapi.txlcn.tc.txmsg.TCSideRpcInitCallBack.connectFail(TCSideRpcInitCallBack.java:107) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.txmsg.netty.impl.NettyRpcClientInitializer.connect(NettyRpcClientInitializer.java:125) ~[txlcn-txmsg-netty-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.txmsg.netty.impl.NettyRpcClientInitializer.init(NettyRpcClientInitializer.java:85) ~[txlcn-txmsg-netty-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.tc.txmsg.TCRpcServer.init(TCRpcServer.java:58) ~[txlcn-tc-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at com.codingapi.txlcn.common.runner.TxLcnApplicationRunner.run(TxLcnApplicationRunner.java:54) ~[txlcn-common-5.0.2.RELEASE.jar!/:5.0.2.RELEASE]
    at org.springframework.boot.SpringApplication.callRunner(SpringApplication.java:772) [spring-boot-2.1.10.RELEASE.jar!/:2.1.10.RELEASE]
    ... 13 common frames omitted

This is the log.

That's because TC and TM never connected at all. By default it tries 8 times and then gives up.

Once the retry limit is reached, it has not registered with TM.

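So what you ran into before was caused by the k8s network, right?

Yes. I deployed to a different k8s environment and the problem went away.

How exactly did you solve it? Could you explain a bit? Thanks.

I have no idea. When I hit the problem, I was developing and deploying on my own machine (it showed up while integrating istio). Later, with the same setup in the company's development environment, the problem did not occur.

Post your TC and TM configs so we can take a look.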

TM configuration: `server.port: 7970`, `tx-lcn.logger.enabled: true`, `tx-lcn.manager.host: 127.0.0.1`, `tx-lcn.manager.port: 8070`

TC configuration: `tx-lcn.client.manager-address: 127.0.0.1:8070`

This is clearly misconfigured.
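For readers following along, here are the TM and TC settings posted above, laid out as nested YAML with comments on why they are likely wrong in k8s. The values are exactly the ones from this thread; the comments are my reading, and the note on how TM binds its port is an assumption rather than something confirmed here.

```yaml
# TM settings as posted in this thread
server:
  port: 7970
tx-lcn:
  logger:
    enabled: true
  manager:
    host: 127.0.0.1   # assumption: if TM binds its RPC port to loopback only,
                      # other pods cannot reach it
    port: 8070
---
# TC settings as posted in this thread
tx-lcn:
  client:
    # Inside the TC pod, 127.0.0.1 is the TC pod itself, not the TM pod,
    # which matches the "Connect socket(/127.0.0.1:8070) fail" lines in the log.
    manager-address: 127.0.0.1:8070
```

Each pod has its own loopback interface, so a loopback address that works when TM and TC run on the same machine does not carry over to separate pods.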

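So the point is that the communication port TM exposes and the port TC connects to just need to be able to reach each other inside k8s, right? Is that the principle?

Yes, and you can't use an IP; you have to access it via FQDN.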

Thanks, I'll try changing the configuration in k8s.

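One more question. I tried it: in k8s I exposed the TM communication port under an FQDN and configured the TC connection as `tx-lcn.client.manager-address: http://tx:8070`. TC then reports an error at startup. The underlying txlcn code parses manager-address as a numeric address by default, so it breaks when given an FQDN. Did you add some other handling?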

Sorry, I misled you. TC and TM communicate via ip+port. You can start TM first, get its IP (usually one starting with 10.), and then update the client configuration so that TC can communicate with TM.

Or you could modify the tx-lcn source code.

So you still handle it with ip+port?

Yes.
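For anyone landing here later, the workaround settled on above (start TM first, read its pod IP, point TC at it) would look roughly like this in the TC configuration. This is only a sketch: `10.244.1.23` is a made-up placeholder, not a value from this thread. The real address can be read from the `IP` column of `kubectl get pods -o wide` once the TM pod is running.

```yaml
# TC configuration (sketch). 10.244.1.23 is a hypothetical pod IP used for
# illustration; replace it with the IP your TM pod actually received.
tx-lcn:
  client:
    manager-address: 10.244.1.23:8070   # <TM pod IP>:<tx-lcn.manager.port>
```

Keep in mind that a pod IP normally changes when the pod is recreated, so this value has to be updated whenever TM is redeployed.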