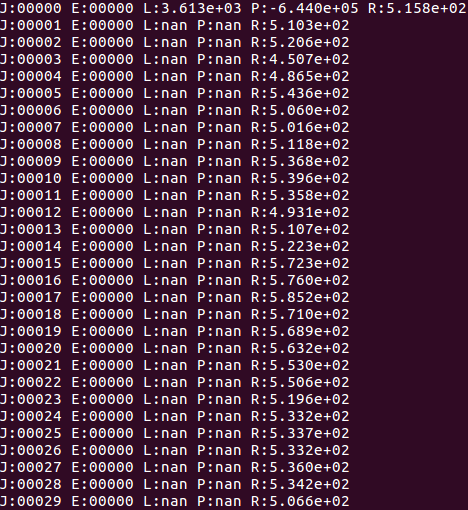

Loss and prior become NaN

Is it normal for the loss and prior to become NaN soon after training starts? I ran the 20 Newsgroups sample code.

No, I don't think it's working as intended.

Hi, try reinstalling Chainer version 1.9 instead of the latest one.

After changing "optimizer.zero_grads()" to "model.cleargrads()" in the code, I get a number instead of NaN.
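For anyone who wants to try this swap without editing every call site, here is a minimal sketch, not the project's own code. The helper name `clear_gradients` is hypothetical, and it assumes `model` is a Chainer link and `optimizer` is a Chainer optimizer. It only checks which of the two methods mentioned above is present on the objects it is given, so it does not depend on a specific Chainer version.

```python
def clear_gradients(model, optimizer):
    """Reset gradients before the backward pass.

    Hypothetical helper: prefers model.cleargrads() and falls back to
    optimizer.zero_grads() when the model does not provide cleargrads().
    Returns the name of the call that was used, for logging.
    """
    if hasattr(model, "cleargrads"):
        model.cleargrads()
        return "model.cleargrads"
    if hasattr(optimizer, "zero_grads"):
        optimizer.zero_grads()
        return "optimizer.zero_grads"
    raise AttributeError(
        "neither model.cleargrads() nor optimizer.zero_grads() is available"
    )
```

In the training loop, this would be called where "optimizer.zero_grads()" was called before, i.e. ahead of computing the loss and calling backward on it.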

Thank you, aggarwalpiush's answer is correct. I tried Chainer version 1.6.0 and failed to install it, but 1.9.0 installed successfully.

But I have no idea how to use the latest version of Chainer with the model. ttjs8423219's solution does not work in 3.5.0.

Hi, I'm also getting NaN values in loss and prior, same as austinlaurice. I even tried switching Chainer versions, to both 1.9.0 and the latest version, 7.1.0.

Can someone please help me resolve this bug?