Proposed change to Mack valuation correlation all-year confidence interval

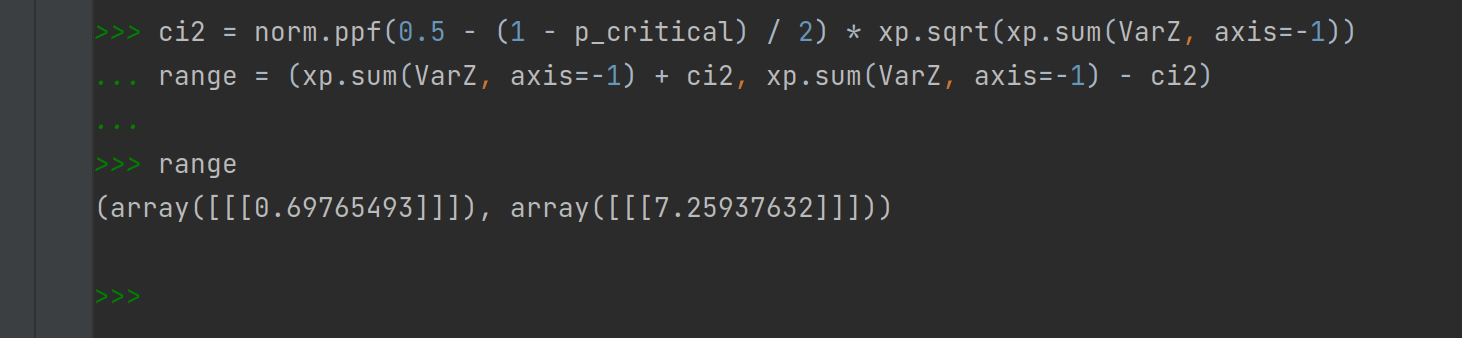

The current version of the valuation correlation algorithm centers the confidence interval on the total variance, and then compares that same total variance to the two endpoints of the interval:

    ci2 = norm.ppf(0.5 - (1 - p_critical) / 2) * xp.sqrt(xp.sum(VarZ, axis=-1))
    self.range = (xp.sum(VarZ, axis=-1) + ci2, xp.sum(VarZ, axis=-1) - ci2)
    idx = triangle.index.set_index(triangle.key_labels).index
    self.z_critical = pd.DataFrame(
        (
            (self.range[0] > VarZ.sum(axis=-1))
            | (VarZ.sum(axis=-1) > self.range[1])
        )[..., 0],
        columns=triangle.vdims,
        index=idx,
    )

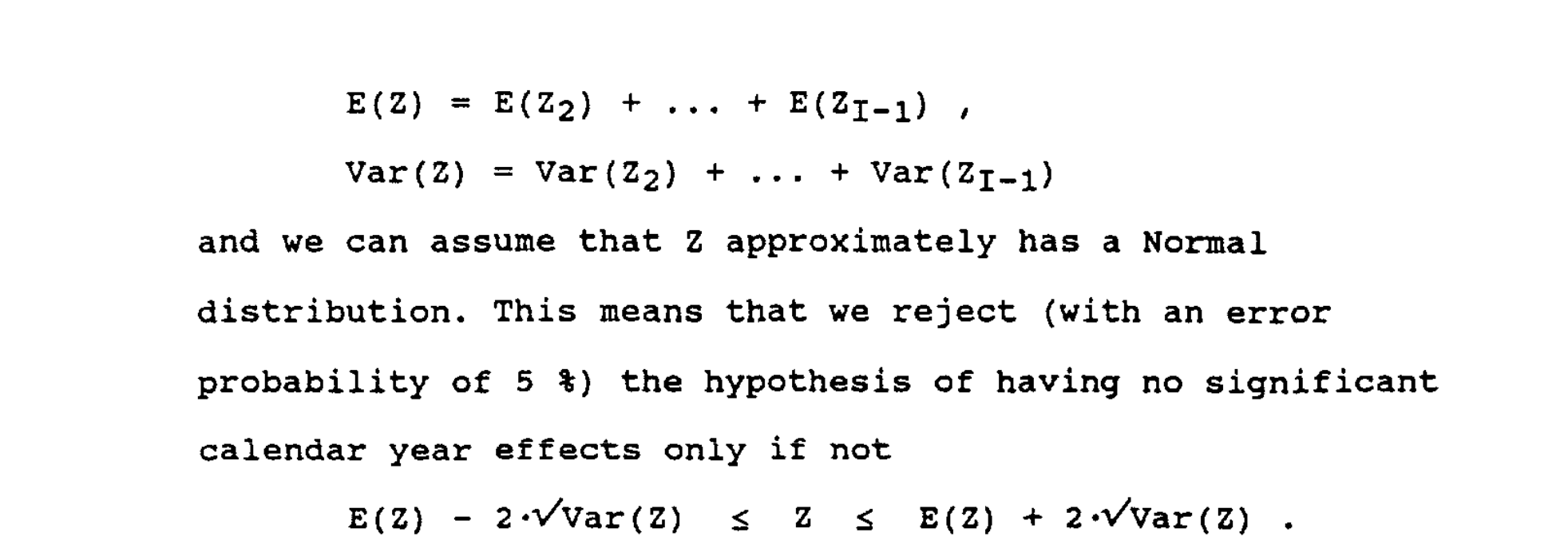
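To make the behaviour of the snippet above concrete, here is a minimal standalone sketch that reproduces the same arithmetic for a single total. The helper names `current_range` and `current_rejects`, the `p_critical=0.1` default, and the variance totals are assumptions for illustration only; they are not taken from the library or the paper.

```python
import numpy as np
from scipy.stats import norm


def current_range(var_z_total, p_critical=0.1):
    """Mimic the current interval, which is centered on the total variance."""
    # norm.ppf of a probability below 0.5 is negative, so ci2 < 0 here.
    ci2 = norm.ppf(0.5 - (1 - p_critical) / 2) * np.sqrt(var_z_total)
    return var_z_total + ci2, var_z_total - ci2


def current_rejects(var_z_total, p_critical=0.1):
    """Mimic the current comparison: the total variance against its own interval."""
    lower, upper = current_range(var_z_total, p_critical)
    return bool((lower > var_z_total) | (var_z_total > upper))


# Hypothetical variance totals, chosen only to exercise the comparison.
results = [current_rejects(v) for v in (0.5, 4.0, 25.0)]
print(results)
```

Because the value being tested is also the center of the interval, this comparison cannot flag a positive total, whatever the data look like.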

Whereas in Mack 97, the interval is constructed using the expected value and standard deviation:

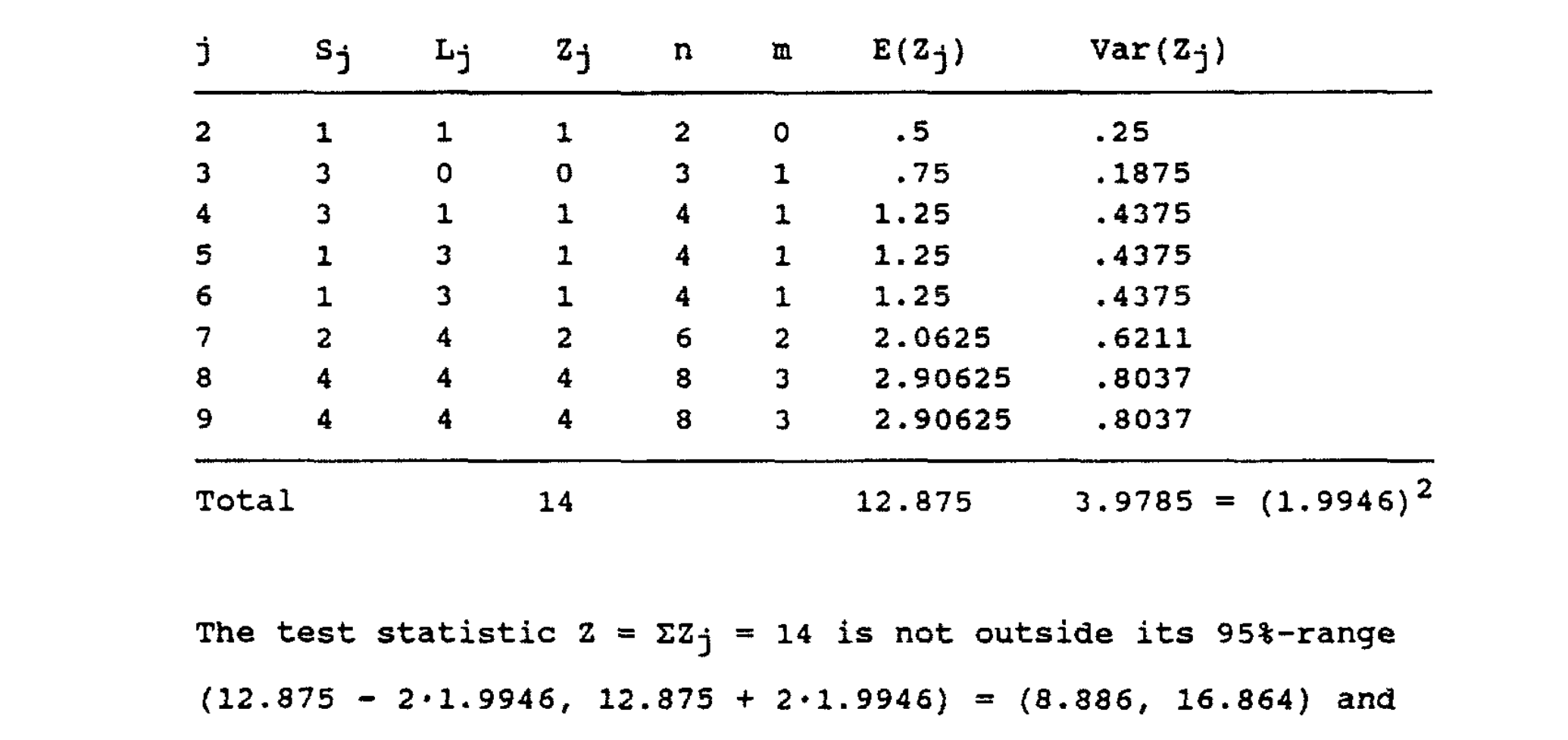

This leads to different bounds for the interval:

versus

We still fail to reject the null hypothesis and so reach the same conclusion, but the different confidence interval and test statistic make it hard to verify the code against the paper. I think some instances of VarZ were supposed to be EZ, so I propose replacing them. The results will still differ slightly from the paper, since I think Mack rounded 1.96 up to 2 when constructing the interval.
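As a rough illustration of the proposal, the sketch below builds the interval around the expected value and compares the observed statistic Z to it, which is my reading of the construction in Mack 97. The function names, the `p_critical=0.05` default, and all input numbers are hypothetical; this is not the library's code, and it uses the positive quantile `norm.ppf(1 - p_critical / 2)` rather than the negative one in the existing snippet.

```python
import numpy as np
from scipy.stats import norm


def mack_interval(e_z_total, var_z_total, p_critical=0.05):
    """Interval E[Z] +/- z * sqrt(Var[Z]), centered on the expected value."""
    z = norm.ppf(1 - p_critical / 2)  # about 1.96 for a 95% interval
    half_width = z * np.sqrt(var_z_total)
    return e_z_total - half_width, e_z_total + half_width


def rejects_null(z_total, e_z_total, var_z_total, p_critical=0.05):
    """Reject when the observed Z falls outside the interval."""
    lower, upper = mack_interval(e_z_total, var_z_total, p_critical)
    return bool((z_total < lower) | (z_total > upper))


# Hypothetical inputs, not the figures from the paper.
e_z, var_z, z_obs = 10.0, 4.0, 11.0
lower, upper = mack_interval(e_z, var_z)
rounded = (e_z - 2 * np.sqrt(var_z), e_z + 2 * np.sqrt(var_z))  # multiplier of 2
print(lower, upper, rounded, rejects_null(z_obs, e_z, var_z))
```

With these inputs the exact quantile gives a half-width of about 3.92, against 4.0 with a multiplier of 2, which is the size of discrepancy to expect when checking against the paper.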

Sorry for the late response, @genedan. Your proposal looks great. I am all for correcting issues that make us deviate from the paper, and it looks like you've gone through it with a fine-toothed comb.