cannot unconditionally set status in `__init__`

A charm cannot unconditionally set status in `__init__`.

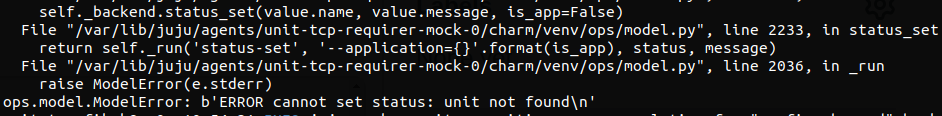

The reason is that if the unit is being torn down, `status_set` raises an error, as in the screenshot above.

Interestingly enough, it looks like the last events the charm received did NOT include the teardown sequence:

| timestamp | tcp-requirer-mock/0 |
|-----------|---------------------|
| 10:50:15 | ingress-per-unit-relation-changed |
| 10:50:15 | ingress-per-unit-relation-joined |
| 10:50:10 | ingress-per-unit-relation-created |
| 10:49:59 | update-status |
| 10:48:41 | ingress-per-unit-relation-departed |
| 10:45:55 | tcp-server-pebble-ready |
| 10:45:53 | ingress-per-unit-relation-changed |
| 10:45:52 | ingress-per-unit-relation-changed |
| 10:45:52 | ingress-per-unit-relation-joined |
| 10:45:52 | ingress-per-unit-relation-created |

I'm not sure if this is related.

The point remains: should we warn against setting status in `__init__`, or anywhere else the unit is not guaranteed to exist? Should we raise a more informative error message? Or should we silently do nothing when someone tries to set the status of a dying unit? After all, we don't really care at that point; the traceback is just clutter in the debug-log.

We do many things in `__init__` as part of charm library instantiation (prometheus is one example), and a lot can happen inside those charm libraries.

Not setting status inside charm libraries is only a convention.

It seems to me that silently ignoring the error, or logging a warning, is better than a traceback.

For this particular case, it might be worth just catching the exception and putting an entry in the debug log, especially since charms have no way of "knowing" in advance that `status-set` will fail.

- It has been a while, so we should probably try to reproduce this on Juju 3 and see what events we get.

- Probably the least invasive change would be to have ops catch the `ModelError` raised by `status-set` and, if the message includes "unit not found", re-raise a more specific exception subclass that helps the charmer debug: "Juju reported unit not found -- is the unit being torn down?" or similar.

@PietroPasotti What's the actual concern here: noise in the debug log? The unit blipping into error state for a second during teardown? Or something else? To me the current behaviour seems okay, but just making sure I'm not missing something.

I'm struggling to reproduce this; I need some help here, @PietroPasotti.

I tried setting the status in `__init__` and then removing a unit. I even tried setting it many times in a for loop and then removing units, killing pods, etc., but still couldn't reproduce it.

Simple test charm used:

```python
#!/usr/bin/env python3

import ops


class SampleK8SCharm(ops.CharmBase):
    def __init__(self, *args):
        super().__init__(*args)
        # here also tried in a for loop
        self.unit.status = ops.BlockedStatus("test")
        self.framework.observe(self.on['httpbin'].pebble_ready, self._on_httpbin_pebble_ready)
        # also tried setting status in a handler for `self.on.remove`

    def _on_httpbin_pebble_ready(self, event: ops.PebbleReadyEvent):
        container = event.workload
        container.add_layer("httpbin", self._pebble_layer, combine=True)
        container.replan()
        self.unit.status = ops.ActiveStatus()

    @property
    def _pebble_layer(self) -> ops.pebble.LayerDict:
        return {
            "summary": "httpbin layer",
            "description": "pebble config layer for httpbin",
            "services": {
                "httpbin": {
                    "override": "replace",
                    "summary": "httpbin",
                    "command": "gunicorn -b 0.0.0.0:80 httpbin:app -k gevent",
                    "startup": "enabled",
                    "environment": {
                        "GUNICORN_CMD_ARGS": f"--log-level {self.model.config['log-level']}"
                    },
                }
            },
        }


if __name__ == "__main__":
    ops.main(SampleK8SCharm)
```

The charm this error originally showed up in was https://github.com/canonical/traefik-k8s-operator/blob/main/tests/integration/testers/tcp/src/charm.py

I haven't tried it since; possibly the issue is gone in the latest Juju.

As you can see, that charm sets active status in `__init__`. I recall it erroring out during teardown, but I can't remember precisely at which stage.


Hi Pietro,

I tried with the traefik-k8s charm (latest/stable, revision 169) on Juju 3.1.7-genericlinux-amd64 and 3.4.0-genericlinux-amd64, but could not reproduce it.

I searched for the error message: it comes from Juju, not from the Operator Framework (OF), and it seems it could happen in theory: https://github.com/juju/juju/blob/3a43e3202323021dd3f9ee70ef3f28b7691cc153/state/status_unit_test.go#L133.
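For context on how a Juju-side error ends up as a Python traceback: ops runs hook tools such as `status-set` as subprocesses and, roughly, turns a non-zero exit into an `ops.ModelError` carrying the tool's stderr. The sketch below is a simplified model of that path, not ops' actual code; `run_hook_tool`, `HookToolError`, and the fake tool (a Python one-liner printing an illustrative "unit not found" message) are all hypothetical.

```python
import subprocess
import sys


class HookToolError(Exception):
    """Hypothetical stand-in for ops.ModelError."""


def run_hook_tool(*args: str) -> str:
    """Run a command, converting a non-zero exit into HookToolError."""
    try:
        result = subprocess.run(args, capture_output=True, text=True, check=True)
    except subprocess.CalledProcessError as e:
        # The tool's stderr (Juju's error text) becomes the exception message.
        raise HookToolError(e.stderr.strip()) from e
    return result.stdout


# Illustrative fake "status-set": sys.exit(str) writes the text to stderr
# and exits with status 1, like a hook tool rejecting the call.
FAKE_STATUS_SET = [sys.executable, "-c", "import sys; sys.exit('ERROR unit not found')"]
```

Calling `run_hook_tool(*FAKE_STATUS_SET)` raises `HookToolError("ERROR unit not found")`; the charm only sees the message Juju chose to emit.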

I tried adding and removing units, and the status transitions look fine: ready -> maintenance -> terminated.


@benhoyt, shall we close this issue for now?

Yeah, let's close this for now, presuming the issue has gone away in recent versions of Juju. If we get a clear repro in future, happy to reopen.