Uncensor

The project is very good, but it looks like it has some censorship around nudity. Is it possible to turn off all the filters in the models used?

I believe this implementation doesn't have any nudity filters built in.

@chaos4455 we don't use any safety checkers for output images, but we hope that users will not use our project to create inappropriate or illegal content.

@bes-dev, thanks for the reply. "but we hope that users will not use our project to create inappropriate or illegal content." I hope so too; unfortunately, as I see it, we have no control over that. You did very good work, very good.

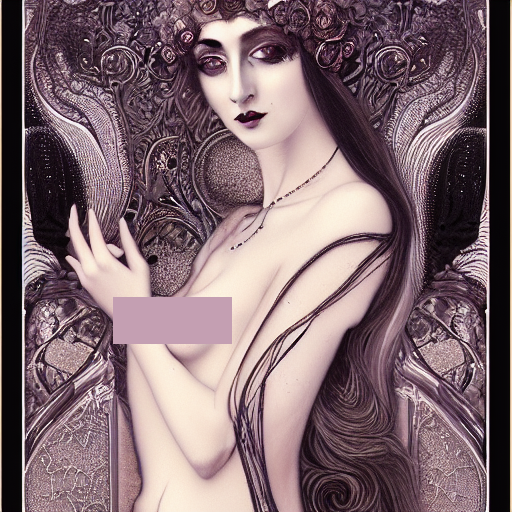

Here is an example. I used some input with sexy and sensual words such as "nude", and I don't get any nude result.

Examples: It looks like the model tries to censor nude parts or words related to those themes.

Otherwise, if we put in something random, the results look pretty awesome.

Here is an example: the model tries to hide the sexual body parts. Thanks again!


This is because not much explicit material is included in the LAION dataset (on which SD was trained), and it was probably filtered further before training SD. So SD as is is not suitable as a porn generator. (The architecture itself is quite capable of it, but a proper dataset and a lot of computing power would be required to achieve this goal.)

Thanks for the answer. Is it possible to edit the code of this project to use a more suitable dataset? I don't have further knowledge about this, but if you give me some good information I think I'm capable of doing that. Is it possible to use other datasets in this project? Can you please help me do that? I see that when I start the project for the first time it downloads a 3 GB dataset. Is it possible to force the project to use another one more suitable for this?

There is no censorship happening here. I have folders full of images containing artistic nudes, both intentional and accidental. I can easily assure you that nothing is being censored.

...Now, with that said, this is not a random porn generation tool, and I'd hate to see problems arising from its use as such. Honestly, what you're likely seeing is a limitation of the training set of images, not intentional censorship.

The original LAION-5B dataset does have an "NSFW" score:

Additionally, we provide several nearest neighbor indices, an improved web interface for exploration & subset creation as well as detection scores for watermark and NSFW.

I do not have a reference for that, but I think Stable Diffusion was trained on a subset of LAION-5B with the NSFW-tagged content removed (or better: not included). As such, the model has "seen" less nudity and explicit content than it could have.

That said, it certainly has seen nudity and can be coerced into reproducing it with (un-)appropriate prompts.
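To illustrate the filtering guess above, here is a minimal sketch of dropping samples by an NSFW score. The field name `nsfw_score`, the 0.5 threshold, and the sample records are all made up for illustration; I don't know the actual metadata layout or the cut-off (if any) used for SD training.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    url: str
    caption: str
    nsfw_score: float  # hypothetical field: 0.0 (safe) to 1.0 (explicit)


def keep_safe(samples, max_nsfw=0.5):
    """Return only the samples whose score is at or below the threshold."""
    return [s for s in samples if s.nsfw_score <= max_nsfw]


# Made-up records, only to show the effect of the filter.
samples = [
    Sample("https://example.com/a.jpg", "a landscape painting", 0.02),
    Sample("https://example.com/b.jpg", "a classical statue", 0.40),
    Sample("https://example.com/c.jpg", "explicit photo", 0.97),
]

subset = keep_safe(samples)
print(len(subset), "of", len(samples), "samples kept")
```

A model trained only on `subset` never sees the filtered-out samples, which would explain weaker results for such prompts without any filter running at generation time.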

@chaos4455 The thing is that it is not as simple as using "a more suitable dataset". There literally is no (open) alternative to the Stable Diffusion model that this project relies on. And (if I am not mistaken) that model cost USD 600,000 to train when it was first released.

(Sidenote: am I the only one not trying to produce porn with stable diffusion? :-) )

Yeah, thanks, that's right. For the moment this is pretty impressive. I'm using an i3-9100F and the performance is quite impressive too: around 6.3 sec, for instance. Thanks for the information you gave us.

However, fine-tuning should be possible, and it is not as expensive as the initial training. And we already have proof of it: https://github.com/harubaru/waifu-diffusion