Help with metrics functions

I'm adding some basic support for cross-validation (targeting metrics like p@k, map@k and ndcg@k to start off with). It's a work in progress right now, but the initial changes are here: https://github.com/benfred/implicit/compare/eval

With this branch you can do something like

```python
from implicit.evaluation import precision_at_k, train_test_split
from implicit.als import AlternatingLeastSquares
from implicit.datasets.movielens import get_movielens

movies, ratings = get_movielens("1m")
train, test = train_test_split(ratings)

model = AlternatingLeastSquares(factors=128, regularization=20, iterations=15)
model.fit(train)

p = precision_at_k(model, train.T.tocsr(), test.T.tocsr(), K=10, num_threads=4)
```

You can check out the eval branch if you want to try this out today (the example above should work with this branch). I'm hoping to have this finished up later this week.
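For readers unfamiliar with p@k, here is a minimal pure-Python sketch of the textbook definition: the fraction of the top-k recommended items that the user actually interacted with in the held-out data. The function name `toy_precision_at_k` and the item ids are hypothetical, and the eval branch may normalise the score differently, so treat this as an illustration of the idea rather than the library's implementation.

```python
def toy_precision_at_k(recommended, relevant, k):
    """Fraction of the first k recommended items found in the relevant set.

    recommended: list of item ids, best first (hypothetical data).
    relevant: set of item ids the user interacted with in the test split.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = recommended[:k]
    hits = sum(1 for item in top_k if item in relevant)
    return hits / k


recommended = [10, 20, 30, 40, 50]
relevant = {20, 50, 60}
score = toy_precision_at_k(recommended, relevant, 5)
print(score)
```

With these toy inputs, two of the five recommended items (20 and 50) are in the relevant set.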

Hi @benfred, how do I get the recommendations for a user if the model was trained on a user_item_rating matrix?

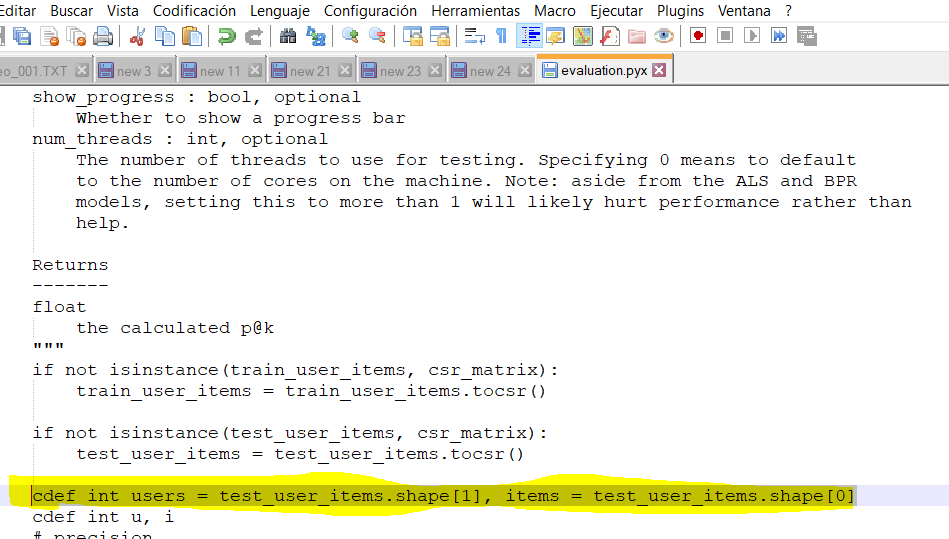

As I understand it, the model in the initial guide is trained on the item_user_data matrix, while the ranking_metrics_at_k function takes train_user_items as input. On the next line it reads the number of users and items from the matrix shape: `cdef int users = test_user_items.shape[0], items = test_user_items.shape[1]`

Would modifying the file evaluation.pyx, as highlighted in the image, solve it? Please help.
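To make the orientation question concrete, here is a small sketch using only SciPy, not implicit itself. It assumes a toy item-user matrix (items as rows, users as columns) and shows that `.T.tocsr()`, the same call used in the example earlier in this thread, yields a user-item matrix whose `shape[0]` is the number of users. The matrix values are made up for illustration, and whether transposing alone is enough without editing evaluation.pyx is an assumption I have not verified against the branch.

```python
import numpy as np
from scipy.sparse import csr_matrix

# Toy item-user matrix: 3 items (rows) x 4 users (columns). Values are made up.
item_user_data = csr_matrix(np.array([
    [1, 0, 2, 0],
    [0, 3, 0, 0],
    [4, 0, 0, 5],
]))

# Transposing flips the orientation: one row per user, one column per item.
user_item_data = item_user_data.T.tocsr()

# Same reading of the shape as in evaluation.pyx: rows are users, columns are items.
users, items = user_item_data.shape
print(users, items)
```

If the matrix passed to the metric function is already in user-item orientation, `shape[0]` and `shape[1]` line up with users and items as that line expects.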

Thank you!