Is it possible to make a skip connection hypernetwork?

Is it possible to add a fully manual mode that lets the user define the connections and activation function for every layer? A ResBlock-like architecture or skip connections might be useful for hypernetworks.

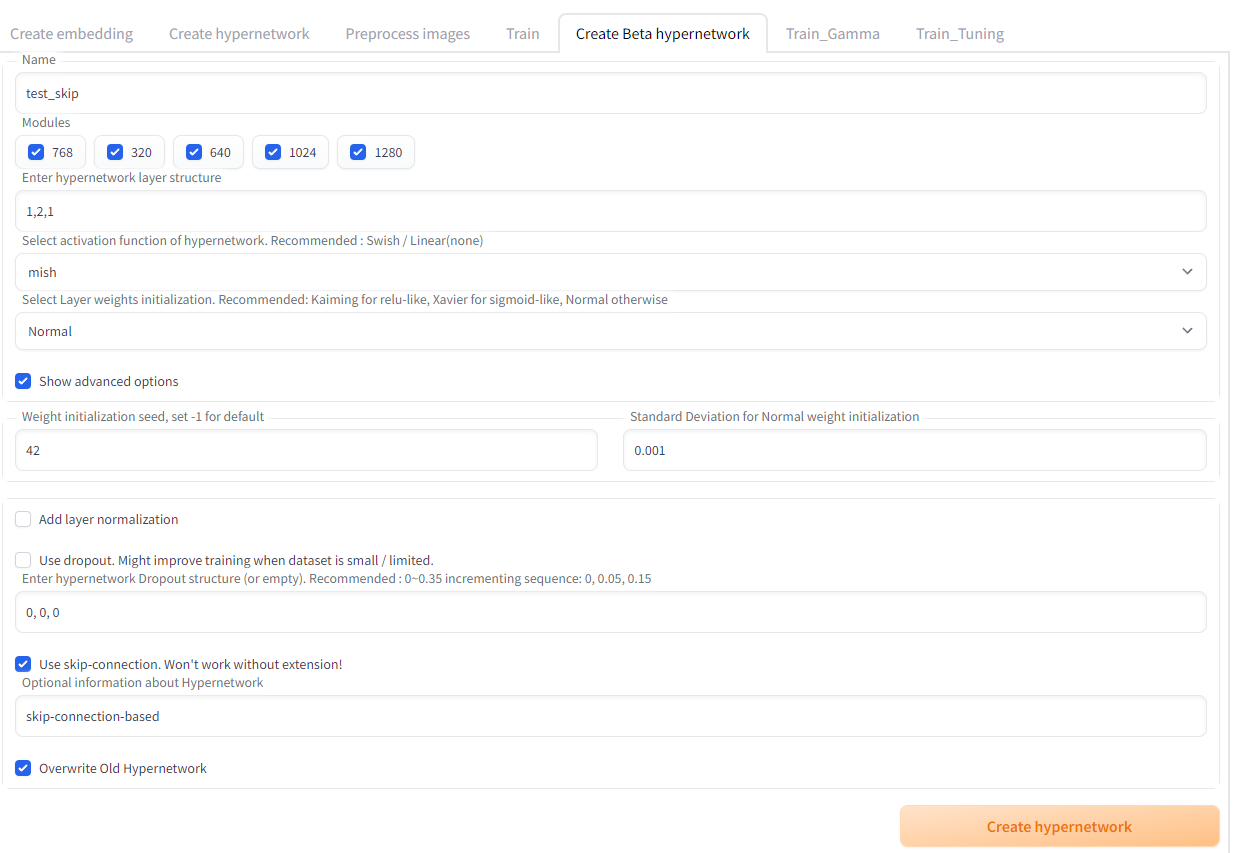

Yes, but the problem is the UI. I could add a way to load a model, but creating one is a different story. I'll try to make it save and load any arbitrary model structure, but creating the structure would be homework for users.

https://github.com/aria1th/Hypernetwork-MonkeyPatch-Extension/tree/residual-connection

Skip-connection and ResBlock architectures for normal tensors are currently available in the branch linked above.

There are a few things you need to know:

Since it 'interpolates' the attention vector, setting a value lower than 1 loses information in the vector, because the original vector cannot be recovered from the compressed form.

(Maybe this could be solved somehow with a model that compresses and decompresses attention?)

The default standard deviation is too big for attention transformation. I suggest using a lower stdev.

The original webui does not support these types of hypernetworks.
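To illustrate the points about compression and standard deviation, here is a minimal pure-Python sketch. It is not the extension's actual code: `ResidualHyperBlock`, `multiplier`, and `std` are hypothetical names, and it assumes the "value lower than 1" refers to a layer-size multiplier that shrinks the hidden width. The block computes `x + up(relu(down(x)))`, so the skip path carries the full vector even when the branch is narrower than the input, and a small init stdev keeps the output close to the input.

```python
import random

def make_linear(n_in, n_out, std, rng):
    """Weight matrix (n_out x n_in) drawn from N(0, std^2); bias omitted for brevity."""
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]

def apply_linear(weight, x):
    """Matrix-vector product for a list-of-rows weight matrix."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]

def relu(x):
    return [max(0.0, v) for v in x]

class ResidualHyperBlock:
    """Hypothetical skip-connection block: out = x + up(relu(down(x)))."""

    def __init__(self, dim, multiplier, std, seed=0):
        rng = random.Random(seed)
        # A multiplier below 1 gives a hidden layer narrower than the input,
        # so the branch alone cannot reproduce every input vector.
        hidden = max(1, int(dim * multiplier))
        self.down = make_linear(dim, hidden, std, rng)
        self.up = make_linear(hidden, dim, std, rng)

    def branch(self, x):
        return apply_linear(self.up, relu(apply_linear(self.down, x)))

    def forward(self, x):
        # Skip connection: the untouched input is added back to the branch output.
        return [a + b for a, b in zip(x, self.branch(x))]

def deviation(block, x):
    """L2 norm of forward(x) - x, i.e. how far the block moves the vector."""
    y = block.forward(x)
    return sum((a - b) ** 2 for a, b in zip(x, y)) ** 0.5

rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(16)]   # stand-in for an attention vector
small = ResidualHyperBlock(16, 0.5, std=0.01)  # low init stdev
large = ResidualHyperBlock(16, 0.5, std=1.0)   # high init stdev
print("deviation with std=0.01:", deviation(small, x))
print("deviation with std=1.0: ", deviation(large, x))
```

In this toy setup the low-stdev block starts out as a near-identity mapping, while the high-stdev block distorts the vector heavily before any training has happened.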

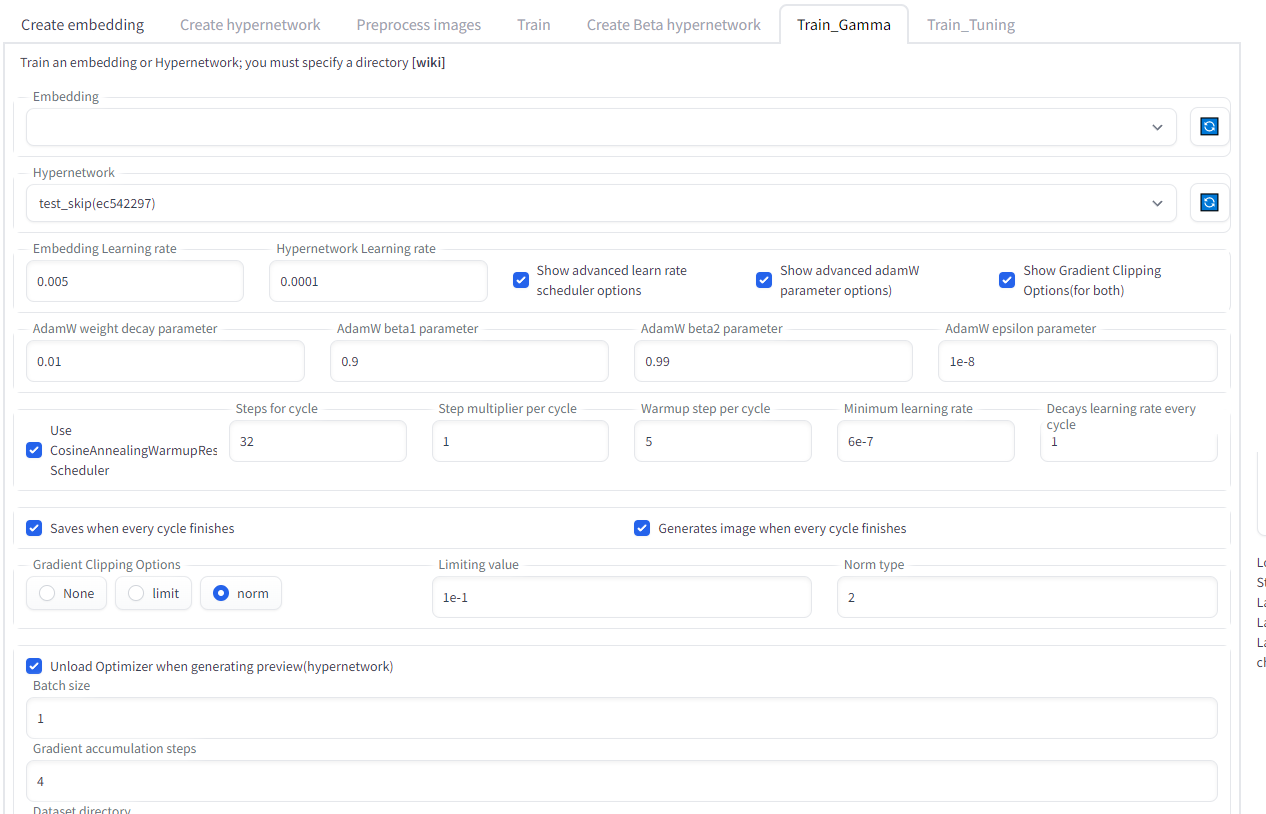

Training works well anyway, but you might have to struggle with the settings.

Thank you for working on this. I will try it.

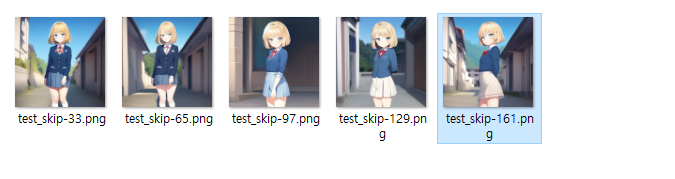

It looks like it works well.

Epoch 0 (Cycle 0 after warm up step 298)

Epoch 39 (Cycle 9 step 11920)

Epoch 39 (Cycle 9 step 11920)

Skip-connection hypernetworks trained on the vanilla NovelAI model work excellently with a pre-trained DreamBooth model.