ml-stable-diffusion


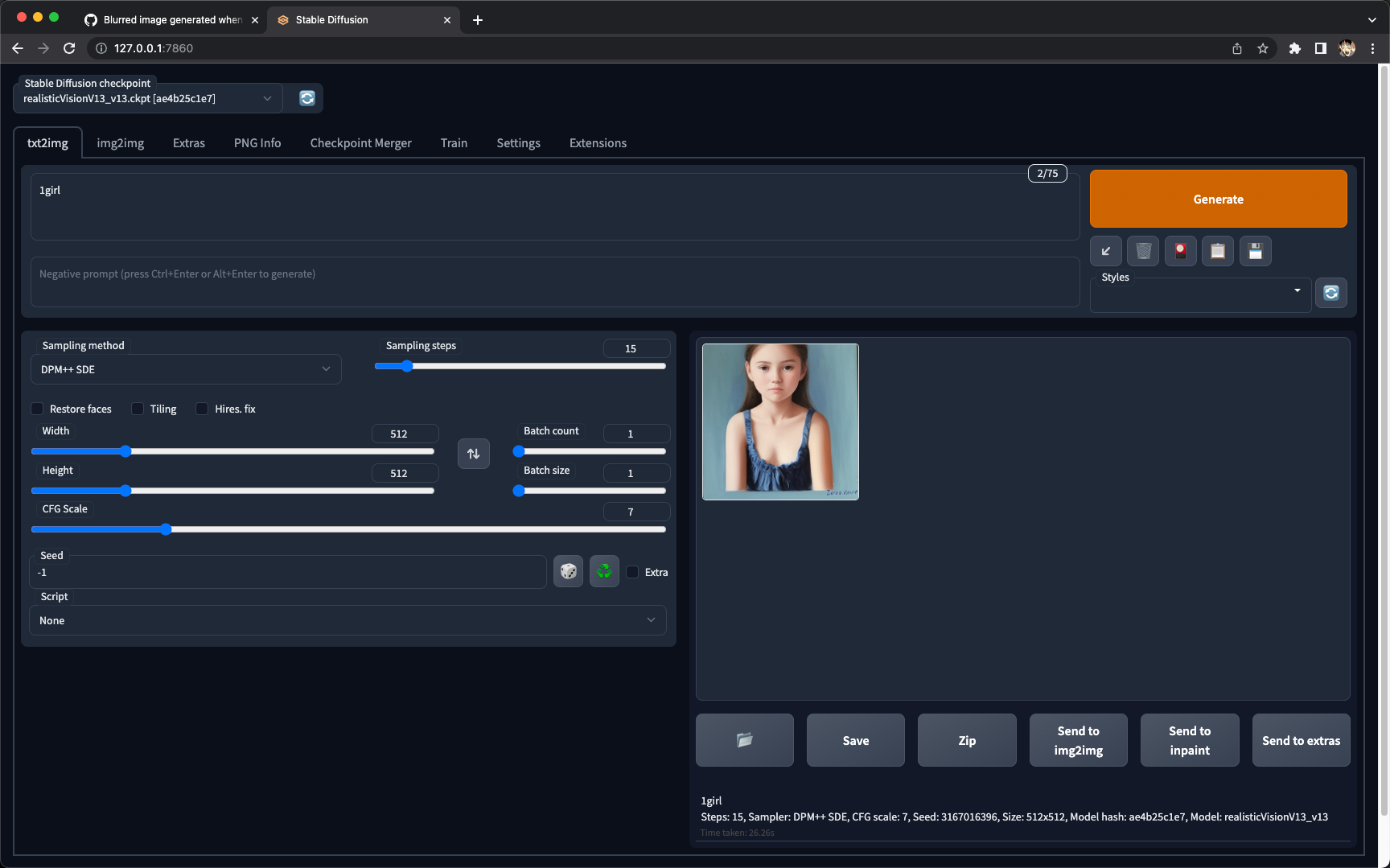

Blurred image generated using converted safetensor models

Running Latest Version

- [X] I am running the latest version

Processor

M1 Pro (or later)

Intel processor

Computer:

Memory

16GB

What happened?

I converted a Civitai model to Core ML. I downloaded the safetensors file from https://civitai.com/models/4201/realistic-vision-v13 and followed this guide: https://github.com/godly-devotion/MochiDiffusion/wiki/How-to-convert-ckpt-or-safetensors-files-to-Core-ML
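For reproducibility, the `torch2coreml` invocation in the log below can be rebuilt from Python. This is a minimal sketch that only assembles and prints the same argument list; the helper name `build_torch2coreml_cmd` is hypothetical, and the flags are copied from the command in the log, not from a full list of supported options.

```python
import shlex
import sys
from typing import List


def build_torch2coreml_cmd(model_dir: str, out_dir: str,
                           attention: str = "SPLIT_EINSUM") -> List[str]:
    """Hypothetical helper: rebuild the conversion command used in this report."""
    return [
        sys.executable, "-m", "python_coreml_stable_diffusion.torch2coreml",
        # Convert all four sub-models, as in the original command.
        "--convert-unet", "--convert-text-encoder",
        "--convert-vae-decoder", "--convert-vae-encoder",
        "--model-version", model_dir,
        # Compile .mlmodelc files and tokenizer assets into <out_dir>/Resources.
        "--bundle-resources-for-swift-cli",
        "--attention-implementation", attention,
        "-o", out_dir,
    ]


if __name__ == "__main__":
    cmd = build_torch2coreml_cmd("./diffusers_model", "./real_split-einsum_compiled")
    # Print a shell-quoted version; run it with subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Keeping the attention implementation as a parameter makes it easy to re-run the conversion with a different value (for example `ORIGINAL`) and compare the resulting images.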

Problem: images generated with the converted model come out blurred (see the image above).

Conversion log:

```
(coreml_stable_diffusion) ➜ ml-stable-diffusion git:(main) ✗ python -m python_coreml_stable_diffusion.torch2coreml --convert-unet --convert-text-encoder --convert-vae-decoder --convert-vae-encoder --model-version "./diffusers_model" --bundle-resources-for-swift-cli --attention-implementation SPLIT_EINSUM -o "./real_split-einsum_compiled"
INFO:__main__:Initializing StableDiffusionPipeline with ./diffusers_model..
/Users/jo32/miniforge3/envs/coreml_stable_diffusion/lib/python3.8/site-packages/transformers/models/clip/feature_extraction_clip.py:28: FutureWarning: The class CLIPFeatureExtractor is deprecated and will be removed in version 5 of Transformers. Please use CLIPImageProcessor instead.
warnings.warn(
INFO:__main__:Done.
INFO:__main__:Converting vae_decoder
/Users/jo32/miniforge3/envs/coreml_stable_diffusion/lib/python3.8/site-packages/diffusers/models/resnet.py:109: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
assert hidden_states.shape[1] == self.channels
/Users/jo32/miniforge3/envs/coreml_stable_diffusion/lib/python3.8/site-packages/diffusers/models/resnet.py:122: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
if hidden_states.shape[0] >= 64:
INFO:__main__:Converting vae_decoder to CoreML..
Converting PyTorch Frontend ==> MIL Ops: 0%| | 0/426 [00:00<?, ? ops/s]WARNING:__main__:Casted the `beta`(value=0.0) argument of `baddbmm` op from int32 to float32 dtype for conversion!
Converting PyTorch Frontend ==> MIL Ops: 100%|▉| 425/426 [00:00<00:00, 2822.27 o
Running MIL Common passes: 100%|███████████| 40/40 [00:00<00:00, 79.11 passes/s]
Running MIL FP16ComputePrecision pass: 100%|█| 1/1 [00:00<00:00, 1.67 passes/s]
Running MIL Clean up passes: 100%|█████████| 11/11 [00:02<00:00, 4.47 passes/s]
INFO:__main__:Saved vae_decoder model to ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_vae_decoder.mlpackage
INFO:__main__:Saved vae_decoder into ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_vae_decoder.mlpackage
INFO:__main__:Converted vae_decoder
INFO:__main__:Converting vae_encoder
/Users/jo32/miniforge3/envs/coreml_stable_diffusion/lib/python3.8/site-packages/diffusers/models/resnet.py:182: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
assert hidden_states.shape[1] == self.channels
/Users/jo32/miniforge3/envs/coreml_stable_diffusion/lib/python3.8/site-packages/diffusers/models/resnet.py:187: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
assert hidden_states.shape[1] == self.channels
INFO:__main__:Converting vae_encoder to CoreML..
Converting PyTorch Frontend ==> MIL Ops: 0%| | 0/370 [00:00<?, ? ops/s]WARNING:__main__:Casted the `beta`(value=0.0) argument of `baddbmm` op from int32 to float32 dtype for conversion!
Converting PyTorch Frontend ==> MIL Ops: 100%|▉| 369/370 [00:00<00:00, 3335.20 o
Running MIL Common passes: 100%|██████████| 40/40 [00:00<00:00, 109.98 passes/s]
Running MIL FP16ComputePrecision pass: 100%|█| 1/1 [00:00<00:00, 2.47 passes/s]
Running MIL Clean up passes: 100%|█████████| 11/11 [00:01<00:00, 7.38 passes/s]
INFO:__main__:Saved vae_encoder model to ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_vae_encoder.mlpackage
INFO:__main__:Saved vae_encoder into ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_vae_encoder.mlpackage
INFO:__main__:Converted vae_encoder
INFO:__main__:Converting unet
INFO:__main__:Attention implementation in effect: AttentionImplementations.SPLIT_EINSUM
INFO:__main__:Sample inputs spec: {'sample': (torch.Size([2, 4, 64, 64]), torch.float32), 'timestep': (torch.Size([2]), torch.float32), 'encoder_hidden_states': (torch.Size([2, 768, 1, 77]), torch.float32)}
INFO:__main__:JIT tracing..
/Users/jo32/Projects/xcode/ml-stable-diffusion/python_coreml_stable_diffusion/layer_norm.py:61: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
assert inputs.size(1) == self.num_channels
INFO:__main__:Done.
INFO:__main__:Converting unet to CoreML..
WARNING:coremltools:Tuple detected at graph output. This will be flattened in the converted model.
Converting PyTorch Frontend ==> MIL Ops: 0%| | 0/7876 [00:00<?, ? ops/s]WARNING:coremltools:Saving value type of int64 into a builtin type of int32, might lose precision!
Converting PyTorch Frontend ==> MIL Ops: 100%|▉| 7874/7876 [00:01<00:00, 5011.63
Running MIL Common passes: 100%|███████████| 40/40 [00:08<00:00, 4.47 passes/s]
Running MIL FP16ComputePrecision pass: 100%|█| 1/1 [00:23<00:00, 23.51s/ passes]
Running MIL Clean up passes: 100%|█████████| 11/11 [02:57<00:00, 16.10s/ passes]
INFO:__main__:Saved unet model to ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_unet.mlpackage
INFO:__main__:Saved unet into ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_unet.mlpackage
INFO:__main__:Converted unet
INFO:__main__:Converting text_encoder
INFO:__main__:Sample inputs spec: {'input_ids': (torch.Size([1, 77]), torch.float32)}
INFO:__main__:JIT tracing text_encoder..
/Users/jo32/miniforge3/envs/coreml_stable_diffusion/lib/python3.8/site-packages/transformers/models/clip/modeling_clip.py:284: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
if attn_weights.size() != (bsz * self.num_heads, tgt_len, src_len):
/Users/jo32/miniforge3/envs/coreml_stable_diffusion/lib/python3.8/site-packages/transformers/models/clip/modeling_clip.py:292: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
if causal_attention_mask.size() != (bsz, 1, tgt_len, src_len):
/Users/jo32/miniforge3/envs/coreml_stable_diffusion/lib/python3.8/site-packages/transformers/models/clip/modeling_clip.py:324: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
if attn_output.size() != (bsz * self.num_heads, tgt_len, self.head_dim):
INFO:__main__:Done.
INFO:__main__:Converting text_encoder to CoreML..
WARNING:coremltools:Tuple detected at graph output. This will be flattened in the converted model.
Converting PyTorch Frontend ==> MIL Ops: 44%|▍| 361/814 [00:00<00:00, 3608.45 oWARNING:coremltools:Saving value type of int64 into a builtin type of int32, might lose precision!
Converting PyTorch Frontend ==> MIL Ops: 100%|▉| 812/814 [00:00<00:00, 4170.30 o
Running MIL Common passes: 100%|███████████| 40/40 [00:00<00:00, 87.37 passes/s]
Running MIL FP16ComputePrecision pass: 100%|█| 1/1 [00:00<00:00, 1.09 passes/s]
Running MIL Clean up passes: 100%|█████████| 11/11 [00:02<00:00, 4.33 passes/s]
INFO:__main__:Saved text_encoder model to ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_text_encoder.mlpackage
INFO:__main__:Saved text_encoder into ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_text_encoder.mlpackage
INFO:__main__:Converted text_encoder
INFO:__main__:Bundling resources for the Swift CLI
INFO:__main__:Created ./real_split-einsum_compiled/Resources for Swift CLI assets
INFO:__main__:Compiling ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_text_encoder.mlpackage
/Users/jo32/Projects/xcode/ml-stable-diffusion/real_split-einsum_compiled/Resources/Stable_Diffusion_version_._diffusers_model_text_encoder.mlmodelc/coremldata.bin
INFO:__main__:Compiled ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_text_encoder.mlpackage to ./real_split-einsum_compiled/Resources/TextEncoder.mlmodelc
INFO:__main__:Compiling ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_vae_decoder.mlpackage
/Users/jo32/Projects/xcode/ml-stable-diffusion/real_split-einsum_compiled/Resources/Stable_Diffusion_version_._diffusers_model_vae_decoder.mlmodelc/coremldata.bin
INFO:__main__:Compiled ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_vae_decoder.mlpackage to ./real_split-einsum_compiled/Resources/VAEDecoder.mlmodelc
INFO:__main__:Compiling ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_vae_encoder.mlpackage
/Users/jo32/Projects/xcode/ml-stable-diffusion/real_split-einsum_compiled/Resources/Stable_Diffusion_version_._diffusers_model_vae_encoder.mlmodelc/coremldata.bin
INFO:__main__:Compiled ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_vae_encoder.mlpackage to ./real_split-einsum_compiled/Resources/VAEEncoder.mlmodelc
INFO:__main__:Compiling ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_unet.mlpackage
/Users/jo32/Projects/xcode/ml-stable-diffusion/real_split-einsum_compiled/Resources/Stable_Diffusion_version_._diffusers_model_unet.mlmodelc/coremldata.bin
INFO:__main__:Compiled ./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_unet.mlpackage to ./real_split-einsum_compiled/Resources/Unet.mlmodelc
WARNING:__main__:./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_unet_chunk1.mlpackage not found, skipping compilation to UnetChunk1.mlmodelc
WARNING:__main__:./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_unet_chunk2.mlpackage not found, skipping compilation to UnetChunk2.mlmodelc
WARNING:__main__:./real_split-einsum_compiled/Stable_Diffusion_version_._diffusers_model_safety_checker.mlpackage not found, skipping compilation to SafetyChecker.mlmodelc
INFO:__main__:Downloading and saving tokenizer vocab.json
INFO:__main__:Done
INFO:__main__:Downloading and saving tokenizer merges.txt
INFO:__main__:Done
INFO:__main__:Bundled resources for the Swift CLI
```
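To confirm the bundle is complete before generating images, the `Resources` folder can be checked for the files the log reports as compiled or saved. This is a minimal sketch: the expected names are taken from the "Compiled ... to ..." and tokenizer lines of this log (the skipped `UnetChunk1`/`UnetChunk2`/`SafetyChecker` models are deliberately left out), and `missing_resources` is a hypothetical helper, not part of ml-stable-diffusion.

```python
import sys
from pathlib import Path
from typing import List

# File names reported in this conversion log (an assumption for other runs:
# chunked UNets or a safety checker would add more entries).
EXPECTED_RESOURCES = [
    "TextEncoder.mlmodelc",
    "Unet.mlmodelc",
    "VAEDecoder.mlmodelc",
    "VAEEncoder.mlmodelc",
    "vocab.json",
    "merges.txt",
]


def missing_resources(resources_dir: str) -> List[str]:
    """Return the expected entries that do not exist under resources_dir."""
    root = Path(resources_dir)
    return [name for name in EXPECTED_RESOURCES if not (root / name).exists()]


if __name__ == "__main__":
    # Default path matches the -o directory used in the command above.
    target = sys.argv[1] if len(sys.argv) > 1 else "./real_split-einsum_compiled/Resources"
    missing = missing_resources(target)
    print("all expected resources present" if not missing else f"missing: {missing}")
```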

I have tried the generated ckpt in the AUTOMATIC1111 webui and the results are fine, so I assume the model itself is fine.
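One quick sanity check on the Core ML side is whether the UNet "Sample inputs spec" in the log matches what an SD 1.x-style model rendering at 512x512 would use. The sketch below derives those shapes from a few parameters; all defaults (512 px output, VAE downscale factor 8, 4 latent channels, 768-wide CLIP text embeddings, 77 tokens, batch 2 for the unconditional and conditional passes of classifier-free guidance) are assumptions about the source checkpoint, and the function name is hypothetical.

```python
from typing import Dict, Tuple

# Shapes copied from the unet "Sample inputs spec" line in the log above.
LOGGED_UNET_SPEC: Dict[str, Tuple[int, ...]] = {
    "sample": (2, 4, 64, 64),
    "timestep": (2,),
    "encoder_hidden_states": (2, 768, 1, 77),
}


def expected_unet_input_shapes(
    image_size: int = 512,        # assumed output resolution (square)
    vae_scale_factor: int = 8,    # assumed VAE downscale per side
    latent_channels: int = 4,     # assumed latent channel count
    text_hidden_size: int = 768,  # assumed CLIP text embedding width
    max_tokens: int = 77,         # CLIP tokenizer context length
    batch: int = 2,               # unconditional + conditional (classifier-free guidance)
) -> Dict[str, Tuple[int, ...]]:
    """Hypothetical helper: derive the UNet input shapes for a given configuration."""
    latent = image_size // vae_scale_factor  # 512 // 8 = 64
    return {
        "sample": (batch, latent_channels, latent, latent),
        "timestep": (batch,),
        # (batch, channels, 1, sequence) layout, as printed in the log.
        "encoder_hidden_states": (batch, text_hidden_size, 1, max_tokens),
    }


if __name__ == "__main__":
    for name, shape in expected_unet_input_shapes().items():
        status = "ok" if LOGGED_UNET_SPEC[name] == shape else "MISMATCH"
        print(f"{name}: expected {shape}, logged {LOGGED_UNET_SPEC[name]} -> {status}")
```

If the defaults hold, every line reports `ok`, which suggests the blur is not caused by the converted UNet having been traced at an unexpected resolution or text-embedding width.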