[ZEPPELIN-4326][spark]Spark Interpreter restart failed when no suffic…

What is this PR for?

This PR is the final fix for ZEPPELIN-4326. With it, the user can still restart the Spark interpreter successfully when the YARN queue does not have sufficient resources.

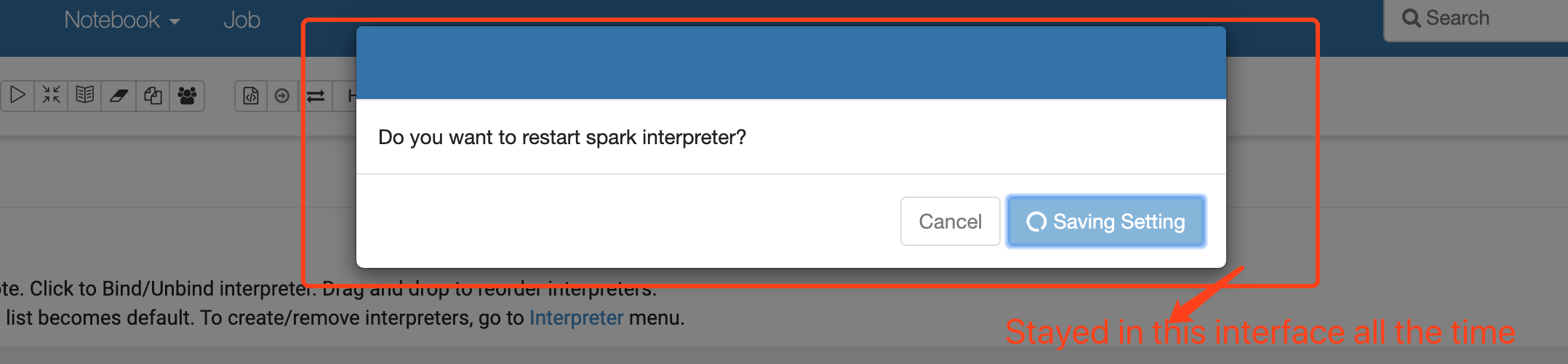

When the queue resources are insufficient, the Spark application stays in the ACCEPTED state. If the Spark interpreter is restarted at this point, the restart request hangs: the thread blocks in `LazyOpenInterpreter.open`, which is a synchronized method, and the restart fails. The only way out is to run `yarn application -kill <appId>` or `kill -9` the SparkSubmit process and then restart the interpreter, which is very unfriendly to the user.

For now, I use the rough approach of calling `System.exit(1)` to exit the JVM so that the restart succeeds, similar to pressing Ctrl+C in spark-shell to end an application stuck in the ACCEPTED state. Spark only offers the `cancelJob`/`cancelStage` APIs, so I think Spark itself cannot handle this case.
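The hang described above can be reproduced outside Zeppelin with a small Java sketch. `RestartHangDemo` and its nested `BlockingInterpreter` are hypothetical stand-ins, not Zeppelin classes. The sketch assumes that the restart path (modeled here as `close()`) needs the same monitor held by the thread stuck in the synchronized `open()`, and it models the ACCEPTED application as a latch that is never released.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical sketch of the locking pattern described above.
// BlockingInterpreter is NOT Zeppelin's LazyOpenInterpreter.
class RestartHangDemo {

  static class BlockingInterpreter {
    // Never counted down: stands in for a YARN application stuck in ACCEPTED.
    private final CountDownLatch appRunning = new CountDownLatch(1);
    private boolean opened = false;

    // Holds this object's monitor for as long as the application is not RUNNING.
    synchronized void open(CountDownLatch entered) throws InterruptedException {
      entered.countDown(); // signal that the monitor is now held
      appRunning.await();  // blocks WITHOUT releasing the monitor
      opened = true;
    }

    // Assumption: the restart path needs the same monitor as open().
    synchronized void close() {
      opened = false;
    }
  }

  // Returns true if close() finishes within timeoutMs while open() is blocked.
  static boolean closeCompletes(long timeoutMs) throws InterruptedException {
    BlockingInterpreter intp = new BlockingInterpreter();
    CountDownLatch entered = new CountDownLatch(1);

    Thread opener = new Thread(() -> {
      try {
        intp.open(entered);
      } catch (InterruptedException e) {
        // Interrupted by the cleanup below; the monitor is released on exit.
      }
    });
    opener.setDaemon(true);
    opener.start();
    entered.await(); // make sure open() owns the monitor first

    Thread restarter = new Thread(intp::close); // the "restart" request
    restarter.setDaemon(true);
    restarter.start();
    restarter.join(timeoutMs);
    boolean finished = !restarter.isAlive();

    // Demo-only cleanup: interrupting the opener releases the monitor.
    opener.interrupt();
    restarter.join();
    return finished;
  }

  public static void main(String[] args) throws InterruptedException {
    System.out.println("restart completed while open() was blocked: " + closeCompletes(500));
  }
}
```

`closeCompletes` returns `false` because `CountDownLatch.await()`, unlike `Object.wait()`, does not release the monitor, so `close()` cannot even start. The sketch frees the lock by interrupting the opener thread; the PR instead terminates the whole interpreter JVM with `System.exit(1)`.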

What type of PR is it?

Bug Fix

Todos

- [ ] - Task

What is the Jira issue?

https://issues.apache.org/jira/browse/ZEPPELIN-4326

How should this be tested?

- Manually tested

Screenshots (if appropriate)

Questions:

- Should we add an ACCEPTED status so the user can see that the task is in the ACCEPTED state rather than the RUNNING state (PENDING -> ACCEPTED -> RUNNING)?

- For a Spark task in the ACCEPTED state, the user can only restart the interpreter; the task cannot be canceled with the Cancel button.
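One way to picture the first question is a status mapping that reports the YARN ACCEPTED state separately from RUNNING. This is a hypothetical sketch: `ProposedStatus` and its `Status` values are illustrative names, not Zeppelin's `Job.Status`, and the input strings are assumed to be YARN `YarnApplicationState` names.

```java
import java.util.Locale;

// Hypothetical sketch of the proposed PENDING -> ACCEPTED -> RUNNING progression.
// ProposedStatus and Status are illustrative names, not Zeppelin's Job.Status.
class ProposedStatus {

  enum Status { PENDING, ACCEPTED, RUNNING, FINISHED, ERROR }

  // Maps a YARN application state name (a YarnApplicationState value) to the
  // status a paragraph could display.
  static Status fromYarnState(String yarnState) {
    switch (yarnState.toUpperCase(Locale.ROOT)) {
      case "NEW":
      case "NEW_SAVING":
      case "SUBMITTED":
        return Status.PENDING;   // not yet accepted by the scheduler
      case "ACCEPTED":
        return Status.ACCEPTED;  // accepted, waiting for queue resources
      case "RUNNING":
        return Status.RUNNING;
      case "FINISHED":
        return Status.FINISHED;  // a real mapping would also check the final status
      default:
        return Status.ERROR;     // FAILED, KILLED, or an unknown value
    }
  }

  public static void main(String[] args) {
    for (String state : new String[] {"SUBMITTED", "ACCEPTED", "RUNNING"}) {
      System.out.println(state + " -> " + fromYarnState(state));
    }
  }
}
```

With a mapping like this, a paragraph whose application is waiting for queue resources could show ACCEPTED instead of RUNNING, which might also make it clearer to the user why canceling has no effect at that stage.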