[SYSTEMDS-3444] Spark Write Compressed

A recent commit did not fully address Spark writing, so this PR follows up and adds writing an RDD to disk.

Preview of the compressed format for binary matrices, comparing text, binary, and compressed:

Reading -> plus -> sum of ... empty ... 256k x 2k matrix.

Binary:

1.536E9

SystemDS Statistics:

Total elapsed time: 5.827 sec.

Total compilation time: 0.383 sec.

Total execution time: 5.444 sec.

Cache hits (Mem/Li/WB/FS/HDFS): 1/0/0/0/1.

Cache writes (Li/WB/FS/HDFS): 0/0/1/0.

Cache times (ACQr/m, RLS, EXP): 0.076/0.000/3.880/0.000 sec.

HOP DAGs recompiled (PRED, SB): 0/0.

HOP DAGs recompile time: 0.000 sec.

Total JIT compile time: 0.253 sec.

Total JVM GC count: 0.

Total JVM GC time: 0.0 sec.

Heavy hitter instructions:

# Instruction Time(s) Count

1 + 5.190 1

2 uak+ 0.246 1

3 createvar 0.006 2

4 print 0.001 1

5 rmvar 0.001 2

Compressed:

1.536E9

SystemDS Statistics:

Total elapsed time: 0.437 sec.

Total compilation time: 0.376 sec.

Total execution time: 0.060 sec.

Cache hits (Mem/Li/WB/FS/HDFS): 1/0/0/0/1.

Cache writes (Li/WB/FS/HDFS): 0/0/0/0.

Cache times (ACQr/m, RLS, EXP): 0.045/0.000/0.000/0.000 sec.

HOP DAGs recompiled (PRED, SB): 0/0.

HOP DAGs recompile time: 0.000 sec.

Total JIT compile time: 0.132 sec.

Total JVM GC count: 0.

Total JVM GC time: 0.0 sec.

Heavy hitter instructions:

# Instruction Time(s) Count

1 + 0.050 1

2 createvar 0.006 2

3 uak+ 0.003 1

4 print 0.001 1

5 rmvar 0.000 2
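
The two statistics blocks above can be compared directly. The following is a minimal sketch that only restates the reported numbers as ratios. It assumes that "256k x 2k" means 256,000 x 2,000 cells and that the scalar added to the empty matrix is 3, as in script2 of this description; both are inferred from the printed sum, not stated explicitly.

```python
# Timings in seconds, copied from the two "SystemDS Statistics" blocks above.
binary = {"elapsed": 5.827, "compile": 0.383, "exec": 5.444, "plus": 5.190}
compressed = {"elapsed": 0.437, "compile": 0.376, "exec": 0.060, "plus": 0.050}

# Ratio binary / compressed for each reported timing.
speedup = {name: binary[name] / compressed[name] for name in binary}

# Assumption: 256k x 2k = 256,000 x 2,000 cells, all zero, plus the scalar 3.
rows, cols, added = 256_000, 2_000, 3
expected_sum = rows * cols * added

for name, factor in speedup.items():
    print(f"{name:8s} {factor:6.1f}x")
print(f"expected sum: {expected_sum:.3E}")
```

Under these assumptions the expected sum matches the printed 1.536E9, and the compressed run is roughly 13x faster end to end, with compilation time essentially unchanged.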

Initial Results: Writing a Matrix Block of 64k x 2k.

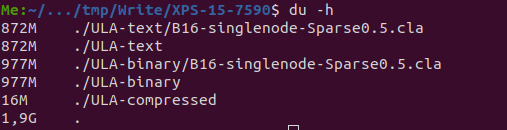

Disk and Memory Size

M = in Memory size

D = on Disk size


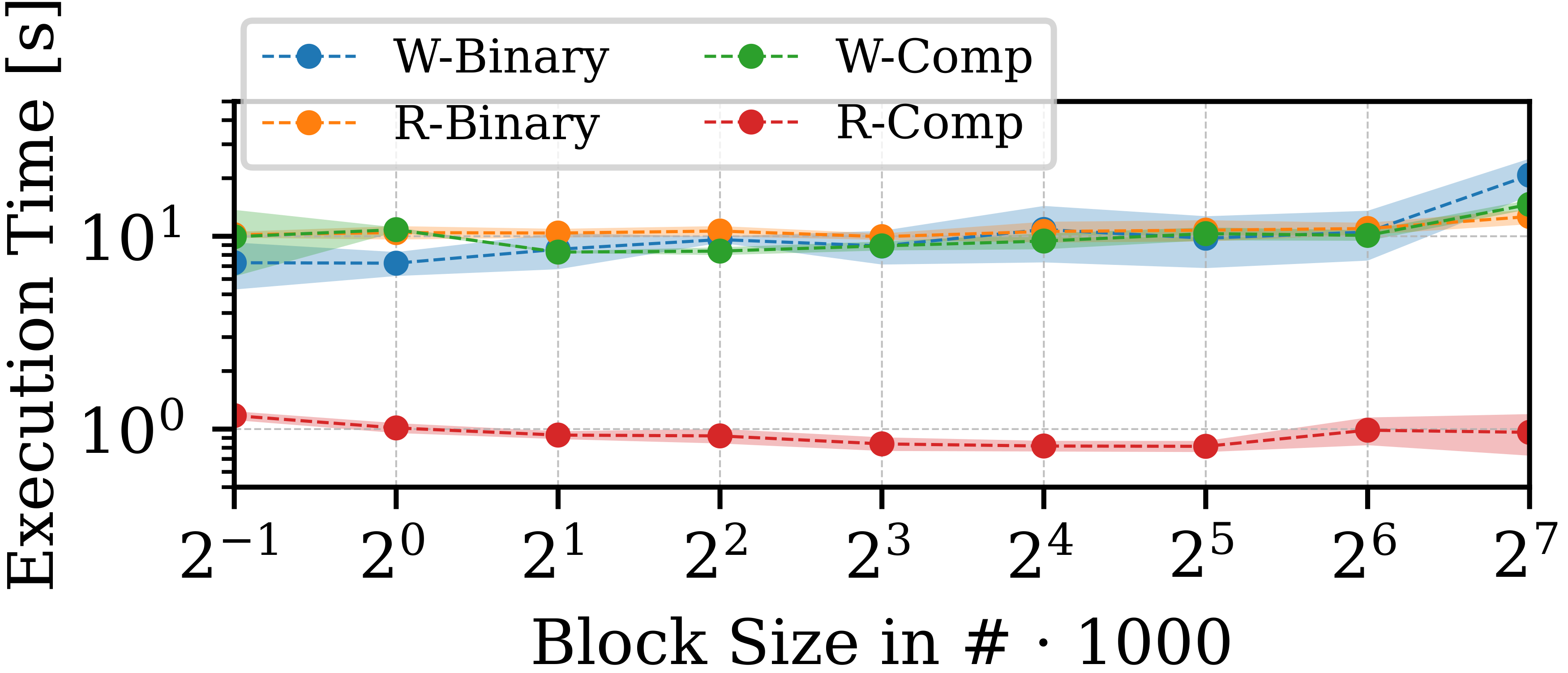

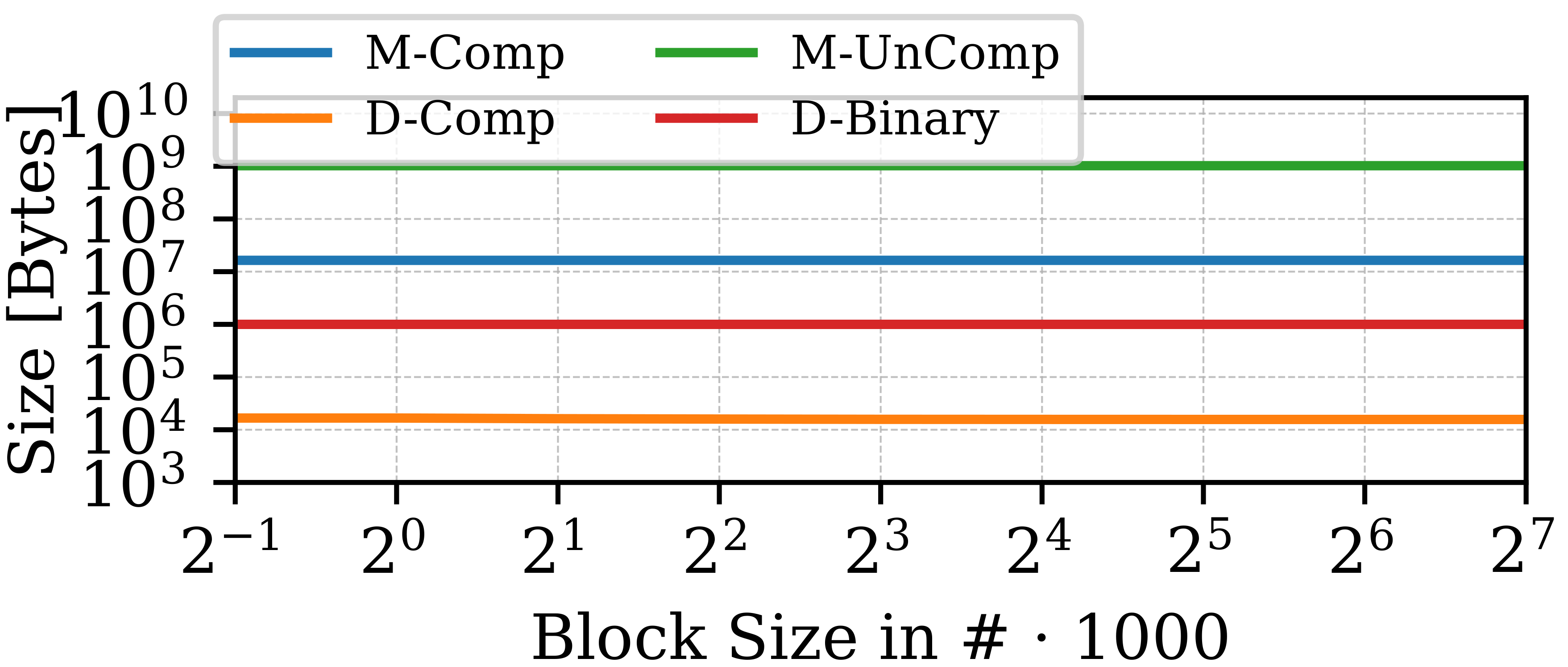

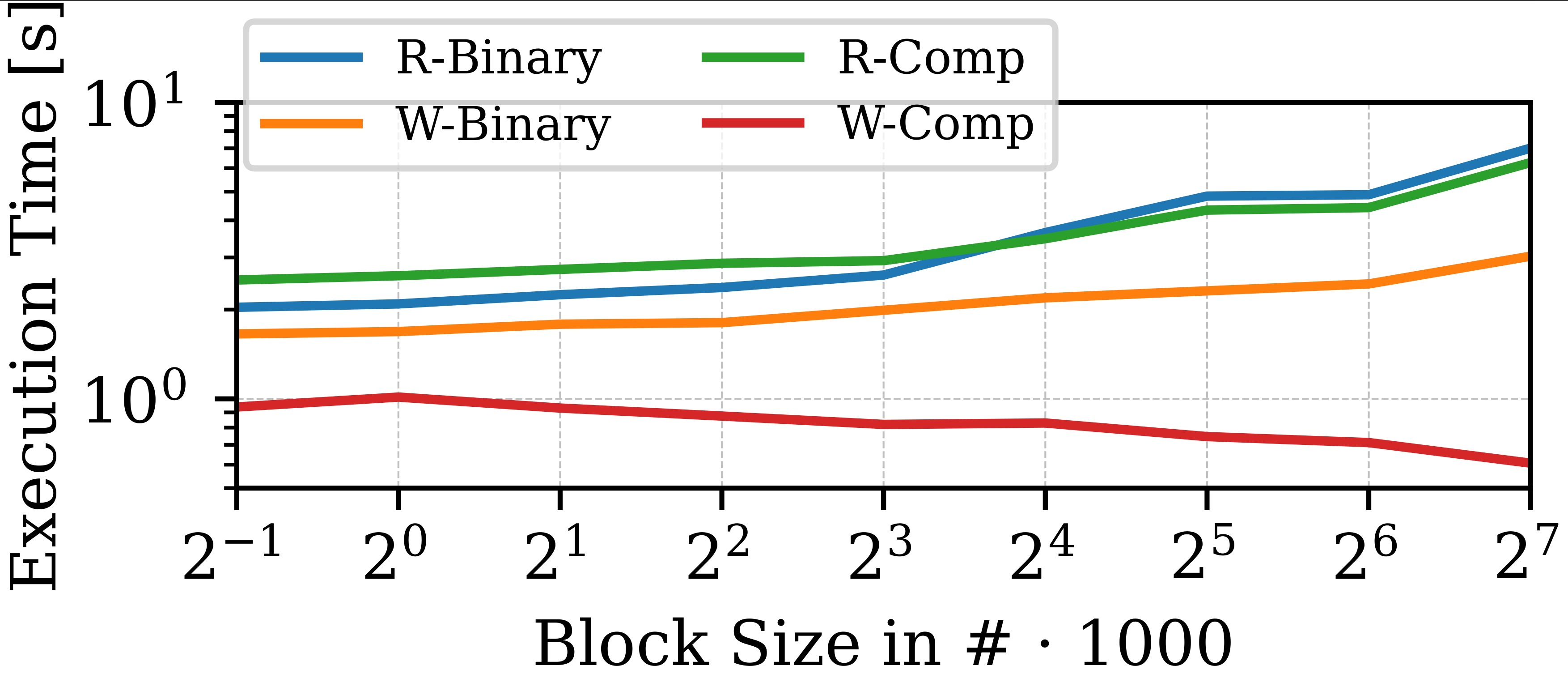

Read and Write end-to-end times:

script1 - write:


m = rand(rows= $1, cols=$2, min=$3, max=$4, sparsity=$5)

write(m, $7, format=$6)

script2 - read:

m = read($1)

m = m + 3

print(sum(m))

Binary -> reading and writing using our binary format.

Comp -> writing and reading using the new compressed format.

The times are end to end, so the read script's time includes the addition and the sum.

Optimization 1: do not use Hadoop compression, only internal serialization. This improved read time from 1.6 to 0.939 sec with 1k blocks and from 0.9 to 0.6 sec with 128k blocks, and write time from 2 to 1.4 sec.

Optimization 2: n-way combining.

This optimization makes smaller blocks viable, since before this change combining the column groups allocated all sub-combinations. Execution time did improve in general, but this is not obvious in the plot because (1) the clock speed was low, since the experiments were run multiple times on a laptop, and (2) other parts of execution add overhead, for example about 300 ms of compilation.

More detailed breakdown (binary matrix, 0.5 sparsity, 64k x 2k):

Compressed:

blockSize (k), Total Read, Read Time, Plus Time, Sum Time

0.5, 1.013+-0.029, 0.386+-0.012, 0.403+-0.012, 0.037+-0.005

1.0, 0.884+-0.030, 0.273+-0.014, 0.291+-0.015, 0.034+-0.005

2.0, 0.816+-0.025, 0.210+-0.008, 0.227+-0.008, 0.036+-0.005

4.0, 0.787+-0.031, 0.176+-0.006, 0.196+-0.011, 0.036+-0.006

8.0, 0.754+-0.033, 0.153+-0.008, 0.170+-0.008, 0.031+-0.004

16.0, 0.611, 0.121, 0.133, 0.026

32.0, 0.749+-0.033, 0.141+-0.007, 0.158+-0.008, 0.034+-0.005

64.0, 0.739+-0.034, 0.136+-0.004, 0.152+-0.004, 0.032+-0.005

128.0, 0.732+-0.038, 0.117+-0.011, 0.134+-0.011, 0.033+-0.005

Binary:

blockSize (k), Total Read, Read Time, Plus Time, Sum Time

0.5, 1.660+-0.014, 0.768+-0.018, 1.161+-0.022, 0.121+-0.013

1.0, 1.665+-0.040, 0.739+-0.017, 1.147+-0.025, 0.106+-0.008

2.0, 1.774+-0.029, 0.805+-0.014, 1.233+-0.026, 0.117+-0.008

4.0, 1.811+-0.016, 0.833+-0.011, 1.269+-0.012, 0.116+-0.009

8.0, 1.995+-0.039, 0.968+-0.020, 1.423+-0.029, 0.126+-0.013

16.0, 1.979, 0.999, 1.426, 0.114

32.0, 2.313+-0.058, 1.372+-0.044, 1.781+-0.048, 0.120+-0.019

64.0, 2.420+-0.120, 1.350+-0.060, 1.770+-0.065, 0.115+-0.004

128.0, 3.040+-0.127, 2.093+-0.090, 2.508+-0.099, 0.112+-0.008
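
To read the two 64k x 2k tables side by side, the rows can be parsed and the total read times divided. The following is a minimal sketch: `parse_cell` and `parse_rows` are hypothetical helper names, not part of SystemDS, and only three block sizes per format are copied in for brevity.

```python
def parse_cell(cell):
    """Split 'mean+-std' into (mean, std); a bare number gets std None."""
    mean, _, std = cell.strip().partition("+-")
    return float(mean), (float(std) if std else None)


def parse_rows(text):
    """Map blockSize (k) to a list of (mean, std) for the remaining columns."""
    table = {}
    for line in text.strip().splitlines():
        cells = line.split(",")
        table[float(cells[0])] = [parse_cell(c) for c in cells[1:]]
    return table


# Rows copied from the Compressed and Binary tables above (64k x 2k matrix).
compressed = parse_rows("""
0.5, 1.013+-0.029, 0.386+-0.012, 0.403+-0.012, 0.037+-0.005
16.0, 0.611, 0.121, 0.133, 0.026
128.0, 0.732+-0.038, 0.117+-0.011, 0.134+-0.011, 0.033+-0.005
""")
binary = parse_rows("""
0.5, 1.660+-0.014, 0.768+-0.018, 1.161+-0.022, 0.121+-0.013
16.0, 1.979, 0.999, 1.426, 0.114
128.0, 3.040+-0.127, 2.093+-0.090, 2.508+-0.099, 0.112+-0.008
""")

TOTAL_READ = 0  # index of the "Total Read" column after blockSize
for block_size in sorted(compressed):
    ratio = binary[block_size][TOTAL_READ][0] / compressed[block_size][TOTAL_READ][0]
    print(f"blockSize {block_size:>5}k: binary / compressed total read = {ratio:.2f}x")
```

For these rows the ratio grows with block size, from about 1.6x at 0.5k to about 4.2x at 128k, because the binary total read time increases while the compressed one decreases.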

When increasing the size of the matrix, the differences become larger and the overhead of compressing and writing to disk disappears:

Example: 256k x 2k binary matrix:

Compressed:

blockSize (k), Disk Size, Total Read, Read Time, Plus Time, Sum Time

0.5, 103116, 1.175+-0.031, 0.763+-0.024, 0.779+-0.024, 0.027+-0.003

1.0, 83536, 1.014+-0.030, 0.585+-0.016, 0.599+-0.016, 0.027+-0.003

2.0, 73760, 0.931+-0.022, 0.469+-0.015, 0.483+-0.015, 0.027+-0.004

4.0, 67880, 0.922+-0.040, 0.424+-0.017, 0.438+-0.018, 0.032+-0.005

8.0, 64940, 0.838+-0.034, 0.346+-0.021, 0.360+-0.021, 0.035+-0.007

16.0, 63720, 0.817+-0.026, 0.321+-0.007, 0.335+-0.007, 0.028+-0.003

32.0, 63112, 0.814+-0.027, 0.309+-0.011, 0.324+-0.012, 0.027+-0.003

64.0, 63112, 0.987+-0.081, 0.348+-0.026, 0.364+-0.026, 0.034+-0.004

128.0, 62656, 0.962+-0.117, 0.337+-0.037, 0.354+-0.038, 0.030+-0.003

Binary:

blockSize (k), Disk Size, Total Read, Read Time, Plus Time, Sum Time

0.5, 4000152, 10.187+-0.181, 3.308+-0.236, 9.306+-0.142, 0.281+-0.032

1.0, 4000070.667+-4.619, 10.449+-0.419, 3.373+-0.172, 9.635+-0.396, 0.284+-0.007

2.0, 4000073.333+-4.619, 10.391+-0.270, 3.385+-0.258, 9.559+-0.286, 0.298+-0.008

4.0, 4000070.667+-4.619, 10.644+-0.326, 3.645+-0.301, 9.804+-0.345, 0.298+-0.016

8.0, 4000101.333+-29.484, 9.954+-0.190, 3.707+-0.139, 9.123+-0.160, 0.288+-0.025

16.0, 4000080, 10.589+-0.650, 4.211+-0.601, 9.718+-0.650, 0.299+-0.003

32.0, 4000056, 10.757+-0.679, 4.422+-0.548, 9.904+-0.663, 0.307+-0.009

64.0, 4000057.333+-9.238, 10.969+-0.388, 4.224+-0.395, 10.131+-0.386, 0.296+-0.007

128.0, 4000020, 12.660+-0.556, 6.316+-0.380, 11.765+-0.559, 0.325+-0.019
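
The disk sizes in the 256k x 2k tables can be summarized as a ratio per block size. This is a small sketch using three rows copied from the tables above; the sizes are kept in whatever unit the tables report, and only the means are used where a "+-" spread is given.

```python
# (blockSize k, compressed disk size, binary disk size), copied from the tables above.
disk_sizes = [
    (0.5, 103116, 4000152),
    (8.0, 64940, 4000101.333),
    (128.0, 62656, 4000020),
]

# How many times smaller the compressed file is than the binary file.
ratios = {block_size: binr / comp for block_size, comp, binr in disk_sizes}

for block_size, ratio in ratios.items():
    print(f"blockSize {block_size}k: compressed is {ratio:.1f}x smaller on disk")
```

For these rows the compressed files are roughly 39x to 64x smaller than the binary ones, and larger blocks compress better.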