Heap memory leak caused OOM

BUG REPORT

- Please describe the issue you observed:

A heap memory leak causes an OutOfMemoryError (OOM).

- What did you do (The steps to reproduce)?

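```java
import com.alibaba.fastjson.JSON; // assumed: fastjson's JSON helper
import lombok.extern.slf4j.Slf4j;
import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.spring.annotation.RocketMQMessageListener;
import org.apache.rocketmq.spring.core.RocketMQListener;
import org.apache.rocketmq.spring.core.RocketMQPushConsumerLifecycleListener;
import org.springframework.stereotype.Component;

// Event is the application's own message type (not shown).
@Slf4j
@Component
@RocketMQMessageListener(topic = "${rocketmq.consumer.topic}",
        consumerGroup = "${rocketmq.consumer.group}")
public class EventListener implements RocketMQListener<Event>, RocketMQPushConsumerLifecycleListener {

    @Override
    public void prepareStart(DefaultMQPushConsumer consumer) {
        consumer.setMaxReconsumeTimes(5);
        // Flow control: cache at most 200 messages per topic...
        consumer.setPullThresholdForTopic(200);
        // ...and at most 100 MiB of cached messages per topic.
        consumer.setPullThresholdSizeForTopic(100);
    }

    @Override
    public void onMessage(Event message) {
        log.info("The event listener: {}", JSON.toJSONString(message));
    }
}
```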

- What did you expect to see?

Message consumption is normal.

- What did you see instead?

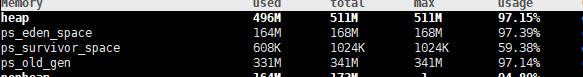

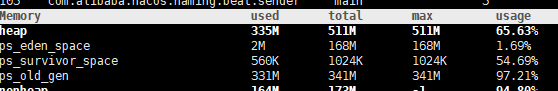

Messages are being consumed, but the old generation keeps growing.

- Please tell us about your environment:

JDK 1.8, spring-boot 2.3.2.RELEASE, rocketmq-spring-boot-starter 2.2.2

- Other information (e.g. detailed explanation, logs, related issues, suggestions how to fix, etc):

`ps_old_gen` is never released; after running for 1-2 days, an OOM occurs.

This may be caused by too many cached messages. Check `rocketmq_client.log` for the message "the cached message size exceeds the threshold". You can also set `PullThresholdSizeForTopic` to a smaller value.
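The flow-control suggestion above can be sketched roughly as follows. This is a simplified model written for illustration, not the client's source: the class and method names are hypothetical, and it assumes the topic-level thresholds are split evenly across the queues assigned to the consumer, with sizes in MiB. When a topic-level value is -1 the real client falls back to its own per-queue defaults; this sketch simply treats -1 as "no limit".

```java
/**
 * Simplified model (hypothetical names, not the real client code) of how a
 * push consumer's topic-level thresholds might be split across queues and
 * checked before each pull.
 */
public class FlowControlSketch {

    /** Split a topic-level threshold evenly across queues; -1 means no limit. */
    public static int perQueueThreshold(int topicThreshold, int queueCount) {
        if (topicThreshold == -1) {
            return -1;
        }
        return Math.max(1, topicThreshold / queueCount);
    }

    /** Returns true if the next pull for this queue should be delayed. */
    public static boolean shouldFlowControl(long cachedCount, long cachedSizeMiB,
                                            int countThreshold, int sizeThresholdMiB) {
        boolean countExceeded = countThreshold != -1 && cachedCount > countThreshold;
        boolean sizeExceeded = sizeThresholdMiB != -1 && cachedSizeMiB > sizeThresholdMiB;
        return countExceeded || sizeExceeded;
    }

    public static void main(String[] args) {
        // Values from the listener in this report (200 messages / 100 MiB per topic),
        // assuming, hypothetically, that 8 queues are assigned to this consumer.
        int queues = 8;
        int countPerQueue = perQueueThreshold(200, queues);   // 200 / 8 = 25
        int sizePerQueueMiB = perQueueThreshold(100, queues); // 100 / 8 = 12 (integer division)
        System.out.println(countPerQueue + " messages, " + sizePerQueueMiB + " MiB per queue");
        // 30 cached messages exceeds 25, so this queue is flow-controlled.
        System.out.println(shouldFlowControl(30, 5, countPerQueue, sizePerQueueMiB));
        // 10 messages / 5 MiB is within both limits, so pulling continues.
        System.out.println(shouldFlowControl(10, 5, countPerQueue, sizePerQueueMiB));
    }
}
```

Because these thresholds only bound what the push consumer itself caches, tuning them cannot free memory retained by some other object, which is consistent with the report below that no combination of values helped.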

However, the MQ Dashboard shows the messages as consumed, and even after a manual GC the memory is still not released.

I have since switched to rocketmq-client 4.9.3, and everything works fine without changing any configuration. With the starter, I tried a variety of values for `PullThresholdForTopic` and `PullThresholdSizeForTopic`, but the problem still appeared.

So you are no longer using rocketmq-spring-boot-starter 2.2.2, and using rocketmq-client 4.9.3 directly has no problem?

Yes, using rocketmq-client 4.9.3 against the same MQ works fine. That is why I think this is a bug in rocketmq-spring-boot-starter 2.2.2: nothing else has changed.

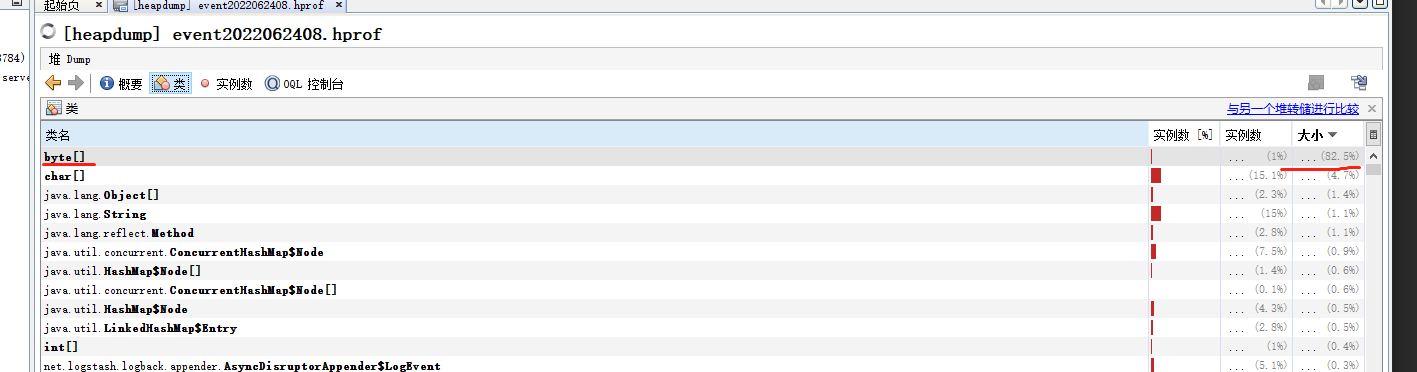

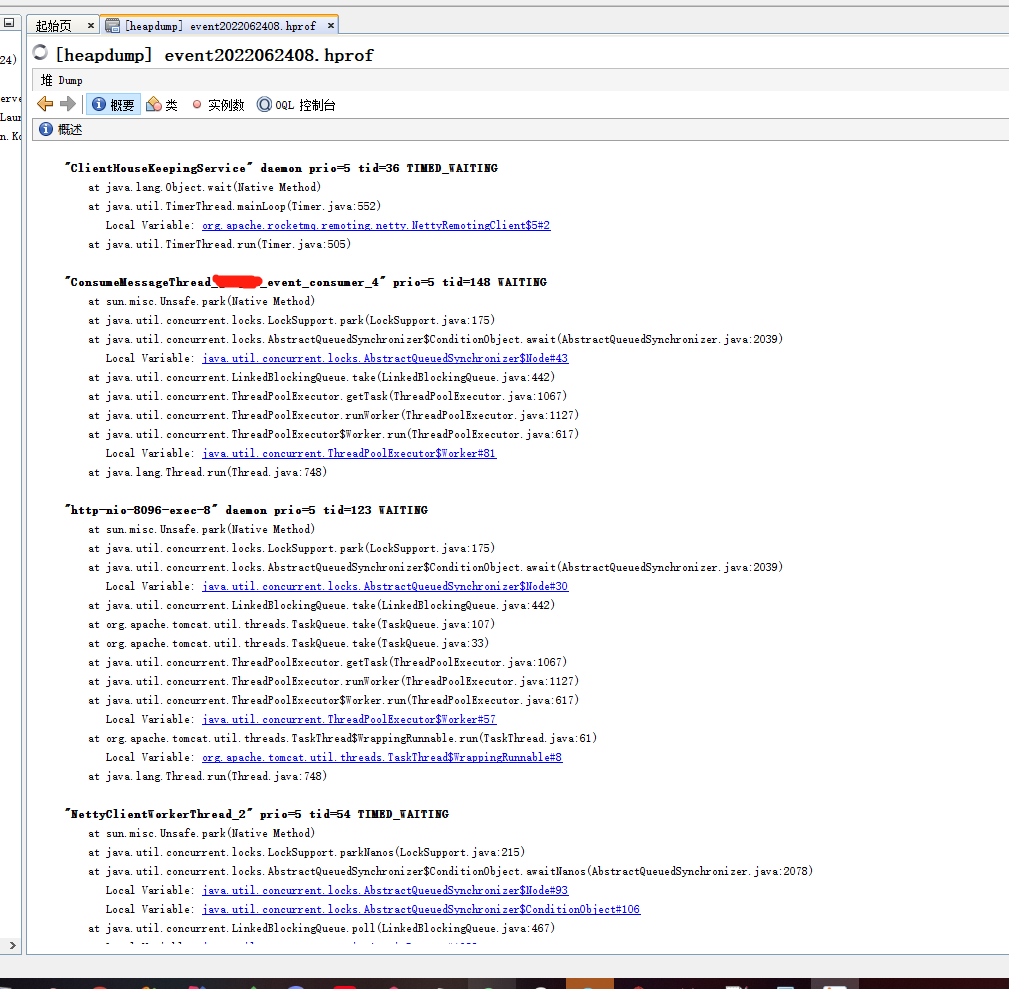

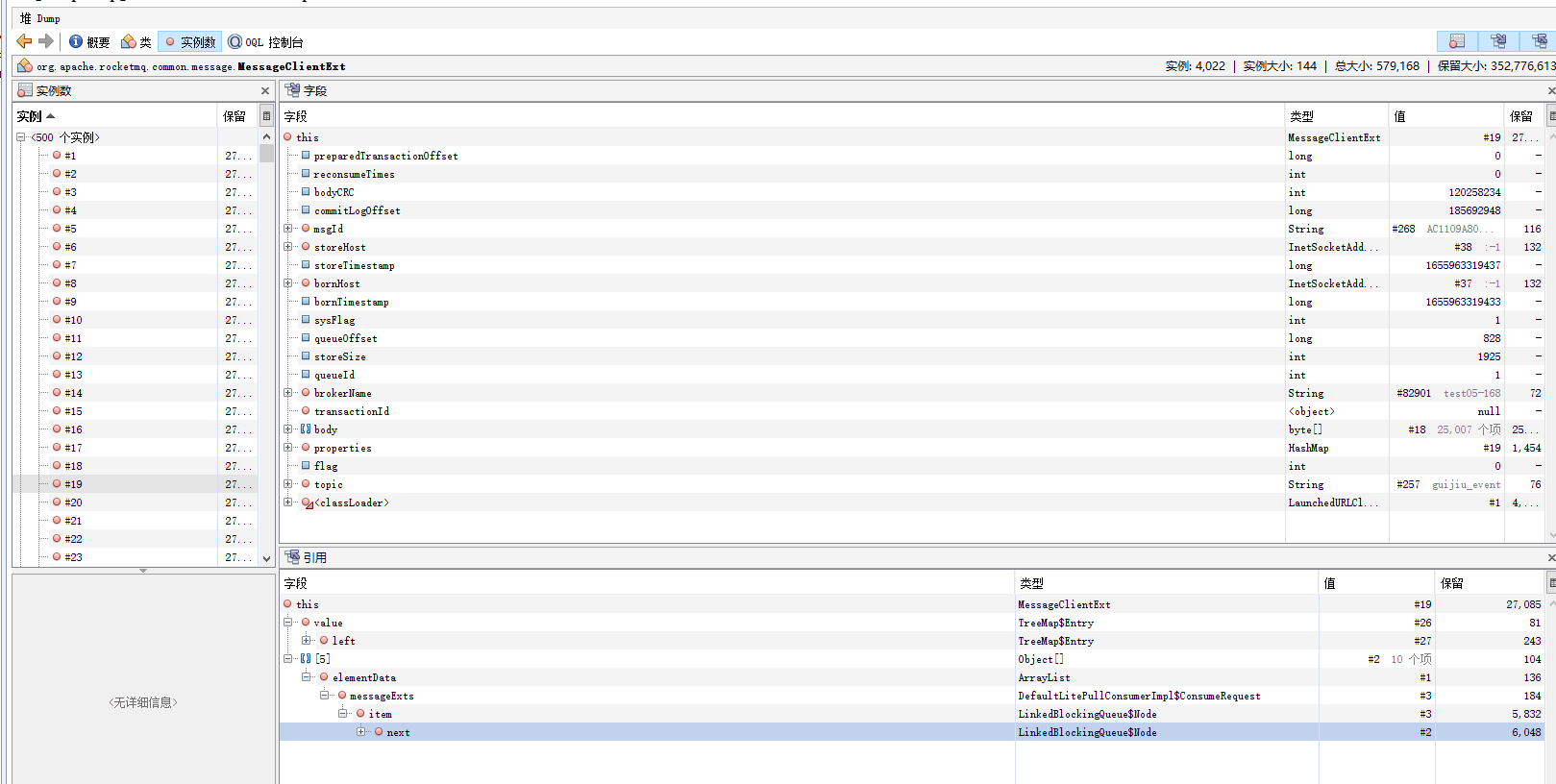

Look at the call chain here

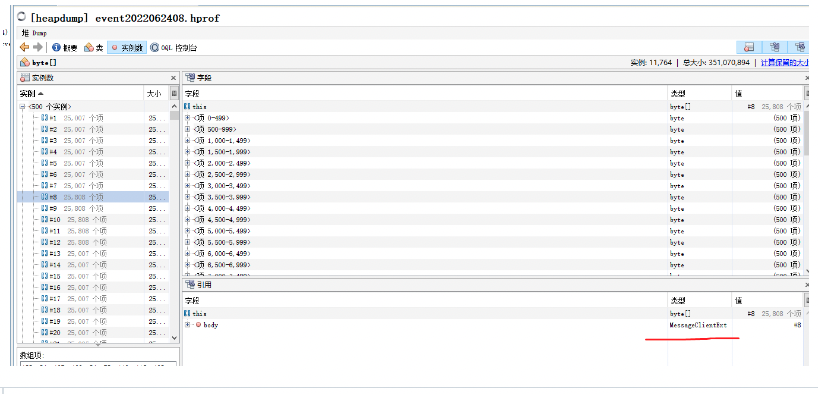

Is that it?

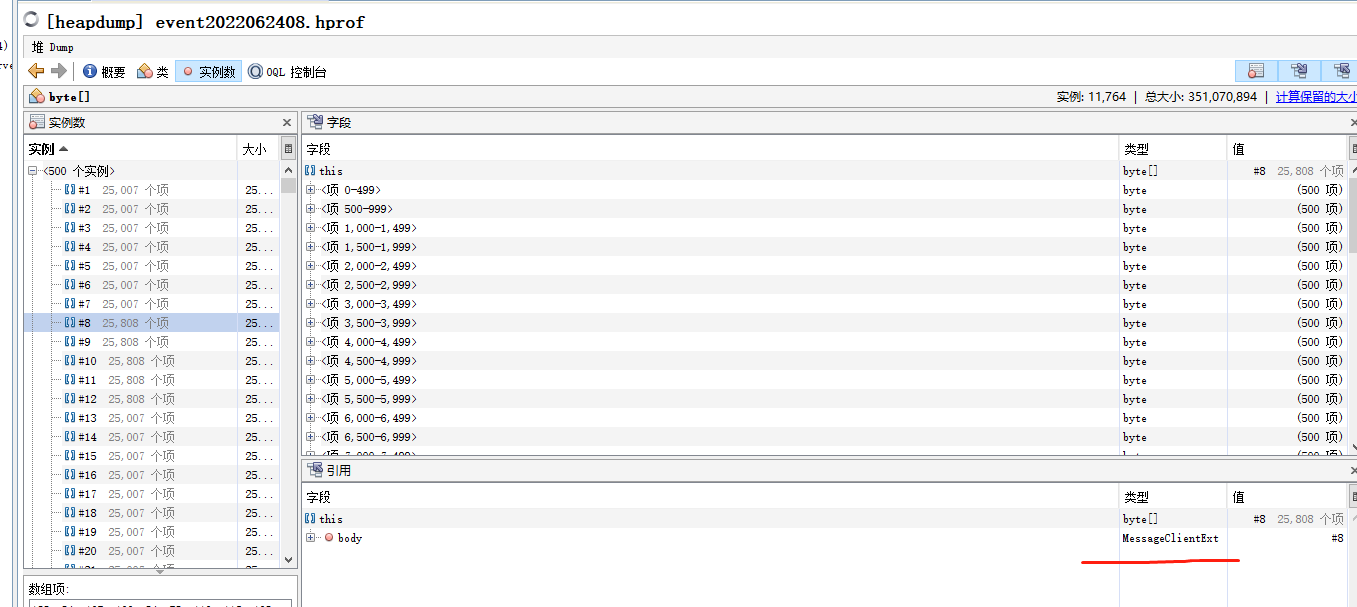

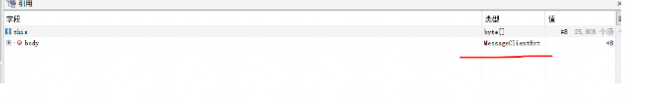

Here: I want to know where this object is being held for so long.

Is that it?

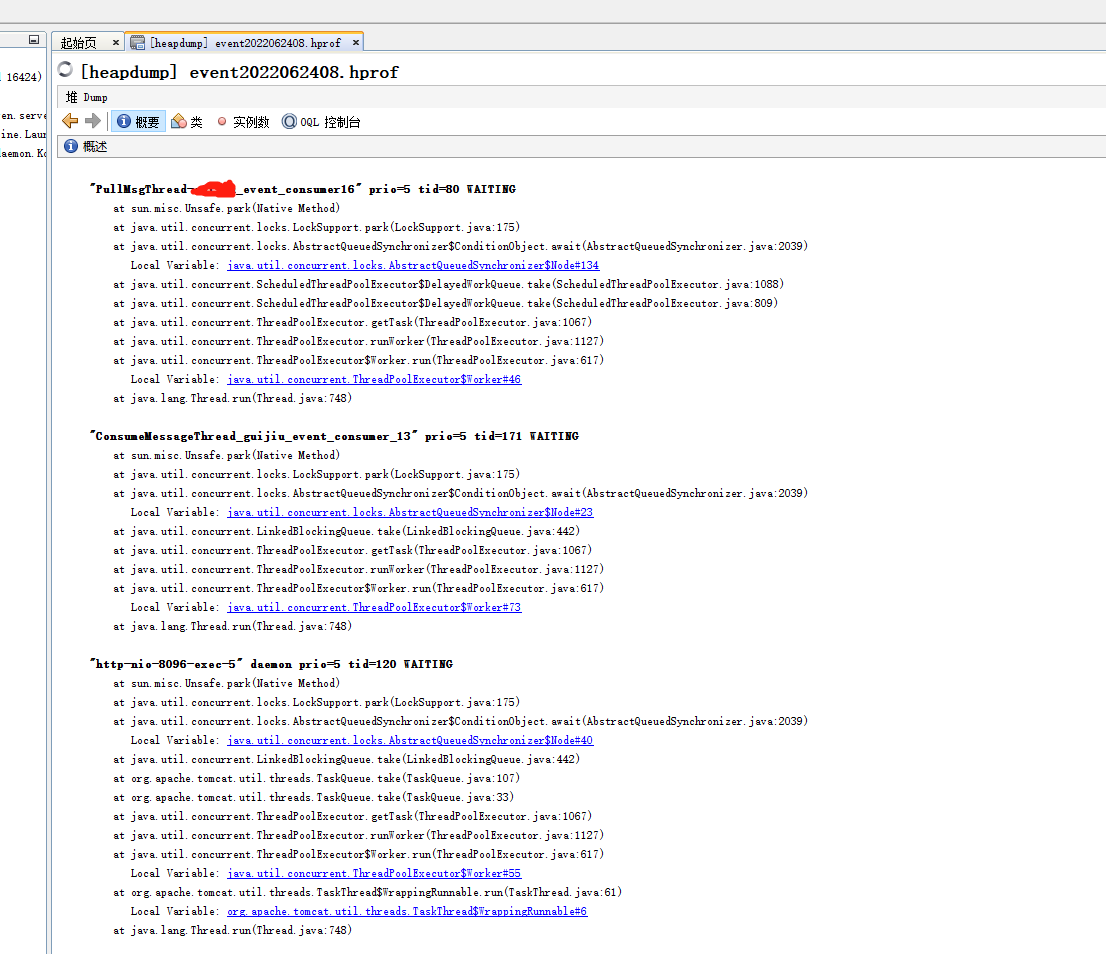

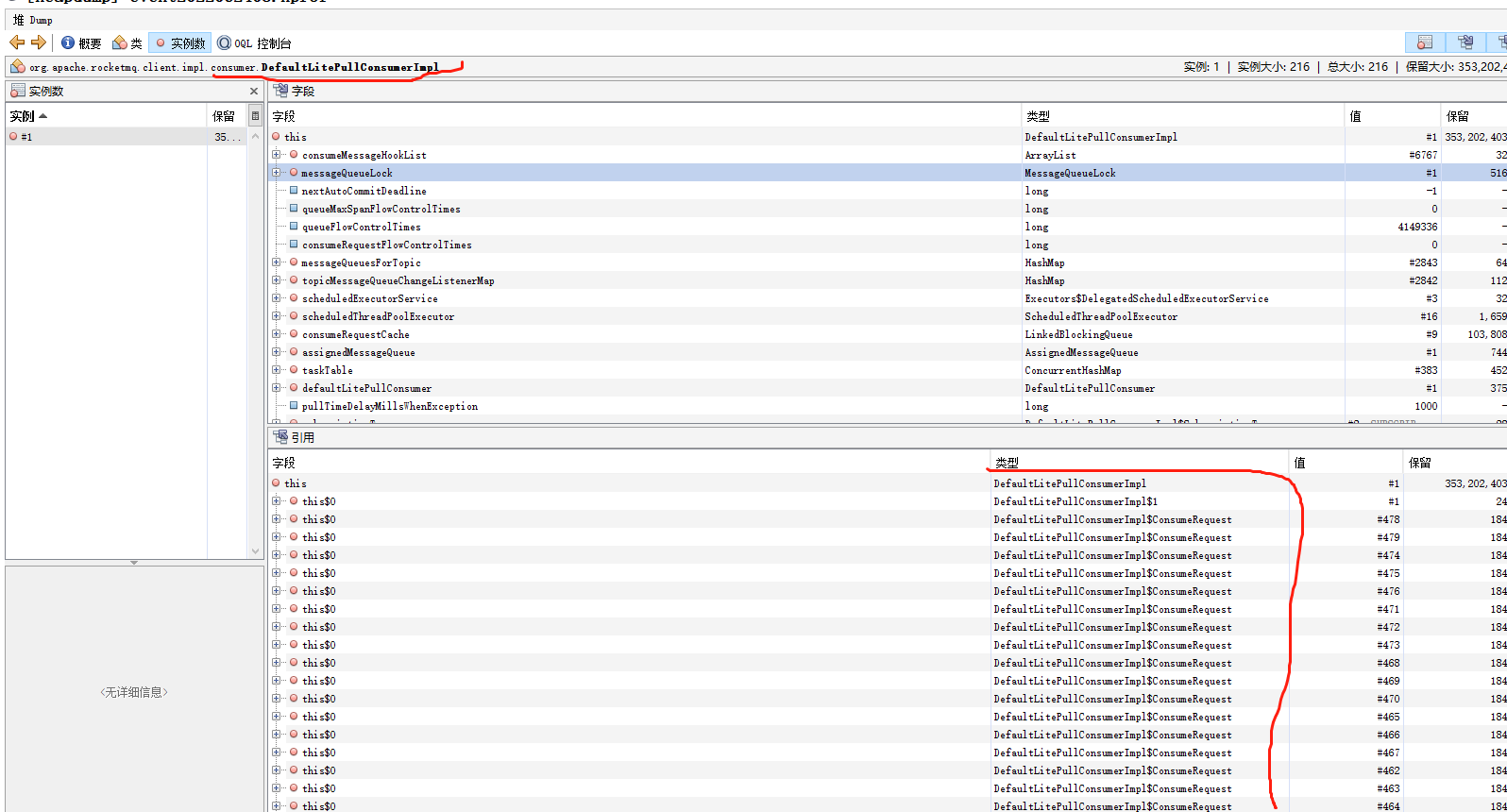

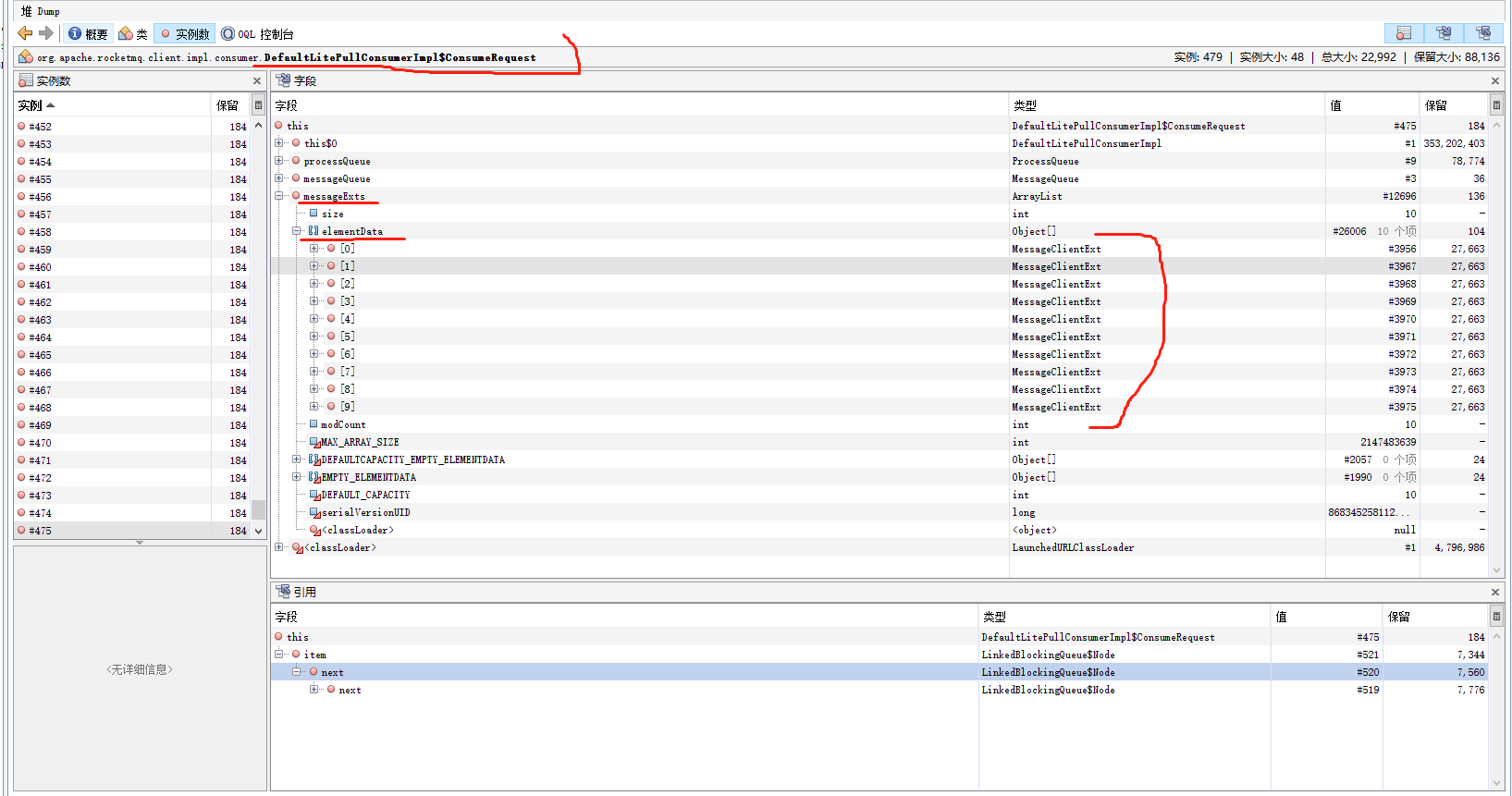

Is lite pull also used when you use rocketmq-client directly? `DefaultLitePullConsumerImpl` caches a large number of `ConsumeRequest` objects, and each `ConsumeRequest` holds message objects; the root cause is still slow consumption. You can search `rocketmq_client.log` for 'The consume request count exceeds'.

Judging from the stack information, lite pull is in use, whereas the code above uses push. A lite pull consumer requires the application to actively poll for messages; if nothing actively polls and consumes them, it caches a large number of `ConsumeRequest` objects.
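The retention pattern described above can be illustrated with a toy model. Everything here is hypothetical and self-contained: it is not `DefaultLitePullConsumerImpl`, and it uses a simple cap on the number of cached requests where the real client's exact flow-control rule may differ. It shows that a background puller fills a request cache, only the application's `poll()` removes entries, and until then every cached batch stays strongly reachable, so a manual GC cannot reclaim it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.LinkedBlockingQueue;

/** Toy model (hypothetical names) of a lite-pull style prefetch cache. */
public class LitePullCacheSketch {

    /** Stand-in for a cached ConsumeRequest holding a batch of message bodies. */
    static final class ConsumeRequest {
        final List<byte[]> messages;

        ConsumeRequest(List<byte[]> messages) {
            this.messages = messages;
        }
    }

    private final LinkedBlockingQueue<ConsumeRequest> cache = new LinkedBlockingQueue<>();
    private final int maxCachedRequests;

    public LitePullCacheSketch(int maxCachedRequests) {
        this.maxCachedRequests = maxCachedRequests;
    }

    /** One iteration of the background pull task; returns false if it backed off. */
    public boolean pullOnce(int batchSize, int messageBytes) {
        if (cache.size() >= maxCachedRequests) {
            // Per this thread, the real client logs 'The consume request count exceeds ...' here.
            return false;
        }
        List<byte[]> batch = new ArrayList<>(batchSize);
        for (int i = 0; i < batchSize; i++) {
            batch.add(new byte[messageBytes]);
        }
        cache.add(new ConsumeRequest(batch));
        return true;
    }

    /** Application-side poll: removes one cached request, or returns an empty list. */
    public List<byte[]> poll() {
        ConsumeRequest request = cache.poll();
        return request == null ? new ArrayList<>() : request.messages;
    }

    /** Bytes still referenced by the cache (i.e. not collectable by GC). */
    public long retainedBytes() {
        long total = 0;
        for (ConsumeRequest request : cache) {
            for (byte[] message : request.messages) {
                total += message.length;
            }
        }
        return total;
    }

    public int cachedRequests() {
        return cache.size();
    }

    public static void main(String[] args) {
        LitePullCacheSketch consumer = new LitePullCacheSketch(100);
        // The puller runs 500 times, but nobody ever calls poll().
        for (int i = 0; i < 500; i++) {
            consumer.pullOnce(32, 1024);
        }
        System.out.println(consumer.cachedRequests() + " requests, "
                + consumer.retainedBytes() + " bytes retained");
        // Once the application polls, the batches leave the cache and become collectable.
        while (!consumer.poll().isEmpty()) {
            // drain the cache
        }
        System.out.println(consumer.cachedRequests() + " requests after polling");
    }
}
```

A hedged guess at where a lite pull consumer could come from in a push-only application, to be verified against the starter's source for your version: rocketmq-spring-boot-starter 2.2.x auto-configures a `DefaultLitePullConsumer` (used by `RocketMQTemplate`) when the `rocketmq.consumer.group` and `rocketmq.consumer.topic` properties are set, and those are the same property names the listener in this report reads through its placeholders. If that is what happens here, nothing ever polls that consumer, which matches the pattern modeled above.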