About the last MySqlSnapshotSplit

Describe the bug (Please use English) A clear and concise description of what the bug is.

Environment :

- Flink version : 1.13.5

- Flink CDC version: 2.2.1

- Database and version: MySQL

To Reproduce Steps to reproduce the behavior:

- The test data :

- The test code :

- The error :

Additional Description If applicable, add screenshots to help explain your problem.

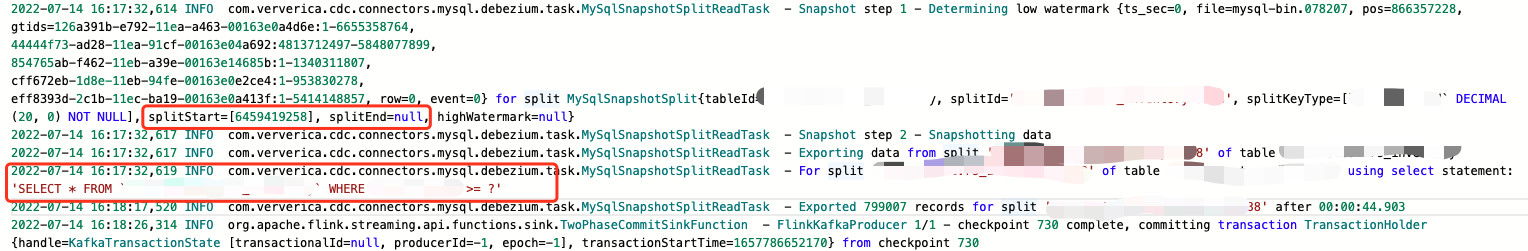

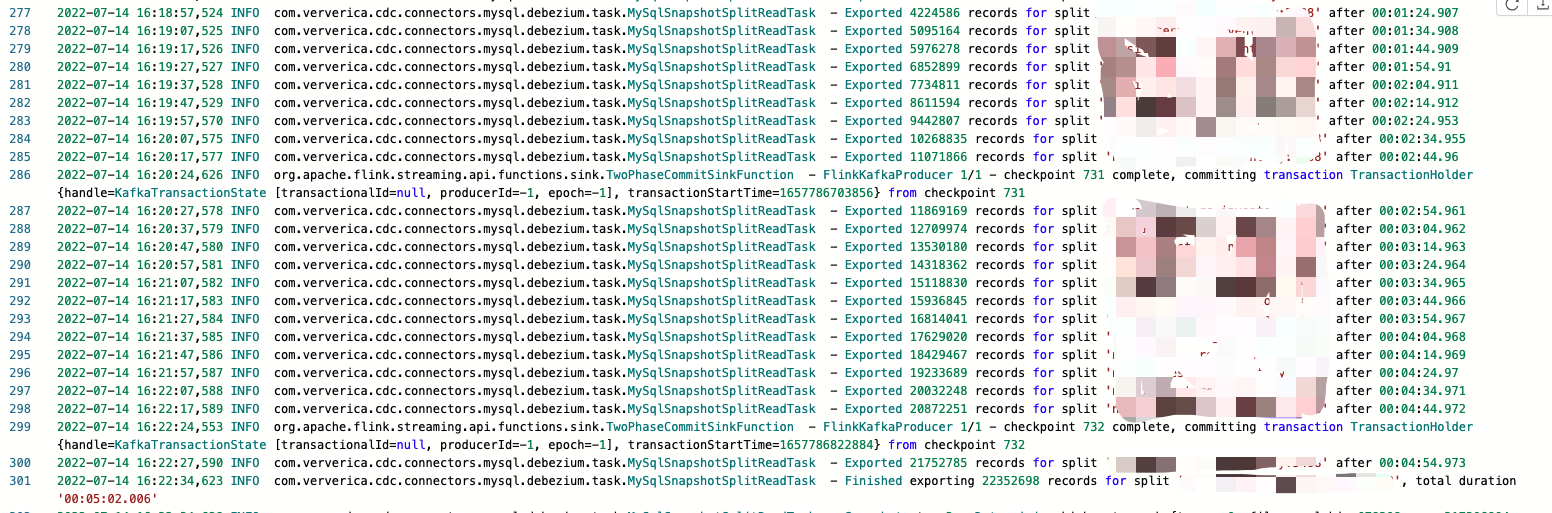

When the last split is read, if the table holds a large amount of data and many rows are written per second, the last split is queried with an unbounded `primary key >= ?` condition. The data volume returned by that query is too large, and the task fails.
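To make the problem concrete, here is a minimal sketch of how chunked snapshot queries can end up with an open-ended last split. It is a simplified illustration, not the actual Flink CDC implementation: the class `SplitQuerySketch`, the methods `buildQuery` and `planQueries`, and the table and column names are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of primary-key chunk splitting.
// It is NOT the Flink CDC source code; it only illustrates why the
// last split has no upper bound.
public class SplitQuerySketch {

    // Builds the scan query for one split. A null start or end means
    // that side of the key range is unbounded.
    public static String buildQuery(String table, String pk, Long start, Long end) {
        String base = "SELECT * FROM " + table;
        if (start == null && end == null) {
            return base;                                  // whole table as one split
        }
        if (start == null) {
            return base + " WHERE " + pk + " < ?";        // first split
        }
        if (end == null) {
            return base + " WHERE " + pk + " >= ?";       // last split: unbounded
        }
        return base + " WHERE " + pk + " >= ? AND " + pk + " < ?"; // middle split
    }

    // Plans splits of roughly chunkSize keys between the min and max
    // primary key values observed when planning starts.
    public static List<String> planQueries(String table, String pk,
                                           long min, long max, long chunkSize) {
        List<String> queries = new ArrayList<>();
        Long start = null;
        long next = min + chunkSize;
        while (next <= max) {
            queries.add(buildQuery(table, pk, start, next));
            start = next;
            next += chunkSize;
        }
        // Rows inserted after planning with keys above max all fall
        // into this final, open-ended split.
        queries.add(buildQuery(table, pk, start, null));
        return queries;
    }

    public static void main(String[] args) {
        for (String q : planQueries("orders", "id", 1, 100, 40)) {
            System.out.println(q);
        }
    }
}
```

In this sketch the upper key bound is fixed at planning time, so on a table with a high write rate every row inserted afterwards lands in the final `>= ?` split, which can grow far beyond the intended chunk size.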

I also encountered this problem: the TaskManager runs out of memory (OOM). I am looking for a solution.

I also encountered the same problem. When the table is very large, the job fails with an OOM.

Closing this issue because it was created before version 2.3.0 (2022-11-10). Please try the latest version of Flink CDC to see if the issue has been resolved. If the issue is still valid, kindly report it on Apache Jira under project Flink with component tag Flink CDC. Thank you!