Change masterKeyCache to dynamic size

Descriptions of the changes in this PR:

Motivation

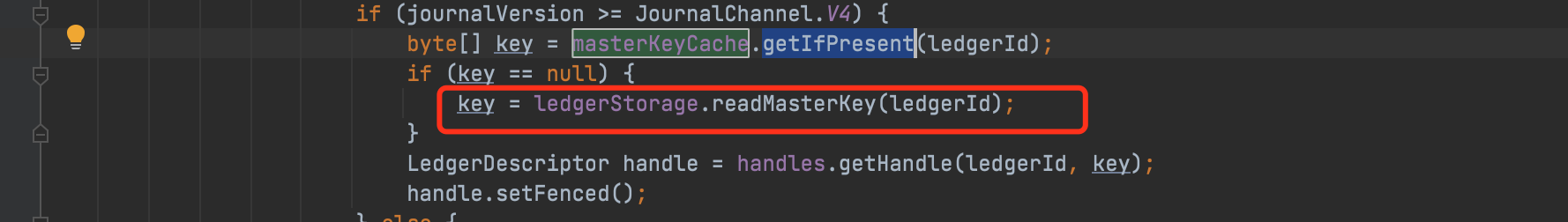

org.apache.bookkeeper.bookie.BookieImpl#masterKeyCache has a problem, when bookie has started, the data is only added but not removed, then change masterKeyCache to dynamic size,duration to prevent memory from rising because the number of ledger grow too fast

for the masterKeyCache does not need to be very large, even if the masterKeyCache cannot be hit,

-

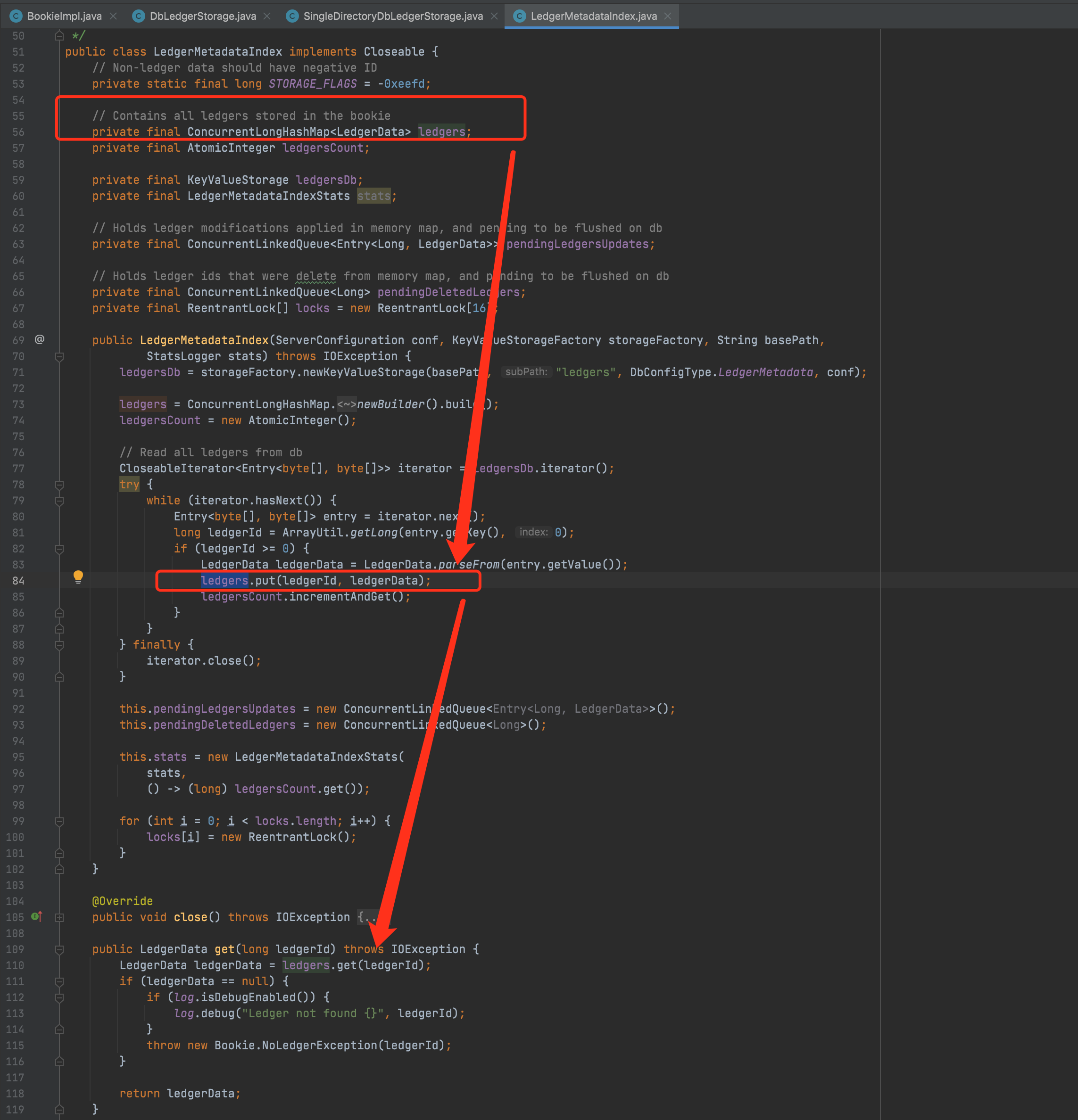

case use DbLedgerStorage as storage: there is a memory cache of org.apache.bookkeeper.bookie.storage.ldb.LedgerMetadataIndex#ledgers as the bottom line.

-

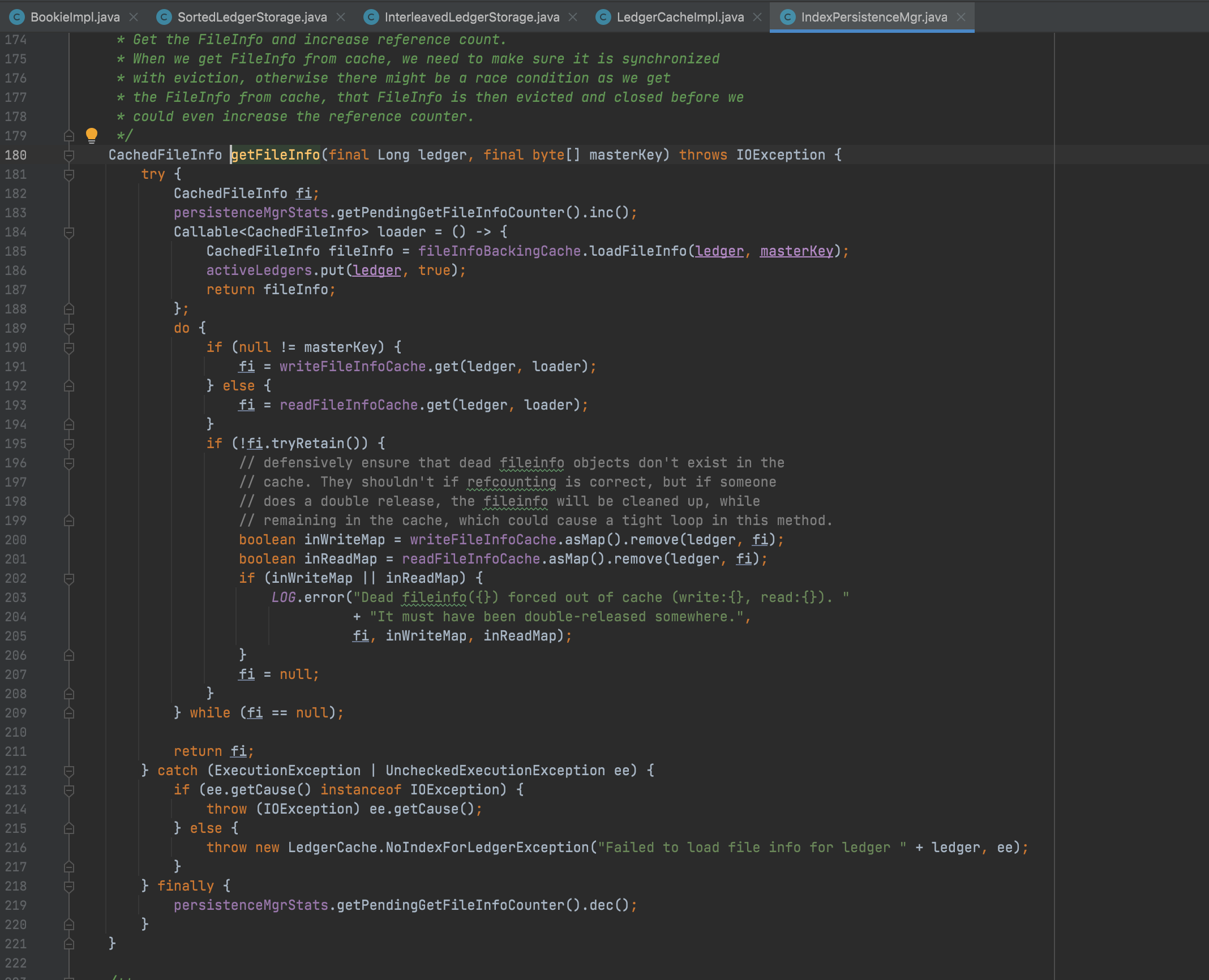

case use SortedLedgerStorage as storage: there is a memory cache of org.apache.bookkeeper.bookie.IndexPersistenceMgr#fileInfoBackingCache as the bottom line.

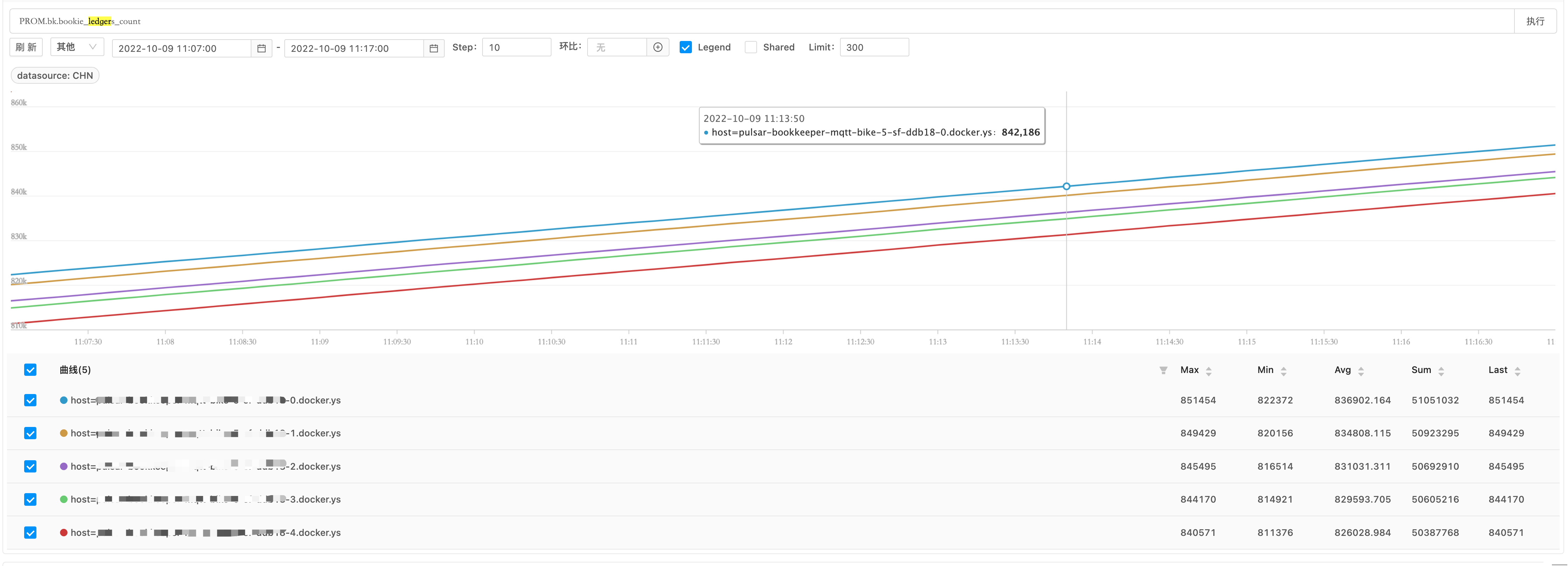

When the following scenarios occur, this problem will become more obvious:

1) when pulsar carries tens of millions of topics

2) the length of masterKey is 20

3) the number of ledger increases by 50 per second

the memory of masterKeyCache will increase by about 100MB every day

the memory of masterKeyCache will increase by about 100MB every day

Changes

- change masterKeyCache from fix size map(ConcurrentLongHashMap) to dynamic size(60 miniutes guava cache)

What happens when the key is removed from the cache? You are citing only the RocksDB ledger storage. And if you are using other storage engines?

What happens when the key is removed from the cache? You are citing only the RocksDB ledger storage. And if you are using other storage engines?

@eolivelli good question, I have updated in the Conversation, have a look, thanks

@eolivelli @zymap @Shoothzj @dlg99 @nicoloboschi help me review it,thanks

have a look @nicoloboschi @gaozhangmin I have update the code, when check masterKey exists in cache before write

// Force the load into masterKey cache

byte[] oldValue = masterKeyCache.asMap().putIfAbsent(ledgerId, masterKey);

My question is if would rather make more sense to optimize for the fact that the masterkey is empty in the overwhelmingly major part of the cases.

+1 In Pulsar, each ledger's default password is "".getBytes(StandardCharsets.UTF_8) and most clusters are not set ledger passwords, and the masterKey is generated from ledger password, which means most of the ledgers' masterKey are default value. We can optimize this case.

Changing the cache from ConcurrentLongHashMap to Guava cache will introduce extra cost no matter in putIfAbsent or eviction. This cache get and put is a high-frequency operation in Bookie, and I'm not sure about the impact on the addEntry performance.

Maybe we can try to optimize the default masterKey case first to see the achivments.

My question is if would rather make more sense to optimize for the fact that the masterkey is empty in the overwhelmingly major part of the cases.

+1 In Pulsar, each ledger's default password is

"".getBytes(StandardCharsets.UTF_8)and most clusters are not set ledger passwords, and themasterKeyis generated from ledger password, which means most of the ledgers' masterKey are default value. We can optimize this case.Changing the cache from

ConcurrentLongHashMapto Guava cache will introduce extra cost no matter inputIfAbsentor eviction. This cache get and put is a high-frequency operation in Bookie, and I'm not sure about the impact on the addEntry performance.Maybe we can try to optimize the default

masterKeycase first to see the achivments.

@hangc0276 the purpose of this PR change: The current replacement of ConcurrentLongHashMap is not because there is any problem with ConcurrentLongHashMap, but because the current masterKeyCache has not been cleaned up since bookie started

How to optimize masterKeyCache? Please give some specific advice? All I can think of is to regularly clean up the masterKeyCache logic. Now that guava cache has done this, there is no need to do this implementation.

There are currently 3 schemes for masterKeyCache:

-

Create a 1 hour guava cache, commit: first way Applicable scenarios: simple to implement; Disadvantages: the granularity of the lock is large, and the data structure will be locked when cleaning

-

Use the structure ConcurrentOpenHashMap, the key is masterKey, the value is the set of ledgerIds,commit : second way Applicable scenarios: do not set masterKey or masterKey settings are mostly the same Disadvantages: the masterKey settings are different, which increases the time-consuming of remove, but the remove operation is timed, not so frequent

-

Use the structure ConcurrentLongHashMap, the key is ledgerId, and the value is masterKey,commit: third way Applicable scenarios: do not set masterKey or masterKey settings are mostly the same; Disadvantage: there will be room to enlarge if the same masterKey is set for ledgerId

or else I send a email talking ablout it ? help me have a look at it @eolivelli @dlg99 @hangc0276 @merlimat

I am currently fine with the current approach. I am not sure that there will be a visible performance impact.

We should do optimisations like a special path for the empty string in follow up works (if really needed). Regarding the choice of the implementation of the cache...I am not sure that would make a big difference.

Maybe we can do with this patch and do more testing and get numbers: it won't be easy as the hot spots in writing to the journal are not for sure about accessing this cache