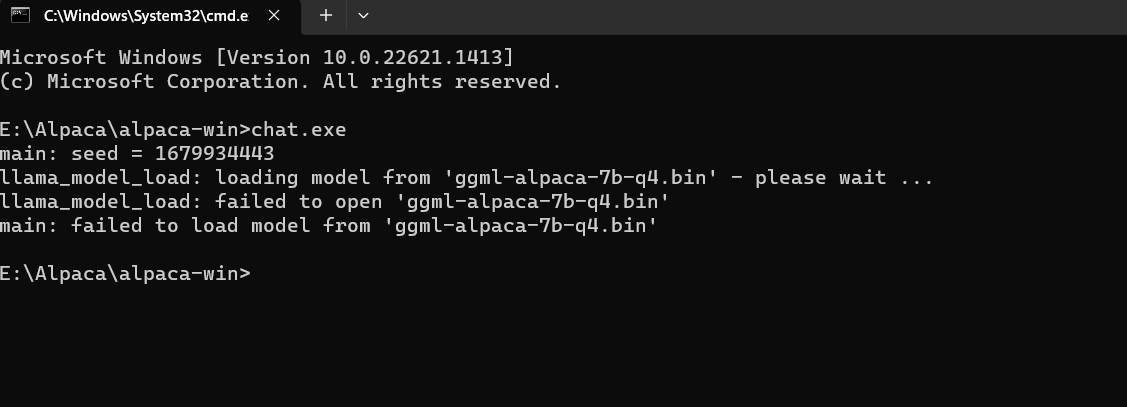

Cannot load model after installation

After extracting the chat.exe folder and copying the model file into it, I tried to run chat.exe from a terminal. However, it keeps saying the model failed to load.

Please note that I have installed this repo in that folder. I also did a fresh install here, with the same result. What am I missing?

The model should be in the C:\Users\[username]\alpaca.cpp folder, not the chat folder.

The directions say it goes in the chat folder, tho.

I found the copy of the .bin file from the Medium article, and now I'm getting this:

    PS D:\stable diffusion\alpaca> .\chat.exe
    main: seed = 1679966496
    llama_model_load: loading model from 'ggml-alpaca-7b-q4.bin' - please wait ...
    llama_model_load: ggml ctx size = 6065.34 MB
    llama_model_load: memory_size = 2048.00 MB, n_mem = 65536
    llama_model_load: loading model part 1/1 from 'ggml-alpaca-7b-q4.bin'
    llama_model_load: .....
    PS D:\stable diffusion\alpaca>

It doesn't look like it works out of the box.

It worked for me with the model in the same directory as the chat.exe in an arbitrary folder on my drive D.
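For anyone unsure which folder counts: the log earlier in this thread shows chat.exe opening 'ggml-alpaca-7b-q4.bin' by bare file name, and a relative path like that is resolved against the terminal's current working directory. Here is a minimal Python sketch for checking where the file actually sits. The `find_model` helper and the idea of checking both the working directory and the exe folder are my own illustration, not part of alpaca.cpp.

```python
from pathlib import Path


def find_model(name, exe_dir, cwd=None):
    """Return the locations where `name` exists as a regular file.

    Checks the working directory first (where a bare relative file name
    is resolved), then the folder holding the executable.
    `find_model` is a hypothetical helper, not an alpaca.cpp function.
    """
    cwd = Path(cwd) if cwd is not None else Path.cwd()
    candidates = [cwd / name, Path(exe_dir) / name]
    return [p for p in candidates if p.is_file()]


if __name__ == "__main__":
    # File name taken from the log in this thread; the exe folder is assumed
    # to be the current directory here, so adjust it to your setup.
    hits = find_model("ggml-alpaca-7b-q4.bin", exe_dir=".")
    print(hits if hits else "model file not found next to chat.exe or in the working directory")
```

If the file is found and loading still stops partway, as in the log, the location is probably fine and the file contents are the next thing to check.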

Can you check the model hash and make sure it matches one of the ones in this pull request: https://github.com/antimatter15/alpaca.cpp/pull/117/commits/9663ed33e0bfe5f64d5144e54688b6339f7a85d3

On Windows with PowerShell you can do something like `Get-FileHash .\ggml-alpaca-13b-q4.bin -Algorithm SHA256`, where the middle item is the path (relative or absolute) to the file you downloaded.
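If PowerShell isn't available (or you are on another OS), the same check can be sketched in Python with the standard `hashlib` module. The helper names `sha256_of_file` and `matches_expected` are made up for this example, and the expected hash has to be copied by hand from the pull request linked above; none is included here.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, read in 1 MiB chunks
    so a multi-gigabyte model never has to fit in memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path, expected_hex):
    """Compare case-insensitively, since Get-FileHash prints uppercase hex."""
    return sha256_of_file(path).lower() == expected_hex.strip().lower()


if __name__ == "__main__":
    # File name from this thread; change it to whichever model you downloaded.
    model = Path("ggml-alpaca-13b-q4.bin")
    if model.is_file():
        print(sha256_of_file(model))
    else:
        print(f"{model} not found in {Path.cwd()}")
```

A mismatch against every hash listed in the pull request suggests the download is a different or corrupted file, which would be consistent with the load stopping partway through.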

Looks like I have the wrong one again. Any tips on where to find it?

I had grabbed the 13B one from one of the torrent links in the readme back when it had torrent links in it: https://github.com/antimatter15/alpaca.cpp/commit/285ca17ecbb6e7f1ef38c04bf9d961979e31b9d9

Version from the torrent worked. Thanks a ton!