Abnormal LINE loss in distributed training

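Hello, thank you for open-sourcing such a good framework; it is very convenient to use. However, I ran into a problem when training LINE in distributed mode: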

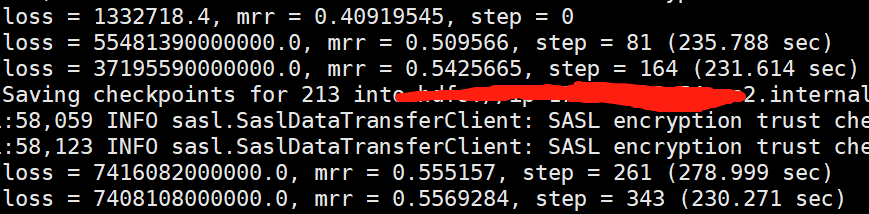

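The loss in distributed training (1 ps + 4 workers) becomes very large. I am sure the data was split into partitions, and I verified that the split is correct. The training parameters are as follows: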

nohup python -m tf_euler \
  --ps_hosts=xxx:1999 \
  --worker_hosts=xxx:2000,xxx:2000,xxx:2000,xxx:2000 \
  --job_name=worker \
  --task_index=0 \
  --data_dir hdfs:xxxxx/euler/test_data/ \
  --model_dir=hdfs:x/euler/LINE_embedding \
  --euler_zk_addr xxx:2181 \
  --euler_zk_path /test_embedding \
  --max_id 8428196 \
  --learning_rate 0.001 \
  --num_epochs 50 \
  --batch_size 320000 \
  --log_steps 20 \
  --model line --mode train --dim 128 &
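The command above is the one for the worker with --task_index=0. As a rough sketch of how the full set of launch commands for a 1 ps + 4 worker cluster could be generated, the helper below assumes the usual TensorFlow convention that every task receives the same --ps_hosts and --worker_hosts and differs only in --job_name and --task_index. The function name build_commands and the host names are hypothetical placeholders, not part of tf_euler.

```python
def build_commands(ps_hosts, worker_hosts, common_flags):
    """Return one launch command string per ps task and per worker task."""
    # Every task sees the same cluster description (assumed convention).
    cluster = "--ps_hosts={} --worker_hosts={}".format(
        ",".join(ps_hosts), ",".join(worker_hosts))
    commands = []
    for job_name, hosts in (("ps", ps_hosts), ("worker", worker_hosts)):
        # task_index counts from 0 within each job.
        for task_index in range(len(hosts)):
            commands.append(
                "python -m tf_euler {} --job_name={} --task_index={} {}".format(
                    cluster, job_name, task_index, common_flags))
    return commands


if __name__ == "__main__":
    # Placeholder hosts; replace with the real addresses of your cluster.
    for cmd in build_commands(
            ["ps0:1999"],
            ["w0:2000", "w1:2000", "w2:2000", "w3:2000"],
            "--model line --mode train --dim 128"):
        print(cmd)
```

Printing the commands side by side makes it easier to check that no two workers share the same --task_index.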

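Training results:

Why does the loss become this large? I have seen many questions about LINE, and they all seem to hit this problem.

I would really appreciate your guidance.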

https://github.com/alibaba/euler/blob/e5387e1a4e8345ffd6f7e39fc4052a7abf6f7b56/tf_euler/python/models/base.py#L51 Try changing the default value of this parameter to True. When LINE calls the unsupervised model, it does not pass the xent_loss value through.
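For readers unfamiliar with this option, here is a minimal sketch of a sigmoid cross-entropy loss with negative sampling, written in plain Python. It is not Euler's code: the function names, the choice to sum rather than average over the batch, and the number of negatives per example are all assumptions made for illustration. It shows that, if the loss is summed, the reported value scales with the batch size even for an untrained model.

```python
import math


def sigmoid_xent(logit, label):
    """Numerically stable sigmoid cross-entropy for a single logit."""
    # Stable form: max(x, 0) - x * z + log(1 + exp(-|x|)).
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def summed_xent_loss(pos_logits, neg_logits):
    """Sum the loss over all positive and negative pairs (assumed reduction)."""
    loss = sum(sigmoid_xent(x, 1.0) for x in pos_logits)
    loss += sum(sigmoid_xent(x, 0.0) for row in neg_logits for x in row)
    return loss


def loss_at_zero_logits(batch_size, num_negs):
    """Loss of a hypothetical untrained model whose logits are all zero."""
    pos = [0.0] * batch_size
    negs = [[0.0] * num_negs for _ in range(batch_size)]
    return summed_xent_loss(pos, negs)


if __name__ == "__main__":
    # Each zero logit contributes ln 2, so the total grows with batch size.
    for batch_size in (100, 1000):
        print(batch_size, loss_at_zero_logits(batch_size, num_negs=5))
```

Under these assumptions, loss_at_zero_logits(100, 5) equals 600 * ln 2, roughly 415.9, so dividing a reported loss by the batch size may give a number that is easier to compare across runs with different batch sizes.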

@alinamimi Hello, xent_loss is the same in single-machine and distributed training, so I don't think it can explain the abnormal loss. What do you think?

@alinamimi @yangsiran Hello, I tried xent_loss set to both True and False, but the loss is still abnormally large. With batch_size=160000, the loss becomes nan within a few steps. Could you help analyze what the cause might be?

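I ran into the same problem and don't understand why the result differs so much from single-machine training. @ShangJP did you find the cause?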

I haven't found the cause yet and would really appreciate some expert guidance. Feel free to contact me on WeChat to discuss.

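@ShangJP Has this been resolved? In distributed mode, a single server with a single worker is fine, and multi-worker supervised training is fine, but multi-worker unsupervised training makes the loss explode. @alinamimi Have you tested unsupervised training in distributed mode on large data? What would typically cause this loss behavior? Could you suggest a troubleshooting approach? Thanks.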

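We do run unsupervised training on large data in our business, mostly with GraphSage; training behaves normally and converges. We rarely use LINE. For the loss, xent generally works better for us, and you will need to tune the learning rate and other parameters.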

Thanks a lot.

INFO:tensorflow:loss = 2062.0222, step = 3956, mrr = 0.72906905 (2.395 sec)
INFO:tensorflow:loss = 2044.7451, step = 3996, mrr = 0.7860352 (2.372 sec)
INFO:tensorflow:loss = 2050.8723, step = 4036, mrr = 0.7676432 (2.445 sec)
INFO:tensorflow:loss = 2058.0752, step = 4076, mrr = 0.7385417 (2.419 sec)
INFO:tensorflow:loss = 2050.847, step = 4116, mrr = 0.7760091 (2.411 sec)
INFO:tensorflow:loss = 2046.3224, step = 4157, mrr = 0.76139325 (2.398 sec)
INFO:tensorflow:loss = 2049.7612, step = 4197, mrr = 0.7661133 (2.418 sec)
INFO:tensorflow:loss = 2053.594, step = 4237, mrr = 0.7610026 (2.375 sec)
INFO:tensorflow:loss = 2054.0017, step = 4277, mrr = 0.7486328 (2.394 sec)
INFO:tensorflow:loss = 2040.7125, step = 4316, mrr = 0.77233076 (2.346 sec)
INFO:tensorflow:loss = 2047.3507, step = 4357, mrr = 0.7617513 (2.447 sec)
INFO:tensorflow:loss = 2046.4552, step = 4397, mrr = 0.7681315 (2.402 sec)
INFO:tensorflow:loss = 2038.2688, step = 4437, mrr = 0.76389974 (2.405 sec)

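This is on the ppi dataset with --learning_rate 0.005 --num_epochs 40 --order 2 --optimizer momentum. With these hyperparameters, adam tends to make the loss blow up, while switching to momentum gives fairly stable training.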

I0909 19:39:17.823302 13046 graph_builder.cc:147] Graph Load Finish! Node Count:2537870 Edge Count:50189051
I0909 19:39:37.767843 13046 graph_builder.cc:159] Done: build all sampler
I0909 19:39:37.767902 13046 graph_builder.cc:162] Graph build finish
WARNING:tensorflow:use_feature is deprecated and would not have any effect.
WARNING:tensorflow:use_feature is deprecated and would not have any effect.
WARNING:tensorflow:From /data/relateRecom/anaconda3/envs/euler/lib/python2.7/site-packages/tf_euler/python/base_layers.py:78: init (from tensorflow.python.ops.init_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use tf.initializers.variance_scaling instead with distribution=uniform to get equivalent behavior.
INFO:tensorflow:Create CheckpointSaverHook.
INFO:tensorflow:Graph was finalized.
2021-09-09 19:39:38.871631: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA
INFO:tensorflow:Running local_init_op.
INFO:tensorflow:Done running local_init_op.
INFO:tensorflow:Saving checkpoints for 0 into /data/relateRecom/gsm/euler-1/model/model.ckpt.
2021-09-09 19:39:40.270788: W tensorflow/core/framework/allocator.cc:122] Allocation of 8675328000 exceeds 10% of system memory.
INFO:tensorflow:loss = 4258.7017, mrr = 0.2720053, step = 1
2021-09-09 19:39:47.527971: W tensorflow/core/framework/allocator.cc:122] Allocation of 8675328000 exceeds 10% of system memory.
2021-09-09 19:39:58.683909: W tensorflow/core/framework/allocator.cc:122] Allocation of 8675328000 exceeds 10% of system memory.
2021-09-09 19:40:09.057049: W tensorflow/core/framework/allocator.cc:122] Allocation of 8675328000 exceeds 10% of system memory.
2021-09-09 19:40:16.252187: W tensorflow/core/framework/allocator.cc:122] Allocation of 8675328000 exceeds 10% of system memory.
INFO:tensorflow:Saving timeline for 23 into '/data/relateRecom/gsm/euler-1/model/timeline-23.json'.
INFO:tensorflow:Saving timeline for 47 into '/data/relateRecom/gsm/euler-1/model/timeline-47.json'.
INFO:tensorflow:loss = 4251.6416, mrr = 1.0, step = 51 (415.241 sec)
INFO:tensorflow:Saving timeline for 70 into '/data/relateRecom/gsm/euler-1/model/timeline-70.json'.
INFO:tensorflow:Saving checkpoints for 73 into /data/relateRecom/gsm/euler-1/model/model.ckpt.
INFO:tensorflow:Saving timeline for 93 into '/data/relateRecom/gsm/euler-1/model/timeline-93.json'.
INFO:tensorflow:loss = 4019.0908, mrr = 1.0, step = 101 (405.777 sec)
INFO:tensorflow:Saving timeline for 118 into '/data/relateRecom/gsm/euler-1/model/timeline-118.json'.

My single-machine learning rate is already down to 0.0001, yet mrr reaches 1.0 by around step 50. What is going on here?
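To make the mrr value in these logs easier to interpret, here is a minimal sketch of a mean reciprocal rank computation in plain Python. It is an illustration only and is not taken from Euler: the function name, the input layout (one positive score and a list of negative scores per example), and the pessimistic handling of ties are all assumptions.

```python
def mean_reciprocal_rank(pos_scores, neg_scores):
    """Average of 1 / rank, ranking each positive among its own negatives."""
    total = 0.0
    for pos, negs in zip(pos_scores, neg_scores):
        # Rank 1 means no negative scores at least as high as the positive.
        # Ties count against the positive (an assumption of this sketch).
        rank = 1 + sum(1 for n in negs if n >= pos)
        total += 1.0 / rank
    return total / len(pos_scores)


if __name__ == "__main__":
    # First example ranks 1st, second ranks 2nd, so the mean is 0.75.
    print(mean_reciprocal_rank([2.0, 0.5], [[1.0, 0.0], [1.0, 0.0]]))
```

Under this definition, an mrr of exactly 1.0 means every positive outscored all of its negatives in the logged batch. That can happen with a well-trained model, but it can also happen when the sampled negatives are trivially easy to separate, so it may be worth inspecting the sampled negatives before trusting the number.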