Notebook server extremely slow to shut down

Describe the bug

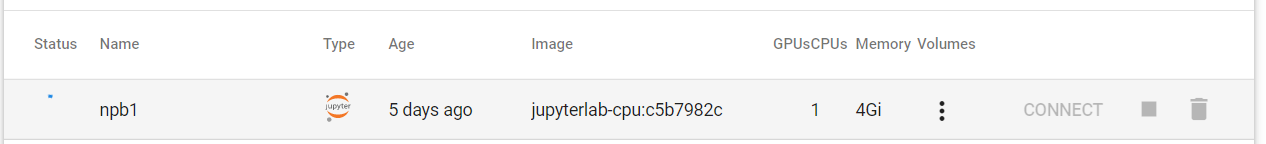

Occasionally, a notebook server takes an extremely long time (a day or more) to delete after it is terminated. The most recent case is a non-Protected B notebook server called npb1.

Environment info

Namespace: greenhouse-detection

Notebook/server: npb1

Steps to reproduce

As far as I know, this is not reliably reproducible. It occurs only rarely when a notebook server is shut down, and I don't know of any specific condition that triggers it.

Expected behaviour

Shutting down a notebook server should take a few minutes at most, not hours or days.

Screenshots

Additional context

Here is the output of kubectl describe pod npb1-0. Much of it is redacted (I can send the full unredacted version to AAW maintainers if necessary):

Name: npb1-0

Namespace: greenhouse-detection

Priority: 0

Node: REDACTED

Start Time: Fri, 27 May 2022 15:29:31 +0000

Labels: access-ml-pipeline=true

controller-revision-hash=npb1-REDACTED

istio.io/rev=default

minio-mounts=true

notebook-name=npb1

security.istio.io/tlsMode=istio

service.istio.io/canonical-name=npb1

service.istio.io/canonical-revision=latest

statefulset=npb1

statefulset.kubernetes.io/pod-name=npb1-0

Annotations: data.statcan.gc.ca/inject-boathouse: true

poddefault.admission.kubeflow.org/poddefault-access-ml-pipeline: REDACTED

poddefault.admission.kubeflow.org/poddefault-minio-mounts: REDACTED

prometheus.io/path: /stats/prometheus

prometheus.io/port: REDACTED

prometheus.io/scrape: true

sidecar.istio.io/status:

{"version":"REDACTED","initContainers":["istio-validation"],"containers":[REDACTED

Status: Terminating (lasts 18h)

Termination Grace Period: 30s

IP: REDACTED

Controlled By: StatefulSet/npb1

Init Containers:

istio-validation:

REDACTED

Containers:

npb1:

Container ID:

Image: REDACTED

Image ID:

Port: 8888/TCP

Host Port: 0/TCP

State: Waiting

Reason: PodInitializing

Last State: Terminated

Reason: ContainerStatusUnknown

Message: The container could not be located when the pod was deleted. The container used to be Running

Exit Code: 137

Started: Mon, 01 Jan 0001 00:00:00 +0000

Finished: Mon, 01 Jan 0001 00:00:00 +0000

Ready: False

Restart Count: 0

Limits:

cpu: 1

memory: 4Gi

Requests:

cpu: 1

memory: 4Gi

Environment:

REDACTED

Mounts:

REDACTED

istio-proxy:

Container ID:

Image: docker.io/istio/proxyv2:1.7.8

Image ID:

Port: 15090/TCP

Host Port: 0/TCP

Args:

REDACTED

State: Waiting

Reason: PodInitializing

Last State: Terminated

Reason: ContainerStatusUnknown

Message: The container could not be located when the pod was deleted. The container used to be Running

Exit Code: 137

Started: Mon, 01 Jan 0001 00:00:00 +0000

Finished: Mon, 01 Jan 0001 00:00:00 +0000

Ready: False

Restart Count: 0

Limits:

cpu: 2

memory: 1Gi

Requests:

cpu: 100m

memory: 128Mi

Readiness: http-get http://:15021/healthz/ready delay=1s timeout=1s period=2s #success=1 #failure=30

Environment:

REDACTED

Mounts:

REDACTED

vault-agent:

Container ID:

Image: vault:1.7.2

Image ID:

Port: <none>

Host Port: <none>

Command:

/bin/sh

-ec

Args:

echo ${VAULT_CONFIG?} | base64 -d > /home/vault/config.json && vault agent -config=/home/vault/config.json

State: Waiting

Reason: PodInitializing

Last State: Terminated

Reason: ContainerStatusUnknown

Message: The container could not be located when the pod was deleted. The container used to be Running

Exit Code: 137

Started: Mon, 01 Jan 0001 00:00:00 +0000

Finished: Mon, 01 Jan 0001 00:00:00 +0000

Ready: False

Restart Count: 0

Limits:

cpu: 500m

memory: 128Mi

Requests:

cpu: 250m

memory: 64Mi

Environment:

VAULT_LOG_LEVEL: info

VAULT_LOG_FORMAT: standard

VAULT_CONFIG: REDACTED

Mounts:

/home/vault from home-sidecar (rw)

/var/run/secrets/kubernetes.io/serviceaccount from REDACTED

/vault/secrets from vault-secrets (rw)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

workspace-npb1:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: workspace-npb1

ReadOnly: false

REDACTED

istio-envoy:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

SizeLimit: <unset>

istio-data:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

istio-podinfo:

Type: DownwardAPI (a volume populated by information about the pod)

Items:

metadata.labels -> labels

metadata.annotations -> annotations

istio-token:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 43200

istiod-ca-cert:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: istio-ca-root-cert

Optional: false

home-sidecar:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

SizeLimit: <unset>

vault-secrets:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

SizeLimit: <unset>

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: data.statcan.gc.ca/classification=unclassified:NoSchedule

node.kubernetes.io/memory-pressure:NoSchedule

node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

node.statcan.gc.ca/purpose=user:NoSchedule

node.statcan.gc.ca/use=general:NoSchedule

Events: <none>

Maybe this is failing on unmounting the minio mounts.

For anyone who investigates this: consult the kubelet logs on the node for this particular pod.
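
To find which node's kubelet logs to look at, something like the following might help. It is only a rough sketch, not a tested tool: it takes the parsed output of `kubectl get pods -n greenhouse-detection -o json` and lists pods that are still present well after their deletion deadline, along with their node and any finalizers. The function names and the 300-second slack are my own choices, and I am assuming `metadata.deletionTimestamp` already includes the grace period, which is my understanding of the Kubernetes API.

```python
from datetime import datetime, timezone


def parse_k8s_time(value: str) -> datetime:
    """Parse a UTC timestamp as emitted by the Kubernetes API, e.g. 2022-05-27T15:29:31Z."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def stuck_terminating(pod_list: dict, now: datetime, slack_seconds: int = 300) -> list:
    """Return pods whose deletion deadline passed more than slack_seconds ago.

    pod_list is the dict loaded from `kubectl get pods -o json`.
    slack_seconds is an arbitrary threshold chosen for this sketch.
    """
    stuck = []
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        deadline = meta.get("deletionTimestamp")
        if deadline is None:
            continue  # pod is not being deleted
        # Assumption: deletionTimestamp is the time by which the pod should be
        # gone (deletion request time plus the termination grace period).
        overdue = (now - parse_k8s_time(deadline)).total_seconds()
        if overdue > slack_seconds:
            stuck.append({
                "name": meta.get("name"),
                "node": pod.get("spec", {}).get("nodeName"),
                "overdue_seconds": int(overdue),
                # A non-empty finalizers list is another common reason a
                # deletion never completes, so it is worth reporting.
                "finalizers": meta.get("finalizers", []),
            })
    return stuck
```

To use it, load the kubectl JSON with `json.load` and pass `datetime.now(timezone.utc)` as `now`. The `node` field in each result is the node whose kubelet logs would be the place to check for this pod.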

The notebook server npb1 is still shutting down, even now.

@brendangadd @cboin1996