CUDA error fmha_fprop_fp16_kernel.sm80.cu:68: invalid argument

I tried to run the example from the Hugging Face model card at https://huggingface.co/stabilityai/stable-diffusion-x4-upscaler and got this error:

CUDA error (/tmp/pip-req-build-f05pbkq3/third_party/flash-attention/csrc/flash_attn/src/fmha_fprop_fp16_kernel.sm80.cu:68): invalid argument

Conda env:

- active environment : phygc-rnd-stable-diffusion-2-0
- shell level : 2
- conda version : 4.11.0
- conda-build version : 3.21.4
- python version : 3.8.8.final.0
- virtual packages :
  - `__cuda=11.5=0`
  - `__linux=5.15.0=0`
  - `__glibc=2.31=0`
  - `__unix=0=0`
  - `__archspec=1=x86_64`
- conda av metadata url : None
- channel URLs :
  - https://repo.anaconda.com/pkgs/main/linux-64
  - https://repo.anaconda.com/pkgs/main/noarch
  - https://repo.anaconda.com/pkgs/r/linux-64
  - https://repo.anaconda.com/pkgs/r/noarch
- platform : linux-64
- user-agent : conda/4.11.0 requests/2.25.1 CPython/3.8.8 Linux/5.15.0-52-generic ubuntu/20.04.3 glibc/2.31
- UID:GID : 1003:1003
- offline mode : False

channels:
- defaults

dependencies:
- python=3.9
- pip
- pytorch::cudatoolkit=11.3
- pytorch::pytorch==1.12.1
- pytorch::torchvision==0.13.1
- numpy
- pip:
  - ftfy~=6.1.1
  - omegaconf~=2.1.1
  - diffusers~=0.9.0
  - transformers~=4.25.1
  - scipy~=1.9.3
  - triton~=1.1.1
  - accelerate==0.14.0
  - git+https://github.com/facebookresearch/[email protected]

Video card: RTX 3090
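When reporting a kernel error like this one, it helps to list the exact versions of the packages involved and the GPU's compute capability (`python -m torch.utils.collect_env` covers much of this for PyTorch itself). The script below is a minimal sketch of a narrower report. The package list is an assumption based on the environment above, and `torch` is imported only if it is available. An RTX 3090 reports compute capability 8.6 (`sm86`), while the failing kernel file carries an `sm80` suffix, so the capability is worth stating explicitly.

```python
"""Print the package versions and GPU details relevant to this report.

A sketch: the package names below are an assumption (pip distribution
names matching the environment above). torch is optional, so the script
also runs on a machine without it or without a CUDA device.
"""
from importlib import metadata

PACKAGES = ["torch", "torchvision", "xformers", "diffusers",
            "transformers", "triton", "accelerate"]


def package_versions(names):
    """Map each distribution name to its installed version, or None."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def gpu_info():
    """Return (device name, "smXY" tag) for CUDA device 0, or None."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    major, minor = torch.cuda.get_device_capability(0)
    return torch.cuda.get_device_name(0), f"sm{major}{minor}"


if __name__ == "__main__":
    for pkg, version in package_versions(PACKAGES).items():
        print(f"{pkg}: {version or 'not installed'}")
    print("GPU:", gpu_info() or "no CUDA device visible")
```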

+1

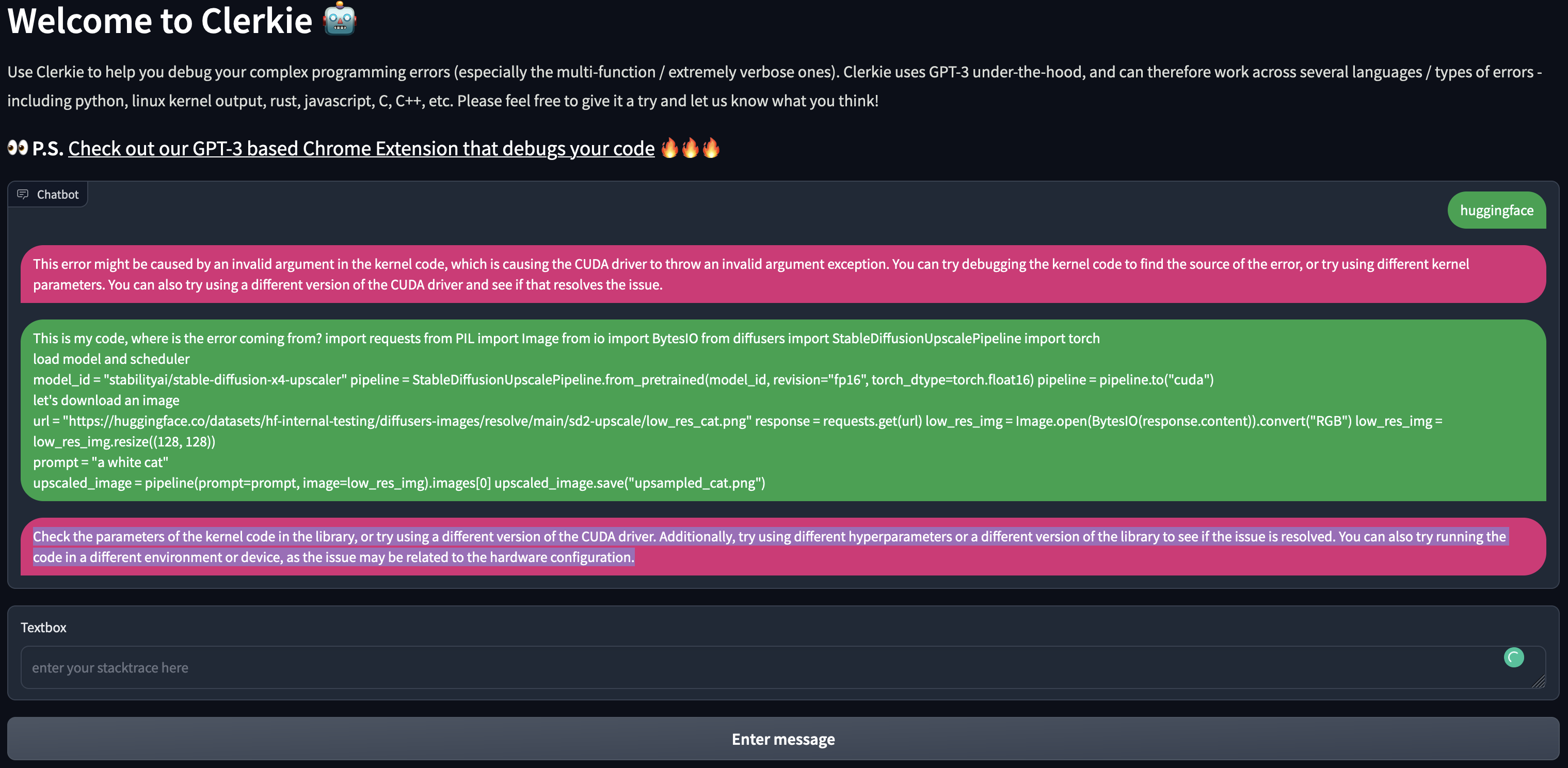

This error might be caused by an invalid argument passed to the kernel, which makes the CUDA call return an "invalid argument" error. You can try debugging the kernel code to find the source of the error, or try using different kernel parameters. You can also try a different version of the CUDA driver, different hyperparameters, or a different version of the library to see if that resolves the issue. Finally, you can try running the code in a different environment or on a different device, as the issue may be related to the hardware configuration.

Let me know if that helps!

Got this from Clerkie (ai code debugger) - https://bit.ly/clerkie_github

The issue is probably in the xformers library: https://github.com/facebookresearch/xformers

It works with the following list of dependencies and without `torch.autocast`:

channels:
- defaults

dependencies:
- python=3.9
- pip
- pytorch::cudatoolkit=11.3
- pytorch::pytorch==1.12.1
- pytorch::torchvision==0.13.1
- numpy
- ninja
- pip:
  - ftfy~=6.1.1
  - omegaconf~=2.1.1
  - diffusers~=0.10.2
  - transformers~=4.25.1
  - scipy~=1.9.3
  - triton==2.0.0.dev20221202
  - accelerate==0.15.0
  - git+https://github.com/facebookresearch/xformers.git@7835679ed1d91837de3b2e0391098469a8a8b6d6
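To see exactly which pins differ between the failing and the working environment, the two dependency lists can be diffed. This is a minimal sketch with the specifiers copied by hand from the two lists in this thread. An empty string marks an unpinned package, and the failing xformers ref, which is elided in the original report, is kept as a placeholder rather than guessed. The other reported difference, running without `torch.autocast`, is a code change and is not captured here.

```python
"""Diff the dependency specifiers of the failing and working environments."""

# Specifiers as written in the thread; "" means listed but unpinned.
FAILING = {
    "python": "=3.9", "pip": "", "cudatoolkit": "=11.3",
    "pytorch": "==1.12.1", "torchvision": "==0.13.1", "numpy": "",
    "ftfy": "~=6.1.1", "omegaconf": "~=2.1.1", "diffusers": "~=0.9.0",
    "transformers": "~=4.25.1", "scipy": "~=1.9.3", "triton": "~=1.1.1",
    "accelerate": "==0.14.0",
    "xformers": "@<ref elided in report>",  # placeholder, not a real ref
}

WORKING = {
    "python": "=3.9", "pip": "", "cudatoolkit": "=11.3",
    "pytorch": "==1.12.1", "torchvision": "==0.13.1", "numpy": "",
    "ninja": "",
    "ftfy": "~=6.1.1", "omegaconf": "~=2.1.1", "diffusers": "~=0.10.2",
    "transformers": "~=4.25.1", "scipy": "~=1.9.3",
    "triton": "==2.0.0.dev20221202", "accelerate": "==0.15.0",
    "xformers": "@7835679ed1d91837de3b2e0391098469a8a8b6d6",
}


def diff_pins(old, new):
    """Return {name: (old_spec, new_spec)} for every entry that differs.

    A package missing from one side is reported with None on that side.
    """
    changes = {}
    for name in sorted(old.keys() | new.keys()):
        before, after = old.get(name), new.get(name)
        if before != after:
            changes[name] = (before, after)
    return changes


def fmt(spec):
    """Render a specifier for display."""
    if spec is None:
        return "(not listed)"
    return spec or "(unpinned)"


if __name__ == "__main__":
    for name, (before, after) in diff_pins(FAILING, WORKING).items():
        print(f"{name}: {fmt(before)} -> {fmt(after)}")
```

With these lists it reports accelerate, diffusers, ninja, triton and xformers; the xformers entry may not be a real change, since the failing ref is not known. Changing one entry at a time would show which of them matters.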