BinaryRecordingExtractor.write_recording creates different .dat file depending on n_jobs and total_memory

(Using SpikeInterface version 0.94.0)

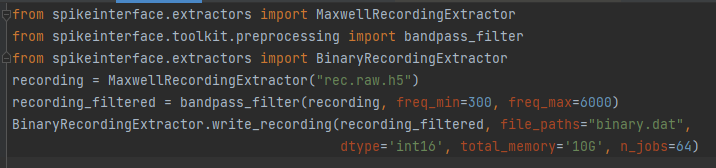

I have ephys data of dtype `uint16` stored in .raw.h5 format. I use the following code to convert the data to .dat format for Kilosort2.


When I change n_jobs or total_memory, the .dat file changes as well (Kilosort2 detects a different number of threshold crossings and units). This might be caused by how the recording is divided into chunks for parallel processing, but I'm not sure.

Can someone please tell me how I can change the parallel job parameters without affecting the .dat file? (I would like the .dat file to be independent of n_jobs and total_memory.) Thank you.

Hi @max-c-lim

Unfortunately, because of the filter, the binary data will be slightly different when using different chunk sizes. We add a margin to each chunk to mitigate border effects, but ideally the filter would be applied over the entire signal at once. If you have enough RAM you could do that, but the differences caused by chunking should be very small. Did you take a look at the traces?
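To see why chunking changes the output at all, here is a small standalone sketch (NumPy/SciPy only, not SpikeInterface's actual implementation) that filters synthetic noise once over the whole signal and once chunk by chunk, with and without a margin of real neighbouring samples. The filter settings (3rd-order Butterworth band-pass, 300-6000 Hz, 30 kHz sampling rate) and the helper `filter_in_chunks` are assumptions chosen for illustration.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def filter_in_chunks(x, sos, chunk_size, margin):
    """Zero-phase filter `x` one chunk at a time (hypothetical helper).

    Each chunk is extended by `margin` samples of real neighbouring data on
    both sides (clipped at the signal ends), filtered, then trimmed back.
    """
    n = x.shape[0]
    out = np.empty(n, dtype=np.float64)
    for start in range(0, n, chunk_size):
        end = min(start + chunk_size, n)
        lo, hi = max(start - margin, 0), min(end + margin, n)
        filtered = sosfiltfilt(sos, x[lo:hi])
        # keep only the central part that belongs to this chunk
        out[start:end] = filtered[start - lo:end - lo]
    return out


fs = 30_000.0
sos = butter(3, [300.0, 6000.0], btype="bandpass", fs=fs, output="sos")
x = np.random.default_rng(0).standard_normal(10 * int(fs))  # 10 s of noise

reference = sosfiltfilt(sos, x)  # whole signal filtered in one go
errors = {}
for chunk_size in (30_000, 45_000):
    for margin in (0, 300):
        y = filter_in_chunks(x, sos, chunk_size, margin)
        rel = np.max(np.abs(y - reference)) / np.max(np.abs(reference))
        errors[(chunk_size, margin)] = rel
        print(f"chunk={chunk_size} margin={margin} max rel. error={rel:.1e}")
```

With a margin the error should be small but generally not exactly zero, and the differing samples move when chunk_size moves, which is consistent with the .dat file depending on the chunking parameters.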

Also, note that Kilosort always finds a slightly different set of units and spikes, so I don't think the mismatch is due to the different chunking. You can also try running the same recording twice to see whether the changes are similar to those you get with different chunking options.

Hi @alejoe91

No, I did not look at the traces, but sometimes the difference in the binary data causes Kilosort2 to raise the following error after the splitting step finishes:

`Error using / Matrix dimensions must agree`

Kilosort2 also seems to give the same outputs when run twice on the same recording.

What is the best way to apply the filter over the entire signal?

Thanks for the quick response!

I see. You could try total_memory='100G', but then it would only use one job, so it's a tradeoff. Anyway, I would recommend checking the traces ;)
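A quick way to check the traces is to compare two .dat files written with different settings, sample by sample. This sketch assumes both files are int16 and interleaved as samples x channels (the layout Kilosort reads) and that you know the channel count; `compare_dat` is a hypothetical helper, and the demo uses small synthetic files instead of real recordings.

```python
import os
import tempfile

import numpy as np


def compare_dat(path_a, path_b, num_channels, dtype="int16"):
    """Return sample indices where two interleaved binary files differ,
    plus the max absolute difference across channels for every sample."""
    a = np.memmap(path_a, dtype=dtype, mode="r").reshape(-1, num_channels)
    b = np.memmap(path_b, dtype=dtype, mode="r").reshape(-1, num_channels)
    assert a.shape == b.shape, "files have different lengths"
    # widen to int32 so the subtraction cannot overflow
    diff = np.abs(a.astype(np.int32) - b.astype(np.int32)).max(axis=1)
    return np.flatnonzero(diff), diff


# Demo with two small synthetic files (hypothetical data, 4 channels)
num_channels = 4
rng = np.random.default_rng(0)
data = rng.integers(-1000, 1000, size=(1000, num_channels), dtype=np.int16)
other = data.copy()
other[499:502] += 1  # simulate a tiny mismatch around a chunk border at sample 500

with tempfile.TemporaryDirectory() as tmp:
    pa, pb = os.path.join(tmp, "a.dat"), os.path.join(tmp, "b.dat")
    data.tofile(pa)
    other.tofile(pb)
    idx, diff = compare_dat(pa, pb, num_channels)
    print(idx, diff.max())
```

If the differing samples cluster around multiples of the chunk size, the chunk borders are the likely cause.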

Thanks for the help! I'll take a look at the traces. Do you know what could be causing the Kilosort2 error? The error happened when I switched total_memory from 4G to 6G. Could this be unrelated?

Unfortunately, KS GPU errors are not very interpretable! Honestly, I usually use smaller chunks, like total_memory='1G'. Give it a try!

For filtering, I would recommend large chunks, something like 1 s.

We now have chunk_duration='1s', which can be used instead of chunk_memory / chunk_size / total_memory.
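The reason n_jobs and total_memory change the output is that they change where chunk borders fall. Below is a rough sketch, assuming total_memory is split evenly across n_jobs and converted to samples per chunk from the channel count and dtype size, and assuming decimal memory units ("4G" = 4e9 bytes). This reflects my understanding of how the chunk size is derived, not SpikeInterface's own code; the helper names and the 1024-channel, 20 kHz recording are hypothetical.

```python
import numpy as np

_UNITS = {"k": 10**3, "M": 10**6, "G": 10**9}  # assumption: decimal units


def parse_memory(mem):
    """'4G' -> 4_000_000_000 bytes (hypothetical helper)."""
    return int(float(mem[:-1]) * _UNITS[mem[-1]])


def chunk_size_from_memory(total_memory, n_jobs, num_channels, dtype):
    """Samples per chunk when `total_memory` is shared across `n_jobs` workers."""
    itemsize = np.dtype(dtype).itemsize
    return parse_memory(total_memory) // (num_channels * itemsize * n_jobs)


def chunk_size_from_duration(chunk_duration_s, sampling_frequency):
    """Samples per chunk for a fixed duration; does not depend on n_jobs."""
    return int(chunk_duration_s * sampling_frequency)


num_channels, fs = 1024, 20_000.0  # hypothetical recording
for mem, jobs in [("4G", 8), ("6G", 8), ("4G", 4)]:
    size = chunk_size_from_memory(mem, jobs, num_channels, "uint16")
    print(mem, jobs, size, f"{size / fs:.2f} s")
print("1s ->", chunk_size_from_duration(1.0, fs), "samples for any n_jobs")
```

Under these assumptions, going from 4G to 6G moves every chunk border, while a fixed chunk_duration keeps the borders in the same place no matter how many jobs run.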

My feeling is that converting uint16 directly to int16 at the filtering step could cause problems. Maybe not. Look at the traces! And particularly at the traces at chunk borders!
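To illustrate the uint16 concern: casting uint16 straight to int16 wraps every value above 32767 to a negative number, which would inject large artificial jumps before filtering. A common workaround is to widen the type and subtract an offset first. The mid-scale offset of 2**15 below is an assumption; the correct offset (and gain) depends on the acquisition system.

```python
import numpy as np

raw = np.array([0, 1000, 32767, 32768, 40000, 65535], dtype=np.uint16)

# Naive cast: values above 32767 wrap around to negative numbers
naive = raw.astype(np.int16)  # -> 0, 1000, 32767, -32768, -25536, -1

# Safer: widen first, remove a (hypothetical) mid-scale offset, then cast
OFFSET = 2**15  # assumption: the ADC zero sits at mid-scale
centered = (raw.astype(np.int32) - OFFSET).astype(np.int16)
# -> -32768, -31768, -1, 0, 7232, 32767 (monotonic, no wrap-around)

print(naive)
print(centered)
```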

Closing this now; feel free to reopen.