Image regression demo/tutorial

Is your feature request related to a problem? Please describe.
It would be great to have an image regression demo/tutorial, for example MR-to-CT conversion using MAE as the loss function.

https://github.com/Project-MONAI/MONAI/discussions/1401
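For reference, the MAE (mean absolute error, also called L1 loss) mentioned in the request is the average absolute voxel-wise difference between prediction and target, MAE = (1/N) Σ |ŷᵢ − yᵢ|. A minimal NumPy sketch follows; the function name and toy values are illustrative only. In a PyTorch training loop, the same quantity is what `torch.nn.L1Loss()` computes with its default `reduction="mean"`.

```python
import numpy as np

def mean_absolute_error(prediction: np.ndarray, target: np.ndarray) -> float:
    """MAE / L1 loss: mean of |prediction - target| over all elements."""
    if prediction.shape != target.shape:
        raise ValueError("prediction and target must have the same shape")
    return float(np.mean(np.abs(prediction - target)))

# Toy values standing in for synthetic and reference CT intensities.
pred = np.array([[0.0, 100.0], [-50.0, 20.0]])
ref = np.array([[10.0, 90.0], [-40.0, 20.0]])
print(mean_absolute_error(pred, ref))  # 7.5
```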

I would also be interested in this. I am currently trying to use a U-Net to map noisy, low-resolution 16-bit images to corresponding less noisy, higher-resolution 16-bit images of the same size.

If I use a standard U-Net implementation (following Ronneberger et al., 2015 directly), I get the expected output. However, with MONAI's UNet I get poor results, both in the dynamic range (the output spans -1.0 to 1.0 with the image background around 0.0, which corresponds to ~32,000 in 16 bit instead of the expected <<1000) and in the quality of the reconstruction (a very dotty appearance, with neighboring pixels alternating between strongly negative and strongly positive values).

I am obviously doing something wrong, but I don't understand what.

Thanks!
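One thing worth checking for 16-bit data is that the intensity scaling applied to the training targets is exactly inverted when converting predictions back to 16 bit. The NumPy sketch below (hypothetical helper names, assuming a fixed scale of 65535) shows a round trip through [0, 1], and also the arithmetic behind the symptom described above: a linear mapping of [-1, 1] onto [0, 65535] sends a 0.0 background to the middle of the range.

```python
import numpy as np

UINT16_MAX = np.iinfo(np.uint16).max  # 65535

def to_unit_range(img_u16: np.ndarray) -> np.ndarray:
    """Map a uint16 image to float32 in [0, 1]; a dark background stays near 0."""
    return img_u16.astype(np.float32) / UINT16_MAX

def from_unit_range(pred: np.ndarray) -> np.ndarray:
    """Invert to_unit_range: clip to [0, 1], rescale and round back to uint16."""
    return np.round(np.clip(pred, 0.0, 1.0) * UINT16_MAX).astype(np.uint16)

def from_symmetric_range(pred: np.ndarray) -> np.ndarray:
    """Linear map of [-1, 1] onto [0, 65535]; note that 0.0 lands mid-range."""
    return np.round((np.clip(pred, -1.0, 1.0) + 1.0) / 2.0 * UINT16_MAX).astype(np.uint16)

img = np.array([[0, 500], [12000, 65535]], dtype=np.uint16)
print(from_unit_range(to_unit_range(img)))  # round trip recovers the input
print(from_symmetric_range(np.zeros(1)))    # [32768]: a 0.0 background becomes mid-gray
```

Dividing by 65535 squeezes data whose useful signal lies below ~1000 into a very small range; a dataset-specific scale (for example a high percentile of the training targets) may work better, as long as the same value is used for the inverse.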

Hi aarpon,

I actually have exactly the same problem with the dotty appearance and alternating pixel values. Have you found a solution yet? I'd love to use MONAI for image synthesis but haven't figured it out yet.
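A common source of this kind of alternating, checkerboard-like pattern is the uneven overlap of strided transposed convolutions (described by Odena et al., Distill 2016). Assuming the upsampling path uses stride-2 transposed convolutions with kernel size 3 (as far as we know, `up_kernel_size` defaults to 3 in MONAI's `UNet`, and strides of 2 are a common choice), some output pixels receive contributions from two input pixels and their neighbours from only one. The NumPy sketch below, with a hypothetical helper name, counts those contributions for an all-ones 1D input and kernel without padding; whether this is the actual cause in your setup is an assumption worth testing.

```python
import numpy as np

def transposed_conv_overlap(n_in: int, kernel: int, stride: int) -> np.ndarray:
    """Count how many input pixels write to each output position of a 1D
    transposed convolution (no padding), i.e. the result for an all-ones
    input and an all-ones kernel."""
    n_out = (n_in - 1) * stride + kernel
    counts = np.zeros(n_out, dtype=int)
    for i in range(n_in):
        # input pixel i is spread over output positions [i*stride, i*stride + kernel)
        counts[i * stride : i * stride + kernel] += 1
    return counts

odd = transposed_conv_overlap(6, kernel=3, stride=2)
even = transposed_conv_overlap(6, kernel=4, stride=2)
print(odd)   # [1 1 2 1 2 1 2 1 2 1 2 1 1]: interior alternates 2, 1
print(even)  # [1 1 2 2 2 2 2 2 2 2 2 2 1 1]: interior is uniform

# In 2D the overlap count at (p, q) is the product of the 1D counts: a 4/2/1 checkerboard.
print(np.outer(odd, odd)[2:6, 2:6])
```

With a kernel size divisible by the stride (4 here) the interior overlap is uniform; interpolation followed by a regular convolution is another frequently used way to avoid the pattern.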

As I said, I switched to another U-Net implementation, which works better but is still much worse than other approaches such as CARE (search for csbdeep). One difference is probably that CARE only uses patches with enough foreground signal, while so far I have been sampling randomly (and my images contain a lot of background). However, I don't believe this alone explains the large difference in results. I had to work on other things recently, but I will dedicate some time next week to looking into it carefully. I will keep you posted.
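Foreground-aware patch sampling of the kind described for CARE can be approximated as below. This is a simplified stand-in, not CARE's actual criterion: the function name, the intensity threshold and the minimum foreground fraction are hypothetical parameters. Random corners are drawn and kept only if enough pixels of the target patch exceed the threshold; the corners are returned so that the same crop can be applied to both the noisy input and the clean target.

```python
import numpy as np

def sample_foreground_corners(target, patch_size, n_patches, threshold,
                              min_fraction, rng, max_tries=10_000):
    """Return up to `n_patches` top-left corners (y, x) of 2D patches in which at
    least `min_fraction` of the target pixels are brighter than `threshold`."""
    h, w = target.shape
    ph, pw = patch_size
    corners = []
    for _ in range(max_tries):
        if len(corners) == n_patches:
            break
        y = int(rng.integers(0, h - ph + 1))  # upper bound is exclusive
        x = int(rng.integers(0, w - pw + 1))
        patch = target[y:y + ph, x:x + pw]
        if np.mean(patch > threshold) >= min_fraction:
            corners.append((y, x))
    return corners

# Toy data: mostly background with one bright 32x32 region (hypothetical values).
rng = np.random.default_rng(0)
target = np.zeros((128, 128), dtype=np.uint16)
target[:32, :32] = 5000
noisy = target + rng.integers(0, 200, size=target.shape).astype(np.uint16)

corners = sample_foreground_corners(target, (16, 16), n_patches=8,
                                    threshold=1000, min_fraction=0.25, rng=rng)
pairs = [(noisy[y:y + 16, x:x + 16], target[y:y + 16, x:x + 16]) for y, x in corners]
print(len(pairs))
```

Within MONAI, transforms such as `RandWeightedCropd` or `RandCropByPosNegLabeld` may serve a similar purpose.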

Perhaps you could try BasicUNet as well; it's less flexible but easier to configure: https://docs.monai.io/en/latest/networks.html#monai.networks.nets.BasicUNet

Indeed, thanks! I will also try the basic U-Net.

Having such a tutorial would be really helpful. I have now heard of several people facing similar problems when trying to use U-Nets in MONAI for various image-to-image regression tasks.

As a starting point, a good simple demo could focus on image denoising with a U-Net backbone. This could be done with a synthetic toy dataset, generated for example by adding Gaussian noise to the MedNIST dataset. From a quick look at the default parameters of MONAI's UNets, I guess it would at least require moving away from instance normalization.
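The concern about instance normalization can be illustrated with a small NumPy sketch. Instance normalization without learned affine parameters shifts each sample and channel to zero mean and unit variance, so an image and a brighter, rescaled copy of it produce (almost) the same output, and the absolute intensity level is not passed on to the next layer. The function below is a hypothetical re-implementation for illustration, not MONAI's or PyTorch's code; whether this is what degrades regression results in practice is an assumption, which could be tested by changing the network's `norm` setting, e.g. to batch normalization.

```python
import numpy as np

def instance_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Instance normalization without affine parameters for an (N, C, H, W) array:
    each sample and channel is shifted to zero mean and scaled to unit variance."""
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
x = rng.random((1, 1, 8, 8))
dim = instance_norm(x)
bright = instance_norm(10.0 * x + 3000.0)   # same structure, very different intensity range
print(np.allclose(dim, bright, atol=1e-3))  # True: absolute intensity information is gone
```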

@wyli Would you be aware of anyone who could spend some time putting such a demo together?

There are also some related tutorial requests (e.g. #866), but they are a bit more complex. I would thus vote to prioritise the simpler denoising demo.
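For the denoising demo proposed above, training pairs could be generated on the fly by adding Gaussian noise to clean images. A minimal NumPy sketch, assuming the clean image has already been loaded and scaled to [0, 1]; here a random array stands in for a MedNIST image, and the function name and noise level are hypothetical. Within a MONAI pipeline, a transform such as `RandGaussianNoise` could play the same role, applied only to the network input and not to the target.

```python
import numpy as np

def make_denoising_pair(clean: np.ndarray, sigma: float, rng: np.random.Generator):
    """Return (noisy_input, clean_target) for supervised denoising.
    `clean` is assumed to be scaled to [0, 1]; the noise is additive, zero-mean
    Gaussian and is deliberately not clipped."""
    target = clean.astype(np.float32)
    noise = rng.normal(0.0, sigma, size=target.shape).astype(np.float32)
    return target + noise, target

rng = np.random.default_rng(42)
clean = rng.random((64, 64))  # stand-in for a MedNIST image scaled to [0, 1]
noisy, target = make_denoising_pair(clean, sigma=0.1, rng=rng)
print(noisy.shape, float(np.std(noisy - target)))  # noise level close to 0.1
```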

Sure, I'll ask. For now, there is a work-in-progress demo for MRI reconstruction that has a regression-like pipeline: https://github.com/Project-MONAI/tutorials/pull/838

This is a great idea. MONAI Label would also benefit from this tutorial.

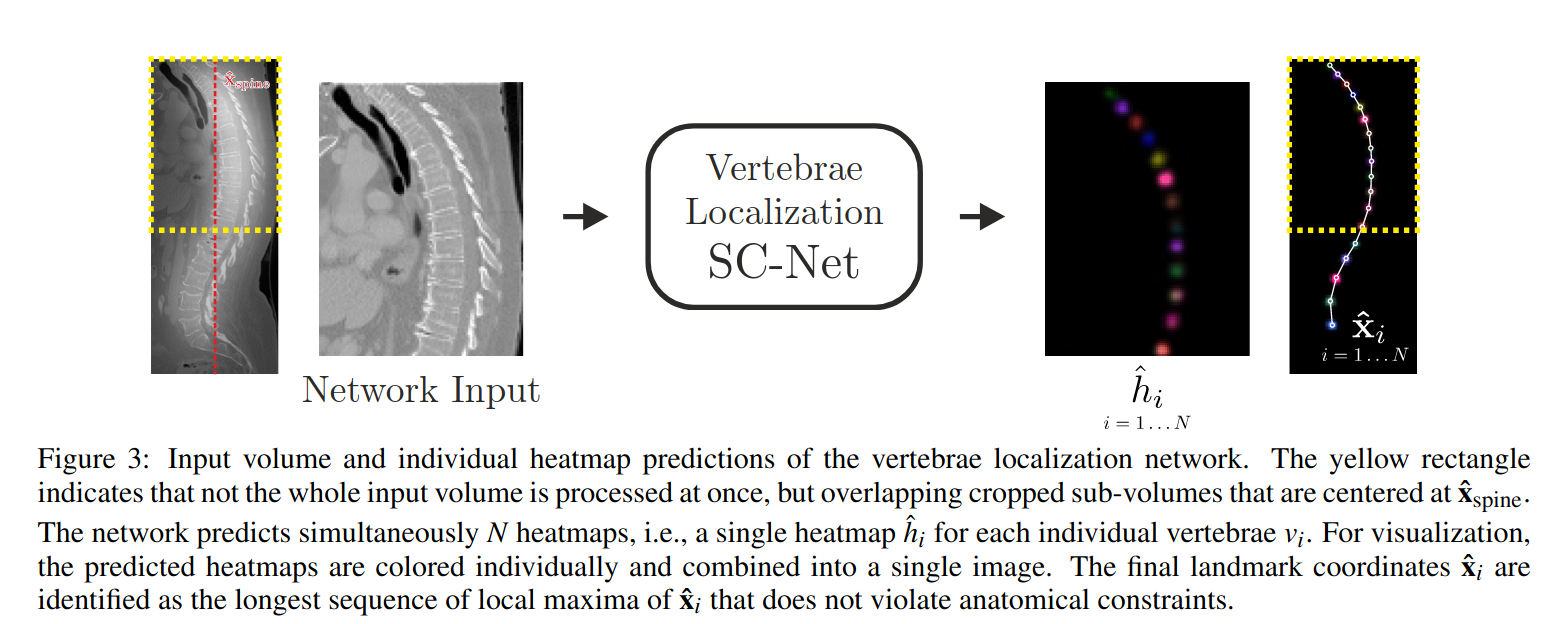

Another use case could be heatmap regression, like stage two of this multistage approach for vertebra segmentation.

The labels can easily be created from the ground-truth segmentation.
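A minimal sketch of how heatmap regression targets could be derived from a ground-truth segmentation: for each nonzero label, a Gaussian with peak value 1 is centred on the label's centroid. The function name, the one-heatmap-per-label layout and `sigma` are assumptions for illustration, not the approach used in the MONAI Label app.

```python
import numpy as np

def heatmaps_from_segmentation(seg: np.ndarray, sigma: float) -> dict:
    """For each nonzero label in an integer segmentation (any number of spatial
    dimensions), return a Gaussian heatmap with peak 1 at the label's centroid."""
    grids = np.indices(seg.shape, dtype=np.float32)  # one coordinate grid per axis
    heatmaps = {}
    for label in np.unique(seg):
        if label == 0:  # label 0 is assumed to be background
            continue
        centroid = np.argwhere(seg == label).mean(axis=0)
        sq_dist = sum((grid - c) ** 2 for grid, c in zip(grids, centroid))
        heatmaps[int(label)] = np.exp(-sq_dist / (2.0 * sigma ** 2))
    return heatmaps

# Toy 2D segmentation with two "vertebrae" (hypothetical labels 1 and 2).
seg = np.zeros((32, 32), dtype=np.int64)
seg[4:9, 4:9] = 1
seg[20:25, 10:15] = 2
heat = heatmaps_from_segmentation(seg, sigma=2.0)
for label, hm in heat.items():
    peak = tuple(int(i) for i in np.unravel_index(np.argmax(hm), hm.shape))
    print(label, peak)  # 1 (6, 6) and 2 (22, 12)
```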

@rijobro Something spine related to what we're working on could fit here.

Agree with @ericspod!

FYI, a version of the multistage vertebra segmentation model is now working in MONAI Label. If you fetch the latest version, you should be able to test this model on the VerSe dataset:

```
monailabel start_server --app sample-apps/radiology --studies PATH_TO_IMAGES --conf models localization_spine,localization_vertebra,segmentation_vertebra
```

The second stage of this model (localization_vertebra) uses segmentation instead of regression. It'd be good to see how this performs with regression as well.

@diazandr3s Your first stage also uses segmentation rather than regression, right?

Yes, currently all stages work and use segmentation. It'd be nice to use regression for the second stage and see whether that improves performance.
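If the second stage were switched to heatmap regression, its output would need to be decoded back into positions. One simple option, sketched below under the assumption of one heatmap channel per landmark, is to take each channel's argmax and discard channels whose peak is below a threshold; the function name and the 0.5 default are hypothetical.

```python
import numpy as np

def heatmap_peaks(heatmaps: np.ndarray, min_value: float = 0.5) -> list:
    """Decode a (C, *spatial) stack of predicted heatmaps into one integer
    coordinate per channel (its argmax), or None if the peak is below min_value."""
    coords = []
    for channel in heatmaps:
        idx = int(np.argmax(channel))
        if channel.flat[idx] < min_value:
            coords.append(None)  # treat as "landmark not found"
        else:
            coords.append(tuple(int(i) for i in np.unravel_index(idx, channel.shape)))
    return coords

pred = np.zeros((2, 16, 16), dtype=np.float32)
pred[0, 5, 7] = 0.9  # confident peak in channel 0
pred[1, 3, 3] = 0.2  # weak response in channel 1
print(heatmap_peaks(pred))  # [(5, 7), None]
```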