Does TensorRT support the fusion of LeakyReLU and Conv?

You may refer to https://docs.nvidia.com/deeplearning/tensorrt/developer-guide/index.html#fusion-types

Or create a test case and run it with `trtexec --verbose`. The log shows the final engine structure, which tells you whether TRT supports the fusion in your case.
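Here is a minimal sketch for checking the result from a script instead of by eye. It assumes TensorRT 8.2 or later, where `trtexec` can write per-layer engine information to JSON with `--profilingVerbosity=detailed --exportLayerInfo=layers.json`. It also assumes the JSON has a top-level `"Layers"` list whose entries are either plain names or objects with a `"Name"` key. Finally, it assumes a fused layer's name joins the original node names with ` + ` (for example `Conv_0 + LeakyRelu_1`), as TRT logs commonly show. None of these is a documented contract, so cross-check against the `--verbose` log. The helper names `layer_names` and `is_fused` and the node names `Conv_0`/`LeakyRelu_1` are hypothetical.

```python
import re
from typing import Any, Dict, List

# Assumed separators in fused layer names, e.g. "Conv_0 + LeakyRelu_1"
# or "Conv_0 + PWN(LeakyRelu_1)". Not a documented TensorRT contract.
_SPLIT = re.compile(r"\s*\+\s*|[(),]\s*")


def layer_names(layer_info: Dict[str, Any]) -> List[str]:
    """Collect engine layer names from an --exportLayerInfo JSON dict.

    Entries may be plain strings (layer_names_only verbosity) or
    dicts with a "Name" key (detailed verbosity).
    """
    names: List[str] = []
    for entry in layer_info.get("Layers", []):
        if isinstance(entry, str):
            names.append(entry)
        elif isinstance(entry, dict) and "Name" in entry:
            names.append(entry["Name"])
    return names


def is_fused(layer_info: Dict[str, Any], conv_name: str, act_name: str) -> bool:
    """Return True if a single engine layer contains both original nodes."""
    for name in layer_names(layer_info):
        # Split the fused name into the original node names it lists.
        tokens = {t for t in _SPLIT.split(name) if t}
        if conv_name in tokens and act_name in tokens:
            return True
    return False
```

Usage: `is_fused(json.load(open("layers.json")), "Conv_0", "LeakyRelu_1")`, with the node names replaced by the ones in your ONNX model. If Conv and LeakyReLU show up as two separate engine layers, TRT fell back to two kernels.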


OK, thank you for your reply.

Does TensorRT support leakyrelu quantization?

Whether the fusion happens depends on whether TRT has tactics supporting that. The very rough guidelines are:

- Conv+LeakyReLU should be fused in FP16 or in INT8 on Turing and Ampere GPUs.

- Other precisions and older GPUs are not guaranteed to fuse. If TRT doesn't have a tactic supporting this fusion, it falls back to a two-kernel approach (separate Conv and LeakyReLU kernels).

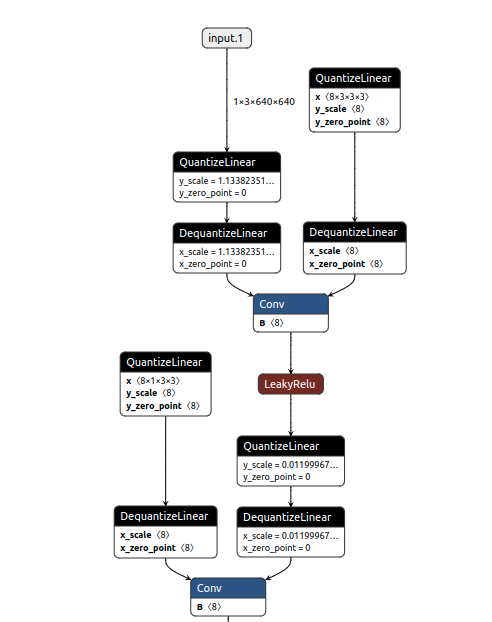

- If QAT (i.e., Q/DQ ops) is used, Conv+LeakyReLU can only be fused if there are no Q/DQ ops between Conv and LeakyReLU, and there are Q/DQ ops before Conv and after LeakyReLU. Basically, it will be fused if the pattern looks like:

`...->Q->DQ->Conv->LeakyReLU->Q->DQ->...`
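The rough guidelines above can be encoded as a toy checker, purely for illustration. It is a simplified model of the rules stated in this reply, not TensorRT's actual decision logic, which depends on the tactics available for your GPU and TRT version. It makes several assumptions. The graph is modeled as a linear list of op names. Precision is passed as a string. GPUs are identified by compute capability (Turing = 7.5; Ampere = 8.0, 8.6, 8.7). All function and constant names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# Compute capabilities (major, minor) of Turing (7.5) and Ampere (8.0, 8.6, 8.7).
TURING_AMPERE = {(7, 5), (8, 0), (8, 6), (8, 7)}

# The fusible QAT window from the rule above, centred on the Conv node.
FUSIBLE_QDQ_WINDOW = ["Q", "DQ", "Conv", "LeakyReLU", "Q", "DQ"]


def qdq_window_ok(ops: Sequence[str], conv_idx: int) -> bool:
    """True if Q->DQ directly precede ops[conv_idx] and LeakyReLU->Q->DQ directly follow it."""
    if conv_idx < 2:
        return False  # no room for the leading Q->DQ pair
    window = list(ops[conv_idx - 2 : conv_idx + 4])
    return window == FUSIBLE_QDQ_WINDOW


def expected_fusion(
    precision: str,
    compute_capability: Tuple[int, int],
    qdq_ops: Optional[Sequence[str]] = None,
    conv_idx: int = 0,
) -> str:
    """Rough guideline only: 'expected', 'not guaranteed', or 'not fused'."""
    # QAT case: Q/DQ placement is a necessary condition for the fusion.
    if qdq_ops is not None and not qdq_window_ok(qdq_ops, conv_idx):
        return "not fused"
    if precision.lower() in ("fp16", "int8") and compute_capability in TURING_AMPERE:
        return "expected"
    # Other precisions or GPUs: TRT may fall back to two kernels.
    return "not guaranteed"
```

Even when this returns `"expected"`, confirm the result in the engine log or layer info, because TRT picks the final kernels per tactic.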


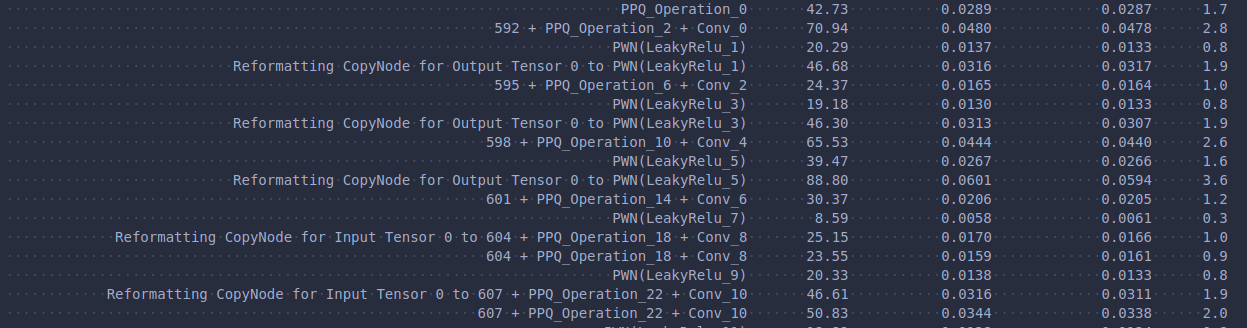

Thank you for your reply. I tried the above method, but according to the profile dumped during model inference, LeakyReLU and Conv do not seem to be fused.

@pangr What's your GPU, and have you tried the latest 8.6 GA release? Thanks!

Closing legacy issues. Please reopen if you still have the issue with the latest TRT. Thanks!