Text-Summarizer-Pytorch-Chinese

A Chinese-language abstractive summarization service.

Traceback (most recent call last):
  File "D:/Text-Summarizer-Pytorch-Chinese-master/eval.py", line 171, in <module>
    eval_processor.evaluate_batch(True)
  File "D:/Text-Summarizer-Pytorch-Chinese-master/eval.py", line 78, in evaluate_batch
    start_id, end_id, unk_id)
  File "D:\Text-Summarizer-Pytorch-Chinese-master\beam_search.py", line 179, in beam_search
    sum_temporal_srcs_i, prev_s_i).type(T.long)
  File "D:\Text-Summarizer-Pytorch-Chinese-master\beam_search.py",...

Bumps [numpy](https://github.com/numpy/numpy) from 1.18.4 to 1.22.0. Release notes Sourced from numpy's releases. v1.22.0 NumPy 1.22.0 Release Notes NumPy 1.22.0 is a big release featuring the work of 153 contributors spread...

This implementation does not include a pointer-generator network, does it?

Bumps [tensorflow](https://github.com/tensorflow/tensorflow) from 2.2.1 to 2.7.2. Release notes Sourced from tensorflow's releases. TensorFlow 2.7.2 Release 2.7.2 This releases introduces several vulnerability fixes: Fixes a code injection in saved_model_cli (CVE-2022-29216) Fixes...

I have adjusted both the learning rate and batch_size, and the result is still the same.

Experimental results cannot be reproduced

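As the title says: after following the README steps and training for 300,000 iterations, the lowest loss is a little over 3, and ROUGE-1 does not even reach 0.25.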

Vocab entries such as 40001, 40002, 400xx cannot be found. How can this be resolved? Is it enough to generate a new vocabulary myself?

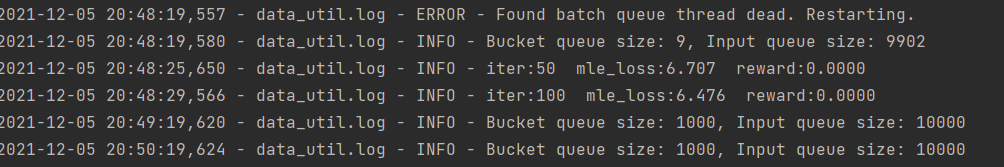

No matter what max_iterations is set to, and with the code otherwise unmodified, a message appears during training and then keeps repeating.

Once results are produced, quite a few [unk] tokens appear. Is this because the model has not been trained enough? For example, article: 5 元 一条 长裤 8 元 一件 毛衣 12 元 一双 球鞋 还有 各式 家具用品 … … 这 不是 清仓 大 卖场 而是 广州 一个 长年 的...