LINSTOR leaves orphan resources on the nodes

Hello. We faced with issue when some linstor nodes become offline:

# linstor n l

Defaulted container "linstor-controller" out of: linstor-controller, kube-rbac-proxy

+---------------------------------------------------------------------------------------+

| Node | NodeType | Addresses | State |

|=======================================================================================|

| b-node0 | SATELLITE | 172.30.250.150:3367 (SSL) | OFFLINE |

| h-node0 | SATELLITE | 172.30.250.2:3367 (SSL) | OFFLINE |

| i-node0 | SATELLITE | 172.30.250.3:3367 (SSL) | OFFLINE |

| linstor-controller-b85954459-vkpxr | CONTROLLER | 10.111.0.177:3367 (SSL) | Online |

| master0 | SATELLITE | 172.30.250.200:3367 (SSL) | Online |

| node0 | SATELLITE | 172.30.250.202:3367 (SSL) | Online |

| node1 | SATELLITE | 172.30.250.203:3367 (SSL) | Online |

| node2 | SATELLITE | 172.30.250.204:3367 (SSL) | Online |

| system0 | SATELLITE | 172.30.250.201:3367 (SSL) | Online |

+---------------------------------------------------------------------------------------+

While satellite is reachable linstor-controller is not trying to recover connection to them:

root@master0:~# telnet 172.30.250.150 3367

Trying 172.30.250.150...

Connected to 172.30.250.150.

Escape character is '^]'.

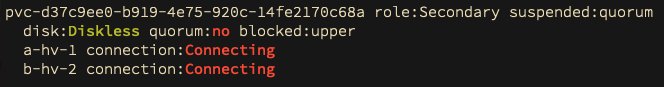

After restarting linstor-controller nodes become online, but some deleted resources remain orphan on them:

pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 role:Secondary

disk:Diskless quorum:no

node0 connection:Connecting

node2 connection:Connecting

Kernel log:

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807/0 drbd1003 node0: pdsk( UpToDate -> DUnknown ) repl( Established -> Off )

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node0: ack_receiver terminated

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node0: Terminating ack_recv thread

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node0: Terminating sender thread

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node0: Starting sender thread (from drbd_r_pvc-bb9e [1112666])

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node0: Connection closed

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node0: conn( TearDown -> Unconnected )

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node0: Restarting receiver thread

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node0: conn( Unconnected -> Connecting )

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: Preparing remote state change 1428372717

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: Committing remote state change 1428372717 (primary_nodes=0)

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: conn( Connected -> TearDown ) peer( Secondary -> Unknown )

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807/0 drbd1003: quorum( yes -> no )

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807/0 drbd1003 node2: pdsk( UpToDate -> DUnknown ) repl( Established -> Off )

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: ack_receiver terminated

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: Terminating ack_recv thread

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: Terminating sender thread

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: Starting sender thread (from drbd_r_pvc-bb9e [1112668])

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: Connection closed

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: conn( TearDown -> Unconnected )

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: Restarting receiver thread

Apr 22 14:49:26 h-node0 kernel: drbd pvc-bb9e3318-5554-475c-a8b5-b78f19ffc807 node2: conn( Unconnected -> Connecting )

Unfortunately logs from satellite and controller are missing

cc @duckhawk

errorlog.txt Example of error log from offline linstor node

errorlog-controller.txt Logs from controller at that moment

Hello. Have the same problem, a lot of resources are in the same state w/o opportunity to delete them.

┊ pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 ┊ k8s-eu-de-n01 ┊ 7015 ┊ Unused ┊ ┊ Unknown ┊ 2022-05-10 02:08:38 ┊

┊ pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 ┊ k8s-eu-de-n02 ┊ 7015 ┊ Unused ┊ ┊ Unknown ┊ ┊

┊ pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 ┊ k8s-eu-de-n03 ┊ 7015 ┊ Unused ┊ ┊ Unknown ┊ 2022-05-10 02:08:40 ┊

root@linstor-controller-5bcb9d7bc6-2k7bw:/# linstor r d k8s-eu-de-n01 pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

INFO:

Tie breaker marked for deletion

INFO:

Resource-definition property 'DrbdOptions/Resource/quorum' was removed as there are not enough resources for quorum

INFO:

Resource-definition property 'DrbdOptions/Resource/on-no-quorum' was removed as there are not enough resources for quorum

SUCCESS:

Description:

Node: k8s-eu-de-n01, Resource: pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 preparing for deletion.

Details:

Node: k8s-eu-de-n01, Resource: pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 UUID is: 09ac3830-7654-4a66-9a4a-b731093ac41c

SUCCESS:

Preparing deletion of resource on 'k8s-eu-de-n01'

ERROR:

(Node: 'k8s-eu-de-n02') Failed to adjust DRBD resource pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

Show reports:

linstor error-reports show 627A45FE-76E09-001588

SUCCESS:

Preparing deletion of resource on 'k8s-eu-de-n03'

ERROR:

Description:

Deletion of resource 'pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2' on node 'k8s-eu-de-n01' failed due to an unknown exception.

Details:

Node: k8s-eu-de-n01, Resource: pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

Show reports:

linstor error-reports show 627A245C-00000-000389

root@linstor-controller-5bcb9d7bc6-2k7bw:/# linstor error-reports show 627A45FE-76E09-001588

ERROR REPORT 627A45FE-76E09-001588

============================================================

Application: LINBIT�� LINSTOR

Module: Satellite

Version: 1.18.0

Build ID: 648ab925644f53039239c5aec366a11f046f5977

Build time: 2022-04-06T15:53:04+00:00

Error time: 2022-05-10 15:16:32

Node: k8s-eu-de-n02

============================================================

Reported error:

===============

Description:

Failed to adjust DRBD resource pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

Category: LinStorException

Class name: ResourceException

Class canonical name: com.linbit.linstor.core.devmgr.exceptions.ResourceException

Generated at: Method 'adjustDrbd', Source file 'DrbdLayer.java', Line #819

Error message: Failed to adjust DRBD resource pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

Error context:

An error occurred while processing resource 'Node: 'k8s-eu-de-n02', Rsc: 'pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2''

Call backtrace:

Method Native Class:Line number

adjustDrbd N com.linbit.linstor.layer.drbd.DrbdLayer:819

process N com.linbit.linstor.layer.drbd.DrbdLayer:393

process N com.linbit.linstor.core.devmgr.DeviceHandlerImpl:847

processResourcesAndSnapshots N com.linbit.linstor.core.devmgr.DeviceHandlerImpl:359

dispatchResources N com.linbit.linstor.core.devmgr.DeviceHandlerImpl:169

dispatchResources N com.linbit.linstor.core.devmgr.DeviceManagerImpl:309

phaseDispatchDeviceHandlers N com.linbit.linstor.core.devmgr.DeviceManagerImpl:1083

devMgrLoop N com.linbit.linstor.core.devmgr.DeviceManagerImpl:735

run N com.linbit.linstor.core.devmgr.DeviceManagerImpl:631

run N java.lang.Thread:829

Caused by:

==========

Description:

Execution of the external command 'drbdadm' failed.

Cause:

The external command exited with error code 1.

Correction:

- Check whether the external program is operating properly.

- Check whether the command line is correct.

Contact a system administrator or a developer if the command line is no longer valid

for the installed version of the external program.

Additional information:

The full command line executed was:

drbdadm -vvv adjust pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

The external command sent the following output data:

drbdsetup resource-options pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 --set-defaults --quorum=off

drbdsetup del-peer pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 2

drbdsetup new-peer pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 0 --_name=k8s-eu-de-n01 --verify-alg=crct10dif-pclmul --shared-secret=GN46k81lfx6OR9dFCfJL --cram-hmac-alg=sha1

drbdsetup new-path pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 0 ipv4:10.0.0.2:7015 ipv4:10.0.0.3:7015

The external command sent the following error information:

Value of 'quorum' differs: r=majority c=off

pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2: Failure: (102) Local address(port) already in use.

Command 'drbdsetup new-path pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2 0 ipv4:10.0.0.2:7015 ipv4:10.0.0.3:7015' terminated with exit code 10

Category: LinStorException

Class name: ExtCmdFailedException

Class canonical name: com.linbit.extproc.ExtCmdFailedException

Generated at: Method 'execute', Source file 'DrbdAdm.java', Line #593

Error message: The external command 'drbdadm' exited with error code 1

Call backtrace:

Method Native Class:Line number

execute N com.linbit.linstor.layer.drbd.utils.DrbdAdm:593

adjust N com.linbit.linstor.layer.drbd.utils.DrbdAdm:90

adjustDrbd N com.linbit.linstor.layer.drbd.DrbdLayer:741

process N com.linbit.linstor.layer.drbd.DrbdLayer:393

process N com.linbit.linstor.core.devmgr.DeviceHandlerImpl:847

processResourcesAndSnapshots N com.linbit.linstor.core.devmgr.DeviceHandlerImpl:359

dispatchResources N com.linbit.linstor.core.devmgr.DeviceHandlerImpl:169

dispatchResources N com.linbit.linstor.core.devmgr.DeviceManagerImpl:309

phaseDispatchDeviceHandlers N com.linbit.linstor.core.devmgr.DeviceManagerImpl:1083

devMgrLoop N com.linbit.linstor.core.devmgr.DeviceManagerImpl:735

run N com.linbit.linstor.core.devmgr.DeviceManagerImpl:631

run N java.lang.Thread:829

END OF ERROR REPORT.

root@linstor-controller-5bcb9d7bc6-2k7bw:/# linstor error-reports show 627A245C-00000-000389

ERROR REPORT 627A245C-00000-000389

============================================================

Application: LINBIT�� LINSTOR

Module: Controller

Version: 1.18.0

Build ID: 648ab925644f53039239c5aec366a11f046f5977

Build time: 2022-04-06T15:53:04+00:00

Error time: 2022-05-10 15:16:34

Node: linstor-controller-5bcb9d7bc6-2k7bw

Peer: RestClient(10.111.1.1; 'PythonLinstor/1.13.0 (API1.0.4): Client 1.13.0')

============================================================

Reported error:

===============

Category: RuntimeException

Class name: DelayedApiRcException

Class canonical name: com.linbit.linstor.core.apicallhandler.response.CtrlResponseUtils.DelayedApiRcException

Generated at: Method 'lambda$mergeExtractingApiRcExceptions$4', Source file 'CtrlResponseUtils.java', Line #126

Error message: Exceptions have been converted to responses

Error context:

Deletion of resource 'pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2' on node 'k8s-eu-de-n01' failed due to an unknown exception.

Asynchronous stage backtrace:

(Node: 'k8s-eu-de-n02') Failed to adjust DRBD resource pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

Error has been observed at the following site(s):

|_ checkpoint ? Prepare resource delete

Stack trace:

Call backtrace:

Method Native Class:Line number

lambda$mergeExtractingApiRcExceptions$4 N com.linbit.linstor.core.apicallhandler.response.CtrlResponseUtils:126

Suppressed exception 1 of 2:

===============

Category: RuntimeException

Class name: ApiRcException

Class canonical name: com.linbit.linstor.core.apicallhandler.response.ApiRcException

Generated at: Method 'handleAnswer', Source file 'CommonMessageProcessor.java', Line #337

Error message: (Node: 'k8s-eu-de-n02') Failed to adjust DRBD resource pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

Error context:

Deletion of resource 'pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2' on node 'k8s-eu-de-n01' failed due to an unknown exception.

ApiRcException entries:

Nr: 1

Message: (Node: 'k8s-eu-de-n02') Failed to adjust DRBD resource pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2

Call backtrace:

Method Native Class:Line number

handleAnswer N com.linbit.linstor.proto.CommonMessageProcessor:337

handleDataMessage N com.linbit.linstor.proto.CommonMessageProcessor:284

doProcessInOrderMessage N com.linbit.linstor.proto.CommonMessageProcessor:235

lambda$doProcessMessage$3 N com.linbit.linstor.proto.CommonMessageProcessor:220

subscribe N reactor.core.publisher.FluxDefer:46

subscribe N reactor.core.publisher.Flux:8357

onNext N reactor.core.publisher.FluxFlatMap$FlatMapMain:418

drainAsync N reactor.core.publisher.FluxFlattenIterable$FlattenIterableSubscriber:414

drain N reactor.core.publisher.FluxFlattenIterable$FlattenIterableSubscriber:679

onNext N reactor.core.publisher.FluxFlattenIterable$FlattenIterableSubscriber:243

drainFused N reactor.core.publisher.UnicastProcessor:286

drain N reactor.core.publisher.UnicastProcessor:329

onNext N reactor.core.publisher.UnicastProcessor:408

next N reactor.core.publisher.FluxCreate$IgnoreSink:618

next N reactor.core.publisher.FluxCreate$SerializedSink:153

processInOrder N com.linbit.linstor.netcom.TcpConnectorPeer:383

doProcessMessage N com.linbit.linstor.proto.CommonMessageProcessor:218

lambda$processMessage$2 N com.linbit.linstor.proto.CommonMessageProcessor:164

onNext N reactor.core.publisher.FluxPeek$PeekSubscriber:177

runAsync N reactor.core.publisher.FluxPublishOn$PublishOnSubscriber:439

run N reactor.core.publisher.FluxPublishOn$PublishOnSubscriber:526

call N reactor.core.scheduler.WorkerTask:84

call N reactor.core.scheduler.WorkerTask:37

run N java.util.concurrent.FutureTask:264

run N java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask:304

runWorker N java.util.concurrent.ThreadPoolExecutor:1128

run N java.util.concurrent.ThreadPoolExecutor$Worker:628

run N java.lang.Thread:829

Suppressed exception 2 of 2:

===============

Category: RuntimeException

Class name: OnAssemblyException

Class canonical name: reactor.core.publisher.FluxOnAssembly.OnAssemblyException

Generated at: Method 'lambda$mergeExtractingApiRcExceptions$4', Source file 'CtrlResponseUtils.java', Line #126

Error message:

Error has been observed at the following site(s):

|_ checkpoint ��� Prepare resource delete

Stack trace:

Error context:

Deletion of resource 'pvc-2cbf647d-e21c-44f5-8577-96a9ec173fa2' on node 'k8s-eu-de-n01' failed due to an unknown exception.

Call backtrace:

Method Native Class:Line number

lambda$mergeExtractingApiRcExceptions$4 N com.linbit.linstor.core.apicallhandler.response.CtrlResponseUtils:126

subscribe N reactor.core.publisher.FluxDefer:46

subscribe N reactor.core.publisher.Flux:8357

onComplete N reactor.core.publisher.FluxConcatArray$ConcatArraySubscriber:207

onComplete N reactor.core.publisher.FluxMap$MapSubscriber:136

checkTerminated N reactor.core.publisher.FluxFlatMap$FlatMapMain:838

drainLoop N reactor.core.publisher.FluxFlatMap$FlatMapMain:600

innerComplete N reactor.core.publisher.FluxFlatMap$FlatMapMain:909

onComplete N reactor.core.publisher.FluxFlatMap$FlatMapInner:1013

onComplete N reactor.core.publisher.Operators$MultiSubscriptionSubscriber:2016

onComplete N reactor.core.publisher.FluxMap$MapSubscriber:136

onComplete N reactor.core.publisher.FluxConcatArray$ConcatArraySubscriber:191

onComplete N reactor.core.publisher.MonoIgnoreElements$IgnoreElementsSubscriber:81

onComplete N reactor.core.publisher.FluxPeek$PeekSubscriber:252

onComplete N reactor.core.publisher.Operators$MultiSubscriptionSubscriber:2016

onComplete N reactor.core.publisher.FluxMap$MapSubscriber:136

onComplete N reactor.core.publisher.FluxSwitchIfEmpty$SwitchIfEmptySubscriber:78

complete N reactor.core.publisher.FluxCreate$BaseSink:438

drain N reactor.core.publisher.FluxCreate$BufferAsyncSink:784

complete N reactor.core.publisher.FluxCreate$BufferAsyncSink:732

drainLoop N reactor.core.publisher.FluxCreate$SerializedSink:239

drain N reactor.core.publisher.FluxCreate$SerializedSink:205

complete N reactor.core.publisher.FluxCreate$SerializedSink:196

apiCallComplete N com.linbit.linstor.netcom.TcpConnectorPeer:465

handleComplete N com.linbit.linstor.proto.CommonMessageProcessor:363

handleDataMessage N com.linbit.linstor.proto.CommonMessageProcessor:287

doProcessInOrderMessage N com.linbit.linstor.proto.CommonMessageProcessor:235

lambda$doProcessMessage$3 N com.linbit.linstor.proto.CommonMessageProcessor:220

subscribe N reactor.core.publisher.FluxDefer:46

subscribe N reactor.core.publisher.Flux:8357

onNext N reactor.core.publisher.FluxFlatMap$FlatMapMain:418

drainAsync N reactor.core.publisher.FluxFlattenIterable$FlattenIterableSubscriber:414

drain N reactor.core.publisher.FluxFlattenIterable$FlattenIterableSubscriber:679

onNext N reactor.core.publisher.FluxFlattenIterable$FlattenIterableSubscriber:243

drainFused N reactor.core.publisher.UnicastProcessor:286

drain N reactor.core.publisher.UnicastProcessor:329

onNext N reactor.core.publisher.UnicastProcessor:408

next N reactor.core.publisher.FluxCreate$IgnoreSink:618

next N reactor.core.publisher.FluxCreate$SerializedSink:153

processInOrder N com.linbit.linstor.netcom.TcpConnectorPeer:383

doProcessMessage N com.linbit.linstor.proto.CommonMessageProcessor:218

lambda$processMessage$2 N com.linbit.linstor.proto.CommonMessageProcessor:164

onNext N reactor.core.publisher.FluxPeek$PeekSubscriber:177

runAsync N reactor.core.publisher.FluxPublishOn$PublishOnSubscriber:439

run N reactor.core.publisher.FluxPublishOn$PublishOnSubscriber:526

call N reactor.core.scheduler.WorkerTask:84

call N reactor.core.scheduler.WorkerTask:37

run N java.util.concurrent.FutureTask:264

run N java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask:304

runWorker N java.util.concurrent.ThreadPoolExecutor:1128

run N java.util.concurrent.ThreadPoolExecutor$Worker:628

run N java.lang.Thread:829

END OF ERROR REPORT.

Same again, linstor version: 1.20.3, drbd version: 9.2.1

resource does not exists in linstor anymore

We fixed a bug in v1.23.0 that could have caused this issue. The problem was that a volume-definition delete did not properly check if all satellites are online. If a satellite was offline during the/all vd d operations of a resource-definition, the resource-definition could afterwards be deleted also (since there are no volumes left), leaving the offline satellite with the orphan resource behind.

This mechanic (delete VDs before RD) is used by the CSI driver.

Feel free to reopen this issue if this bug happens again with Linstor v1.23.0 or higher.