Keeping people from copy-pasting ChatGPT Answers

I couldn't find an issue for this yet, so I thought I'd make one.

It's been made clear that ChatGPT answers aren't optimal because of legal issues, corporate language, quality, and more.

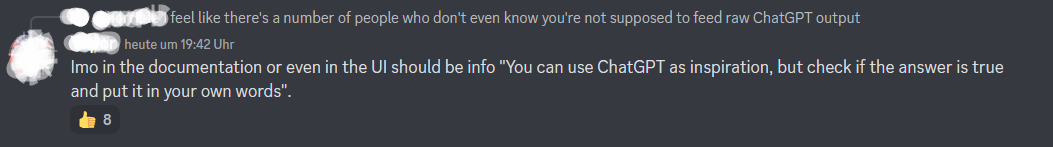

Ideas for reducing the number of these answers:

- Informing people with a UI prompt/alert while they write answers

- Adding this to more style guides and documentation

Other approaches were also suggested, such as heuristics that detect copy-pasting or keystroke patterns, and ChatGPT detectors.
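
A copy-paste/keystroke heuristic could be as simple as measuring how much of an answer arrived through paste events rather than typing. This is a minimal sketch, assuming the frontend records a hypothetical list of `EditEvent`s (whether each edit was a paste and how many characters it inserted); the 0.9 threshold is an arbitrary placeholder. The result is meant only to trigger a non-blocking hint, since some people legitimately draft their answers in another editor.

```python
from dataclasses import dataclass


@dataclass
class EditEvent:
    """Hypothetical record of one edit in the answer text box."""
    pasted: bool    # True if the characters arrived via a paste event
    num_chars: int  # number of characters inserted by this edit


def pasted_share(events: list[EditEvent]) -> float:
    """Fraction of all inserted characters that came from paste events."""
    total = sum(e.num_chars for e in events)
    if total == 0:
        return 0.0
    pasted = sum(e.num_chars for e in events if e.pasted)
    return pasted / total


def should_show_paste_hint(events: list[EditEvent], threshold: float = 0.9) -> bool:
    """Suggest (never enforce) a reminder when almost everything was pasted.

    The default threshold is an illustrative assumption, not a tuned value.
    """
    return pasted_share(events) >= threshold


if __name__ == "__main__":
    typed_then_pasted = [EditEvent(pasted=False, num_chars=40),
                         EditEvent(pasted=True, num_chars=960)]
    print(pasted_share(typed_then_pasted))            # 0.96
    print(should_show_paste_hint(typed_then_pasted))  # True
```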

One tell I've seen in a couple of programming questions: when ChatGPT code is copied, the code block always contains the text "Copy code".

@opfromthestart Even worse is when "regenerate answer" is still included 😄
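
Leftover interface labels like these are cheap to check for. A minimal sketch, assuming the only signals are literal UI strings: "Copy code" and "regenerate answer" come from this thread, while the "regenerate response" variant is an assumption, and the labels in ChatGPT's interface may change over time, so the pattern list is a hypothetical placeholder to maintain by hand. "Copy code" is only matched on a line of its own, so an answer that merely says "you can copy code from..." is not flagged.

```python
import re

# Hypothetical list of ChatGPT web-UI labels that can survive a copy-paste.
# "Copy code" and "regenerate answer" come from this thread; the
# "regenerate response" variant is an assumption, and the UI may change.
UI_ARTIFACT_PATTERNS = [
    # "Copy code" on a line of its own, as left behind by the code-block header.
    re.compile(r"^[ \t]*copy code[ \t]*$", re.IGNORECASE | re.MULTILINE),
    # The regenerate button label, anywhere in the text.
    re.compile(r"regenerate (?:answer|response)", re.IGNORECASE),
]


def find_ui_artifacts(answer: str) -> list[str]:
    """Return the leftover UI labels found in an answer (empty if none)."""
    hits = []
    for pattern in UI_ARTIFACT_PATTERNS:
        match = pattern.search(answer)
        if match:
            hits.append(match.group(0).strip())
    return hits


if __name__ == "__main__":
    pasted = "Here is the fix:\npython\nCopy code\nprint('hi')"
    print(find_ui_artifacts(pasted))                              # ['Copy code']
    print(find_ui_artifacts("You can copy code from the docs."))  # []
```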

But seriously, some thoughts on the overarching topic:

- I wouldn't ban copy/paste by any means. Some people use it heavily for legitimate reasons, because they simply write their content in another editor. You don't want to punish people who put a lot of time into their answers and have lost their work by accident (I've read about this multiple times on Discord).

- If you could connect something like https://openai-openai-detector.hf.space/ directly to the input field, you probably wouldn't have to comment much at all (and could send the detector score along with the answer).

- I think this is where you really have to be realistic about what you're aiming for: what minimum share of an answer needs to be organic (human-written)? I'm not sure it's realistic to require a score above 90% for "not AI-generated".

I believe a large share of people simply don't know they aren't supposed to do this, so showing an alert when it looks like someone is spamming ChatGPT answers might fix part of the problem. This could also be combined with other quality alerts, such as running a grammar checker in the background.
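
Deciding that someone is "spamming" could be done per user rather than per answer, so that a single false positive never triggers anything. A minimal sketch, assuming each submitted answer already carries a yes/no flag from some signal (a detector score over a threshold, leftover UI text, a high paste share); the class name, window size, and thresholds are hypothetical placeholders, and the output is only a decision to show a friendly alert.

```python
from collections import defaultdict, deque


class SpamAlertTracker:
    """Track recent per-user flags and decide when to show a friendly alert.

    Hypothetical helper: `window`, `min_flagged_share` and `min_answers`
    are illustrative assumptions, not tuned values.
    """

    def __init__(self, window: int = 10, min_flagged_share: float = 0.5,
                 min_answers: int = 3) -> None:
        self.min_flagged_share = min_flagged_share
        self.min_answers = min_answers
        # One fixed-size history of True/False flags per user; old entries
        # drop out automatically once `window` answers have been recorded.
        self.history: dict[str, deque] = defaultdict(lambda: deque(maxlen=window))

    def record(self, user_id: str, flagged: bool) -> bool:
        """Store a new answer's flag; return True if an alert should be shown."""
        recent = self.history[user_id]
        recent.append(flagged)
        if len(recent) < self.min_answers:
            return False  # too little evidence to say anything yet
        return sum(recent) / len(recent) >= self.min_flagged_share


if __name__ == "__main__":
    tracker = SpamAlertTracker(window=4, min_flagged_share=0.5, min_answers=3)
    for flag in [True, True, False]:
        alert = tracker.record("alice", flag)
    print(alert)  # True: 2 of the last 3 answers were flagged
```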

Detection is becoming a hot topic. Stanford published a more general approach, DetectGPT: https://arxiv.org/pdf/2301.11305.pdf

I wonder if we could train DetectGPT and simply offer it as a service alongside OpenAssistant. We might even be able to support detection for multiple models (not just ChatGPT/GPT-3, but other current and future models as well, as long as we can access their output cheaply).
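
For reference, DetectGPT is described as zero-shot: rather than training a separate classifier, it compares the log-likelihood a language model assigns to a passage with the log-likelihoods of slightly perturbed rewrites of that passage. Below is a minimal sketch of just the scoring step, assuming the log-likelihoods were already computed by a scoring model we have log-probability access to (which is also what detecting multiple models would require). The perturbation model, number of samples, and any decision threshold are left out, and the numbers in the example are made up.

```python
import statistics


def perturbation_discrepancy(original_logprob: float,
                             perturbed_logprobs: list[float],
                             normalize: bool = True) -> float:
    """DetectGPT-style score computed from precomputed log-likelihoods.

    original_logprob:   log p(x), the scoring model's log-likelihood of the answer.
    perturbed_logprobs: log p(x~) for several perturbed rewrites x~ of the answer
                        (DetectGPT generates these with a mask-filling model).
    A large positive score means the answer scores noticeably higher than its
    perturbations, which DetectGPT treats as a sign of machine-generated text.
    """
    if len(perturbed_logprobs) < 2:
        raise ValueError("need at least two perturbed samples")
    # d(x) = log p(x) - mean over perturbations of log p(x~)
    d = original_logprob - statistics.fmean(perturbed_logprobs)
    if not normalize:
        return d
    std = statistics.stdev(perturbed_logprobs)
    # If all perturbations score identically, fall back to the raw difference.
    return d / std if std > 0 else d


if __name__ == "__main__":
    # Made-up log-likelihoods, purely to show the arithmetic.
    print(perturbation_discrepancy(-120.0, [-130.0, -128.0, -132.0]))  # 5.0
```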

Not sure if this is as bad as ChatGPT answers, but I've seen Wikipedia content copy-pasted as answers: the first 1-2 lines of an article, word for word. That adds no value for Open Assistant, since it already "knows" that content. This kind of copy-paste could be easier to detect, since the wiki doesn't change and a simple web search turned up the exact wiki page.
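
Verbatim overlap is the easy case here. A minimal sketch, assuming the candidate reference text (for example, the Wikipedia page found by a web search) has already been fetched; the retrieval step and any cutoff score are left out, and the 5-word shingle size is an arbitrary choice. It measures containment: the share of the answer's word 5-grams that also appear verbatim in the reference.

```python
import re


def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lower-cased word n-grams ("shingles"), ignoring punctuation."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def containment(answer: str, reference: str, n: int = 5) -> float:
    """Share of the answer's word n-grams that also occur in the reference.

    1.0 means every run of n words in the answer appears verbatim in the
    reference text; 0.0 means no such run does (or the answer is too short).
    """
    answer_grams = word_ngrams(answer, n)
    if not answer_grams:
        return 0.0
    shared = answer_grams & word_ngrams(reference, n)
    return len(shared) / len(answer_grams)


if __name__ == "__main__":
    reference = ("The red fox is a small omnivorous mammal. "
                 "It lives in forests, grasslands and cities.")
    copied = "The red fox is a small omnivorous mammal."
    own_words = "Foxes are small, clever animals that also live in towns."
    print(containment(copied, reference))     # 1.0
    print(containment(own_words, reference))  # 0.0
```

Containment is used rather than symmetric Jaccard similarity because a pasted snippet is usually much shorter than the full article it came from.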

Shouldn't every correct answer be reinforced? Even if the content already appears in the dataset, it probably didn't appear in the context of this specific question, and it would be valuable for the model to learn that this excerpt is the expected answer.

Obviously, it should be formatted as a proper assistant answer; a pure copy-paste probably wouldn't have the tone expected of an assistant.

We can never fully "stop people", since this content can't be detected automatically with any reliability, but we now have moderator message search and editing, so we can at least deal with the clear cases.