About the number of epoches

Hello!

Thanks for open source your code.

I was training the summer2winter_yosemite dataset, but when I trained 50 epochs, I tested FID=115.7, when I trained 100 epochs, I found that the FID was greatly improved, and I checked the index and found that it was indeed trained later When fakeB becomes very inexplicable.

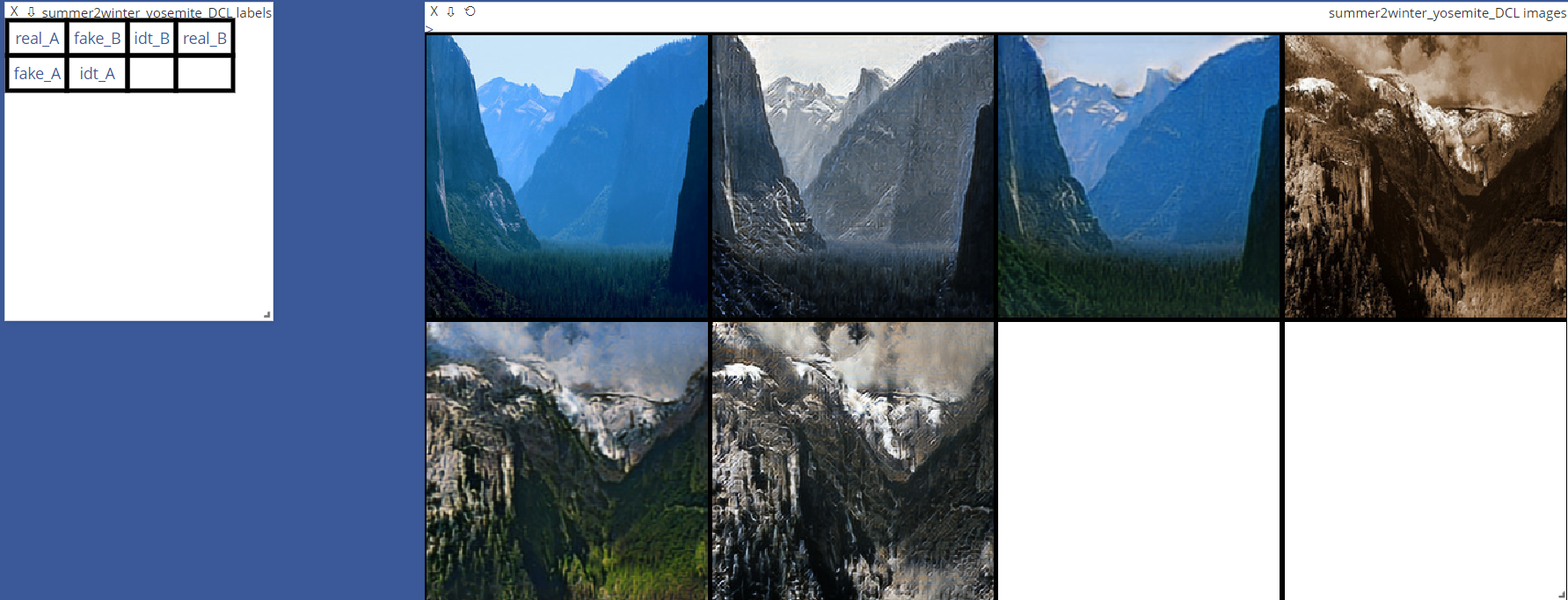

the following pictures are the effects of 50epoch and 100epoch, it is obvious that 100epoch becomes very strange

And there seems to be something wrong with the names displayed on visdom (please see the idt_B and real_B). Aren't they supposed to be very similar?

I would appreciate it if you could reply! Thank you!

Hello Liuzhen, Thanks for your attention in our work!

1: 50ep/100ep If possible, would you mind sharing more details (traiing setting) ? Especially, learning rate, batch_size, and total training epoch in your setting.

2: idt_A/idt_B Oh that's not an error. Idt_b is passing real_A to a Generator with translation direction A->B, so idt_b should be similar to real_A.

Hello Junlin, Thank you for your reply!

-

I have not changed your setup parameters(but maybe when I train the first 50 epoch, I change the batch_size=2. But when I train the second 50 epoch, I change it back to 1). And when I train the second time I use the --epoch_count 50 --continue_train. I don't know if this will work because I follow CycleGAN‘s operation. I recalculated the FID for 50epoch and 100epoch and find that the first equals to 115 and the second equals to 117. Does this mean that there is no point in training this dataset again?

-

Thanks for the explanation, I guess I should have gotten confused with the other paper, I'll go back and read the details of the paper.

Hello again,

1: Oh most setting in this repo follows CycleGAN, so it's ok to follow CycleGAN. Batch_size = 1 would be better

Regarding FID: Usually, the highest FID occurs during the middle of training. But higher FID does not always guarantee better results. It's better to train for ~1000000 iterations.

2: The naming actually follows CycleGAN, which might be confusing.

Thank you very much!