CompatHelper: bump compat for ImplicitDifferentiation in [weakdeps] to 0.8 for package ControlSystemsBase, (keep existing compat)

This pull request changes the compat entry for the ImplicitDifferentiation package from 0.7.2 to 0.7.2, 0.8 for package ControlSystemsBase.

This keeps the compat entries for earlier versions.

Note: I have not tested your package with this new compat entry. It is your responsibility to make sure that your package tests pass before you merge this pull request.

Your test error should be fixed by https://github.com/JuliaDecisionFocusedLearning/ImplicitDifferentiation.jl/pull/174. I had a bit of a fight with input types in the new release

Fix released on my end, wanna close this PR and reopen again?

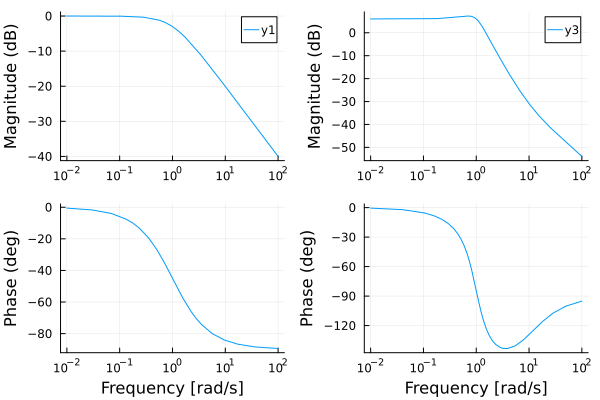

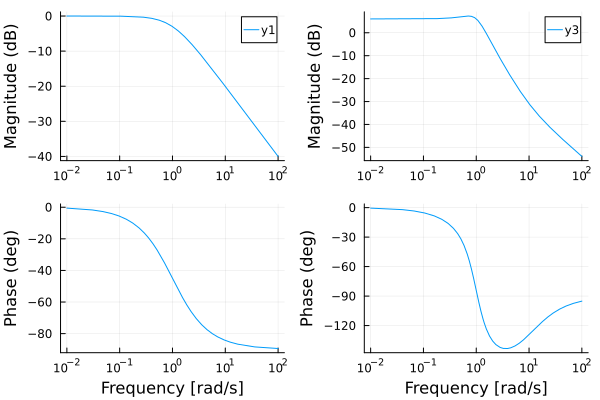

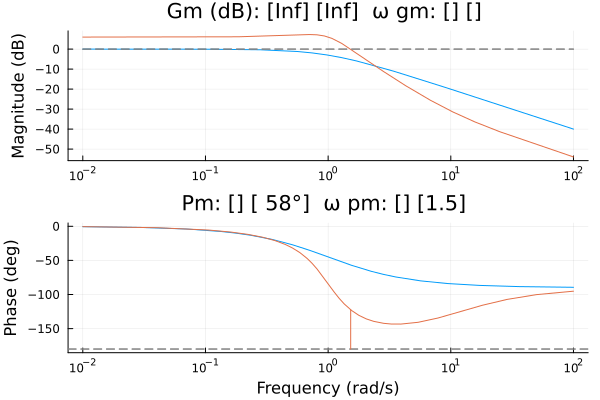

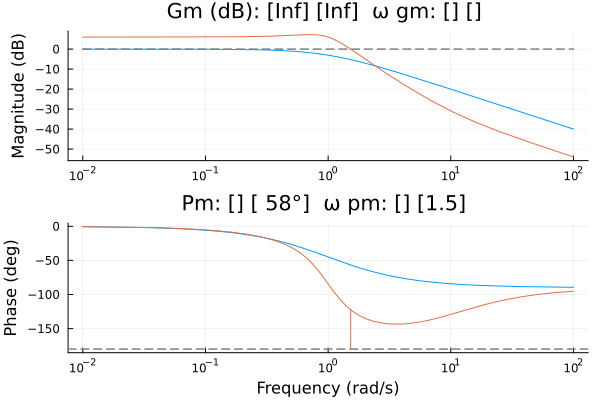

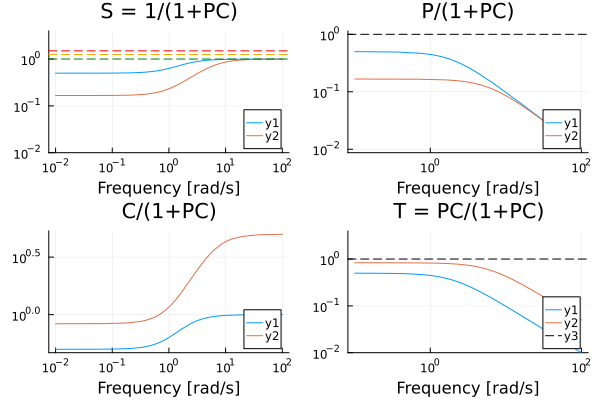

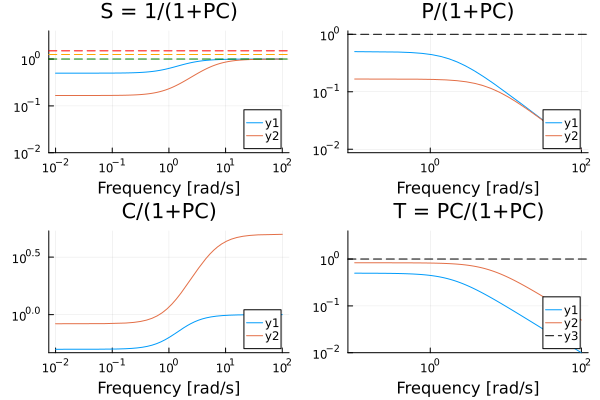

This is an automated message. Plots were compared to references. 3/11 images have changed, see differences below. After pulling this PR, please update the reference images by creating a PR to ControlExamplePlots.jl here.

| Difference | Reference Image | New Image |

|---|---|---|

| :heavy_check_mark: 0.0 |  |

|

| :heavy_check_mark: 0.0 |  |

|

| :heavy_check_mark: 0.0 |  |

|

Codecov Report

All modified and coverable lines are covered by tests :white_check_mark:

Project coverage is 24.86%. Comparing base (

cbfe3c9) to head (c766ddc).

Additional details and impacted files

@@ Coverage Diff @@

## master #998 +/- ##

===========================================

- Coverage 92.52% 24.86% -67.67%

===========================================

Files 41 41

Lines 5085 5043 -42

===========================================

- Hits 4705 1254 -3451

- Misses 380 3789 +3409

:umbrella: View full report in Codecov by Sentry.

:loudspeaker: Have feedback on the report? Share it here.

:rocket: New features to boost your workflow:

- :snowflake: Test Analytics: Detect flaky tests, report on failures, and find test suite problems.

Okay, so what's happening now is:

- In the update of ImplicitDifferentiation.jl, I bumped DifferentiationInterface.jl from v0.6 to v0.7, which enforces "strict" preparation: the type of inputs during preparation must now be the same as during execution.

- Iterative solvers from Krylov.jl like working with

Vectors rather than whatever custom array type you're using (in this caseUpperTriangular). - To make this strict check pass, I added a

copyto!step from theVectorto your reshaped custom array type before the pullback. - This is the step that is erroring because an

UpperTriangularis not writable in-place.

Could you maybe extract an MWE for me and put it in https://github.com/JuliaDecisionFocusedLearning/ImplicitDifferentiation.jl/issues/176?

Gentle bump here for @baggepinnen, an MWE would help me with design decisions on what should be supported or not in ImplicitDifferentiation :)

Would it help if I convert some triangular matrix to a regular matrix? I don't have much time to dedicate to this extension, it was developed as a technology demonstrator but I have no use cases for it at the moment. It was likely a mistake of mine to rely on such an actively developed package in ControlSystems.jl which is intended to be very robust and stable, but I can't quite remove the support without making a breaking release, which I don't want to do. I'd be willing to accept some loss of performance for reduced maintenance burden and maybe a well-placed Matrix(X) would be sufficient?

On the plus side, you're the only external user of the library, so I can easily take your needs into account! I will be using ImplicitDifferentiation for my research a lot more in the near future, hence the recent wave of changes. But it's hard for me to settle on the right design with basically no external feedback, hence the trial and error ^^ sorry about that.

My main design hesitation is whether I should allow arbitrary AbstractArrays to serve as the solution y and conditions c. Understanding your use case for UpperTriangular would be a useful data point for my reflection, even if you cannot extract an MWE for lack of time.

No hard feelings, the library is accurately labeled as experimental with the 0.x version number, so I take absolutely no issue with you experimenting with your library!

Understanding your use case for UpperTriangular would be a useful data point for my reflection

I assume that UpperTriangular comes into play due to plyapc/plyapd returning UpperTriangular matrices. The solution to the Lyapunov equation is positive definite, and these functions return a Cholesky factor of the solution rather than the solution itself for numerical reasons.

Hey @baggepinnen,

I've thought long and hard about this, and my conclusion is that structured matrices like UpperTriangular or SparseMatrixCSC won't play nice with ImplicitDifferentiation anyway. One of the reasons is that linear solvers prefer vectors, so we flatten everything, which tends to forget the underlying structure.

My recommendation would be to express the solver and conditions based on the vector of values above and including the diagonal, and then reconstruct the UpperTriangular after the implicit function has been called. Here's an MWE of what that could look like:

using ImplicitDifferentiation

using LinearAlgebra

using ForwardDiff

function vector_from_uppertriangular(U::UpperTriangular)

mask = triu(trues(size(U)))

return U[mask]

end

function uppertriangular_from_vector(v::AbstractVector)

n = isqrt(2 * length(v))

M = broadcast(1:n, transpose(1:n)) do i, j

if i <= j

return v[(j - 1) * j ÷ 2 + i]

else

return zero(eltype(v))

end

end

return UpperTriangular(M)

end

initial_solver(x) = UpperTriangular(sqrt.(x * x'))

initial_conditions(x, U) = UpperTriangular(U .^ 2 .- x * x')

function flat_solver(x)

U = initial_solver(x)

y = vector_from_uppertriangular(U)

return y, nothing

end

function flat_conditions(x, y, _z)

U = uppertriangular_from_vector(y)

C = initial_conditions(x, U)

return vector_from_uppertriangular(C)

end

implicit = ImplicitFunction(flat_solver, flat_conditions)

differentiable_solver(x) = uppertriangular_from_vector(implicit(x)[1])

Let's check that it works:

julia> for n in 1:10

U = UpperTriangular(rand(n, n))

@assert uppertriangular_from_vector(vector_from_uppertriangular(U)) == U

end

julia> x = float.(1:3)

3-element Vector{Float64}:

1.0

2.0

3.0

julia> differentiable_solver(x)

3×3 UpperTriangular{Float64, Matrix{Float64}}:

1.0 1.41421 1.73205

⋅ 2.0 2.44949

⋅ ⋅ 3.0

julia> ForwardDiff.jacobian(differentiable_solver, x)

9×3 Matrix{Float64}:

1.0 0.0 0.0

0.0 0.0 0.0

0.0 0.0 0.0

0.707107 0.353553 0.0

0.0 1.0 0.0

0.0 0.0 0.0

0.866025 0.0 0.288675

0.0 0.612372 0.408248

0.0 0.0 1.0

julia> ForwardDiff.jacobian(differentiable_solver, x) ≈ ForwardDiff.jacobian(initial_solver, x)

true

This should keep working across versions of ImplicitDifferentiation, the main thing that I'm changing at the moment are the keyword arguments

Alternately, I have a prototype branch which should be completely agnostic to types here: https://github.com/JuliaDecisionFocusedLearning/ImplicitDifferentiation.jl/pull/180

Can you maybe try it out?

EDIT: it is now on the main branch

Gentle bump on this one @baggepinnen, hopefully it should save you the trouble of capping the version of ImplicitDifferentiation

Edit: ImplicitDifferentiation v0.9 should work here

Doesn't look like it did? https://github.com/JuliaControl/ControlSystems.jl/actions/runs/16394242076/job/46324258559?pr=1008#step:6:1345

Just heads up, I'm mostly off for vacation during until mid August so I may not respond as usual until then

Okay, moving on to the other PR