Optimizations on creation of nodes given jobs?

It seems that the creation of appropriate nodes is not well optimized, which causes jobs to stall for various reasons. Below are some example cases. Although I mention spot instances below, the same was observed with on-demand instances.

- Instances are created forever but never run anything. For example, a CWL tool had a ramMin of 3.8 GB (corresponding to a requested resource of 3.7 GB). Toil got stuck in a loop trying to create only t2.medium EC2 instances (Mesos reports these as having 2.7 GB of total available memory), even though the node type list contains plenty of other node types the scaler could have picked from. As a result, it keeps creating and terminating t2.medium instances and never runs anything. The same was observed with other memory requirements. Below is an example log of it creating nodes that never run anything, repeating indefinitely:

[2022-07-11T13:49:42+0000] [scaler ] [I] [toil.lib.aws.ami] Selected Flatcar AMI: ami-07fe947197276d27e

[2022-07-11T13:49:43+0000] [scaler ] [I] [toil.lib.ec2] 1 spot requests(s) are open (1 of which are pending evaluation and 0 are pending fulfillment), 0 are active and 0 are in another state.

[2022-07-11T13:49:43+0000] [scaler ] [I] [toil.lib.ec2] Sleeping for 10s

[2022-07-11T13:49:53+0000] [scaler ] [I] [toil.lib.ec2] 0 spot requests(s) are open (0 of which are pending evaluation and 0 are pending fulfillment), 1 are active and 0 are in another state.

[2022-07-11T13:50:41+0000] [scaler ] [I] [toil.provisioners.clusterScaler] Removing 1 preemptable nodes to get to desired cluster size of 0.

[2022-07-11T13:50:41+0000] [scaler ] [I] [toil.provisioners.clusterScaler] Adding 1 preemptable nodes to get to desired cluster size of 1.

[2022-07-11T13:50:41+0000] [scaler ] [I] [toil] Using default user-defined custom docker init command of as TOIL_CUSTOM_DOCKER_INIT_COMMAND is not set.

[2022-07-11T13:50:41+0000] [scaler ] [I] [toil] Using default user-defined custom init command of as TOIL_CUSTOM_INIT_COMMAND is not set.

[2022-07-11T13:50:41+0000] [scaler ] [I] [toil] Using default docker registry of quay.io/ucsc_cgl as TOIL_DOCKER_REGISTRY is not set.

[2022-07-11T13:50:41+0000] [scaler ] [I] [toil] Using default docker name of toil as TOIL_DOCKER_NAME is not set.

[2022-07-11T13:50:41+0000] [scaler ] [I] [toil] Overriding docker appliance of quay.io/ucsc_cgl/toil:5.7.0a1-b7d543ff8ed4e5d32fae85ba11a7df425709d1d6-py3.8 with quay.io/ucsc_cgl/toil:5.7.0a1-b7d543ff8ed4e5d32fae85ba11a7df425709d1d6-py3.8 from TOIL_APPLIANCE_SELF.

[2022-07-11T13:50:43+0000] [scaler ] [I] [toil.lib.ec2] 1 spot requests(s) are open (1 of which are pending evaluation and 0 are pending fulfillment), 0 are active and 0 are in another state.

[2022-07-11T13:50:43+0000] [scaler ] [I] [toil.lib.ec2] Sleeping for 10s

[2022-07-11T13:50:53+0000] [scaler ] [I] [toil.lib.ec2] 0 spot requests(s) are open (0 of which are pending evaluation and 0 are pending fulfillment), 1 are active and 0 are in another state.

[2022-07-11T13:51:41+0000] [scaler ] [I] [toil.provisioners.clusterScaler] Removing 1 preemptable nodes to get to desired cluster size of 0.

[2022-07-11T13:51:42+0000] [scaler ] [I] [toil.provisioners.clusterScaler] Adding 1 preemptable nodes to get to desired cluster size of 1.

[2022-07-11T13:51:42+0000] [scaler ] [I] [toil] Using default user-defined custom docker init command of as TOIL_CUSTOM_DOCKER_INIT_COMMAND is not set.

[2022-07-11T13:51:42+0000] [scaler ] [I] [toil] Using default user-defined custom init command of as TOIL_CUSTOM_INIT_COMMAND is not set.

[2022-07-11T13:51:42+0000] [scaler ] [I] [toil] Using default docker registry of quay.io/ucsc_cgl as TOIL_DOCKER_REGISTRY is not set.

[2022-07-11T13:51:42+0000] [scaler ] [I] [toil] Using default docker name of toil as TOIL_DOCKER_NAME is not set.

[2022-07-11T13:51:42+0000] [scaler ] [I] [toil] Overriding docker appliance of quay.io/ucsc_cgl/toil:5.7.0a1-b7d543ff8ed4e5d32fae85ba11a7df425709d1d6-py3.8 with quay.io/ucsc_cgl/toil:5.7.0a1-b7d543ff8ed4e5d32fae85ba11a7df425709d1d6-py3.8 from TOIL_APPLIANCE_SELF.

[2022-07-11T13:51:43+0000] [scaler ] [I] [toil.lib.ec2] 1 spot requests(s) are open (1 of which are pending evaluation and 0 are pending fulfillment), 0 are active and 0 are in another state.

[2022-07-11T13:51:43+0000] [scaler ] [I] [toil.lib.ec2] Sleeping for 10s

[2022-07-11T13:51:53+0000] [scaler ] [I] [toil.lib.ec2] 0 spot requests(s) are open (0 of which are pending evaluation and 0 are pending fulfillment), 1 are active and 0 are in another state.

[2022-07-11T13:52:41+0000] [scaler ] [I] [toil.provisioners.clusterScaler] Removing 1 preemptable nodes to get to desired cluster size of 0.

[2022-07-11T13:52:42+0000] [scaler ] [I] [toil.provisioners.clusterScaler] Adding 1 preemptable nodes to get to desired cluster size of 1.

[2022-07-11T13:52:42+0000] [scaler ] [I] [toil] Using default user-defined custom docker init command of as TOIL_CUSTOM_DOCKER_INIT_COMMAND is not set.

[2022-07-11T13:52:42+0000] [scaler ] [I] [toil] Using default user-defined custom init command of as TOIL_CUSTOM_INIT_COMMAND is not set.

[2022-07-11T13:52:42+0000] [scaler ] [I] [toil] Using default docker registry of quay.io/ucsc_cgl as TOIL_DOCKER_REGISTRY is not set.

[2022-07-11T13:52:42+0000] [scaler ] [I] [toil] Using default docker name of toil as TOIL_DOCKER_NAME is not set.

[2022-07-11T13:52:42+0000] [scaler ] [I] [toil] Overriding docker appliance of quay.io/ucsc_cgl/toil:5.7.0a1-b7d543ff8ed4e5d32fae85ba11a7df425709d1d6-py3.8 with quay.io/ucsc_cgl/toil:5.7.0a1-b7d543ff8ed4e5d32fae85ba11a7df425709d1d6-py3.8 from TOIL_APPLIANCE_SELF.

....
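To make the expected behavior concrete, here is a minimal sketch of the selection I would have expected. It is not Toil's actual scaler code. The function name `pick_node_type` is hypothetical, and the usable-memory figures for `t2.large` and `t2.xlarge` are made-up placeholders; only the 2.7 GB figure for `t2.medium` comes from what Mesos reported above.

```python
from typing import Dict, Optional


def pick_node_type(requested_gb: float,
                   usable_gb_by_type: Dict[str, float]) -> Optional[str]:
    """Return the smallest node type whose usable memory fits the request.

    Hypothetical helper for illustration; returns None if nothing fits.
    """
    candidates = [
        (usable, name)
        for name, usable in usable_gb_by_type.items()
        if usable >= requested_gb
    ]
    if not candidates:
        return None
    # min() on (usable, name) tuples picks the smallest node that still fits.
    return min(candidates)[1]


# 2.7 is the figure Mesos reported for t2.medium in this issue;
# the other two values are placeholders, not measured numbers.
node_types = {"t2.medium": 2.7, "t2.large": 6.5, "t2.xlarge": 14.0}

print(pick_node_type(3.7, node_types))  # t2.large
```

With a 3.7 GB request, a check like this would skip t2.medium entirely instead of launching and terminating it repeatedly.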

- The job stalls: it is issued but never runs when ramMin and the available resources are too close together. For example, if the CWL tool has a ramMin of 3 GB (leading to a requested resource of 2.9 GB) and a t2.medium node has been created (2.7 GB total available memory according to Mesos), the job gets stuck. Neither Toil, the autoscaler, nor Mesos recognizes that this is not going to work, and no new instance with more total available memory is created, even though the node type list allows it. The same is observed when ramMin is set to 16 GB or 32 GB; I had to deliberately set it to, say, 15 GB or 30 GB, and only then do the offers seem to be accepted and the job run. Another example, involving a 64 GB request, is described in #4147.
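One possible explanation for the gap between ramMin and the requested resource is units: the CWL specification defines ramMin in mebibytes. The sketch below assumes ramMin values of 3000 and 3800 and assumes the reported figures are in GiB; neither assumption is confirmed by the logs. The helper names are hypothetical.

```python
MIB_PER_GIB = 1024

# Total available memory Mesos reported for t2.medium in this issue.
T2_MEDIUM_USABLE_GIB = 2.7


def ram_min_to_gib(ram_min_mib: float) -> float:
    """Convert a CWL ramMin value (mebibytes) to gibibytes."""
    return ram_min_mib / MIB_PER_GIB


def fits(ram_min_mib: float, usable_gib: float) -> bool:
    """True if the request fits within the node's usable memory."""
    return ram_min_to_gib(ram_min_mib) <= usable_gib


# Assumed ramMin values; the issue only states them as "3 GB" and "3.8 GB".
for ram_min in (3000, 3800):
    gib = ram_min_to_gib(ram_min)
    ok = fits(ram_min, T2_MEDIUM_USABLE_GIB)
    print(f"ramMin {ram_min} MiB = {gib:.1f} GiB, fits t2.medium: {ok}")
```

Under these assumptions both requests (2.9 and 3.7 GiB) exceed the 2.7 GB that t2.medium offers, so neither job could ever be scheduled on that node type.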

-

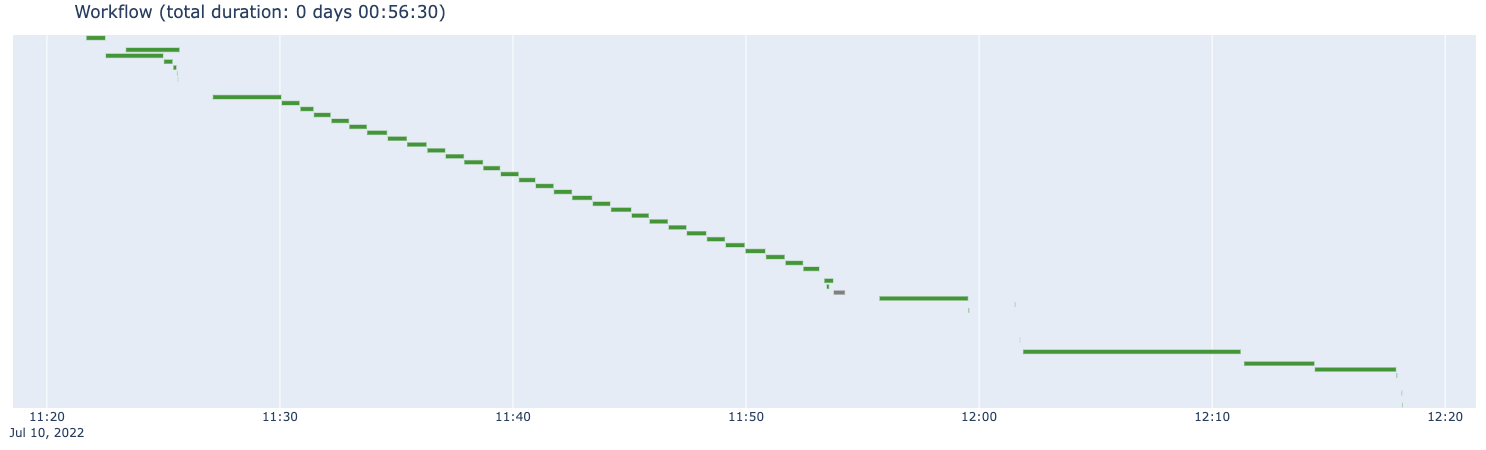

With cwl tasks that uses scatter, it seems toil doesn't scale up to multiple nodes to allow parallelization even though I had for example

--maxNodes 10, only one node was created. Below is an example plot I created detailing the the tasks run (the mini steps in the middle are where a job was supposed to be scattered and can be run in parallel, but instead only one node was created and it decided to run it in sequence instead).
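As a rough illustration of the scale-up I expected for scattered steps, here is a minimal sketch. It is not how Toil's cluster scaler actually computes its target size; `expected_nodes` and `jobs_per_node` are hypothetical names, and the job counts are example values.

```python
import math


def expected_nodes(parallel_jobs: int, jobs_per_node: int, max_nodes: int) -> int:
    """Nodes needed to run all ready jobs at once, capped at max_nodes."""
    if parallel_jobs <= 0:
        return 0
    return min(max_nodes, math.ceil(parallel_jobs / jobs_per_node))


# Example: 8 scattered jobs, one job fits per node, --maxNodes 10.
print(expected_nodes(parallel_jobs=8, jobs_per_node=1, max_nodes=10))  # 8
```

With --maxNodes 10 and several scattered jobs ready at the same time, I would have expected more than one node, rather than the single node that ran the steps in sequence.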

┆Issue is synchronized with this Jira Story ┆friendlyId: TOIL-1196