When to Stop Unconditional Training on the FFHQ Dataset

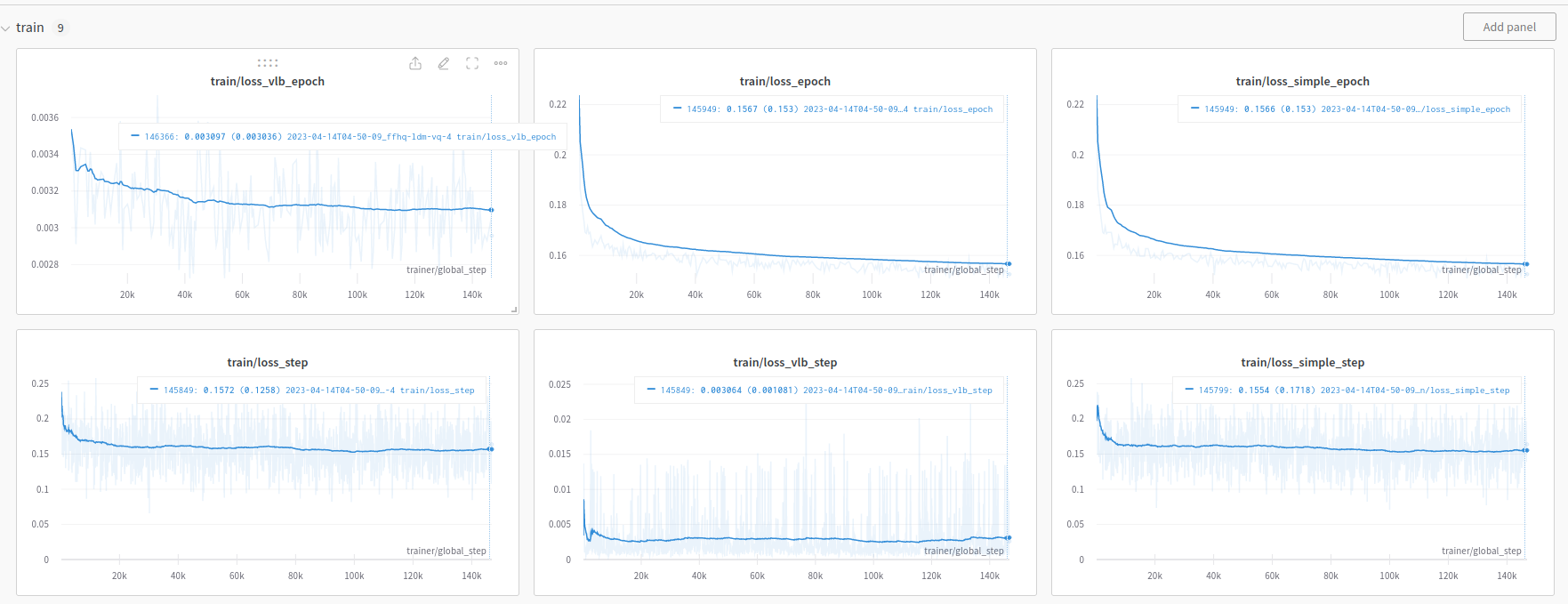

I am training from scratch on the full FFHQ dataset (70k images) with a base learning rate of 1.0e-06 and the scale_lr=True parameter.

- However, the training loss oscillates a lot. With smoothing set to 0.99, I can see the loss decreasing. Is that expected?

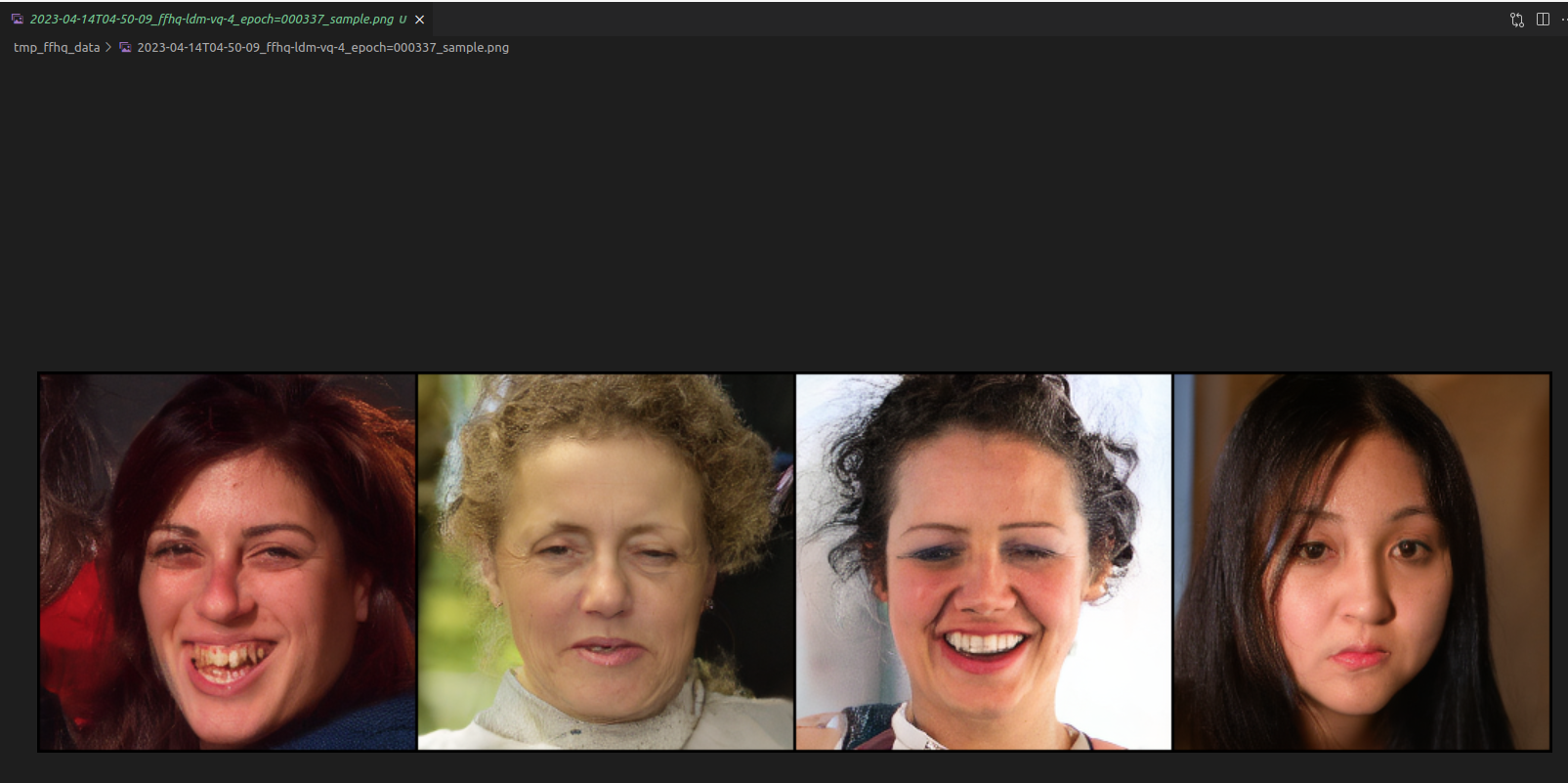

- When can I stop training? Even after 300 epochs, there are still some artifacts in the sampled images.
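On the first question: a 0.99 smoothing weight is an exponential moving average over the logged values, so the smoothed curve can show a genuine downward trend even though per-step losses jump around (each diffusion training step draws a random timestep and random noise, so the raw loss is naturally noisy). A minimal sketch of such smoothing, assuming a debiased EMA similar in spirit to TensorBoard's smoothing slider (the viewer's exact implementation may differ):

```python
def smooth(values, weight=0.99):
    """Debiased exponential moving average of a sequence of loss values."""
    smoothed = []
    last = 0.0
    for i, v in enumerate(values):
        # Blend the running average with the newest raw value.
        last = weight * last + (1.0 - weight) * v
        # Undo the bias toward 0 that comes from starting the average at 0.
        smoothed.append(last / (1.0 - weight ** (i + 1)))
    return smoothed
```

With weight=0.99 each smoothed point effectively averages roughly the last hundred steps, so it tracks the trend but says little about sample quality on its own.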

Here is the training progress on the training dataset.

Here are the sampled images. The teeth of the first person look abnormal.

Hi, have you calculated the FID score?

Oops, good catch! I will do that. Thanks.

With base lr=1e-6, batch size=42, scale_lr=True, and 200 DDIM steps, I got an FID of 18.36, while the official result is 4.98.

There is still a gap, even though I have already trained for 420 epochs on the 70k FFHQ images.
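With scale_lr=True the base learning rate is not used directly. As far as I know, main.py in the latent-diffusion repository multiplies it by accumulate_grad_batches, the number of GPUs, and the per-GPU batch size; the sketch below assumes that rule, so check it against your copy of main.py. Assuming a single GPU and no gradient accumulation, the run above would train at 42 × 1e-6 = 4.2e-5.

```python
def effective_lr(base_lr, batch_size, n_gpus=1, accumulate_grad_batches=1, scale_lr=True):
    """Learning rate actually used for training under the assumed scale_lr rule."""
    if not scale_lr:
        # Without scaling, the configured base learning rate is used as-is.
        return base_lr
    return accumulate_grad_batches * n_gpus * batch_size * base_lr

print(effective_lr(1e-6, 42))  # about 4.2e-05 on one GPU
```

Logging this value at startup makes it easier to compare runs that use different GPU counts or batch sizes.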

I trained for 400 epochs on LSUN_Churches with the provided config file, but the FID was about 14, while the reported FID is 4.02. I cannot reproduce the result either.

Thanks for sharing. I suspect there might be some other tricks. May I ask how long (in time and epochs) it took to converge on LSUN?

On LSUN_Churches, the loss converges within a few epochs (1-3), but the images are still bad. I had to train for about 140 epochs to get the FID down to about 14, and it did not decrease any further. Each epoch takes about thirty minutes on 2 RTX3090s.
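For a rough compute budget, the numbers in the reply above (about 30 minutes per epoch on 2 RTX3090s, about 140 epochs to reach FID ≈ 14) convert directly into wall-clock time and GPU-hours. A trivial sketch, assuming the per-epoch time stays constant over the run:

```python
def training_budget(epochs, minutes_per_epoch, n_gpus):
    """Return (wall-clock hours, GPU-hours), assuming a constant time per epoch."""
    wall_hours = epochs * minutes_per_epoch / 60.0
    return wall_hours, wall_hours * n_gpus

print(training_budget(140, 30, 2))  # (70.0, 140.0)
print(training_budget(400, 30, 2))  # (200.0, 400.0)
```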

Thanks. That helps a lot.

Instead of using the pretrained VQ-4 autoencoder from the latent diffusion repo, I used the pretrained KL-8 autoencoder from Stable Diffusion and managed to reproduce the result by training from scratch (see the DiT repo for this setup).

Thank you so much! I'll try it!

Have you changed the parameters of the Unet or the learning rate?

Yes, I used a fixed learning rate of 5e-5, and the UNet architecture is pretty much the same. The only difference is that KL-8 downsamples from 256 to 32 (instead of VQ-4, which downsamples from 256 to 64), so you can use a smaller UNet. Note that I used the pretrained autoencoder from Stable Diffusion, not the one from this repo.
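The latent sizes follow directly from the downsampling factors. This sketch assumes 4 latent channels for KL-8 and 3 for VQ-4, matching the 4x32x32 and 3x64x64 shapes mentioned elsewhere in this thread; at 256x256 the KL-8 latent has a third as many elements, which is consistent with being able to use a smaller UNet.

```python
import math

def latent_shape(image_size, downsample_factor, channels):
    """Shape (C, H, W) of the latent from an autoencoder with the given downsampling."""
    if image_size % downsample_factor:
        raise ValueError("image_size must be divisible by downsample_factor")
    side = image_size // downsample_factor
    return (channels, side, side)

kl8 = latent_shape(256, 8, 4)  # (4, 32, 32)
vq4 = latent_shape(256, 4, 3)  # (3, 64, 64)
print(math.prod(kl8), math.prod(vq4))  # 4096 12288
```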

Thanks! That helps a lot!! 🥹🥹

Hi @quandao10, which dataset did you reproduce? I found that the VAE used in Stable Diffusion is the same as the VAE used for the lsun_church dataset.

Hi @ader47, what batch size did you use, and how much memory did it take? I ran into an out-of-memory error that forced me to use a lower batch size.

I used an RTX3090 with 24 GB. The batch size was about 50 for a 4x32x32 latent size and 24 for 3x64x64. I had to use 2 cards to match the batch size of the original paper.

Hi, I am trying to reproduce the result on LSUN Churches, but I have run into a problem with calculating the FID. Could you give some advice on the authoritative way to calculate it? I am not sure whether the FID results I get from open-source tools are correct.

The FID in the paper was calculated with torch-fidelity.
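For context on what torch-fidelity reports: FID fits a Gaussian to Inception features of each image set and computes the Fréchet distance ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2)). The numpy-only sketch below covers just that final formula, assuming the feature means and covariances are already computed; it is not torch-fidelity's code, and feature extraction (which must match the reference Inception network for numbers to be comparable with the paper) is omitted. In torch-fidelity itself the metric is typically requested through torch_fidelity.calculate_metrics with fid=True.

```python
import numpy as np

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between the Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    mu1, mu2 = np.asarray(mu1, dtype=float), np.asarray(mu2, dtype=float)
    sigma1, sigma2 = np.asarray(sigma1, dtype=float), np.asarray(sigma2, dtype=float)
    diff = mu1 - mu2
    # Tr((S1 S2)^(1/2)) is the sum of square roots of the eigenvalues of S1 S2,
    # which are real and non-negative for PSD covariances (up to round-off).
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_covmean = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)
```

Identical statistics give 0, and a pure mean shift with identical covariances gives the squared distance between the means.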

Thanks a lot for the amazingly quick reply :) It's very helpful to me.

By the way, what final FID did you reach on LSUN Churches? Were you able to reproduce the result in the paper?

No, my lowest FID was 11-something 😥

Ouch, that stings.

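Could I ask you all a question: after training the autoencoder, how do I train the diffusion model in the latent space? For example, after the original image x passes through the autoencoder's encoder, i.e. posterior = autoencoder.encoder(x), z = posterior.sample(), is this z the x0 corresponding to image x in the latent space? Noise is added to this x0 to get xt, the noise is then predicted, and the loss is computed. After training, sampling starts from xT = torch.randn(latent-space shape) and steps back one step at a time to x0, and finally autoencoder.decoder(x0) produces the sampled image. Is this workflow correct? I would be grateful for any replies, thanks!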
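The workflow in the question matches the standard epsilon-prediction setup, with the encoder's latent playing the role of x0. One detail: in the latent-diffusion code the encoded latent is also multiplied by a scale_factor before diffusion and divided by it before decoding. The numpy-only sketch below shows the noising and loss step under stated assumptions: it uses the DDPM linear beta schedule (1e-4 to 0.02 over 1000 steps) rather than the LDM config values, random arrays stand in for encoded latents, and zero_model is a hypothetical placeholder for the UNet.

```python
import numpy as np

# Assumed DDPM linear schedule; the LDM configs define their own beta range.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas_cumprod = np.cumprod(1.0 - betas)

def q_sample(x0, t, noise):
    """Forward process per sample: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    a = alphas_cumprod[t].reshape(-1, *([1] * (x0.ndim - 1)))
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise

def diffusion_loss(z, eps_model, rng):
    """Epsilon-prediction MSE on a batch of latents z (the x0 from the encoder)."""
    t = rng.integers(0, T, size=z.shape[0])  # one random timestep per sample
    noise = rng.standard_normal(z.shape)
    x_t = q_sample(z, t, noise)
    pred = eps_model(x_t, t)                 # the UNet would go here
    return float(np.mean((pred - noise) ** 2))

# Stand-in latents; in practice something like z = scale_factor * posterior.sample().
rng = np.random.default_rng(0)
z = rng.standard_normal((16, 4, 32, 32))
zero_model = lambda x_t, t: np.zeros_like(x_t)  # hypothetical placeholder predictor
print(diffusion_loss(z, zero_model, rng))       # close to 1.0 for this trivial model
```

Sampling then runs the learned reverse process (DDPM or DDIM steps) from Gaussian noise of the latent shape, undoes the scale_factor, and decodes, as the question describes.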

I came across this passage in the paper: "We follow common practice and estimate the statistics for calculating the FID-, Precision- and Recall-scores [29,50] shown in Tab. 1 and 10 based on 50k samples from our models and the entire training set of each of the shown datasets."

Does this mean they calculated the FID against the training set instead of the validation set?

Hi @quandao10, are you sure that using KL-8 instead of VQGAN-4 works? I tried it even though it did not make sense to me, and as expected it didn't work: I only got an FID of 13.

Has anyone managed to reproduce the results in the paper?

May I ask how long it takes to calculate the FID? Why does evaluation take so long for me? It takes about 40 seconds to generate one batch of 8 images (batch_size=8). Looking forward to your reply.
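A rough estimate explains the wait: the paper's FID uses 50k generated samples, so at 40 seconds per batch of 8, generation alone needs 6,250 batches × 40 s ≈ 69 hours. A small helper, assuming sampling dominates and its cost scales linearly with the number of batches:

```python
import math

def sampling_hours(n_samples, batch_size, seconds_per_batch):
    """Wall-clock hours to generate n_samples images, assuming a constant time per batch."""
    n_batches = math.ceil(n_samples / batch_size)
    return n_batches * seconds_per_batch / 3600.0

print(round(sampling_hours(50_000, 8, 40), 1))  # 69.4
```

Under the same assumption, a larger batch (if memory allows) or fewer DDIM steps per sample shortens this roughly in proportion.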