Problems using Codis-HA

I ran into the following problem while using codis-ha and am not sure whether it is expected behavior.

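Scenario: with codis-ha enabled, when the master node of a group goes down, codis-ha elects a slave in that group as the new master. Immediately afterwards, however, every other node in the group except the newly promoted master is forcibly removed, and on the server I confirmed that their codis-server processes have exited. When I manually restart a removed node and add it back to the group, it still cannot be added: it is removed again automatically and its process exits.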

In addition, I would like to understand a few things:

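- How is codis-ha implemented? Is it based on redis-sentinel?

- When using codis-ha, do I also need to run Redis in sentinel mode?

- How does codis-ha perform master/slave failover?

- What is the subsequent data synchronization process when the original master restarts and is added back to the group?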

Any answers would be appreciated, thanks.

Relevant information:

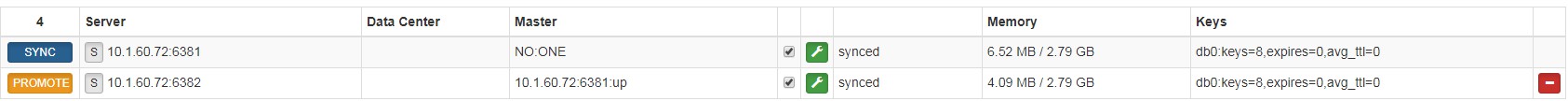

Group instances

6381 is the master and 6382 is the slave.

codis-server log for 6381:

    8541:signal-handler (1521448920) Received SIGTERM scheduling shutdown...
    8541:M 19 Mar 16:42:00.622 # User requested shutdown...
    8541:M 19 Mar 16:42:00.622 * Removing the pid file.
    8541:M 19 Mar 16:42:00.623 # Redis is now ready to exit, bye bye...

codis-server log for 6382:

    1002:S 19 Mar 16:42:00.625 # Connection with master lost.
    1002:S 19 Mar 16:42:00.626 * Caching the disconnected master state.
    1002:S 19 Mar 16:42:00.630 * Connecting to MASTER 10.1.60.72:6381
    1002:S 19 Mar 16:42:00.631 * MASTER <-> SLAVE sync started
    1002:S 19 Mar 16:42:00.631 # Error condition on socket for SYNC: Connection refused
    1002:S 19 Mar 16:42:01.632 * Connecting to MASTER 10.1.60.72:6381
    1002:S 19 Mar 16:42:01.632 * MASTER <-> SLAVE sync started
    1002:S 19 Mar 16:42:01.632 # Error condition on socket for SYNC: Connection refused
    1002:M 19 Mar 16:42:01.729 * Discarding previously cached master state.
    1002:M 19 Mar 16:42:01.730 * MASTER MODE enabled (user request from 'id=307 addr=10.1.60.71:49794 fd=5 name= age=0 idle=0 flags=x db=0 sub=0 psub=0 multi=4 qbuf=0 qbuf-free=32768 obl=50 oll=0 omem=0 events=r cmd=exec')
    1002:M 19 Mar 16:42:01.730 # CONFIG REWRITE executed with success.

Codis-HA log (log level DEBUG):

    2018/03/19 16:42:01 api.go:139: [DEBUG] call rpc [GET] http://10.1.60.71:18080/api/topom/stats/95b62887719520f17e312eaa76d28f2b in 12.590253ms
    2018/03/19 16:42:01 main.go:206: [INFO] [ ] proxy-1 [T] 73c66af68de27c782306597171dd8a35 [A] 10.1.60.71:11080 [P] 10.1.60.71:19000
    2018/03/19 16:42:01 main.go:206: [INFO] [ ] proxy-2 [T] 0fd8a1488d9b366dac8e09ac5235e4c2 [A] 10.1.60.72:11080 [P] 10.1.60.72:19000
    2018/03/19 16:42:01 main.go:206: [INFO] [ ] proxy-3 [T] e3619878e11ed86d6c0d378f9b0a918e [A] 10.1.60.73:11080 [P] 10.1.60.73:19000
    2018/03/19 16:42:01 main.go:240: [INFO] [ ] group-1 [0] 10.1.60.71:6379
    2018/03/19 16:42:01 main.go:240: [INFO] [ ] group-1 [1] 10.1.60.71:6380
    2018/03/19 16:42:01 main.go:240: [INFO] [ ] group-2 [0] 10.1.60.72:6379
    2018/03/19 16:42:01 main.go:240: [INFO] [ ] group-2 [1] 10.1.60.72:6380
    2018/03/19 16:42:01 main.go:240: [INFO] [ ] group-3 [0] 10.1.60.73:6379
    2018/03/19 16:42:01 main.go:240: [INFO] [ ] group-3 [1] 10.1.60.73:6380
    2018/03/19 16:42:01 main.go:236: [WARN] [E] group-4 [0] 10.1.60.72:6381
    2018/03/19 16:42:01 main.go:242: [WARN] [X] group-4 [1] 10.1.60.72:6382
    2018/03/19 16:42:01 main.go:274: [WARN] codis-server (master) 10.1.60.72:6381 state error
    2018/03/19 16:42:01 main.go:360: [WARN] try to promote group-[4] with slave 10.1.60.72:6382
    2018/03/19 16:42:01 api.go:139: [DEBUG] call rpc [PUT] http://10.1.60.71:18080/api/topom/group/promote/95b62887719520f17e312eaa76d28f2b/4/10.1.60.72:6382 in 31.323133ms
    2018/03/19 16:42:01 main.go:364: [WARN] done.

    2018/03/19 16:42:04 api.go:139: [DEBUG] call rpc [GET] http://10.1.60.71:18080/api/topom/stats/95b62887719520f17e312eaa76d28f2b in 13.616047ms
    2018/03/19 16:42:04 main.go:206: [INFO] [ ] proxy-1 [T] 73c66af68de27c782306597171dd8a35 [A] 10.1.60.71:11080 [P] 10.1.60.71:19000
    2018/03/19 16:42:04 main.go:206: [INFO] [ ] proxy-2 [T] 0fd8a1488d9b366dac8e09ac5235e4c2 [A] 10.1.60.72:11080 [P] 10.1.60.72:19000
    2018/03/19 16:42:04 main.go:206: [INFO] [ ] proxy-3 [T] e3619878e11ed86d6c0d378f9b0a918e [A] 10.1.60.73:11080 [P] 10.1.60.73:19000
    2018/03/19 16:42:04 main.go:240: [INFO] [ ] group-1 [0] 10.1.60.71:6379
    2018/03/19 16:42:04 main.go:240: [INFO] [ ] group-1 [1] 10.1.60.71:6380
    2018/03/19 16:42:04 main.go:240: [INFO] [ ] group-2 [0] 10.1.60.72:6379
    2018/03/19 16:42:04 main.go:240: [INFO] [ ] group-2 [1] 10.1.60.72:6380
    2018/03/19 16:42:04 main.go:240: [INFO] [ ] group-3 [0] 10.1.60.73:6379
    2018/03/19 16:42:04 main.go:240: [INFO] [ ] group-3 [1] 10.1.60.73:6380
    2018/03/19 16:42:04 main.go:240: [INFO] [ ] group-4 [0] 10.1.60.72:6382
    2018/03/19 16:42:04 main.go:236: [WARN] [E] group-4 [1] 10.1.60.72:6381
    2018/03/19 16:42:04 main.go:286: [WARN] try to group-del-server to dashboard 10.1.60.72:6381
    2018/03/19 16:42:04 api.go:139: [DEBUG] call rpc [PUT] http://10.1.60.71:18080/api/topom/group/del/95b62887719520f17e312eaa76d28f2b/4/10.1.60.72:6381 in 2.467563ms
    2018/03/19 16:42:04 main.go:291: [DEBUG] call rpc group-del-server OK
    2018/03/19 16:42:04 main.go:294: [WARN] try to shutdown codis-server(slave) 10.1.60.72:6381
    2018/03/19 16:42:04 main.go:297: [WARN] connect to codis-server(slave) 10.1.60.72:6381 failed [error]: dial tcp 10.1.60.72:6381: getsockopt: connection refused
        2   /usr/local/codis/src/github.com/CodisLabs/codis/pkg/utils/redis/client.go:42
                github.com/CodisLabs/codis/pkg/utils/redis.NewClient
        1   /usr/local/codis/src/github.com/CodisLabs/codis/cmd/ha/main.go:295
                main.(*HealthyChecker).Maintains
        0   /usr/local/codis/src/github.com/CodisLabs/codis/cmd/ha/main.go:95
                main.main
        ... ...

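codis-ha is a single-point HA tool that was written back when sentinel was not available yet, and I recommend not using it: it is itself a single point of failure, and it also depends on the dashboard, which is a single point as well.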

I recommend using sentinel instead.


@spinlock Thanks. Could you also help answer the questions I asked?

@YrlixJoe The answers below are based on my own testing of codis-ha in codis 3.2.2. They may not be entirely accurate, so please treat them as a reference only.

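Q1: When the master of a Redis group fails, ha removes the failed master and promotes the former slave. After the failed master is repaired, it cannot be added back to Codis?

A1: In my tests, a failed master that has been repaired manually can be added back to Codis; I did not see any case in which it could not rejoin.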

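Q2: How is codis-ha implemented? Is it based on redis-sentinel?

A2: ha does not rely on sentinel to monitor, manage, and fail over the Redis master/slave relationship, but it also detects failures through heartbeats. The difference between ha in 3.2 and in 3.0/3.1 is that the 3.2 version removes the failed master, which then has to be started manually and added back to Codis.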
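A2 describes failure detection as heartbeat-based. As a rough illustration only, the sketch below counts consecutive missed heartbeats per server and declares a server failed once a threshold is reached. The type `heartbeatTracker`, its `observe` method, and the `maxMisses` threshold are hypothetical and not taken from the codis-ha source. Judging from the HA log above, codis-ha promoted the slave roughly one second after 6381 shut down, so it does not appear to wait for several misses the way this sketch does.

```go
package main

import "fmt"

// heartbeatTracker is a hypothetical failure detector: a server counts as
// failed only after maxMisses consecutive missed heartbeats.
// It is an illustration, not code from codis-ha.
type heartbeatTracker struct {
	maxMisses int
	misses    map[string]int // consecutive misses per server address
}

func newHeartbeatTracker(maxMisses int) *heartbeatTracker {
	return &heartbeatTracker{maxMisses: maxMisses, misses: make(map[string]int)}
}

// observe records one heartbeat result for addr and reports whether addr
// should now be treated as failed. A successful heartbeat resets the count.
func (h *heartbeatTracker) observe(addr string, ok bool) bool {
	if ok {
		h.misses[addr] = 0
		return false
	}
	h.misses[addr]++
	return h.misses[addr] >= h.maxMisses
}

func main() {
	h := newHeartbeatTracker(3) // hypothetical threshold
	// One good heartbeat, then three misses for the master in group-4.
	for i, ok := range []bool{true, false, false, false} {
		fmt.Printf("round %d: ok=%v failed=%v\n", i, ok, h.observe("10.1.60.72:6381", ok))
	}
}
```

A higher threshold makes failover slower but less sensitive to a single slow reply; a threshold of 1 reacts on the first failed check.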

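Q3: When using codis-ha, do I also need to run Redis in sentinel mode?

A3: codis-ha and sentinel are separate components that run independently. You can choose either one of them to manage the Redis master/slave relationship.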

Q4: How does codis-ha perform master/slave failover?

A4: It simply monitors the master node; when the master becomes unhealthy, it promotes a slave to be the new master.
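The failover behavior in A4, together with what the HA log above shows, can be modeled as a small decision function. This is a simplified sketch, not codis-ha's actual code: `serverState`, `action`, and `planGroup` are hypothetical names, `Healthy` collapses whatever health information codis-ha reads from the dashboard into one flag, and the first server in a group is treated as the master, matching the `[0]`/`[1]` indices in the log. In the log, the first round (16:42:01) promotes 6382 because master 6381 is down, and the next round (16:42:04) removes 6381, now listed as a slave, from the group and tries to shut it down.

```go
package main

import "fmt"

// serverState is a hypothetical, simplified view of one codis-server.
type serverState struct {
	Addr    string
	Healthy bool
}

// action is one step the sketch would perform.
type action struct {
	Kind string // "promote", "group-del" or "shutdown"
	Addr string
}

// planGroup decides what to do for one group. servers[0] is the master.
func planGroup(servers []serverState) []action {
	if len(servers) == 0 {
		return nil
	}
	if !servers[0].Healthy {
		// Master is down: promote the first healthy slave, if any.
		for _, s := range servers[1:] {
			if s.Healthy {
				return []action{{Kind: "promote", Addr: s.Addr}}
			}
		}
		return nil
	}
	// Master is fine: drop every unhealthy slave from the group and try to
	// shut it down, as in the 16:42:04 log entries.
	var acts []action
	for _, s := range servers[1:] {
		if !s.Healthy {
			acts = append(acts,
				action{Kind: "group-del", Addr: s.Addr},
				action{Kind: "shutdown", Addr: s.Addr})
		}
	}
	return acts
}

func main() {
	// 16:42:01: master 6381 is down, slave 6382 is up.
	fmt.Println(planGroup([]serverState{
		{Addr: "10.1.60.72:6381", Healthy: false},
		{Addr: "10.1.60.72:6382", Healthy: true},
	}))
	// 16:42:04: 6382 is now the master and 6381 is listed as a down slave.
	fmt.Println(planGroup([]serverState{
		{Addr: "10.1.60.72:6382", Healthy: true},
		{Addr: "10.1.60.72:6381", Healthy: false},
	}))
}
```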

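Q5: What is the subsequent data synchronization process when the original master restarts and is added back to the group?

A5: It depends on whether you want the recovered master to serve as a slave or as the master. If it is to be a slave, it automatically syncs data from the new master; this is Redis's own replication, not a Codis feature. If you want the formerly failed master to become the master again after it rejoins the group, you need to perform one more manual failover to promote it back to master.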
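At the Redis level, rejoining as a slave and syncing from the new master comes down to the `SLAVEOF host port` command, i.e. Redis's own replication as A5 says. The sketch below shows how that command is encoded in the Redis protocol (RESP) and sent over a plain TCP connection using only the Go standard library. `encodeRESP` and `slaveOf` are illustrative helpers, not part of Codis, and the sketch assumes the server has no password (otherwise `AUTH` must be sent first). In a Codis-managed group you would normally let the dashboard or codis-fe set up replication instead of issuing `SLAVEOF` by hand, so that the dashboard's view of the group stays consistent.

```go
package main

import (
	"bufio"
	"fmt"
	"net"
	"strings"
	"time"
)

// encodeRESP encodes a command as a RESP array of bulk strings,
// the wire format Redis accepts from clients.
func encodeRESP(args ...string) []byte {
	var b strings.Builder
	fmt.Fprintf(&b, "*%d\r\n", len(args))
	for _, a := range args {
		fmt.Fprintf(&b, "$%d\r\n%s\r\n", len(a), a) // length is in bytes
	}
	return []byte(b.String())
}

// slaveOf asks the server at addr to replicate from masterAddr by sending
// SLAVEOF host port, and returns the first reply line (e.g. "+OK").
// Illustrative helper; assumes no AUTH is required.
func slaveOf(addr, masterAddr string) (string, error) {
	host, port, err := net.SplitHostPort(masterAddr)
	if err != nil {
		return "", err
	}
	conn, err := net.DialTimeout("tcp", addr, 3*time.Second)
	if err != nil {
		return "", err
	}
	defer conn.Close()
	conn.SetDeadline(time.Now().Add(3 * time.Second))
	if _, err := conn.Write(encodeRESP("SLAVEOF", host, port)); err != nil {
		return "", err
	}
	line, err := bufio.NewReader(conn).ReadString('\n')
	if err != nil {
		return "", err
	}
	return strings.TrimRight(line, "\r\n"), nil
}

func main() {
	// Show the bytes that would make 6381 a slave of the new master 6382.
	fmt.Printf("%q\n", encodeRESP("SLAVEOF", "10.1.60.72", "6382"))
}
```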

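@zhongjimax Thanks for the answers. Regarding question 1: the problem occurs when, with Codis-HA running, I add back the failed master after restarting it; it gets removed and cannot be added. The ha log shows that the operation of adding the codis-server turned into a shutdown operation, and I don't know why.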

    2018/03/19 16:42:04 main.go:291: [DEBUG] call rpc group-del-server OK
    2018/03/19 16:42:04 main.go:294: [WARN] try to shutdown codis-server(slave) 10.1.60.72:6381
    2018/03/19 16:42:04 main.go:297: [WARN] connect to codis-server(slave) 10.1.60.72:6381 failed [error]: dial tcp 10.1.60.72:6381: getsockopt: connection refused

Regarding question 5: does "performing a manual failover" mean clicking the synced button in the fe web UI to send a sync command?
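For reference, the HA log above shows what a promotion looks like at the API level: codis-ha sends `PUT http://10.1.60.71:18080/api/topom/group/promote/95b62887719520f17e312eaa76d28f2b/4/10.1.60.72:6382` to the dashboard. The sketch below rebuilds that URL pattern and issues the same kind of request. `promoteURL` and `promote` are hypothetical helpers; the hex segment is copied from the log and appears to be a dashboard-specific token; and treating any non-2xx status as failure is an assumption about how the dashboard reports errors. Which endpoint each codis-fe button calls is not covered in this thread.

```go
package main

import (
	"fmt"
	"net/http"
	"time"
)

// promoteURL rebuilds the URL pattern seen in the codis-ha log:
// PUT http://<dashboard>/api/topom/group/promote/<token>/<gid>/<addr>
func promoteURL(dashboard, token string, gid int, addr string) string {
	return fmt.Sprintf("http://%s/api/topom/group/promote/%s/%d/%s", dashboard, token, gid, addr)
}

// promote sends that PUT request. Treating non-2xx as failure is an
// assumption, not documented dashboard behavior.
func promote(dashboard, token string, gid int, addr string) error {
	req, err := http.NewRequest(http.MethodPut, promoteURL(dashboard, token, gid, addr), nil)
	if err != nil {
		return err
	}
	client := &http.Client{Timeout: 5 * time.Second}
	resp, err := client.Do(req)
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode/100 != 2 {
		return fmt.Errorf("promote %s in group %d: %s", addr, gid, resp.Status)
	}
	return nil
}

func main() {
	// URL that would promote the recovered 6381 back to master of group 4.
	fmt.Println(promoteURL("10.1.60.71:18080", "95b62887719520f17e312eaa76d28f2b", 4, "10.1.60.72:6381"))
}
```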

I'd still suggest not using the ha tool. We have never really recommended it.


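@spinlock I don't necessarily need codis-ha. For research purposes, I want to understand how Codis handles master/slave failover and disaster recovery/backup, and what the related procedures are.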

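Thanks for the answers. One more question: while trying the redis-sentinel approach, I ran into a problem where the redis-sentinel instances cannot communicate with each other properly. I'm stuck for now; could you give me some pointers?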

Log

    [root@node01 sentinel]# redis-server /usr/local/codis/redis/sentinel/sentinel.conf --sentinel
    4414:X 21 Mar 17:11:25.504 * Increased maximum number of open files to 10032 (it was originally set to 1024).
    4414:X 21 Mar 17:11:25.507 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.
    4414:X 21 Mar 17:11:25.507 # Sentinel ID is 5246f969339b4e1f2175688a374413bfc12c83f9
    4414:X 21 Mar 17:11:25.507 # +monitor master mymaster 10.1.60.72 6380 quorum 2
    4414:X 21 Mar 17:12:25.559 # +sdown sentinel 34ff4c4b203a4bab30f32723e77b0fb51c54e00c 10.1.60.73 26379 @ mymaster 10.1.60.72 6380
    4414:X 21 Mar 17:12:25.559 # +sdown sentinel 8dac6f5e489f0a04aae20b957c05dcd00de1ff12 10.1.60.71 26379 @ mymaster 10.1.60.72 6380
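In this log, `+sdown sentinel ...` means the local sentinel has marked the peer sentinels at 10.1.60.73:26379 and 10.1.60.71:26379 as subjectively down, i.e. it has not received valid replies to its PINGs to them within `down-after-milliseconds`. A reasonable first step is to check, from each sentinel host, whether the peers' sentinel ports are reachable and answer `PING` with `+PONG`: a refused connection or a timeout usually points to a firewall rule or the `bind` setting, and a `-DENIED` reply points to protected mode. The sketch below performs that check with the Go standard library; `checkPong` and `pingPeer` are illustrative names, and the peer list is taken from the log.

```go
package main

import (
	"bufio"
	"fmt"
	"net"
	"strings"
	"time"
)

// checkPong validates a single reply line to PING.
func checkPong(line string) error {
	line = strings.TrimRight(line, "\r\n")
	if line == "+PONG" {
		return nil
	}
	return fmt.Errorf("unexpected reply %q", line)
}

// pingPeer dials addr, sends an inline PING, and expects +PONG back.
func pingPeer(addr string, timeout time.Duration) error {
	conn, err := net.DialTimeout("tcp", addr, timeout)
	if err != nil {
		return err // refused or timed out: port not reachable from here
	}
	defer conn.Close()
	conn.SetDeadline(time.Now().Add(timeout))
	if _, err := conn.Write([]byte("PING\r\n")); err != nil {
		return err
	}
	line, err := bufio.NewReader(conn).ReadString('\n')
	if err != nil {
		return err
	}
	return checkPong(line)
}

func main() {
	// Peer sentinels reported as +sdown in the log; run on each sentinel host.
	peers := []string{"10.1.60.71:26379", "10.1.60.73:26379"}
	for _, p := range peers {
		if err := pingPeer(p, 2*time.Second); err != nil {
			fmt.Printf("%s: %v\n", p, err)
		} else {
			fmt.Printf("%s: ok\n", p)
		}
	}
}
```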

I'm not much better with sentinel either, haha.


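While using codis-ha, I found that after a master/slave switchover in a group, the proxy did not pick up the new master, so backend connections to that group failed. How does codis-ha make sure the proxies get the new master information in time?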

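Looking into it, codis-ha only performs the switchover itself. So why didn't the proxy pick up the latest master information after the switchover succeeded?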