Arkouda does not support overwriting individual datasets in HDF5 -> should it?

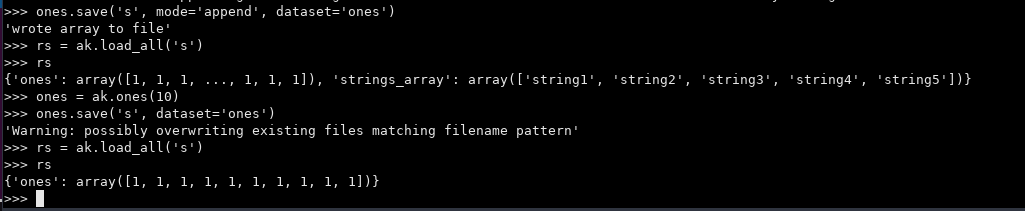

I noticed that, when I attempt to overwrite a dataset the entire hdf5 file is overwritten:

Question -> should we support overwriting individual datasets? Just wanted to open it up for discussion.

--John

@mhmerrill @reuster986 Thoughts on this one?

@hokiegeek2 I think ideally we should support overwriting an individual dataset, but if it is too hard to implement, we don't need to make it a high priority.

Assigning this to myself so that I remember to keep this in mind with other HDF5 updates/research.

@pierce314159, @joshmarshall1, @jaketrookman, @hokiegeek2 - Want to get your input here. I believe this would be extremely useful, though there are a few potential problems. If we add this to the current write functionality, Truncate mode would then need to overwrite only the columns whose names match the data being written, rather than the entire file.

I think the best way to handle this is to add update functions, backed by a new message and processing workflow in Chapel, that update the columns if they exist and add them if they do not. The update would also fail if the provided file name did not match an existing file. Does that make sense?
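A rough sketch of the difference between the current truncate behavior and the proposed update behavior, under loud assumptions: the HDF5 file is modeled as an in-memory dict mapping column (dataset) names to values, and `write_truncate` and `update` are hypothetical names, not Arkouda's API or the Chapel message handlers.

```python
from typing import Dict, List

# Hypothetical in-memory stand-in for HDF5 files: file name -> {column name -> values}.
# This is NOT the Arkouda or h5py API; it only models the semantics being discussed.
Files = Dict[str, Dict[str, List[int]]]


def write_truncate(files: Files, filename: str, datasets: Dict[str, List[int]]) -> None:
    """Current behavior: the whole file is replaced, so unrelated columns are lost."""
    files[filename] = dict(datasets)


def update(files: Files, filename: str, datasets: Dict[str, List[int]]) -> None:
    """Proposed behavior: overwrite columns whose names match, add any new ones,
    and leave every other column in the file untouched."""
    if filename not in files:
        # Per the proposal, an update fails if the file does not already exist.
        raise FileNotFoundError(f"{filename} does not exist; nothing to update")
    files[filename].update(datasets)


files: Files = {}
write_truncate(files, "data.h5", {"a": [1, 2], "b": [3, 4]})
update(files, "data.h5", {"b": [5, 6], "c": [7]})
print(files["data.h5"])  # {'a': [1, 2], 'b': [5, 6], 'c': [7]}
write_truncate(files, "data.h5", {"b": [0]})
print(files["data.h5"])  # {'b': [0]}
```

Keeping update as a separate entry point, rather than changing what Truncate mode means, leaves existing `write` callers unaffected.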

@Ethan-DeBandi99 I agree this would be a good feature and your plan sounds good to me 💯