AksEndpoint Versioning error (syncing with inference cluster)

- Package Name: azureml

- Package Version: 1.39.0

- Operating System: Linux

- Python Version: 3.9.10

Describe the bug

When two endpoints are deployed through `AksEndpoint` with the same version name (for example, `blue`), one endpoint overrides the other in the k8s cluster.

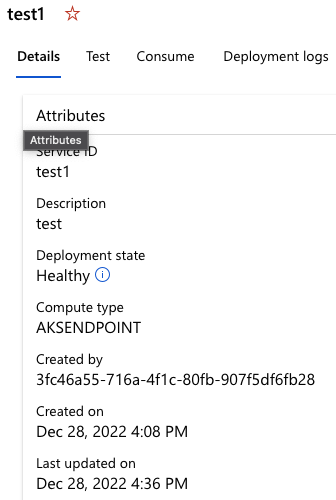

- Both endpoints are shown as healthy in ML studio once deployment has fully completed.

- The deployment logs are gone in ML studio.

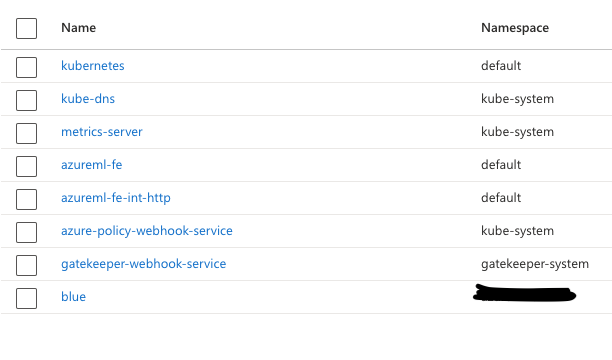

- In the Kubernetes service view in Azure Portal, there is only one `blue` in `Services and Ingresses`.

- The URL for the `blue` version is different from expected.

To Reproduce

Steps to reproduce the behavior:

- Create one endpoint named `test1` with version `blue`, attached to the inference cluster `test-cluster`.

- Create another endpoint named `test2` with version `blue`, attached to the inference cluster `test-cluster`.

- Check the status of the endpoints in ML studio.

- Go to Azure Portal, find the inference cluster `test-cluster`, and go to `Services and Ingresses`.

- Check the URL for the specific version `blue`.

- Deployment script:

      import os

      from azureml.core import Workspace
      from azureml.core.authentication import ServicePrincipalAuthentication
      from azureml.core.compute import ComputeTarget
      from azureml.core.environment import Environment
      from azureml.core.model import InferenceConfig, Model
      from azureml.core.webservice import AksEndpoint, LocalWebservice, Webservice
      from azureml.exceptions import ComputeTargetException
      from dotenv import dotenv_values, load_dotenv

      # Read credentials and workspace settings from a local .env file.
      load_dotenv(override=True)

      subscription_id = os.getenv("subscriptionId")
      tenant_id = os.getenv("tenantId")
      client_id = os.getenv("clientId")
      client_secret = os.getenv("clientSecret")
      workspace = os.getenv("workspace")
      resource_group = os.getenv("resource_group")

      authentication = ServicePrincipalAuthentication(
          tenant_id=tenant_id,
          service_principal_id=client_id,
          service_principal_password=client_secret,
      )

      # From here on, `workspace` is the Workspace object, not the name string.
      workspace = Workspace.get(
          name=workspace,
          subscription_id=subscription_id,
          resource_group=resource_group,
          auth=authentication,
      )

      # The same configuration, with version_name="blue", is used for both endpoints.
      deployment_config = AksEndpoint.deploy_configuration(
          cpu_cores=2,
          memory_gb=2,
          description="test",
          traffic_percentile=100,
          version_name="blue",
      )

      myenv = Environment.from_conda_specification(
          name="myenv",
          file_path="./env.yaml",
      )

      inference_config = InferenceConfig(
          entry_script="test_inference.py",
          source_directory="./deploy",
          environment=myenv,
      )

      deployment_target = ComputeTarget(workspace=workspace, name="test")

      # First endpoint: test1, version blue.
      webservice = Model.deploy(
          workspace=workspace,
          name="test1",
          models=[],
          inference_config=inference_config,
          deployment_config=deployment_config,
          deployment_target=deployment_target,
          overwrite=True,
      )
      webservice.wait_for_deployment(show_output=True)

      # Second endpoint: test2, version blue, on the same inference cluster.
      webservice = Model.deploy(
          workspace=workspace,
          name="test2",
          models=[],
          inference_config=inference_config,
          deployment_config=deployment_config,
          deployment_target=deployment_target,
          overwrite=True,
      )
      webservice.wait_for_deployment(show_output=True)

- test_inference.py:

      import os
      import logging
      import pickle
      import json

      import numpy


      def init():
          global model
          logging.info("Init Complete")


      def run(raw_data):
          try:
              logging.info("Request received")
              data = json.loads(raw_data)["data"]
              return data
          except Exception as e:
              error = str(e)
              return error
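The scoring script can be exercised locally, without a cluster, to rule it out as the cause. The following is a minimal sketch that repeats the `run` function from `test_inference.py` above and calls it directly; the payloads are made up for illustration and are not taken from the original report.

```python
import json
import logging


def run(raw_data):
    """Echo the "data" field of a JSON request body, as in test_inference.py."""
    try:
        logging.info("Request received")
        return json.loads(raw_data)["data"]
    except Exception as e:
        # Errors are returned as plain strings, matching the original script.
        return str(e)


# Hypothetical payloads, used only to illustrate the request shape.
echoed = run(json.dumps({"data": [1, 2, 3]}))
failed = run("not json")

print(echoed)
print(failed)
```

A well-formed request comes back unchanged, and a malformed one comes back as an error string rather than raising, so the script itself does not depend on the endpoint or version name.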

Expected behavior

- Different endpoints should be able to use the same version name when attached to the same inference cluster.

- The scoring URIs should be `/api/v1/service/test1/blue/score` and `/api/v1/service/test2/blue/score`, while the current setting is `/api/v1/service/blue/score` for both `test1` and `test2`.
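To make the collision concrete, here is a small sketch that builds both path shapes quoted above and reports which paths are shared by more than one endpoint. The helper names (`observed_path`, `expected_path`, `find_collisions`) are hypothetical and are not part of the azureml SDK; only the path formats come from this issue.

```python
from collections import defaultdict


def observed_path(endpoint_name: str, version_name: str) -> str:
    """Path shape reported in this issue: the endpoint name is not part of it."""
    return f"/api/v1/service/{version_name}/score"


def expected_path(endpoint_name: str, version_name: str) -> str:
    """Path shape the reporter expects: scoped by endpoint name."""
    return f"/api/v1/service/{endpoint_name}/{version_name}/score"


def find_collisions(deployments, path_fn):
    """Return {path: [endpoint names]} for paths shared by several endpoints."""
    by_path = defaultdict(list)
    for endpoint_name, version_name in deployments:
        by_path[path_fn(endpoint_name, version_name)].append(endpoint_name)
    return {path: names for path, names in by_path.items() if len(names) > 1}


deployments = [("test1", "blue"), ("test2", "blue")]
observed = find_collisions(deployments, observed_path)
expected = find_collisions(deployments, expected_path)

print(observed)
print(expected)
```

With the observed shape, `test1` and `test2` map to the same path, which is consistent with only one `blue` entry appearing in `Services and Ingresses`; with the expected shape, no path is shared.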

Screenshots

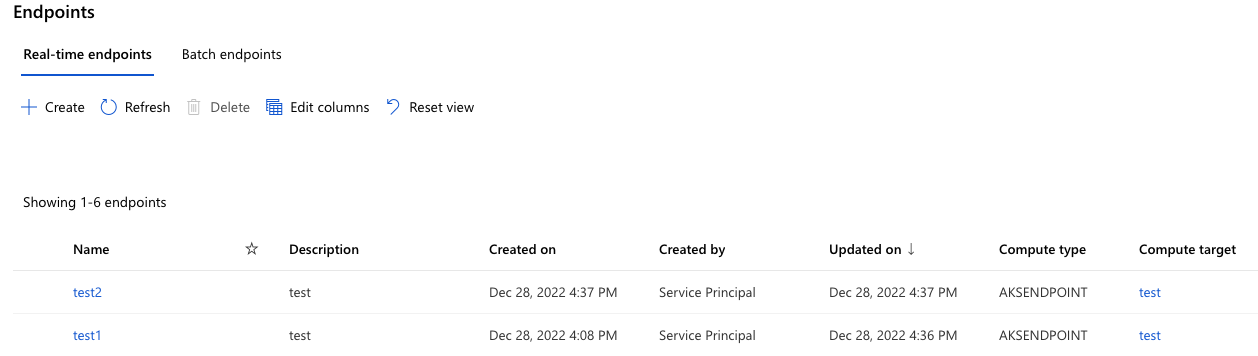

- View from ML studio / Endpoints

- View from Azure Portal / Services and Ingresses

- View from ML studio / Endpoints / test1

- View from ML studio / Endpoints / test2

Hi @hao-happify, thank you for opening an issue! I'll tag some folks who should be able to help, and we'll get back to you as soon as possible. @luigiw @azureml-github

Hello @mccoyp, any update on this? I also found that, when using Python SDK v1, the service goes down when I add another version, which causes the safe rollout to fail. Is it because versioning and safe rollout are no longer supported by v1? I couldn't find the safe rollout documentation for v1 since it has all been upgraded to v2.

@hao-happify thank you for the ping on this; I'll alert the ML team again and get in contact directly. @luigiw @azureml-github

@hao-happify To improve the product, we have released a new version of azureml-fe, and we are now transparently upgrading azureml-fe from v1 to v2 in our customers' AKS clusters. However, the v1 AKSEndpoint, which was only in preview and never reached GA, has been deprecated and will not be supported with the new features/capabilities. As a result, azureml-fe v2 doesn't support routing traffic on the v1 AKSEndpoint.

We recommend that you stop using the v1 AKSEndpoint (which is not GA), and that you do not build production workloads on a preview feature, to prevent incompatibilities.

There are three options to mitigate this issue:

- If you'd like to keep using endpoints, you can migrate directly to our v2 stack and use SDK/CLI v2 to create the online endpoint in v2.

- If you'd like to stay on v1 while using the new azureml-fe v2, you have to use the v1 webservice instead of the deprecated v1 endpoint, since scoring fe v2 doesn't support v1 endpoint traffic routing.

- If you'd like to continue using the v1 AKSEndpoint, we can roll back azureml-fe to v1 in your clusters. In this case, the version of azureml-fe will be pinned at v1, which means you will not gain the performance improvements and new feature support in the future. To roll back azureml-fe, please send an email to [email protected], indicating that you want to pin your fe version to v1, and provide the AKS cluster resource ID.
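For the second option, a rough sketch of what switching the reporter's script from a v1 endpoint to a plain v1 webservice could look like is shown below. It assumes the `workspace`, `inference_config`, and `deployment_target` objects from the deployment script earlier in this thread; the function name `deploy_v1_webservice` is hypothetical, and the sketch has not been verified against a cluster running azureml-fe v2.

```python
def deploy_v1_webservice(workspace, name, inference_config, deployment_target):
    """Deploy a plain v1 AKS webservice (no endpoint, no version_name)."""
    # Imported lazily so the sketch can be loaded without azureml-core installed.
    from azureml.core.model import Model
    from azureml.core.webservice import AksWebservice

    # Same resources as the original script, but without traffic_percentile
    # and version_name, which belong to AksEndpoint only.
    deployment_config = AksWebservice.deploy_configuration(
        cpu_cores=2,
        memory_gb=2,
        description="test",
    )
    service = Model.deploy(
        workspace=workspace,
        name=name,
        models=[],
        inference_config=inference_config,
        deployment_config=deployment_config,
        deployment_target=deployment_target,
        overwrite=True,
    )
    service.wait_for_deployment(show_output=True)
    return service
```

Calling it once per service name (for example `test1` and `test2`) gives each deployment its own webservice, at the cost of the per-version traffic splitting that the endpoint provided.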

Hi @hao-happify. Thank you for opening this issue and giving us the opportunity to assist. We believe that this has been addressed. If you feel that further discussion is needed, please add a comment with the text “/unresolve” to remove the “issue-addressed” label and continue the conversation.

@jiaochenlu

Thank you for the explanation and the recommendation. I will try to upgrade to v2 instead.

Hi @hao-happify, since you haven’t asked that we “/unresolve” the issue, we’ll close this out. If you believe further discussion is needed, please add a comment “/unresolve” to reopen the issue.