FAIL get pod for host clickhouse/0-0 err:pods "chi-clickhouse-cluster-replicated-0-0-0" not found

Version: 0.18.3. During creation, after investigating, I found that the Service was not created, which breaks DNS resolution.

could you share

kubectl get sts -l chi=clickhouse-cluster-replicated --all-namespaces

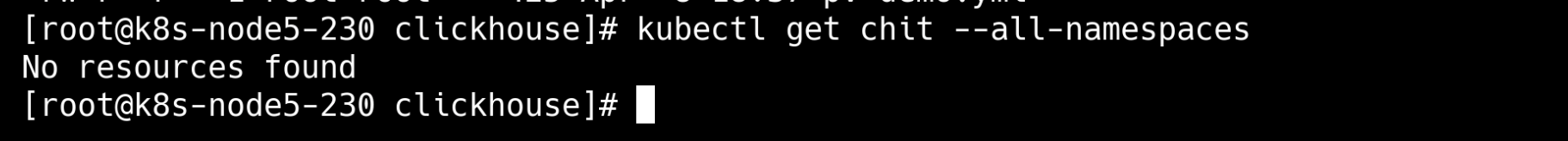

kubectl get chi --all-namespaces

@Slach

@yuweni99 please stop sharing images. Could you share the results of the following command as text?

kubectl get chi -n clickhouse clickhouse-cluster -o yaml

@Slach

apiVersion: clickhouse.altinity.com/v1
kind: ClickHouseInstallation
metadata:
  creationTimestamp: "2022-04-08T12:32:21Z"
  finalizers:
  - finalizer.clickhouseinstallation.altinity.com
  generation: 1
  managedFields:
  - apiVersion: clickhouse.altinity.com/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:kubectl.kubernetes.io/last-applied-configuration: {}
      f:spec:
        .: {}
        f:configuration:
          .: {}
          f:clusters: {}
          f:profiles: {}
          f:quotas: {}
          f:users:
            .: {}
            f:admin/networks/ip: {}
            f:admin/password_sha256_hex: {}
            f:admin/profile: {}
            f:admin/quota: {}
          f:zookeeper:
            .: {}
            f:nodes: {}
        f:defaults:
          .: {}
          f:templates:
            .: {}
            f:dataVolumeClaimTemplate: {}
            f:logVolumeClaimTemplate: {}
            f:podTemplate: {}
        f:templates: {}
    manager: kubectl-client-side-apply
    operation: Update
    time: "2022-04-08T12:32:21Z"
  - apiVersion: clickhouse.altinity.com/v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:finalizers:
          .: {}
          v:"finalizer.clickhouseinstallation.altinity.com": {}
      f:spec:
        f:configuration:
          f:profiles:
            f:default/max_memory_usage: {}
            f:default/max_threads: {}
          f:quotas:
            f:default/interval/duration: {}
        f:templates:
          f:podTemplates: {}
          f:volumeClaimTemplates: {}
      f:status:
        .: {}
        f:action: {}
        f:actions: {}
        f:added: {}
        f:chop-commit: {}
        f:chop-date: {}
        f:chop-version: {}
        f:clusters: {}
        f:endpoint: {}
        f:error: {}
        f:errors: {}
        f:fqdns: {}
        f:hosts: {}
        f:normalized:
          .: {}
          f:apiVersion: {}
          f:kind: {}
          f:metadata: {}
          f:spec: {}
          f:status: {}
        f:pods: {}
        f:replicas: {}
        f:shards: {}
        f:status: {}
        f:taskID: {}
        f:taskIDsStarted: {}
    manager: clickhouse-operator
    operation: Update
    time: "2022-04-08T12:37:28Z"
  name: clickhouse-cluster
  namespace: clickhouse
  resourceVersion: "56737500"
  selfLink: /apis/clickhouse.altinity.com/v1/namespaces/clickhouse/clickhouseinstallations/clickhouse-cluster
  uid: eff8e107-da49-48bc-a7b9-71a46649fd34

spec:
  configuration:
    clusters:
    - layout:
        replicasCount: 2
        shardsCount: 3
      name: replicated
    profiles:
      default/max_memory_usage: 1000000000
      default/max_threads: 8
    quotas:
      default/interval/duration: 3600
    users:
      admin/networks/ip: ::/0
      admin/password_sha256_hex: c978e843bf46455e59c484ace79e813e8099103a3d42a92f9dc2fcfcce452643
      admin/profile: default
      admin/quota: default
    zookeeper:
      nodes:
      - host: zookeeper.place-code
  defaults:
    templates:
      dataVolumeClaimTemplate: data-volume-template
      logVolumeClaimTemplate: log-volume-template
      podTemplate: clickhouse:21.3
  templates:
    podTemplates:
    - name: clickhouse:21.3
      spec:
        containers:
        - image: 172.26.8.230/yandex/clickhouse-server:21.3.20.1
          name: clickhouse-pod
    volumeClaimTemplates:
    - name: data-volume-template
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 240Gi
        storageClassName: nfs-216-wrok2-client
    - name: log-volume-template
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 20Gi
        storageClassName: nfs-216-wrok2-client

status:
  action: 'FAILED to reconcile StatefulSet: chi-clickhouse-cluster-replicated-0-0
    CHI: clickhouse-cluster '
  actions:
  - 'FAILED to reconcile StatefulSet: chi-clickhouse-cluster-replicated-0-0 CHI: clickhouse-cluster '
  - Create StatefulSet clickhouse/chi-clickhouse-cluster-replicated-0-0 - error ignored
  - Create StatefulSet clickhouse/chi-clickhouse-cluster-replicated-0-0 - started
  - Update ConfigMap clickhouse/chi-clickhouse-cluster-deploy-confd-replicated-0-0
  - Reconcile Host 0-0 started
  - Update ConfigMap clickhouse/chi-clickhouse-cluster-common-usersd
  - Update ConfigMap clickhouse/chi-clickhouse-cluster-common-configd
  - Update Service clickhouse/clickhouse-clickhouse-cluster
  - reconcile started
  added: 1
  chop-commit: 76f6a6a1
  chop-date: 2022-04-08T10:10:40
  chop-version: 0.18.3
  clusters: 1
  endpoint: clickhouse-clickhouse-cluster.clickhouse.svc.cluster.local
  error: 'FAILED update: onStatefulSetCreateFailed - ignore'
  errors:
  - 'FAILED update: onStatefulSetCreateFailed - ignore'
  - 'FAILED to reconcile StatefulSet: chi-clickhouse-cluster-replicated-0-0 CHI: clickhouse-cluster '
  fqdns:
  - chi-clickhouse-cluster-replicated-0-0.clickhouse.svc.cluster.local
  - chi-clickhouse-cluster-replicated-0-1.clickhouse.svc.cluster.local
  - chi-clickhouse-cluster-replicated-1-0.clickhouse.svc.cluster.local
  - chi-clickhouse-cluster-replicated-1-1.clickhouse.svc.cluster.local
  - chi-clickhouse-cluster-replicated-2-0.clickhouse.svc.cluster.local
  - chi-clickhouse-cluster-replicated-2-1.clickhouse.svc.cluster.local
  hosts: 6
  normalized:
    apiVersion: clickhouse.altinity.com/v1
    kind: ClickHouseInstallation
    metadata:
      creationTimestamp: "2022-04-08T12:32:21Z"
      finalizers:
      - finalizer.clickhouseinstallation.altinity.com
      generation: 1
      managedFields:
      - apiVersion: clickhouse.altinity.com/v1
        fieldsType: FieldsV1
        fieldsV1:
          f:metadata:
            f:finalizers:
              .: {}
              v:"finalizer.clickhouseinstallation.altinity.com": {}
        manager: clickhouse-operator
        operation: Update
        time: "2022-04-08T12:32:21Z"
      - apiVersion: clickhouse.altinity.com/v1
        fieldsType: FieldsV1
        fieldsV1:
          f:metadata:
            f:annotations:
              .: {}
              f:kubectl.kubernetes.io/last-applied-configuration: {}
          f:spec:
            .: {}
            f:configuration:
              .: {}
              f:clusters: {}
              f:profiles:
                .: {}
                f:default/max_memory_usage: {}
                f:default/max_threads: {}
              f:quotas:
                .: {}
                f:default/interval/duration: {}
              f:users:
                .: {}
                f:admin/networks/ip: {}
                f:admin/password_sha256_hex: {}
                f:admin/profile: {}
                f:admin/quota: {}
              f:zookeeper:
                .: {}
                f:nodes: {}
            f:defaults:
              .: {}
              f:templates:
                .: {}
                f:dataVolumeClaimTemplate: {}
                f:logVolumeClaimTemplate: {}
                f:podTemplate: {}
            f:templates:
              .: {}
              f:podTemplates: {}
              f:volumeClaimTemplates: {}
        manager: kubectl-client-side-apply
        operation: Update
        time: "2022-04-08T12:32:21Z"
      name: clickhouse-cluster
      namespace: clickhouse
      resourceVersion: "56734481"
      selfLink: /apis/clickhouse.altinity.com/v1/namespaces/clickhouse/clickhouseinstallations/clickhouse-cluster
      uid: eff8e107-da49-48bc-a7b9-71a46649fd34
    spec:
      configuration:
        clusters:
        - layout:
            replicas:
            - name: "0"
              shards:
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 0-0
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 1-0
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 2-0
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              shardsCount: 3
              templates:
                dataVolumeClaimTemplate: data-volume-template
                logVolumeClaimTemplate: log-volume-template
                podTemplate: clickhouse:21.3
            - name: "1"
              shards:
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 0-1
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 1-1
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 2-1
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              shardsCount: 3
              templates:
                dataVolumeClaimTemplate: data-volume-template
                logVolumeClaimTemplate: log-volume-template
                podTemplate: clickhouse:21.3
            replicasCount: 2
            shards:
            - internalReplication: "true"
              name: "0"
              replicas:
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 0-0
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 0-1
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              replicasCount: 2
              templates:
                dataVolumeClaimTemplate: data-volume-template
                logVolumeClaimTemplate: log-volume-template
                podTemplate: clickhouse:21.3
            - internalReplication: "true"
              name: "1"
              replicas:
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 1-0
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 1-1
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              replicasCount: 2
              templates:
                dataVolumeClaimTemplate: data-volume-template
                logVolumeClaimTemplate: log-volume-template
                podTemplate: clickhouse:21.3
            - internalReplication: "true"
              name: "2"
              replicas:
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 2-0
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              - httpPort: 8123
                interserverHTTPPort: 9009
                name: 2-1
                tcpPort: 9000
                templates:
                  dataVolumeClaimTemplate: data-volume-template
                  logVolumeClaimTemplate: log-volume-template
                  podTemplate: clickhouse:21.3
              replicasCount: 2
              templates:
                dataVolumeClaimTemplate: data-volume-template
                logVolumeClaimTemplate: log-volume-template
                podTemplate: clickhouse:21.3
            shardsCount: 3
          name: replicated
          templates:
            dataVolumeClaimTemplate: data-volume-template
            logVolumeClaimTemplate: log-volume-template
            podTemplate: clickhouse:21.3
          zookeeper:
            nodes:
            - host: zookeeper.place-code
              port: 2181
        profiles:
          default/max_memory_usage: "1000000000"
          default/max_threads: "8"
        quotas:
          default/interval/duration: "3600"
        users:
          admin/networks/host_regexp: (chi-clickhouse-cluster-[^.]+\d+-\d+|clickhouse\-clickhouse-cluster)\.clickhouse\.svc\.cluster\.local$
          admin/networks/ip: ::/0
          admin/password_sha256_hex: c978e843bf46455e59c484ace79e813e8099103a3d42a92f9dc2fcfcce452643
          admin/profile: default
          admin/quota: default
          default/networks/host_regexp: (chi-clickhouse-cluster-[^.]+\d+-\d+|clickhouse\-clickhouse-cluster)\.clickhouse\.svc\.cluster\.local$
          default/networks/ip:
          - ::1
          - 127.0.0.1
          default/profile: default
          default/quota: default
        zookeeper:
          nodes:
          - host: zookeeper.place-code
            port: 2181
      defaults:
        replicasUseFQDN: "false"
        templates:
          dataVolumeClaimTemplate: data-volume-template
          logVolumeClaimTemplate: log-volume-template
          podTemplate: clickhouse:21.3
      reconciling:
        cleanup:
          reconcileFailedObjects:
            configMap: Retain
            pvc: Retain
            service: Retain
            statefulSet: Retain
          unknownObjects:
            configMap: Delete
            pvc: Delete
            service: Delete
            statefulSet: Delete
        configMapPropagationTimeout: 60
        policy: unspecified
      stop: "false"
      taskID: 124b74c3-b148-415a-970e-d3d9672bc8b7
      templates:
        PodTemplatesIndex: {}
        VolumeClaimTemplatesIndex: {}
        podTemplates:
        - metadata:
            creationTimestamp: null
          name: clickhouse:21.3
          spec:
            containers:
            - image: 172.26.8.230/yandex/clickhouse-server:21.3.20.1
              name: clickhouse-pod
              resources: {}
          zone: {}
        volumeClaimTemplates:
        - metadata:
            creationTimestamp: null
          name: data-volume-template
          reclaimPolicy: Delete
          spec:
            accessModes:
            - ReadWriteOnce
            resources:
              requests:
                storage: 240Gi
            storageClassName: nfs-216-wrok2-client
        - metadata:
            creationTimestamp: null
          name: log-volume-template
          reclaimPolicy: Delete
          spec:
            accessModes:
            - ReadWriteOnce
            resources:
              requests:
                storage: 20Gi
            storageClassName: nfs-216-wrok2-client
      templating:
        policy: manual
      troubleshoot: "false"
    status:
      clusters: 0
      hosts: 0
      replicas: 0
      shards: 0
      status: ""
  pods:
  - chi-clickhouse-cluster-replicated-0-0-0
  - chi-clickhouse-cluster-replicated-0-1-0
  - chi-clickhouse-cluster-replicated-1-0-0
  - chi-clickhouse-cluster-replicated-1-1-0
  - chi-clickhouse-cluster-replicated-2-0-0
  - chi-clickhouse-cluster-replicated-2-1-0
  replicas: 0
  shards: 3
  status: InProgress
  taskID: 124b74c3-b148-415a-970e-d3d9672bc8b7
  taskIDsStarted:
  - 124b74c3-b148-415a-970e-d3d9672bc8b7

@Slach this is the content of my deployment YAML file

apiVersion: "clickhouse.altinity.com/v1"
kind: "ClickHouseInstallation"
metadata:
  name: "clickhouse-cluster"
  namespace: "place-code"
spec:
  defaults:
    templates:
      dataVolumeClaimTemplate: data-volume-template
      logVolumeClaimTemplate: log-volume-template
      podTemplate: clickhouse:21.3
  configuration:
    zookeeper:
      nodes:
        - host: zookeeper.place-code
    clusters:
      - name: replicated
        layout:
          shardsCount: 3
          replicasCount: 2
    users:
      admin/password_sha256_hex: c978e843bf46455e59c484ace79e813e8099103a3d42a92f9dc2fcfcce452643
      admin/networks/ip: "::/0"
      admin/profile: default
      admin/quota: default
    profiles:
      default/max_memory_usage: 1000000000
      default/max_threads: 8
    quotas:
      default/interval/duration: 3600
  templates:
    volumeClaimTemplates:
      - name: data-volume-template
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 240Gi
          storageClassName: "nfs-216-wrok2-client"
      - name: log-volume-template
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 20Gi
          storageClassName: "nfs-216-wrok2-client"
    podTemplates:
      - name: clickhouse:21.3
        spec:
          containers:
            - name: clickhouse-pod
              image: 172.26.8.230/yandex/clickhouse-server:21.3.20.1

@Slach My server cannot access the Internet

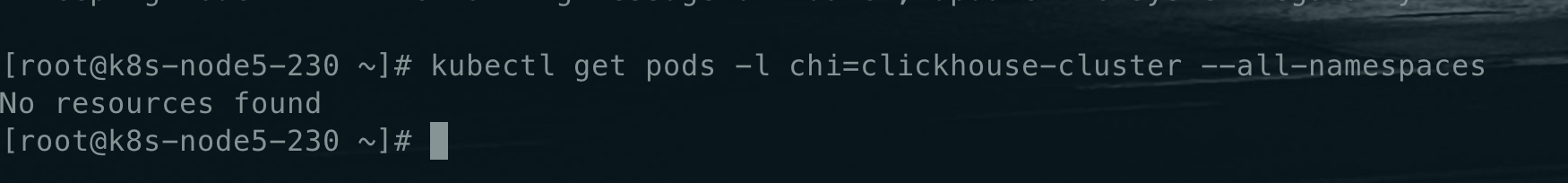

kubectl get pods -l chi=clickhouse-cluster --all-namespaces

@Slach

kubectl get sts -l chi=clickhouse-cluster --all-namespaces

@Slach

@Slach Is there any way to solve my problem?

kubectl get sts --all-namespaces

Currently, I don't see the root cause of the operator's behavior.

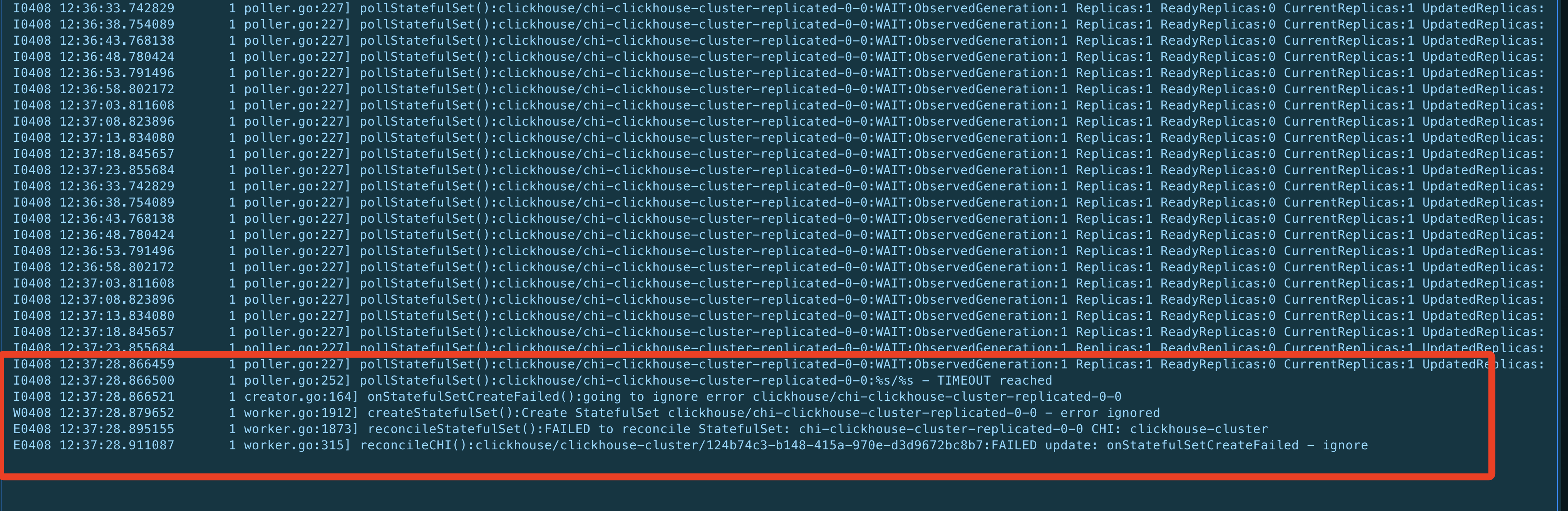

TIMEOUT means clickhouse-operator reached its 600s timeout while trying to apply the manifest for a StatefulSet managed by the operator.
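To illustrate what such a timeout means in general, here is a minimal shell sketch of polling a check until a deadline passes. The `wait_until` helper and its arguments are hypothetical and are not the operator's actual implementation; only the 600s figure comes from the message above.

```shell
#!/bin/sh
# Hypothetical helper (not operator code): re-run a check command every
# <interval> seconds until it succeeds or <timeout> seconds of waiting
# have accumulated.
wait_until() {
  timeout=$1; interval=$2; shift 2
  elapsed=0
  while ! "$@"; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "TIMEOUT after ${elapsed}s" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  return 0
}

# Example check one might pass in (assumes kubectl access to the cluster):
# sts_ready() {
#   [ "$(kubectl get sts -n clickhouse chi-clickhouse-cluster-replicated-0-0 \
#        -o jsonpath='{.status.readyReplicas}')" = "1" ]
# }
# wait_until 600 5 sts_ready
```

If the check never succeeds, as with a pod stuck in CrashLoopBackOff, the loop gives up once the deadline is reached, which is roughly the situation the TIMEOUT message describes.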

The shared kind: ClickHouseInstallation manifest looks OK.

could you share

kubectl get pvc -l chi=clickhouse-cluster --all-namespaces

and please stop sharing images; they are unusable and get in the way of solving your problem

@Slach

[root@k8s-node5-230 ~]# kubectl get sts --all-namespaces
NAMESPACE                      NAME                                    READY   AGE
clickhouse                     chi-clickhouse-cluster-replicated-0-0   0/1     2d17h
kube-system                    snapshot-controller                     1/1     96d
kubesphere-logging-system      elasticsearch-logging-data              3/3     96d
kubesphere-logging-system      elasticsearch-logging-discovery         3/3     96d
kubesphere-monitoring-system   alertmanager-main                       3/3     96d
kubesphere-monitoring-system   prometheus-k8s                          2/2     96d
kubesphere-monitoring-system   thanos-ruler-kubesphere                 2/2     96d
kubesphere-system              openldap                                2/2     96d
kubesphere-system              redis-ha-server                         3/3     96d
place-code-ops                 es-master-cluster-v1                    3/3     81d
place-code-ops                 es-slave-cluster-v1                     3/3     16d
place-code                     minio-new-v1                            1/1     16d
place-code                     mysql-data-cloud-v1                     1/1     18d
place-code                     mysql-health-record-v1                  1/1     85d
place-code                     mysql-nacos-v1                          1/1     14d
place-code                     mysql-v1                                1/1     89d
place-code                     nacos-cluster-v1                        3/3     95d
place-code                     neo4j-cluster-neo4j-core                3/3     15d
place-code                     neo4j-cluster-neo4j-replica             3/3     15d
place-code                     redis-cluster                           10/10   18d
place-code                     redis-v1                                1/1     95d
place-code                     zookeeper                               3/3     4d23h
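With a listing this long, the one StatefulSet that is not ready is easy to miss. A small sketch that filters the READY column; the `not_ready_sts` helper is hypothetical and assumes the NAMESPACE NAME READY AGE column layout shown above.

```shell
#!/bin/sh
# Print namespace/name for every StatefulSet whose READY column
# (ready/desired) shows fewer ready replicas than desired.
# Expects the column layout of `kubectl get sts --all-namespaces`.
not_ready_sts() {
  awk 'NR > 1 { split($3, r, "/"); if (r[1] + 0 < r[2] + 0) print $1 "/" $2 }'
}

# Sample rows copied from the output above.
not_ready_sts <<'EOF'
NAMESPACE    NAME                                    READY   AGE
clickhouse   chi-clickhouse-cluster-replicated-0-0   0/1     2d17h
kube-system  snapshot-controller                     1/1     96d
place-code   zookeeper                               3/3     4d23h
EOF
```

Against a live cluster the same filter could be used as `kubectl get sts --all-namespaces | not_ready_sts`.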

@Slach

[root@k8s-node5-230 ~]# kubectl get pvc -l chi=clickhouse-cluster --all-namespaces
No resources found

@Slach

[root@k8s-node5-230 ~]# kubectl get pvc -l chi=clickhouse-cluster --all-namespaces
No resources found
[root@k8s-node5-230 ~]# kubectl get pvc -n clickhouse-cluster
No resources found in clickhouse-cluster namespace.
[root@k8s-node5-230 ~]# kubectl get pvc -n clickhouse
NAME                                                           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
data-volume-template-chi-clickhouse-cluster-replicated-0-0-0   Bound    pvc-2a539ff7-1464-4c73-b0eb-85328edb40d2   10Gi       RWO            nfs-storage    93s
log-volume-template-chi-clickhouse-cluster-replicated-0-0-0    Bound    pvc-8f1f8565-49d6-498c-9b5e-8ad0df71fa79   10Gi       RWO            nfs-storage    93s
[root@k8s-node5-230 ~]# kubectl get pvc -l chi=clickhouse-cluster --all-namespaces
No resources found
[root@k8s-node5-230 ~]# kubectl get pvc -n clickhouse-cluster
No resources found in clickhouse-cluster namespace.
[root@k8s-node5-230 ~]# kubectl get pvc -l clickhouse.altinity.com/chi=clickhouse-cluster --all-namespaces
NAMESPACE    NAME                                                           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
clickhouse   data-volume-template-chi-clickhouse-cluster-replicated-0-0-0   Bound    pvc-2a539ff7-1464-4c73-b0eb-85328edb40d2   10Gi       RWO            nfs-storage    3m10s
clickhouse   log-volume-template-chi-clickhouse-cluster-replicated-0-0-0    Bound    pvc-8f1f8565-49d6-498c-9b5e-8ad0df71fa79   10Gi       RWO            nfs-storage    3m10s
[root@k8s-node5-230 ~]#

@Slach I see it has created "pvc" but the label is wrong
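For reference, the objects carry the label key `clickhouse.altinity.com/chi`, not plain `chi`, which is why the earlier `-l chi=...` queries returned nothing. A small sketch that builds the selector and, if kubectl is available, lists the related objects in one call; the `chi_selector` helper name is hypothetical.

```shell
#!/bin/sh
# Build the label selector that matched in the successful
# `kubectl get pvc -l ...` call above.
chi_selector() {
  echo "clickhouse.altinity.com/chi=$1"
}

selector=$(chi_selector clickhouse-cluster)
echo "$selector"

# Only query the cluster when kubectl is available; failures are tolerated
# so the sketch also runs on a machine without cluster access.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get sts,pods,pvc,svc -l "$selector" --all-namespaces --request-timeout=10s || true
fi
```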

I compiled your code myself from the v0.18.3 branch because my machine has no Internet access, so I needed to change your default busybox image.

kubectl describe sts -n clickhouse chi-clickhouse-cluster-replicated-0-0

kubectl describe pod -n clickhouse chi-clickhouse-cluster-replicated-0-0-0

kubectl logs -n clickhouse chi-clickhouse-cluster-replicated-0-0-0 --since=24h

@Slach kubectl describe sts -n clickhouse chi-clickhouse-cluster-replicated-0-0

Name: chi-clickhouse-cluster-replicated-0-0

Namespace: clickhouse

CreationTimestamp: Mon, 11 Apr 2022 13:41:01 +0800

Selector: clickhouse.altinity.com/app=chop,clickhouse.altinity.com/chi=clickhouse-cluster,clickhouse.altinity.com/cluster=replicated,clickhouse.altinity.com/namespace=clickhouse,clickhouse.altinity.com/replica=0,clickhouse.altinity.com/shard=0

Labels: clickhouse.altinity.com/app=chop

clickhouse.altinity.com/chi=clickhouse-cluster

clickhouse.altinity.com/cluster=replicated

clickhouse.altinity.com/namespace=clickhouse

clickhouse.altinity.com/object-version=561d2f40d653142d83050c12083090a9cef53407

clickhouse.altinity.com/replica=0

clickhouse.altinity.com/settings-version=e01c061d13aed42a1872cb97fa7a5c1c8842b606

clickhouse.altinity.com/shard=0

clickhouse.altinity.com/zookeeper-version=1ccb7c4a4316400407d91546b41551b9d9acd5bb

Annotations: <none>

Replicas: 1 desired | 1 total

Update Strategy: RollingUpdate

Pods Status: 1 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: clickhouse.altinity.com/app=chop

clickhouse.altinity.com/chi=clickhouse-cluster

clickhouse.altinity.com/cluster=replicated

clickhouse.altinity.com/namespace=clickhouse

clickhouse.altinity.com/ready=yes

clickhouse.altinity.com/replica=0

clickhouse.altinity.com/settings-version=e01c061d13aed42a1872cb97fa7a5c1c8842b606

clickhouse.altinity.com/shard=0

clickhouse.altinity.com/zookeeper-version=1ccb7c4a4316400407d91546b41551b9d9acd5bb

Containers:

clickhouse-pod:

Image: 172.26.8.230/yandex/clickhouse-server:21.3.20.1

Ports: 9000/TCP, 8123/TCP, 9009/TCP

Host Ports: 0/TCP, 0/TCP, 0/TCP

Liveness: http-get http://:http/ping delay=60s timeout=1s period=3s #success=1 #failure=10

Readiness: http-get http://:http/ping delay=10s timeout=1s period=3s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/clickhouse-server/conf.d/ from chi-clickhouse-cluster-deploy-confd-replicated-0-0 (rw)

/etc/clickhouse-server/config.d/ from chi-clickhouse-cluster-common-configd (rw)

/etc/clickhouse-server/users.d/ from chi-clickhouse-cluster-common-usersd (rw)

/var/lib/clickhouse from data-volume-template (rw)

/var/log/clickhouse-server from log-volume-template (rw)

clickhouse-log:

Image: 172.26.8.230/library/busybox:1.35.0

Port: <none>

Host Port: <none>

Command:

/bin/sh

-c

--

Args:

while true; do sleep 30; done;

Environment: <none>

Mounts:

/var/lib/clickhouse from data-volume-template (rw)

/var/log/clickhouse-server from log-volume-template (rw)

Volumes:

chi-clickhouse-cluster-common-configd:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: chi-clickhouse-cluster-common-configd

Optional: false

chi-clickhouse-cluster-common-usersd:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: chi-clickhouse-cluster-common-usersd

Optional: false

chi-clickhouse-cluster-deploy-confd-replicated-0-0:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: chi-clickhouse-cluster-deploy-confd-replicated-0-0

Optional: false

Volume Claims:

Name: data-volume-template

StorageClass:

Labels: clickhouse.altinity.com/app=chop

clickhouse.altinity.com/chi=clickhouse-cluster

clickhouse.altinity.com/cluster=replicated

clickhouse.altinity.com/namespace=clickhouse

clickhouse.altinity.com/replica=0

clickhouse.altinity.com/shard=0

Annotations: <none>

Capacity: 10Gi

Access Modes: [ReadWriteOnce]

Name: log-volume-template

StorageClass:

Labels: clickhouse.altinity.com/app=chop

clickhouse.altinity.com/chi=clickhouse-cluster

clickhouse.altinity.com/cluster=replicated

clickhouse.altinity.com/namespace=clickhouse

clickhouse.altinity.com/replica=0

clickhouse.altinity.com/shard=0

Annotations: <none>

Capacity: 10Gi

Access Modes: [ReadWriteOnce]

Events: <none>

[root@k8s-node5-230 ~]# kubectl describe pod -n clickhouse chi-clickhouse-cluster-replicated-0-0-0

Name: chi-clickhouse-cluster-replicated-0-0-0

Namespace: clickhouse

Priority: 0

Node: k8s-node9-67/172.26.8.67

Start Time: Mon, 11 Apr 2022 13:41:02 +0800

Labels: clickhouse.altinity.com/app=chop

clickhouse.altinity.com/chi=clickhouse-cluster

clickhouse.altinity.com/cluster=replicated

clickhouse.altinity.com/namespace=clickhouse

clickhouse.altinity.com/replica=0

clickhouse.altinity.com/settings-version=e01c061d13aed42a1872cb97fa7a5c1c8842b606

clickhouse.altinity.com/shard=0

clickhouse.altinity.com/zookeeper-version=1ccb7c4a4316400407d91546b41551b9d9acd5bb

controller-revision-hash=chi-clickhouse-cluster-replicated-0-0-74644b5995

ippool.network.kubesphere.io/name=default-ipv4-ippool

statefulset.kubernetes.io/pod-name=chi-clickhouse-cluster-replicated-0-0-0

Annotations: cni.projectcalico.org/containerID: 3b53d128f3ac1009b92065628b9c3f06b17a6ea0940fe42a79e6814bf9400b06

cni.projectcalico.org/podIP: 10.233.98.78/32

cni.projectcalico.org/podIPs: 10.233.98.78/32

Status: Running

IP: 10.233.98.78

IPs:

IP: 10.233.98.78

Controlled By: StatefulSet/chi-clickhouse-cluster-replicated-0-0

Containers:

clickhouse-pod:

Container ID: docker://19db8e38af34d19fb7559f7d5c36e0c892d6c5a6e5562c3ea870fab5c06de4e1

Image: 172.26.8.230/yandex/clickhouse-server:21.3.20.1

Image ID: docker-pullable://172.26.8.230/yandex/clickhouse-server@sha256:4eccfffb01d735ab7c1af9a97fbff0c532112a6871b2bb5fe5c478d86d247b7e

Ports: 9000/TCP, 8123/TCP, 9009/TCP

Host Ports: 0/TCP, 0/TCP, 0/TCP

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: Error

Exit Code: 137

Started: Mon, 11 Apr 2022 14:42:30 +0800

Finished: Mon, 11 Apr 2022 14:44:27 +0800

Ready: False

Restart Count: 17

Liveness: http-get http://:http/ping delay=60s timeout=1s period=3s #success=1 #failure=10

Readiness: http-get http://:http/ping delay=10s timeout=1s period=3s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/clickhouse-server/conf.d/ from chi-clickhouse-cluster-deploy-confd-replicated-0-0 (rw)

/etc/clickhouse-server/config.d/ from chi-clickhouse-cluster-common-configd (rw)

/etc/clickhouse-server/users.d/ from chi-clickhouse-cluster-common-usersd (rw)

/var/lib/clickhouse from data-volume-template (rw)

/var/log/clickhouse-server from log-volume-template (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-rxsbd (ro)

clickhouse-log:

Container ID: docker://d646ce2951f619f88270b930d5c4b2dd3e8a9eb7cff5384fba9f87362d3ebe02

Image: 172.26.8.230/library/busybox:1.35.0

Image ID: docker-pullable://172.26.8.230/library/busybox@sha256:e39d9c8ac4963d0b00a5af08678757b44c35ea8eb6be0cdfbeb1282e7f7e6003

Port: <none>

Host Port: <none>

Command:

/bin/sh

-c

--

Args:

while true; do sleep 30; done;

State: Running

Started: Mon, 11 Apr 2022 13:41:03 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/lib/clickhouse from data-volume-template (rw)

/var/log/clickhouse-server from log-volume-template (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-rxsbd (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

data-volume-template:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: data-volume-template-chi-clickhouse-cluster-replicated-0-0-0

ReadOnly: false

log-volume-template:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: log-volume-template-chi-clickhouse-cluster-replicated-0-0-0

ReadOnly: false

chi-clickhouse-cluster-common-configd:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: chi-clickhouse-cluster-common-configd

Optional: false

chi-clickhouse-cluster-common-usersd:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: chi-clickhouse-cluster-common-usersd

Optional: false

chi-clickhouse-cluster-deploy-confd-replicated-0-0:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: chi-clickhouse-cluster-deploy-confd-replicated-0-0

Optional: false

default-token-rxsbd:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-rxsbd

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning Unhealthy 6m24s (x588 over 66m) kubelet Readiness probe failed: Get "http://10.233.98.78:8123/ping": dial tcp 10.233.98.78:8123: connect: connection refused

Warning BackOff 75s (x148 over 52m) kubelet Back-off restarting failed container
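One detail in the pod description worth decoding is Exit Code 137 for clickhouse-pod. By the usual convention, exit codes above 128 mean the process was terminated by a signal, with the signal number being the code minus 128. The helper below is a hypothetical sketch of that arithmetic; it cannot tell who sent the signal (a forced kill by the kubelet and an OOM kill would look the same here).

```shell
#!/bin/sh
# For exit codes above 128, the convention is 128 + signal number.
# Print the signal number, or nothing for an ordinary exit status.
signal_from_exit_code() {
  if [ "$1" -gt 128 ]; then
    echo $(( $1 - 128 ))
  fi
}

sig=$(signal_from_exit_code 137)
echo "signal number: $sig"            # 137 - 128 = 9
echo "signal name: $(kill -l "$sig")" # ask the shell for the name of signal 9
```

Signal 9 is SIGKILL, so the server process did not exit on its own; it was killed.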

Temporary DNS error while resolving: chi-clickhouse-cluster-replicated-2-0

means that your Kubernetes service names are not being resolved inside your clickhouse-pod container.

After that, your container is restarted because its health probes fail:

Warning Unhealthy 6m24s (x588 over 66m) kubelet Readiness probe failed: Get "http://10.233.98.78:8123/ping": dial tcp 10.233.98.78:8123: connect: connection refused

Warning BackOff 75s (x148 over 52m) kubelet Back-off restarting failed container
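One way to confirm the DNS problem directly is to try resolving the per-host names from inside the pod. The sketch below uses the sidecar container, since it stays running while clickhouse-pod crash-loops. Pod, namespace, and container names are taken from the `kubectl describe pod` output in this thread; the `check_dns` helper is hypothetical, and it assumes `nslookup` is available in the busybox image.

```shell
#!/bin/sh
# Hypothetical check: resolve per-host service names from inside the
# clickhouse-log sidecar of the first pod.
ns=clickhouse
pod=chi-clickhouse-cluster-replicated-0-0-0
hosts="chi-clickhouse-cluster-replicated-0-0 chi-clickhouse-cluster-replicated-2-0"

check_dns() {
  for h in $hosts; do
    if kubectl exec -n "$ns" "$pod" -c clickhouse-log -- nslookup "$h" >/dev/null 2>&1; then
      echo "$h resolves"
    else
      echo "$h does NOT resolve"
    fi
  done
}

# Only run when kubectl is installed on this machine.
if command -v kubectl >/dev/null 2>&1; then
  check_dns
fi
```

A name that does not resolve here usually points at a missing Service rather than at ClickHouse itself.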

Please share the output of the following command:

kubectl get svc -n clickhouse

@Slach [root@k8s-node5-230 ~]# kubectl get svc -n clickhouse

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

clickhouse-clickhouse-cluster LoadBalancer 10.233.49.55 <pending> 8123:32471/TCP,9000:30226/TCP 130m

clickhouse-operator-metrics ClusterIP 10.233.52.7 <none> 8888/TCP 2d19h

This looks strange.

None of the services related to replicas and shards were created.

I expect six services of type ClusterIP:

chi-clickhouse-cluster-replicated-0-0

chi-clickhouse-cluster-replicated-0-1

chi-clickhouse-cluster-replicated-1-0

chi-clickhouse-cluster-replicated-1-1

chi-clickhouse-cluster-replicated-2-0

chi-clickhouse-cluster-replicated-2-1
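The expected names follow from shardsCount: 3 and replicasCount: 2 in the manifest. A minimal sketch that enumerates them, assuming the chi-<chi name>-<cluster name>-<shard>-<replica> pattern seen in the fqdns list earlier in this thread; the `expected_services` helper is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the per-host Service names expected for a CHI,
# one per shard/replica pair, using the naming pattern seen in this thread.
expected_services() {
  chi=$1; cluster=$2; shards=$3; replicas=$4
  s=0
  while [ "$s" -lt "$shards" ]; do
    r=0
    while [ "$r" -lt "$replicas" ]; do
      echo "chi-${chi}-${cluster}-${s}-${r}"
      r=$((r + 1))
    done
    s=$((s + 1))
  done
}

expected_services clickhouse-cluster replicated 3 2
```

Comparing this list with the NAME column of `kubectl get svc -n clickhouse` shows which per-host services are missing.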

I don't see any serviceTemplate in your CHI manifest

Could you share the output of

kubectl get chit --all-namespaces

How exactly did you install clickhouse-operator?

kubectl get deploy --all-namespaces | grep clickhouse

@Slach

kubectl get chit --all-namespaces